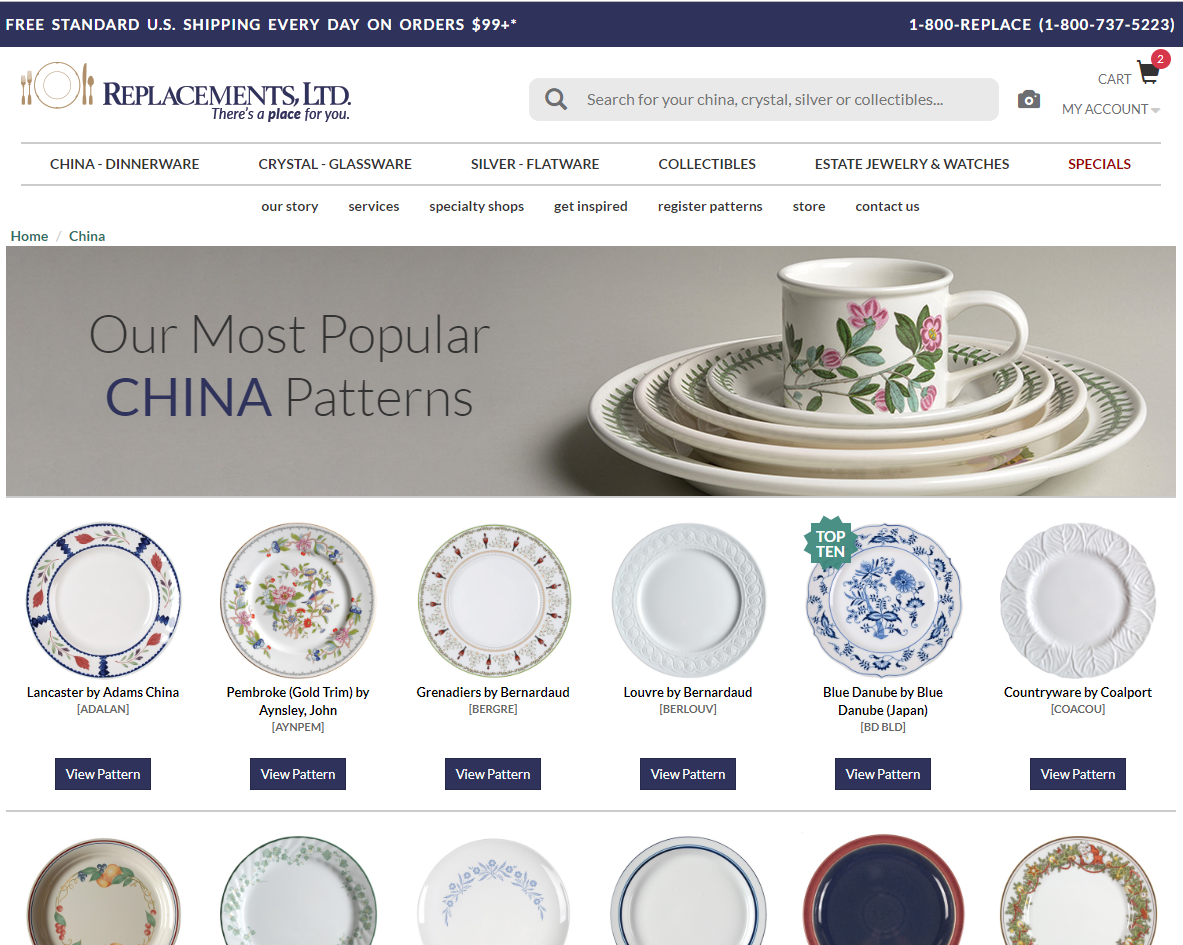

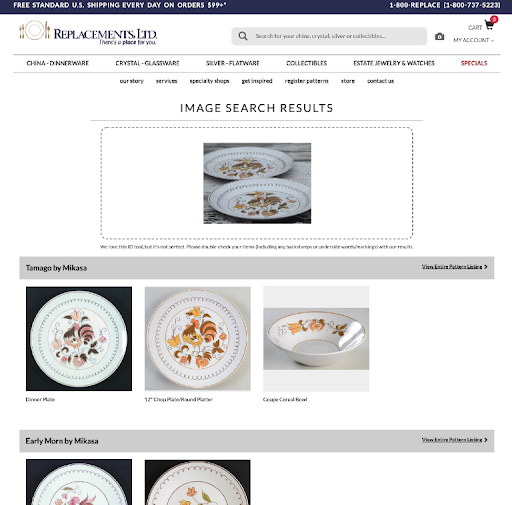

A visual search for dinnerware patterns with Replacements.com

Replacements, Ltd. is the world's largest retailer of tableware, silver and estate jewelry, carrying both patterns still available from manufacturers and discontinued ones. Customers looking to expand their collections, along with those who have misplaced or broken pieces, use Replacements.com’s vast catalog to track down specific items.

The challenge of matching a pattern

Finding a matching plate or mug is a challenging process for the customer. Often, they can’t remember the name of their pattern or the company that produced it. In these cases, the customer must resort to a keyword search and faceted navigation on the site, which may produce thousands of similar results from the company’s massive inventory of more than 11 million items.

To assist the customer in the daunting task of searching the huge catalog, Replacements.com offers a pattern identification service that identifies the pattern from customer-submitted photographs. A team of experienced curators, trained to identify dinnerware brands and patterns, can complete this task in 3 to 5 days. The retailer was looking for opportunities to automate this identification process and enable self-service for its customers.

Grid Dynamics engaged with Replacements.com to design and train a set of deep learning models, create a service infrastructure in Google Cloud Platform and develop a set of visual search APIs capable of matching patterns based on a photo uploaded by a customer.

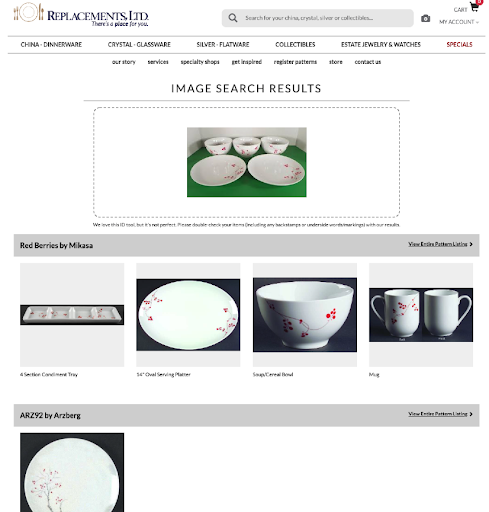

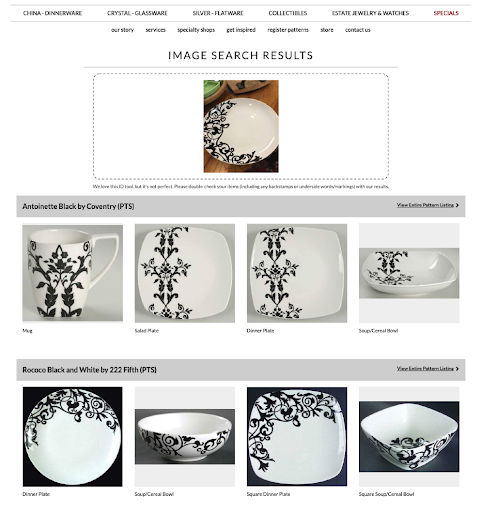

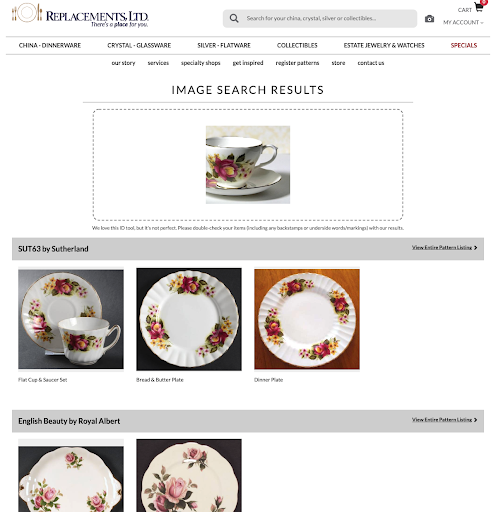

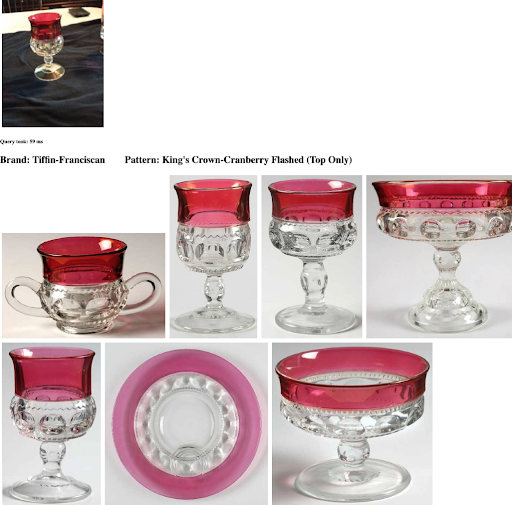

Here are some samples of the visual search results which speak for themselves:

Blair Friday, CIO of Replacements, shares the same sentiment. “We are looking forward to Replacements customers appreciating the convenience and accuracy of visual search. We’re already seeing success in our dinnerware identification and are now researching the possibility of extending visual search capabilities to additional product categories, such as flatware and glassware.”

Visual search under the hood

At the core of the solution is a deep learning model trained to recognize dinnerware pattern similarities.

Replacements.com’s dataset contained several million SKUs with pattern tags, as well as tens of thousands of customer-submitted images that curators had manually associated with the correct SKUs and patterns. The data was noisy: it included broken images and identical images shared between different patterns. These anomalies were removed during data preprocessing.
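The cleanup step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual preprocessing code: the `(image_bytes, pattern_id)` record layout, the use of an empty payload to stand in for a broken image, and the `clean_catalog` name are all assumptions made for the example.

```python
import hashlib
from collections import defaultdict


def clean_catalog(records):
    """Drop broken images and images whose bytes appear under several patterns.

    `records` is a list of (image_bytes, pattern_id) pairs. This layout is
    hypothetical and only serves the illustration.
    """
    # First pass: collect the set of patterns each distinct image is tagged with.
    patterns_by_digest = defaultdict(set)
    for image_bytes, pattern_id in records:
        if image_bytes:  # an empty payload stands in for a broken image
            digest = hashlib.sha256(image_bytes).hexdigest()
            patterns_by_digest[digest].add(pattern_id)

    # Second pass: keep only readable images with an unambiguous pattern.
    cleaned = []
    for image_bytes, pattern_id in records:
        if not image_bytes:
            continue  # broken image
        digest = hashlib.sha256(image_bytes).hexdigest()
        if len(patterns_by_digest[digest]) > 1:
            continue  # identical image shared between different patterns
        cleaned.append((image_bytes, pattern_id))
    return cleaned


records = [
    (b"plate-a", "pattern-1"),
    (b"plate-b", "pattern-2"),
    (b"shared", "pattern-1"),
    (b"shared", "pattern-3"),  # identical bytes under a second pattern
    (b"", "pattern-2"),        # broken image
]
print(clean_catalog(records))
```

Hashing raw bytes only catches exact duplicates; near-duplicates (resized or re-encoded copies) would need a perceptual comparison, which is outside this sketch.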

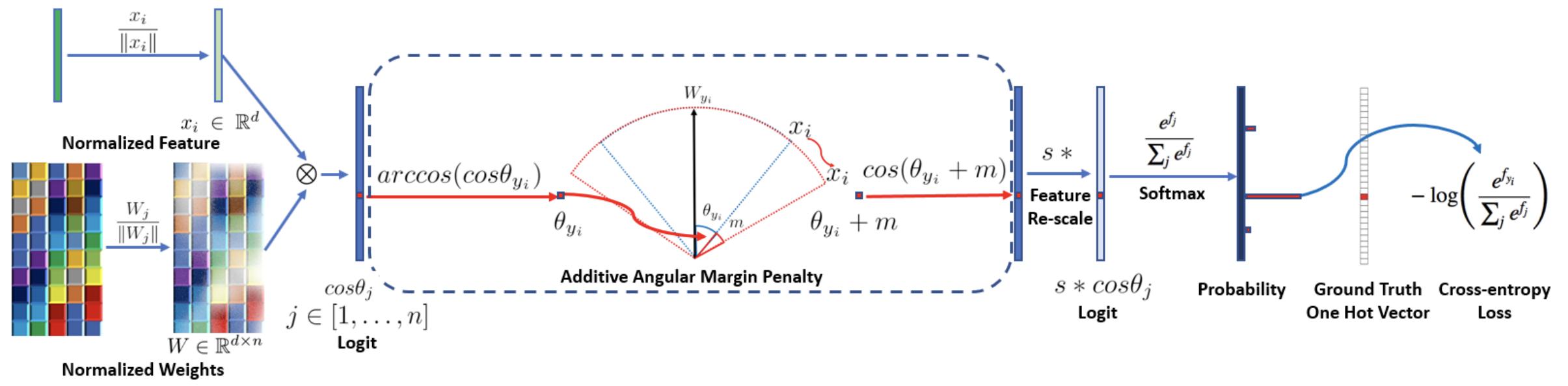

We decided to focus model training on artistic pattern recognition, deliberately disregarding product shape and type. We framed this as an image classification problem in which each pattern is a separate class.

Recognizing hundreds of thousands of different artistic patterns with just a handful of products per pattern is similar to facial recognition: the model must differentiate across a very large number of small classes. We based our model on the state-of-the-art face recognition model ArcFace. This model introduces an Additive Angular Margin (ArcFace) loss, used to supervise the training of a deep convolutional neural network (DCNN). The ArcFace loss pushes DCNN features onto a hypersphere where the vector direction discriminates between classes, and the angular margin ensures good class separability.
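For reference, the loss can be written as it is defined in the ArcFace paper. The notation below follows that paper rather than this project's code, and the scale and margin values used here were not reported:

$$
L = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{e^{s\cos(\theta_{y_i}+m)}}{e^{s\cos(\theta_{y_i}+m)}+\sum_{j=1,\,j\neq y_i}^{n}e^{s\cos\theta_j}}
$$

Here $N$ is the batch size, $n$ the number of classes (patterns), $y_i$ the true class of sample $i$, $\theta_j$ the angle between the L2-normalized feature vector of sample $i$ and the L2-normalized weight vector of class $j$, $s$ the feature scale and $m$ the additive angular margin. With $m = 0$ this reduces to a softmax over scaled cosine similarities; a positive $m$ makes the target class harder to satisfy, which pulls same-class features closer together on the hypersphere.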

Training yields high-quality feature vectors that represent the pattern of a dinnerware piece. Nearest neighbor search and other downstream tasks can use these vectors directly.
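As a rough illustration of how such vectors support nearest neighbor search, the sketch below ranks catalog patterns by cosine similarity to a query vector. It is a brute-force toy with 3-dimensional vectors; the `top_k` helper and the pattern ids are hypothetical, and a production index would use a dedicated search engine instead.

```python
import heapq
import math


def l2_normalize(vector):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def top_k(query, index, k=10):
    """Return the k (pattern_id, cosine_similarity) pairs closest to the query."""
    q = l2_normalize(query)
    scored = (
        (pattern_id, sum(a * b for a, b in zip(q, l2_normalize(vec))))
        for pattern_id, vec in index.items()
    )
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Toy index: pattern id -> feature vector (real vectors would come from the model).
index = {
    "pattern-a": [1.0, 0.0, 0.0],
    "pattern-b": [0.0, 1.0, 0.0],
    "pattern-c": [0.9, 0.1, 0.0],
}
results = top_k([1.0, 0.02, 0.0], index, k=2)
print([pattern_id for pattern_id, _ in results])
```

Cosine similarity is a natural choice here because the ArcFace loss separates classes by vector direction, not magnitude.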

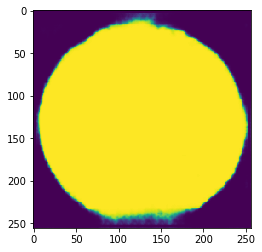

To improve the quality of the model, we employed several techniques, most of which revolved around data augmentation. Beyond common distortions and rotations, we trained a separate segmentation model based on the U-Net architecture and used it to augment images with a variety of backgrounds, which noticeably improved model quality.

Additionally, many of the images in the dataset were partially obscured by barcode labels. To account for this, we applied a dedicated augmentation that artificially overlays barcodes on existing images.
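Both augmentations can be sketched in a few lines. The example below is a simplified illustration under several assumptions: images are small grayscale grids stored as nested lists (a real pipeline would use an image or tensor library), the foreground mask is given directly rather than predicted by a segmentation model, and the function names are hypothetical.

```python
import random


def replace_background(image, mask, background):
    """Keep pixels where mask is 1 (the dish) and take the rest from `background`."""
    return [
        [img_px if m else bg_px for img_px, m, bg_px in zip(img_row, mask_row, bg_row)]
        for img_row, mask_row, bg_row in zip(image, mask, background)
    ]


def overlay_barcode(image, top, left, height, width, rng):
    """Paste a synthetic barcode made of random black (0) and white (255) bars."""
    bars = [rng.choice((0, 255)) for _ in range(width)]
    out = [row[:] for row in image]  # copy so the input image stays untouched
    for r in range(top, top + height):
        out[r][left:left + width] = bars
    return out


image = [[100] * 4 for _ in range(4)]       # stand-in for a product photo
background = [[7] * 4 for _ in range(4)]    # stand-in for a new background
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]  # 1 marks dish pixels; in practice this would be the segmentation output

composed = replace_background(image, mask, background)
augmented = overlay_barcode(composed, top=0, left=0, height=1, width=2,
                            rng=random.Random(0))
print(composed)
print(augmented)
```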

For model evaluation, we used the Accuracy@1, Accuracy@10 and Accuracy@100 metrics, because having the correct pattern within the top 10 or top 100 results correlates well with the customer's goal of identifying the correct pattern at a glance or after minimal scrolling.
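Accuracy@k is the fraction of queries for which the correct pattern appears among the top k results. A minimal sketch, with made-up pattern ids and a hypothetical `accuracy_at_k` helper:

```python
def accuracy_at_k(ranked_predictions, true_labels, k):
    """Fraction of queries whose true pattern appears among the top-k results."""
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)


# One ranked result list per query image, best match first.
ranked_predictions = [
    ["p1", "p7", "p3"],  # correct pattern at rank 1
    ["p4", "p2", "p9"],  # correct pattern at rank 2
    ["p5", "p6", "p8"],  # correct pattern not retrieved
    ["p3", "p1", "p4"],  # correct pattern at rank 3
]
true_labels = ["p1", "p2", "p0", "p4"]

for k in (1, 2, 3):
    print(k, accuracy_at_k(ranked_predictions, true_labels, k))
```

By construction the metric can only grow with k, so Accuracy@1 is the strictest of the three reported values.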

All models are implemented in the PyTorch deep learning framework and trained on the Google ML Engine platform with a Tesla V100 GPU until the metrics stop improving, which typically happens within 24 hours of training.

The DCNN model is based on the ResNeXt architecture (SE-ResNeXt-50 (32×4d)) and is pre-trained on ImageNet data. During the first epoch, the model is trained with the DCNN layers frozen; in subsequent epochs, the DCNN layers are unfrozen and fine-tuned. Image vectors are taken from the last fully connected layer of the DCNN and have 128 dimensions.

Visual search put to production

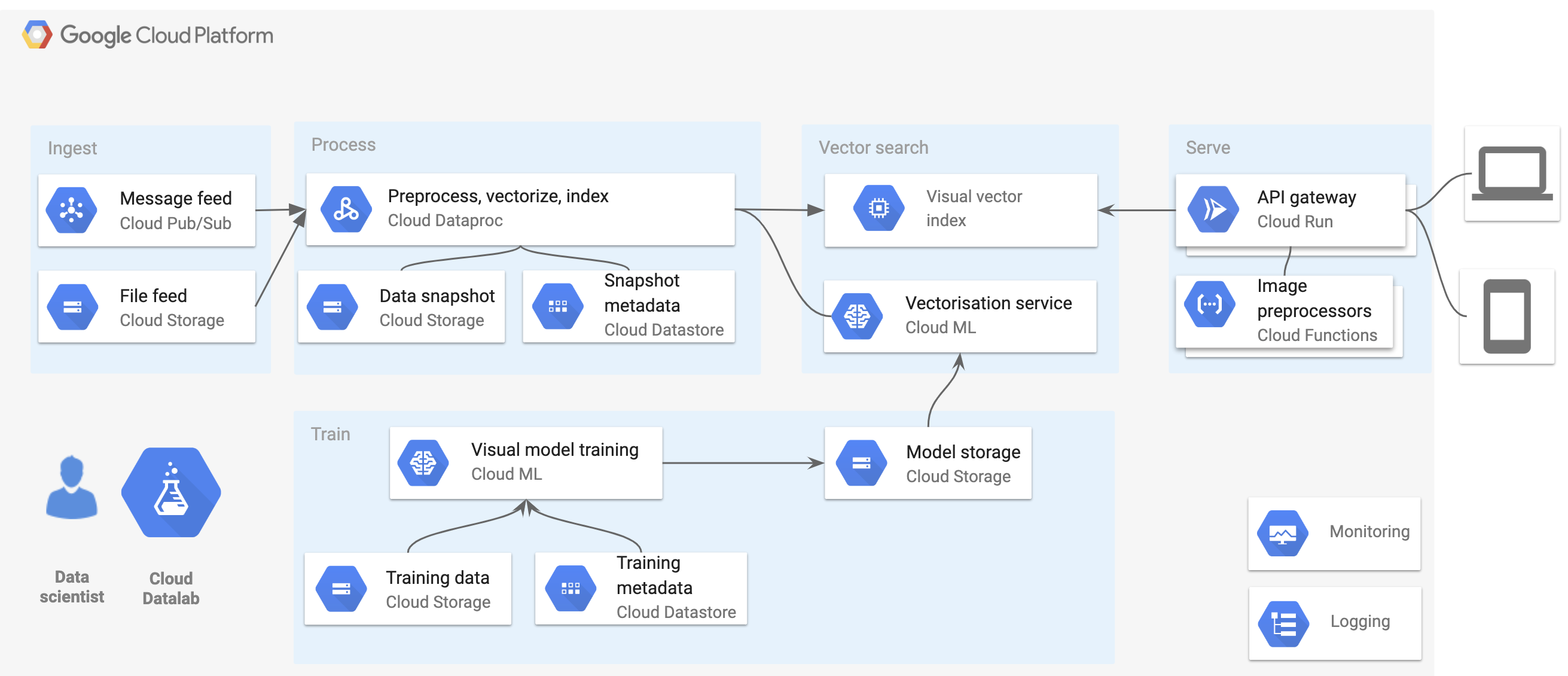

The visual search solution is deployed on Google Cloud Platform and makes extensive use of the Google Cloud services ecosystem. Infrastructure deployment is automated with Terraform.

Raw catalog data is ingested as a data file feed through Cloud Storage, with messaging handled by Pub/Sub. The data pipeline, which includes preprocessing, data cleansing and vectorization jobs, is implemented using Dataproc. First, raw data is converted into a versioned data snapshot, and the associated metadata is stored in Datastore. Then, all new images in the data snapshot are converted into semantic vectors using a vectorization service deployed on the ML Engine platform. Finally, the new vectors are added to the vector index and become available to the visual search API.
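The incremental part of this flow, vectorizing only images that are new in a snapshot, can be sketched as below. This is a simplified in-memory illustration: the snapshot layout, `index_new_images`, and `fake_vectorize` are hypothetical stand-ins and do not reflect the actual Dataproc jobs or service calls.

```python
def index_new_images(snapshot, vector_index, vectorize):
    """Vectorize only images missing from the index and return their ids."""
    new_ids = [
        image_id for image_id in snapshot["images"] if image_id not in vector_index
    ]
    for image_id in new_ids:
        vector_index[image_id] = vectorize(snapshot["images"][image_id])
    return new_ids


def fake_vectorize(image_bytes):
    """Placeholder for a call to the vectorization service (assumption)."""
    return [float(len(image_bytes))]


vector_index = {"img-1": [0.1]}  # already indexed by an earlier snapshot
snapshot = {"version": 2, "images": {"img-1": b"old", "img-2": b"new!"}}

added = index_new_images(snapshot, vector_index, fake_vectorize)
print(added, vector_index)
```

Skipping already-indexed images keeps routine catalog updates cheap; a full re-vectorization is only needed when the model itself changes.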

The vector index is implemented on the Solr search engine with a custom plugin that enables vector search over image vectors while still allowing keyword search by other attributes, such as brand, when the user provides them.
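The idea of combining an attribute filter with vector ranking can be illustrated without Solr. The sketch below is a brute-force stand-in and does not use or imitate the custom plugin; the document fields, brand names and `hybrid_search` function are hypothetical, and the vectors are assumed to be unit length so that a dot product equals cosine similarity.

```python
def hybrid_search(query_vector, documents, brand=None, k=10):
    """Rank documents by dot product, optionally restricted to a single brand."""
    # Attribute filter first: it shrinks the candidate set before scoring.
    candidates = [d for d in documents if brand is None or d["brand"] == brand]
    candidates.sort(
        key=lambda d: sum(q * v for q, v in zip(query_vector, d["vector"])),
        reverse=True,
    )
    return [d["sku"] for d in candidates[:k]]


documents = [
    {"sku": "A1", "brand": "Acme", "vector": [1.0, 0.0]},
    {"sku": "B1", "brand": "Bravo", "vector": [0.8, 0.6]},
    {"sku": "A2", "brand": "Acme", "vector": [0.0, 1.0]},
]
query = [0.6, 0.8]

print(hybrid_search(query, documents))                # all brands
print(hybrid_search(query, documents, brand="Acme"))  # brand supplied by the user
```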

The visual model was implemented in PyTorch and trained on ML Engine with GPU support. Newly trained model versions are deployed to a vectorization service based on AI Platform Prediction and used for subsequent reindexing of the catalog data.

Conclusion

The visual search solution, already used by the client, was put into production in mid-August. The immediate impact is that a core service that was advertised to take several days now takes a few seconds. The client looks forward to automating this process and delighting its customers with this new feature.

Matt Kleweno, Software Development Manager at Replacements.com, says, “Because of the large number of SKUs and the similarities between the characteristics, we’re excited about the potential capabilities visual identification can bring to our customers and our company.”

This case study is just another illustration of how deep learning can improve your business, automate processes and deliver cool new features for customers.