AR-based Indoor Navigation

Today, many areas of our lives are moving online. Despite this trend, there are still plenty of times when we need to move around in physical space to take particular actions or achieve certain goals. In most cases, having an address, a unique name, or a set of coordinates makes this task fairly straightforward. It is further simplified by the many applications on the market that guide you to your destination with step-by-step instructions, such as GPS directions on your smartphone.

However, these types of solutions have their limitations. It is well known that GPS sensors don’t always provide accurate location information, especially inside buildings, which block a clear line of sight to the satellites whose signals are used to calculate your position. GPS satellites don’t transmit signals strong enough to reach indoor users, and signals that enter buildings through windows are often unreliable and can produce location errors of up to hundreds of meters. This is why an entirely different technology is required to provide accurate directions to indoor locations.

AR indoor navigation, or augmented reality indoor navigation, is a solution that provides turn-by-turn directions to locations or objects where GPS and other technologies cannot work accurately.

Where to use AR indoor navigation

AR indoor navigation can be useful in various environments and applications, including:

- Office buildings: Indoor navigation in smart offices makes it easy to find a conference room, restroom, or desk. Beyond finding a conference room, employees can also see which conference rooms are empty and available. In addition to people and places, assets such as printers, tools, or test equipment can be located.

- Shopping malls and large stores: The obvious use of indoor navigation in malls is to get turn-by-turn directions to a particular store. In large stores it can lead you to specific products. Collecting useful marketing data from indoor navigation systems is also possible. For example, it can measure how much time people spend in a particular store or how often people need directions to specific products or store areas. With this information you can make popular items or stores easier to find, or even reconfigure the store layout based on user requests or behaviors.

- Hospitals: Hospitals often have many departments and sections spread across multiple floors or even multiple buildings. Because people tend to visit hospitals infrequently, indoor navigation can be extremely helpful when they are looking for a location for the first time.

- Airports: Today’s international airports are enormous, sprawling structures that can be miles across. When people have limited time to catch a flight, turn-by-turn navigation can help them reach their gates far more quickly and efficiently.

- University campuses: Various virtual objects can be attached to specific positions in campus buildings. This means that, in addition to helping people get where they want to go, indoor navigation can provide useful information to building managers and facilities coordinators.

What is augmented reality?

Augmented reality (AR) is a technology that shows the real-world environment with digital information overlaid onto it. AR allows 3D models of any kind to be added as objects in the real world. Users can then interact with them in real time: move them, scale them, or rotate them.

Frameworks

The ARKit (iOS) and ARCore (Android) frameworks can be used for visual recognition of various objects such as text, images, or other 2D or 3D shapes. These frameworks report the position and rotation of each detected object and provide useful information about detected vertical and horizontal planes.

The SceneKit (iOS) or Sceneform (Android) frameworks are designed to build and render 3D objects on the camera view. Using these frameworks it’s possible to show any visual information related to a specific location inside a building.

There are some restrictions on using ARCore for indoor navigation, as it only works on supported devices. A supported device must have a high-quality camera, motion sensors, and a hardware design that lets ARCore perform as expected. It also needs a CPU powerful enough to handle the real-time calculations well.

Indoor navigation solutions overview

There are several approaches to implementing indoor navigation. We investigated the main ones and review the advantages and disadvantages of each below:

Beacon and Wi-Fi RTT based indoor navigation

Beacon technology is a current buzzword in indoor navigation. The best-known example is Gatwick Airport in the UK, where 2,000 battery-powered Bluetooth Low Energy beacons have been installed, reportedly giving +/-3 metre accuracy.

Disadvantages:

According to Apple’s documentation, beacons provide only an approximate distance value. Determining our location requires at least three nearby beacons and trilateration (computing a position from distances), so the errors in the individual distance estimates combine and the position error becomes even greater. In our assessment, this solution does not provide acceptable accuracy.
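To see how distance errors propagate, here is a minimal 2D trilateration sketch. It assumes three beacons at known, non-collinear positions and one distance estimate per beacon; subtracting the first circle equation from the other two leaves a linear 2×2 system. The function name and sample coordinates are illustrative and not taken from any beacon SDK.

```python
import math


def trilaterate(b1, b2, b3, d1, d2, d3):
    """Estimate (x, y) from three beacon positions and measured distances.

    Each beacon i defines a circle (x - xi)^2 + (y - yi)^2 = di^2.
    Subtracting circle 1 from circles 2 and 3 cancels the quadratic
    terms, leaving a linear system a11*x + a12*y = c1, a21*x + a22*y = c2.
    """
    (x1, y1), (x2, y2), (x3, y3) = b1, b2, b3
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    c1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    c2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons must not be collinear")
    # Cramer's rule for the 2x2 system
    x = (c1 * a22 - a12 * c2) / det
    y = (a11 * c2 - a21 * c1) / det
    return x, y


if __name__ == "__main__":
    beacons = ((0.0, 0.0), (10.0, 0.0), (0.0, 10.0))
    exact = (5.0, math.sqrt(65), math.sqrt(45))   # true position (3, 4)
    noisy = (6.0, math.sqrt(65), math.sqrt(45))   # first distance off by 1 m
    print(trilaterate(*beacons, *exact))
    print(trilaterate(*beacons, *noisy))
```

With beacons at (0, 0), (10, 0) and (0, 10) and a true position of (3, 4), exact distances return (3, 4); overestimating only the first distance by 1 m moves the estimate to roughly (3.55, 4.55), about 0.78 m away.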

When you consider other factors such as cost ($10-20 per item), battery replacement (once every 1-2 years) and the working range (10-100 metres), it’s clear that the use of beacons for indoor navigation is effective only under certain conditions.
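For a sense of scale, here is the arithmetic for a deployment the size of Gatwick’s (2,000 beacons), using the unit cost and battery-life ranges above. This is an illustrative estimate, not a reported figure:

$$
\begin{aligned}
\text{hardware cost} &= 2000 \times (\$10 \text{ to } \$20) = \$20{,}000 \text{ to } \$40{,}000 \\
\text{battery replacements per year} &= \frac{2000}{2} \text{ to } \frac{2000}{1} = 1{,}000 \text{ to } 2{,}000
\end{aligned}
$$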

Built-in mobile sensors

This solution relies on the device’s built-in sensors (the magnetometer) to estimate the user’s position by interpreting the magnetic field in the immediate area around the user and comparing it with a magnetic map of the building.

Disadvantages:

The magnetic field can change over time due to external factors, so you need to repeatedly check and update the magnetic map of the building.
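One common way to use such a magnetic map is fingerprint matching: the map stores the field vector measured at each surveyed position, and a live magnetometer reading is matched to the closest stored fingerprint. The nearest-neighbour sketch below is a simplified illustration with hypothetical survey data. It assumes readings are already expressed in a fixed building frame, whereas real systems must also handle device orientation and usually match sequences of readings. It also shows why the map needs updating: if the field changes, the stored fingerprints stop matching.

```python
import math

# Hypothetical magnetic map: surveyed (x, y) position in metres ->
# field vector (Bx, By, Bz) in microtesla recorded at that position.
SURVEY = {
    (0.0, 0.0): (22.0, -5.0, 40.0),
    (2.0, 0.0): (30.0, -2.0, 38.0),
    (4.0, 0.0): (18.0, 4.0, 45.0),
}


def locate(reading, magnetic_map):
    """Return the surveyed position whose stored field vector is closest
    (Euclidean distance) to the current magnetometer reading."""
    return min(magnetic_map,
               key=lambda pos: math.dist(reading, magnetic_map[pos]))


if __name__ == "__main__":
    print(locate((29.0, -2.5, 38.5), SURVEY))  # nearest fingerprint: (2.0, 0.0)
```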

AR Cloud with visual world saving

The next two solutions are based on augmented reality technology. Google’s cloud solution, Cloud Anchors, allows virtual objects to be added to an AR scene. Multiple users can then view and interact with these objects simultaneously from different positions in a shared physical space. The anchor around which the shared world is built is saved to the cloud; here, “the world” means the visual data captured from the camera view.

Disadvantages:

- At the time of writing, Cloud Anchors can be resolved for no longer than 24 hours after they are hosted. Google is developing persistent Cloud Anchors, which can be resolved over much longer periods.

- This solution only works with a stable network connection.

- Environments that look identical (that is, are not visually unique) can cause inaccurate results.

Visual Markers – AR-based indoor navigation

This solution is based on creating visual markers (also known as AR markers, or ARReferenceImage in ARKit) and then detecting their position. All you need is the device’s camera. Because you have access to data about the world within the camera’s field of view, you can process this data and apply any logic you want. That’s why we ultimately selected this method.

A visual marker is an image recognized by Apple’s ARKit, Google’s ARCore, and other AR SDKs. Visual markers tell the app where to place AR content. If we place a visual marker somewhere in space, for example on a floor or wall, then when scanning we can not only see the marker represented in AR but also get its coordinates in the real world.

So let’s take a more detailed look at how this approach is used in the GD Navigator App and how it can be applied to indoor navigation problems.

GD Navigator App

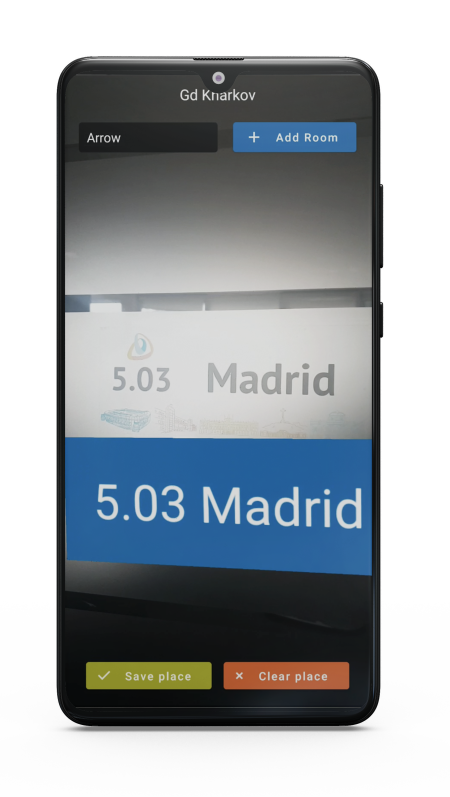

We selected the Visual Markers solution for our application primarily because this approach doesn’t require GPS, additional hardware, or a permanent network connection. Data is downloaded to the device on demand, for example when it changes on the server side. Once the data is downloaded and cached, a route represented by visual markers can be built on the device without an internet connection. The app has two modes: Admin and User. In Admin mode, an admin manually creates routes to different destinations. In User mode, users choose their desired destination, define their current location inside the building, and then see the route represented by arrows on the screen.

Admin Mode

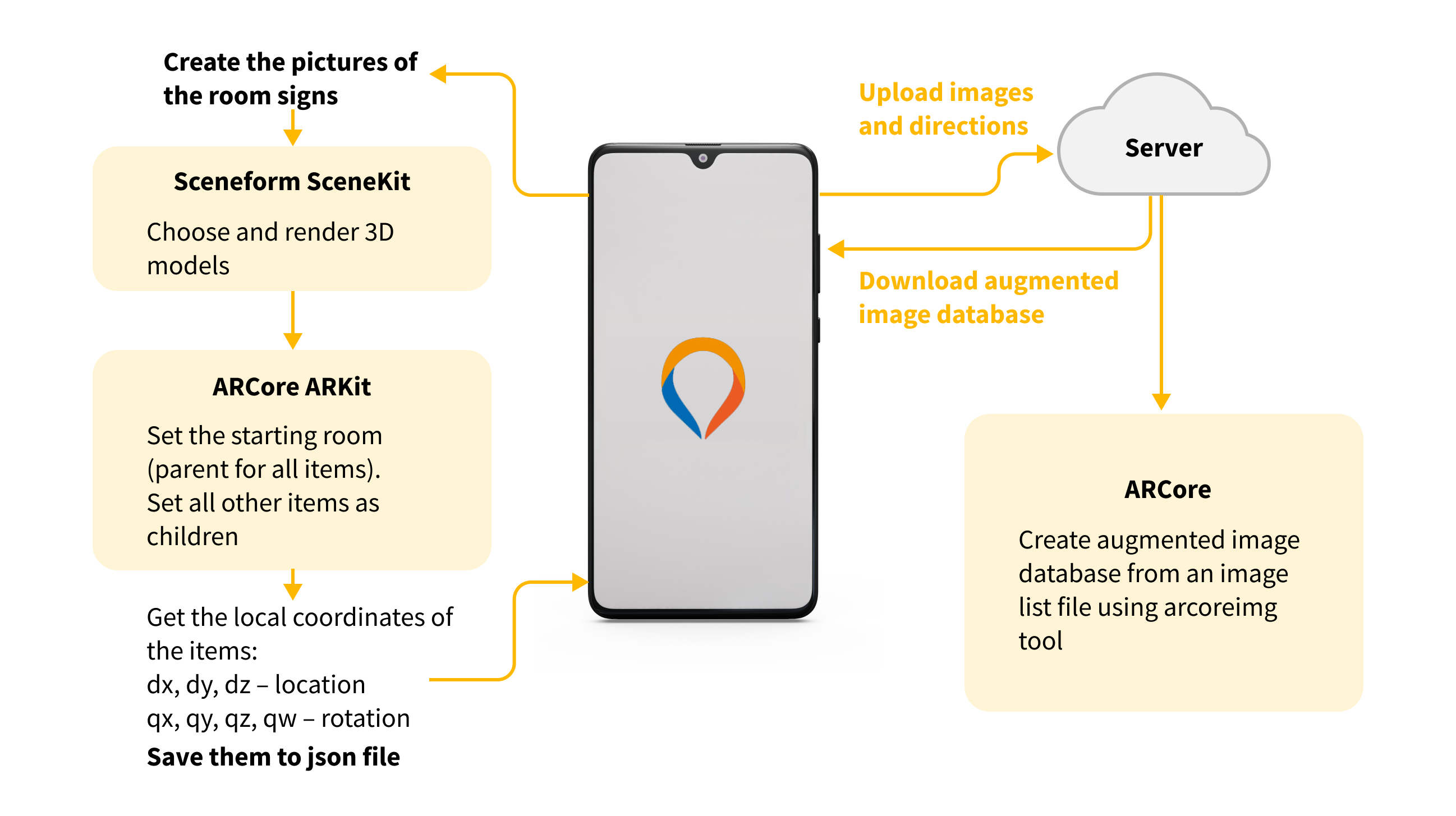

1. Create visual markers

In the first step, an admin creates a list of images that can be recognized by ARCore/ARKit. Images can be selected from a gallery or captured from the camera.

Images are recognized as visual markers once they are in the Augmented Image Database format. All images are uploaded to the server, where they are converted into an Augmented Image Database using the arcoreimg tool. Each database can store information for up to 1,000 reference images. There’s no limit to the number of databases, but only one database can be active at any time. The database is then downloaded to the device.
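The server-side conversion could be scripted along these lines. This sketch checks the 1,000-image limit and assembles an `arcoreimg build-db` command using the tool’s `--input_images_directory` and `--output_db_path` options. The accepted file extensions, the function names, and the directory layout are assumptions, and `build_db` requires arcoreimg to be installed and on the PATH.

```python
import subprocess
import tempfile
from pathlib import Path

MAX_IMAGES_PER_DB = 1000  # ARCore: at most 1,000 reference images per database
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}  # assumption: formats we accept


def build_db_command(images_dir, output_db, tool="arcoreimg"):
    """Return the argv for `arcoreimg build-db` after checking the image count."""
    images = [p for p in Path(images_dir).iterdir()
              if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES]
    if not images:
        raise ValueError("no reference images found")
    if len(images) > MAX_IMAGES_PER_DB:
        raise ValueError(
            f"{len(images)} images exceed the per-database limit of {MAX_IMAGES_PER_DB}")
    return [tool, "build-db",
            f"--input_images_directory={images_dir}",
            f"--output_db_path={output_db}"]


def build_db(images_dir, output_db):
    """Run the conversion; fails if arcoreimg is missing or returns an error."""
    subprocess.run(build_db_command(images_dir, output_db), check=True)


if __name__ == "__main__":
    # Demo with placeholder files: the command is printed, not executed.
    with tempfile.TemporaryDirectory() as d:
        for name in ("room_101.png", "room_102.jpg", "notes.txt"):
            (Path(d) / name).touch()
        print(build_db_command(d, "rooms.imgdb"))
```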

Image requirements:

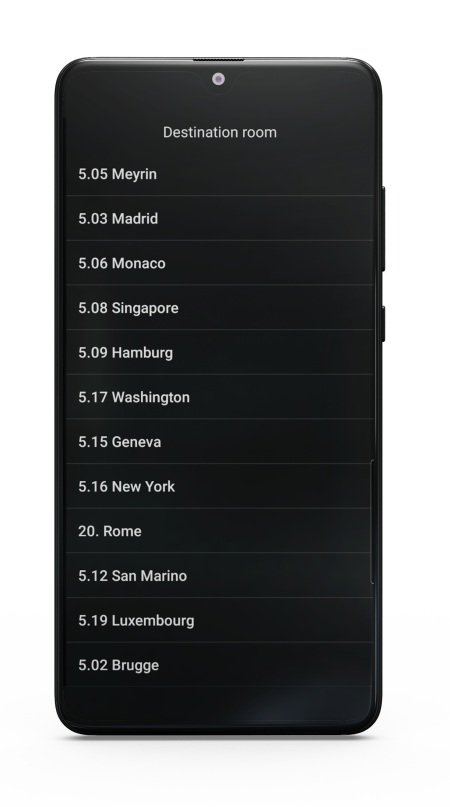

In this GD Navigator example, the images are room signs. Each image has its own unique name that is meaningful to the user. In principle, any object in the real world could serve as a visual marker, provided it meets the requirements above and cannot easily be confused with another object. Once the image database is downloaded, it is stored locally on the device and updated only as needed: for the user, when it has changed on the server; for the admin, every time the admin alters an image or adds new ones. The app uses the image database to show a list of rooms from which the user can choose a destination.
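The “update only when changed” rule could be implemented by comparing a content hash of the cached database with one published by the server. In this sketch, the server hash and the `download` callable stand in for a hypothetical server API; only the standard library is used.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path):
    """Hex SHA-256 digest of a file, or None if the file does not exist."""
    p = Path(path)
    return hashlib.sha256(p.read_bytes()).hexdigest() if p.exists() else None


def refresh_database(cache_path, server_hash, download):
    """Re-download the image database only if the cached copy is missing or
    differs from the version the server reports.

    server_hash: hex SHA-256 published by the (hypothetical) server API.
    download:    callable returning the database bytes from the server.
    Returns True if the local cache was updated.
    """
    if sha256_of(cache_path) == server_hash:
        return False  # cache is current; no network transfer needed
    data = download()
    if hashlib.sha256(data).hexdigest() != server_hash:
        raise ValueError("downloaded database does not match the server hash")
    Path(cache_path).write_bytes(data)
    return True


if __name__ == "__main__":
    payload = b"example database bytes"
    digest = hashlib.sha256(payload).hexdigest()
    with tempfile.TemporaryDirectory() as d:
        cache = Path(d) / "rooms.imgdb"
        print(refresh_database(cache, digest, lambda: payload))  # True: first download
        print(refresh_database(cache, digest, lambda: payload))  # False: already cached
```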

2. Create the routes

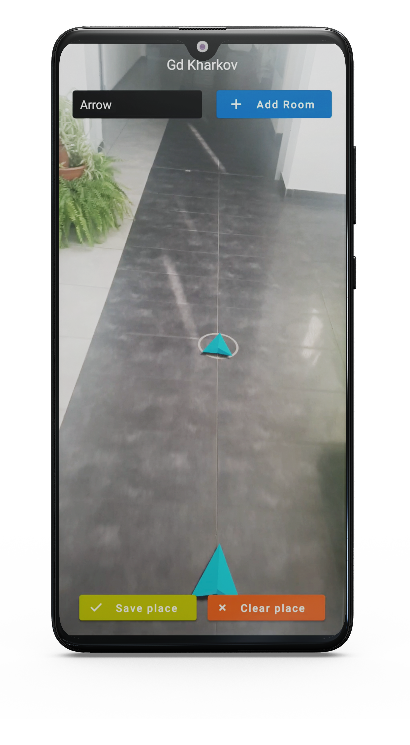

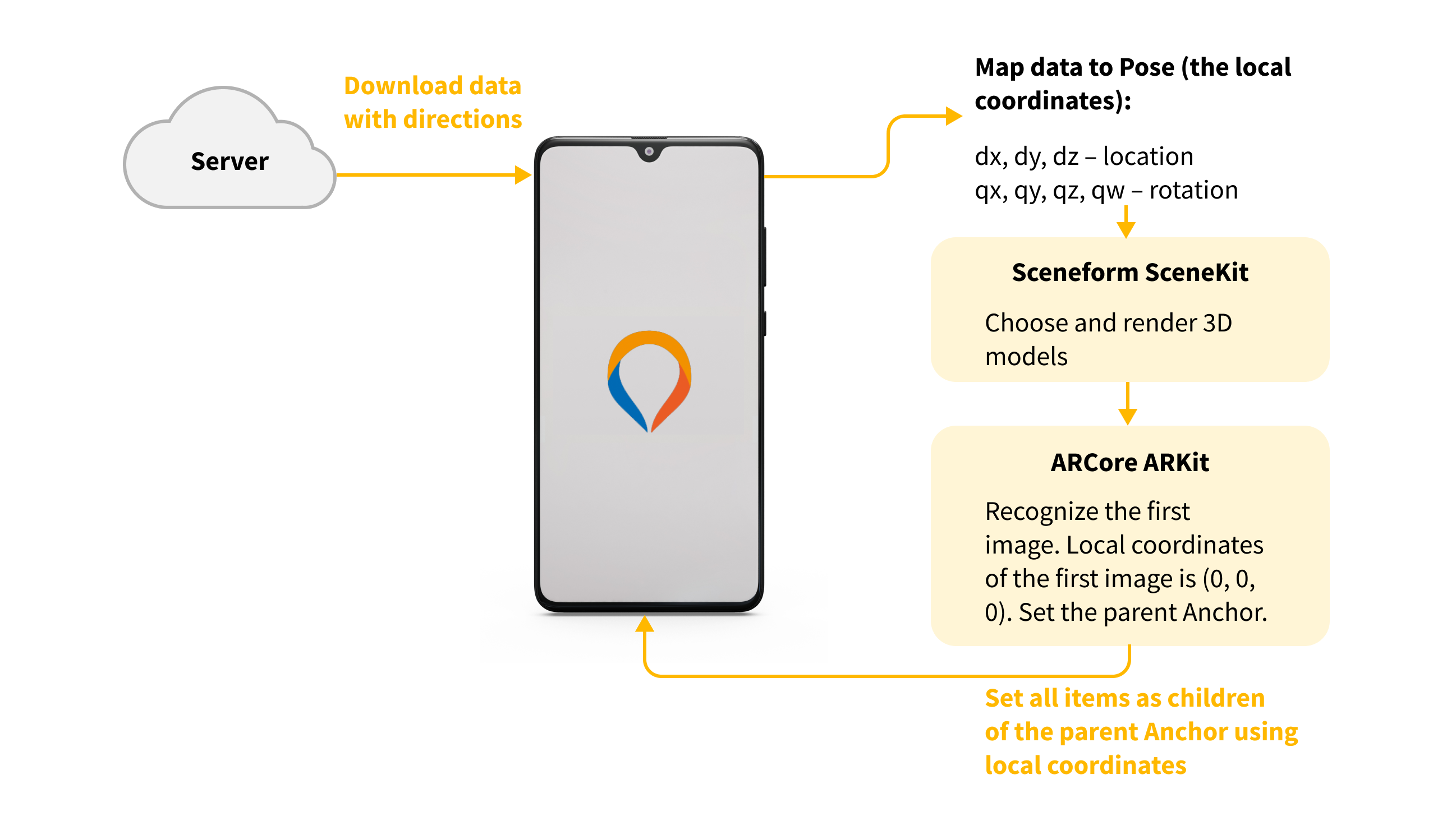

After creating the list of images (visual markers), the admin can use them to create routes between rooms. In our implementation, a route is a set of items of two kinds: Arrow or Room. To create a route, we first need to determine the starting position (the first room) from which the route will be built. The admin sets it by scanning the room sign with the camera. ARCore/ARKit recognizes the sign because the image has already been added to the Augmented Image Database, and it becomes the first anchor the admin uses to build the route. Once the image is recognized, we place a virtual object near the door sign. The framework provides the world position and rotation of the starting room in the AR session’s coordinate system, which is defined by the camera’s initial position (x, y, z: location; x, y, z, w: rotation quaternion).

Defining the starting position is the most important step, since all other route elements (arrows and other rooms) are placed as children of the first element, in its local coordinate system. Adding new items to the route is easy: the admin walks along the route and taps the screen wherever an arrow should appear, and a new virtual object is placed there. It can be any type of rendered 3D model. The center of the door sign is the origin of the local coordinate system (x = 0, y = 0, z = 0). From ARCore/ARKit, we get the local coordinates of each item (dx, dy, dz: location; qx, qy, qz, qw: rotation) and programmatically set references to the previous and next items. This creates a chain of items, in other words, a route. All routes created by the admin are uploaded to the server and saved. The admin can create many routes within one building or across different buildings.
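The route model described above can be sketched as a doubly linked list of items whose poses are stored in the first room’s local coordinate system. Converting a world-space pose reported by the framework into that local system means applying the inverse of the sign’s pose: subtract the sign’s position, then rotate by the conjugate of its unit quaternion. The class and field names are hypothetical, and quaternions are assumed to be unit length in (x, y, z, w) order, as in the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (x, y, z, w), assumed unit length


def quat_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b for quaternions stored as (x, y, z, w)."""
    ax, ay, az, aw = a
    bx, by, bz, bw = b
    return (aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw,
            aw * bw - ax * bx - ay * by - az * bz)


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (equivalent to q * v * q^-1)."""
    qx, qy, qz, qw = q
    # t = 2 * cross(q.xyz, v)
    tx = 2 * (qy * v[2] - qz * v[1])
    ty = 2 * (qz * v[0] - qx * v[2])
    tz = 2 * (qx * v[1] - qy * v[0])
    # v' = v + w * t + cross(q.xyz, t)
    return (v[0] + qw * tx + (qy * tz - qz * ty),
            v[1] + qw * ty + (qz * tx - qx * tz),
            v[2] + qw * tz + (qx * ty - qy * tx))


class Kind(Enum):
    ARROW = "arrow"
    ROOM = "room"


@dataclass(eq=False)  # identity equality; avoids recursing through the links
class RouteItem:
    kind: Kind
    position: Vec3  # (dx, dy, dz) in the first room's local frame
    rotation: Quat  # (qx, qy, qz, qw) in the same frame
    name: Optional[str] = None
    prev: Optional["RouteItem"] = field(default=None, repr=False)
    next: Optional["RouteItem"] = field(default=None, repr=False)


class Route:
    """Chain of route items anchored at the first room's sign."""

    def __init__(self, anchor_pos: Vec3, anchor_rot: Quat, room_name: str):
        # World pose of the detected room sign in the admin's session.
        self.anchor_pos = anchor_pos
        self.anchor_inv = (-anchor_rot[0], -anchor_rot[1], -anchor_rot[2], anchor_rot[3])
        # The sign itself is the origin of the local coordinate system.
        self.head = RouteItem(Kind.ROOM, (0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0), room_name)
        self.tail = self.head

    def add(self, kind: Kind, world_pos: Vec3, world_rot: Quat,
            name: Optional[str] = None) -> RouteItem:
        """Append an item given its world pose, storing it in local coordinates."""
        offset = (world_pos[0] - self.anchor_pos[0],
                  world_pos[1] - self.anchor_pos[1],
                  world_pos[2] - self.anchor_pos[2])
        item = RouteItem(kind,
                         quat_rotate(self.anchor_inv, offset),  # undo sign rotation
                         quat_mul(self.anchor_inv, world_rot),
                         name)
        item.prev = self.tail  # link back to the previous item
        self.tail.next = item  # and forward from it
        self.tail = item
        return item
```

For example, with the sign detected at (1, 0, 2) and rotated 90° about the vertical axis, an arrow tapped at world position (1, 0, 3) is stored at local position (-1, 0, 0), up to floating-point rounding.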

User Mode

In this mode, the app must obtain the map at launch before it can proceed. The map is downloaded from the server, or taken from the cache and updated if needed. It is represented by a set of objects, each with the data needed to recognize it, and it also describes how the objects are arranged relative to one another.

The first step for the user is to scan a room sign. Because each sign is unique, we can determine the user’s position on the map once the scan succeeds.

The coordinates of all objects are then recalculated relative to this entry point. The distance and relative position between any two objects remain the same as they were set in Admin mode. For each object on the map, we know its neighbours, which means that we can build a graph. And because we know the coordinates, we can calculate the distance between any two objects and use it as the weight of each edge in the graph.
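Building the weighted graph from the map could look like this. It assumes each object’s neighbour list comes from the admin-created routes and, as a further assumption, that every route can be walked in both directions, so edges are undirected. The function name and input format are illustrative.

```python
import math


def build_graph(positions, neighbours):
    """Build an undirected weighted adjacency map.

    positions:  object id -> (x, y, z) relative to the entry point
    neighbours: object id -> ids of adjacent objects on admin-created routes
    Each edge weight is the Euclidean distance between the two objects.
    """
    graph = {obj: {} for obj in positions}
    for a, adjacent in neighbours.items():
        for b in adjacent:
            w = math.dist(positions[a], positions[b])
            graph[a][b] = w
            graph[b][a] = w  # assumed walkable in both directions
    return graph


if __name__ == "__main__":
    pos = {"Room 101": (0.0, 0.0, 0.0), "Arrow 1": (3.0, 0.0, 0.0),
           "Room 102": (3.0, 0.0, 4.0)}
    print(build_graph(pos, {"Room 101": ["Arrow 1"], "Arrow 1": ["Room 102"]}))
```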

The second and final step for the user is to select the start and finish points. The user can choose any two different objects on the map.

Our task has now been reduced to finding the shortest path in the graph. All edge weights are positive, because the distance between two different objects can’t be negative or zero. This allows us to use Dijkstra’s algorithm, which requires non-negative edge weights, to find the shortest path from the entry point to the destination.
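A compact Dijkstra implementation, using Python’s `heapq` module as the priority queue, might look like this. The graph is assumed to be a dict mapping each object id to `{neighbour id: distance}`; that format and the function name are illustrative choices.

```python
import heapq
import math


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm on a graph with positive edge weights.

    graph: dict mapping each object id to {neighbour id: distance}.
    Returns (total_distance, [start, ..., goal]), or (math.inf, [])
    if the goal cannot be reached.
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue  # stale queue entry
        done.add(node)
        if node == goal:
            break  # the first time the goal is popped, its distance is final
        for nxt, w in graph[node].items():
            nd = d + w
            if nd < dist.get(nxt, math.inf):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if goal not in done:
        return math.inf, []
    # Walk the predecessor links back from the goal.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]
```

Stopping as soon as the destination is popped is safe because, with non-negative weights, no later entry can improve its distance.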

Now all that remains is rendering the result. To convert the path into navigation instructions, we need to add intermediate objects, because an object may not be visible from its neighbour. Our approach is to add an intermediate object every 2 meters along the path and render it as an arrow pointing to the next object.
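The 2-meter rule can be sketched as a walk along the path polyline: arrows are placed at fixed distance intervals, and the leftover distance is carried across corners so the spacing stays even. The function name and the (position, direction) output format are illustrative assumptions.

```python
import math


def place_arrows(path_points, spacing=2.0):
    """Place arrows every `spacing` metres along a polyline.

    path_points: (x, y, z) positions of the objects on the shortest path.
    Returns (position, direction) pairs; direction is the unit vector of
    the segment the arrow lies on, i.e. it points towards the next object.
    """
    arrows = []
    carry = 0.0  # distance walked since the last arrow (or the start)
    for a, b in zip(path_points, path_points[1:]):
        seg = math.dist(a, b)
        if seg == 0:
            continue  # skip duplicate points
        direction = tuple((bi - ai) / seg for ai, bi in zip(a, b))
        t = spacing - carry  # offset of the next arrow within this segment
        while t < seg:
            arrows.append((tuple(ai + di * t for ai, di in zip(a, direction)),
                           direction))
            t += spacing
        # distance from the last arrow placed to the end of this segment
        carry = seg - (t - spacing)
    return arrows
```

For a path from (0, 0, 0) to (5, 0, 0) and then to (5, 0, 3), arrows land at (2, 0, 0) and (4, 0, 0) pointing along +x, and at (5, 0, 1) pointing along +z.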

So, in two steps the user is able to get visual step-by-step navigation between two rooms, which means we have effectively implemented indoor navigation.

Our demo video

We have put together an indoor navigation augmented reality tutorial that demonstrates all of the features described above. Seeing the app work as it would for a regular user really puts into perspective how useful augmented reality apps can be, for example for shoppers. Check out the video and see for yourself!

Difficulties

According to the official documentation, creating an AR experience depends on being able to construct a coordinate system for placing objects in a virtual 3D world that maps to the real-world position and motion of the device. When you run a session configuration, ARKit/ARCore creates a scene coordinate system based on the position and orientation of the device. Any objects you create, or that the AR session detects, are positioned relative to that coordinate system.

This means that each session has its own coordinate system. Yet our requirements call for displaying objects at the same real-world positions regardless of where a session’s coordinate system originates. The position offset between the origins of two sessions can be retrieved by comparing the positions of the same detected object; however, the rotation angle between the axes is not available. In other words, we don’t know how much the direction of the coordinate axes has changed between two AR sessions.

The first approach to this problem is to use the device’s built-in compass and align the axes with it. In this case, the axis rotation stays the same between sessions, so applying the position offset is enough to determine object locations. In practice, however, compass data is imprecise and changes constantly while the device is in use, which makes the whole virtual world visibly drift during the session. Besides, not all devices have a compass. In addition, this type of alignment is not implemented in ARCore by default, and our requirement was to use only camera data. So we needed another approach.

The second approach is to use another coordinate system that remains the same across all sessions. As mentioned above, each object added to the AR session has its own local coordinate system. For an object tied to a fixed physical feature, this local coordinate system stays in the same place relative to the real world in every session. Hence, by choosing a stable object that AR can detect (for example, a unique image on a door), we can use this object’s local coordinate system to place all other objects relative to it. Once the object is detected, its coordinate system is the same in any session. That was our solution.
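Concretely, once the anchor image is detected in a new session, the framework reports its world pose: a position p and a unit quaternion q. Each stored item’s local coordinates l can then be mapped into that session’s world coordinates as p + q l q⁻¹. The sketch below writes the quaternion rotation out by hand, with quaternions in (x, y, z, w) order; the function names are hypothetical, and in a real app the same effect is typically achieved by attaching the item nodes as children of the node placed at the detected image.

```python
Vec3 = tuple[float, float, float]
Quat = tuple[float, float, float, float]  # (x, y, z, w), assumed unit length


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (equivalent to q * v * q^-1)."""
    qx, qy, qz, qw = q
    # t = 2 * cross(q.xyz, v)
    tx = 2 * (qy * v[2] - qz * v[1])
    ty = 2 * (qz * v[0] - qx * v[2])
    tz = 2 * (qx * v[1] - qy * v[0])
    # v' = v + w * t + cross(q.xyz, t)
    return (v[0] + qw * tx + (qy * tz - qz * ty),
            v[1] + qw * ty + (qz * tx - qx * tz),
            v[2] + qw * tz + (qx * ty - qy * tx))


def local_to_world(anchor_pos: Vec3, anchor_rot: Quat, local: Vec3) -> Vec3:
    """Map an item stored in the anchor's local frame into the current
    session's world frame, given the anchor pose detected in this session."""
    rx, ry, rz = quat_rotate(anchor_rot, local)
    return (anchor_pos[0] + rx, anchor_pos[1] + ry, anchor_pos[2] + rz)


if __name__ == "__main__":
    arrow_local = (-1.0, 0.0, 0.0)
    s = 0.5 ** 0.5
    # Two sessions detect the same sign at different world poses...
    print(local_to_world((0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0), arrow_local))
    print(local_to_world((1.0, 0.0, 2.0), (0.0, s, 0.0, s), arrow_local))
    # ...but the arrow keeps the same position relative to the sign.
```

In a session where the sign defines an unrotated frame at the world origin, the arrow stored at local (-1, 0, 0) appears at world (-1, 0, 0); in a session where the sign is detected at (1, 0, 2), rotated 90° about the vertical axis, the same arrow appears at about (1, 0, 3).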

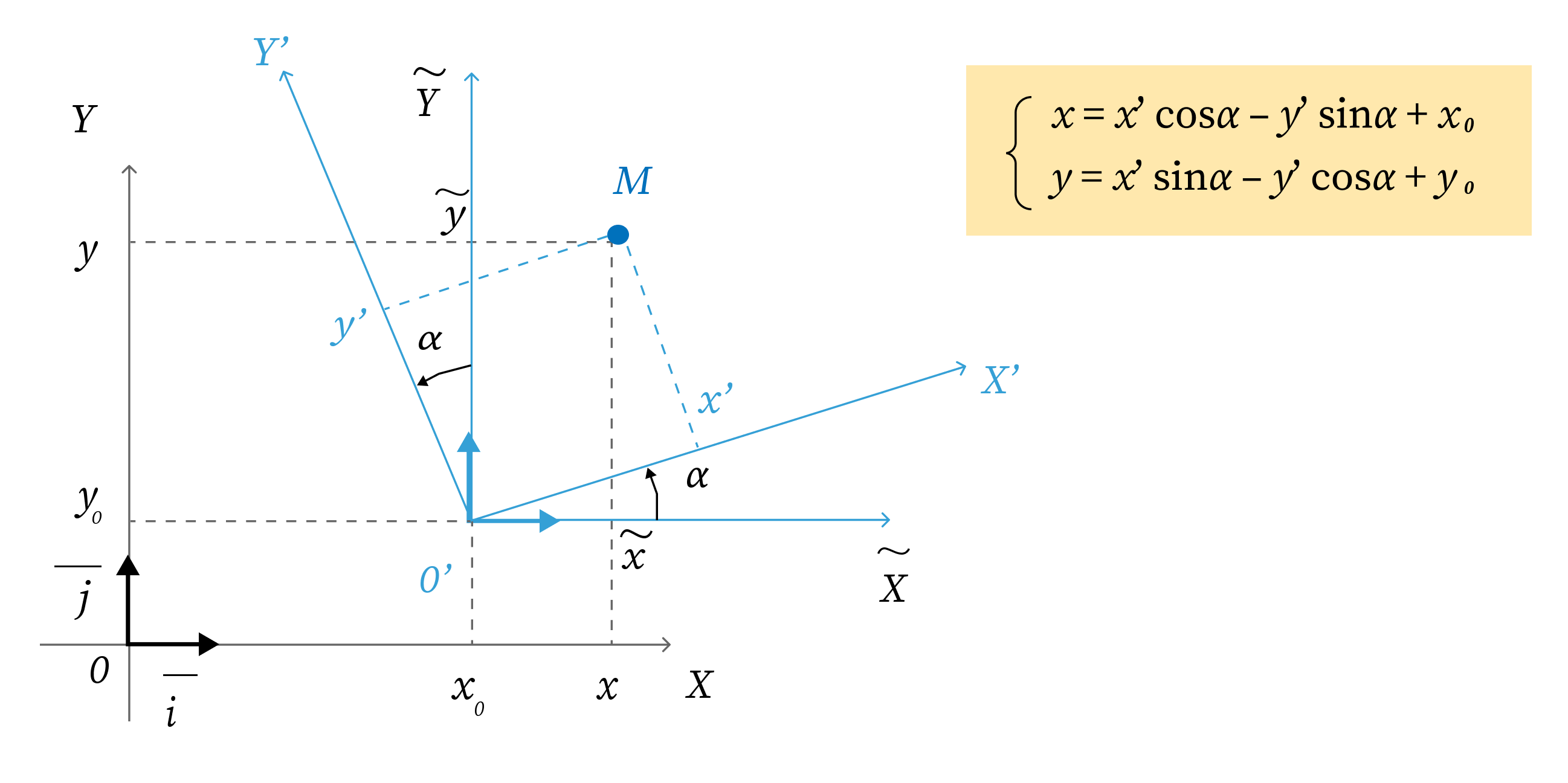

When an admin builds a map, all objects are created in the local coordinate system of the first object. However, a user should be able to select any object as the starting point. When the selected object is not the first object, we need to apply a coordinate transformation to recalculate the positions of all other objects relative to the selected one. Given the coordinate offset (x0, y0) and rotation angle α, we can recalculate the position of any object (x, y) in the “new world” (x', y') using the following formulas:
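Assuming (x₀, y₀) is the position of the newly selected object expressed in the first object’s coordinate system, and α is the angle from the first object’s x-axis to the selected object’s x-axis (counterclockwise positive), the standard 2D change of coordinates is:

$$
\begin{aligned}
x' &= (x - x_0)\cos\alpha + (y - y_0)\sin\alpha \\
y' &= -(x - x_0)\sin\alpha + (y - y_0)\cos\alpha
\end{aligned}
$$

The same formulas as a small function (the name is illustrative; α is in radians):

```python
import math


def to_new_frame(x, y, x0, y0, alpha):
    """Express point (x, y), given in the first object's frame, in the frame
    of an object located at (x0, y0) whose axes are rotated by alpha."""
    dx, dy = x - x0, y - y0                   # translate to the new origin
    c, s = math.cos(alpha), math.sin(alpha)
    return dx * c + dy * s, -dx * s + dy * c  # rotate by -alpha
```

The selected object itself always maps to (0, 0), and with α = 0 the transformation reduces to a pure translation.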

Conclusion

We have built up a significant amount of knowledge and experience from working with augmented reality and its core features. We learned how AR tracks the world around it and how we can combine real and virtual worlds. We also have a strong understanding of how AR features can be used effectively for indoor navigation.

In the process of completing the above tasks, we also encountered some of the practical limitations of augmented reality. Learning about its strengths and weaknesses has allowed us to better understand this technology and to improve our engineering skills. After encountering various technical challenges, we ultimately completed our goal. We now have a fully functioning application that can provide turn-by-turn navigation to any room on one of our office floors.

This tool can be highly valuable to newcomers and guests as they can locate their destination rooms faster and more easily. Some people may find an additional advantage in not needing to seek the assistance of strangers if they get lost in a new building.

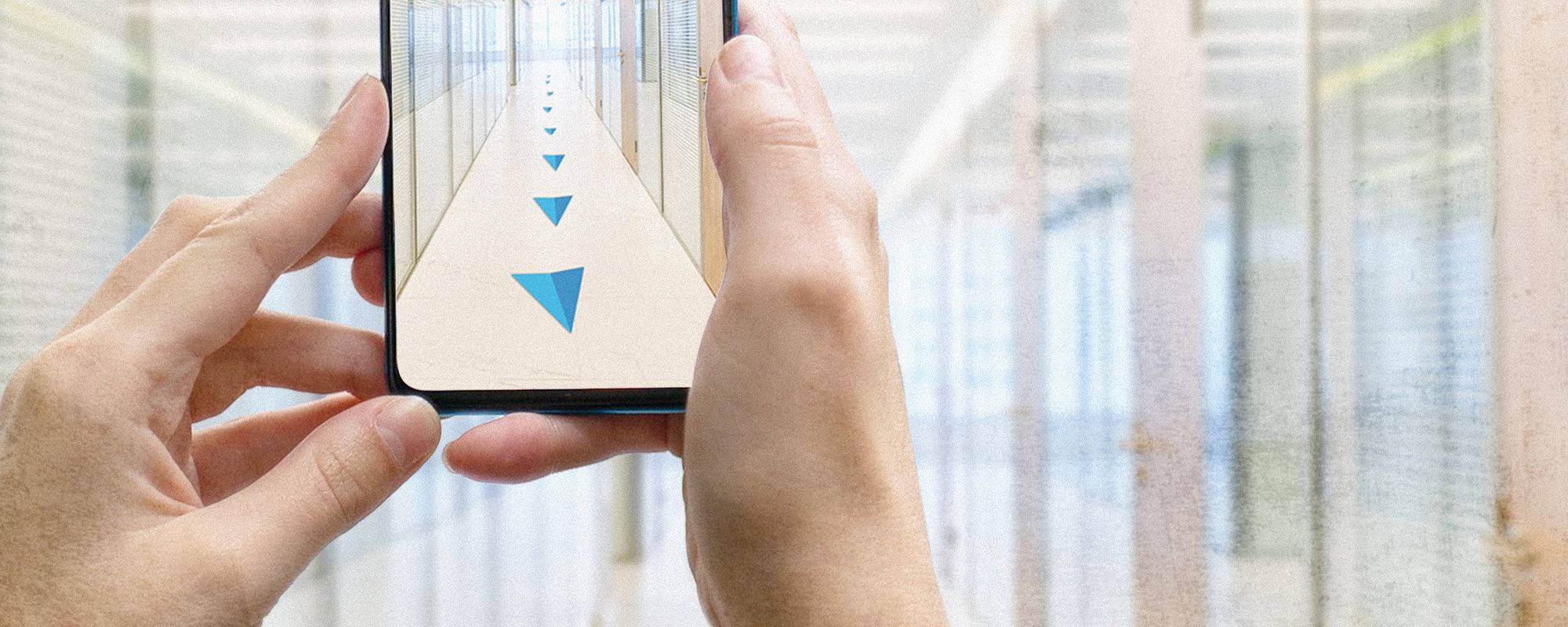

One of the great benefits of the AR solution is that the navigation instructions are overlaid on your camera view. You see the directions on your smartphone and, at the same time, the physical world around you, so you can keep an eye on obstacles while following the route.

Using our application starts with determining an entry point: the application prompts you to detect a room by scanning the image on its entrance door. What happens next depends on your role: administrator or regular user.

If you are an administrator, you can add and save virtual objects at desired locations. This can be done either automatically, by scanning an entrance door to the room, or manually, by tapping at any place on your camera view.

If you are a regular user, after detecting an entry point you can choose a destination room from the given list. Then the application builds and renders the route, and you can see the step-by-step directions to your destination on the camera view of your device.

One of our planned next steps is to support more complicated routes, for example, when you need to change floors, use an elevator, or even take a train at a large airport. Another idea is to support creating the map of virtual objects from the building’s floor plan alone, instead of relying on the administrator’s work in the field.

With such a solution in place, our application could be scaled and adapted to offer indoor navigation in any environment.