Building an IoT Platform in GCP: A Starter Kit

Over the past decade, the complexity of manufacturing processes and assembly lines has increased significantly. Whereas businesses once relied on linear assembly lines, today’s assembly lines can produce customized products, as seen in the automotive industry. However, introducing customization and flexibility into the production process does not come without challenges. It requires effective management of assembly lines and machines on the floor, as well as the ability to identify issues and anomalies during the assembly process, rather than after the fact. Automated process management involves gathering data from assembly lines and machines, identifying problems with consignments or hardware, and more. Modern cloud platforms offer a range of capabilities for building an IoT platform, including integration with facilities, data collection, and ML platform integration.

For companies embarking on this journey for the first time, it can be a complicated process. With this in mind, Grid Dynamics has developed a starter kit for building an IoT platform from scratch in GCP. The goal of this starter kit is to provide modular components to address various capabilities such as data collection, deployment to the edge, IoT device management, and more, thereby reducing the time-to-market for developing an IoT platform from scratch.

The GCP IoT Platform Starter Kit is open source and will be available on the GCP marketplace soon.

Why should smart manufacturing enterprises consider cloud?

Manufacturing is not the only industry that has seen significant change over the past decade. Public clouds, which were once met with little or no trust, have gained widespread trust and adoption across all industries. These clouds have also evolved their service offerings to provide the necessary capabilities for modern business, including the ability to connect to edge sites, stream metrics, process data, and build anomaly detection in batch or near real-time mode. The advances in public clouds have not gone unnoticed by the manufacturing industry, which has begun its own journey to the cloud. In general, a public cloud provides building blocks that are easier for businesses to adopt into their processes than building similar functionality from scratch on premises, and it offers the ability to scale on demand.

The most common problems solved by smart manufacturing processes in the cloud are:

- Health monitoring and anomaly detection;

- Predictive maintenance;

- Metric-based quality control;

- Visual quality control; and

- Remote device control and configuration management.

Monitoring and anomaly detection typically involve a combination of rule-based or machine learning (ML) based checks at the facility level, data collection in the cloud, and an ML inference layer in the cloud. This can be implemented entirely in the cloud, with data flowing from the facility to the cloud for processing.

Predictive maintenance is typically an ML-based model running in the cloud on top of data gathered from the facility. It can be implemented in real-time or as a batch process, with the latter being more common in manufacturing facilities.

Metric-based quality control is usually run on a gateway server, controlling data close to the data sources. In the event of any issues, an alert is sent to the cloud and to the corresponding facility service.
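As a rough illustration of a metric-based check that could run on a gateway, the sketch below compares each reading against a set of allowed ranges and returns alert messages. The metric names, limits, and the `Rule` and `check_reading` helpers are hypothetical and not part of the starter kit.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Rule:
    """Allowed range for one metric (names and limits are hypothetical)."""
    metric: str
    low: float
    high: float


def check_reading(reading: Dict[str, float], rules: List[Rule]) -> List[str]:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for rule in rules:
        value = reading.get(rule.metric)
        if value is None:
            alerts.append(f"{rule.metric}: missing")
        elif not rule.low <= value <= rule.high:
            alerts.append(f"{rule.metric}: {value} outside [{rule.low}, {rule.high}]")
    return alerts


RULES = [Rule("temperature_c", 20.0, 80.0), Rule("torque_nm", 1.5, 2.5)]

alerts = check_reading({"temperature_c": 91.2, "torque_nm": 2.0}, RULES)
print(alerts)
```

In a real deployment, each alert would be forwarded to the cloud and to the corresponding facility service rather than printed.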

Visual quality control is often deployed at the facility to avoid sending large amounts of video and image data to the cloud. It typically only sends data to the cloud in the event of an identified anomaly, and triggers the facility service to report or take action on the issue.

The layout of IoT devices at a facility can be complex, with a large number of devices and management challenges. An IoT platform can help manage device configuration, remotely control individual devices or groups of devices, and maintain connectivity between the cloud IoT Core and the devices.

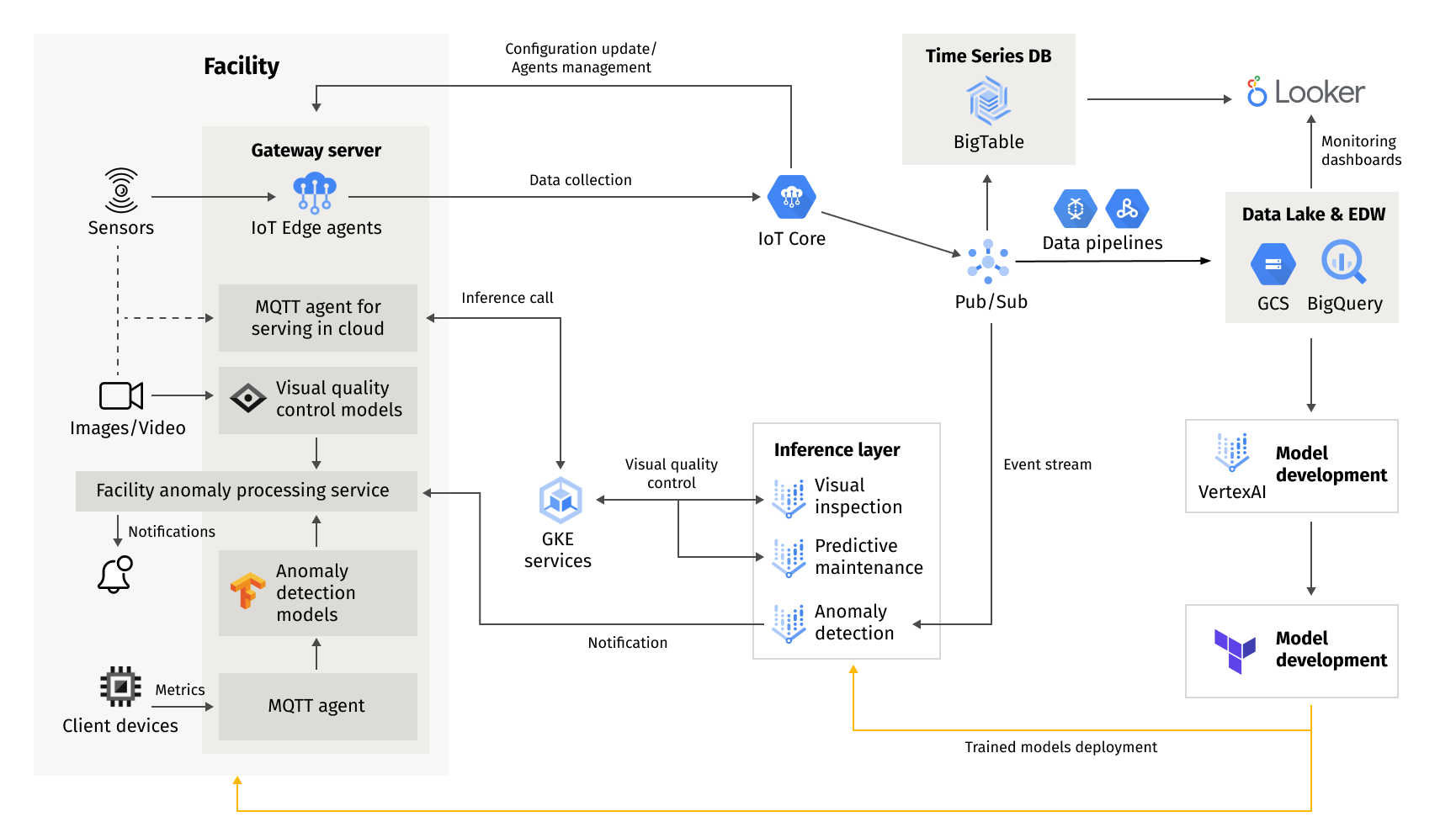

The general architecture that might host any of the aforementioned business use cases is shown below:

The reference architecture illustrates how serving on the gateway and in the cloud can be implemented and integrated into a single IoT platform. The gateway layer integrates with manufacturing conveyors and runs ML models in Kubernetes to perform real-time inference on the gateway. It also has agents that are connected to the IoT platform in the cloud. The cloud portion of the architecture includes a data platform for data collection, an ML platform for model development and real-time inference, and a middleware service responsible for integrating the gateway with the inference layer. In the following sections, we will discuss how the gateway and cloud can be connected and how inference can be performed on the gateway, and in the cloud.

Architecture of the IoT platform for smart manufacturing

The IoT platform roughly consists of the following components:

- An edge layer;

- An IoT service in the cloud;

- An inference layer in the cloud; and

- A data and ML platform.

In this blog post, we will primarily focus on the design of the edge layer, the IoT service in the cloud, and the inference layer in both the cloud and at the edge. The edge layer may have a direct connection to the cloud, providing a low-latency stream of data, batch data exports, or a connection through an intermediate device where there is no direct connection between the facility and the cloud. We will focus on streaming cases and building the inference layer on the gateway and in the cloud.

Data collection and model development

Data collection begins with IoT Core, which receives data from the edge layer and pushes it to Pub/Sub. IoT Core is responsible for encrypting the data if the connection between the facility and the cloud is over an unsecured network, as well as collecting data and sending commands to devices. Data collection can be implemented in batch mode, where the device sends data at intervals, or in streaming mode. Batch processing is similar to traditional data processing and typically does not require strong SLAs. Stream processing, on the other hand, requires strict low-latency SLAs on data collection, extraction, and validation, as well as model serving on top of the incoming data, and the ability to trigger a device if an anomaly is identified.

IoT Core provides a bidirectional connection between devices and the cloud, and allows data to be collected and pushed to Pub/Sub. From there, various consumers can use the data for visual inspection and provide feedback to the device, store the data in a data lake or Google Cloud Storage for archival purposes, or write it directly to BigQuery for self-service access. If necessary, specific business logic can be implemented using Cloud Functions or Cloud Run.
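To make the consumer side more concrete, here is a minimal sketch of how a Cloud Functions or Cloud Run handler might unpack a Pub/Sub push request, whose body is a JSON envelope with a base64-encoded `data` field. The `route` function, the sink names, the `anomaly` payload field, and the attribute names used here are assumptions for illustration only.

```python
import base64
import json
from typing import Dict, Tuple


def decode_push_envelope(envelope: Dict) -> Tuple[Dict, Dict]:
    """Extract (payload, attributes) from a Pub/Sub push request body."""
    message = envelope["message"]
    payload = json.loads(base64.b64decode(message["data"]).decode("utf-8"))
    return payload, message.get("attributes", {})


def route(payload: Dict, attributes: Dict) -> str:
    """Pick a hypothetical sink name for a decoded message."""
    if attributes.get("subFolder") == "images":
        return "visual-inspection"
    if payload.get("anomaly"):
        return "device-feedback"
    return "bigquery"


# Example request body, built by hand for illustration.
body = {
    "message": {
        "data": base64.b64encode(json.dumps({"temperature_c": 71.5}).encode()).decode(),
        "attributes": {"deviceId": "press-01"},
        "messageId": "1",
    },
    "subscription": "projects/example/subscriptions/telemetry-push",
}

payload, attributes = decode_push_envelope(body)
print(route(payload, attributes), payload)
```

Keeping decoding separate from routing makes it easier to add consumers later without touching the envelope handling.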

IoT Core also manages configuration updates on devices. A new configuration update is sent to the device through a command.

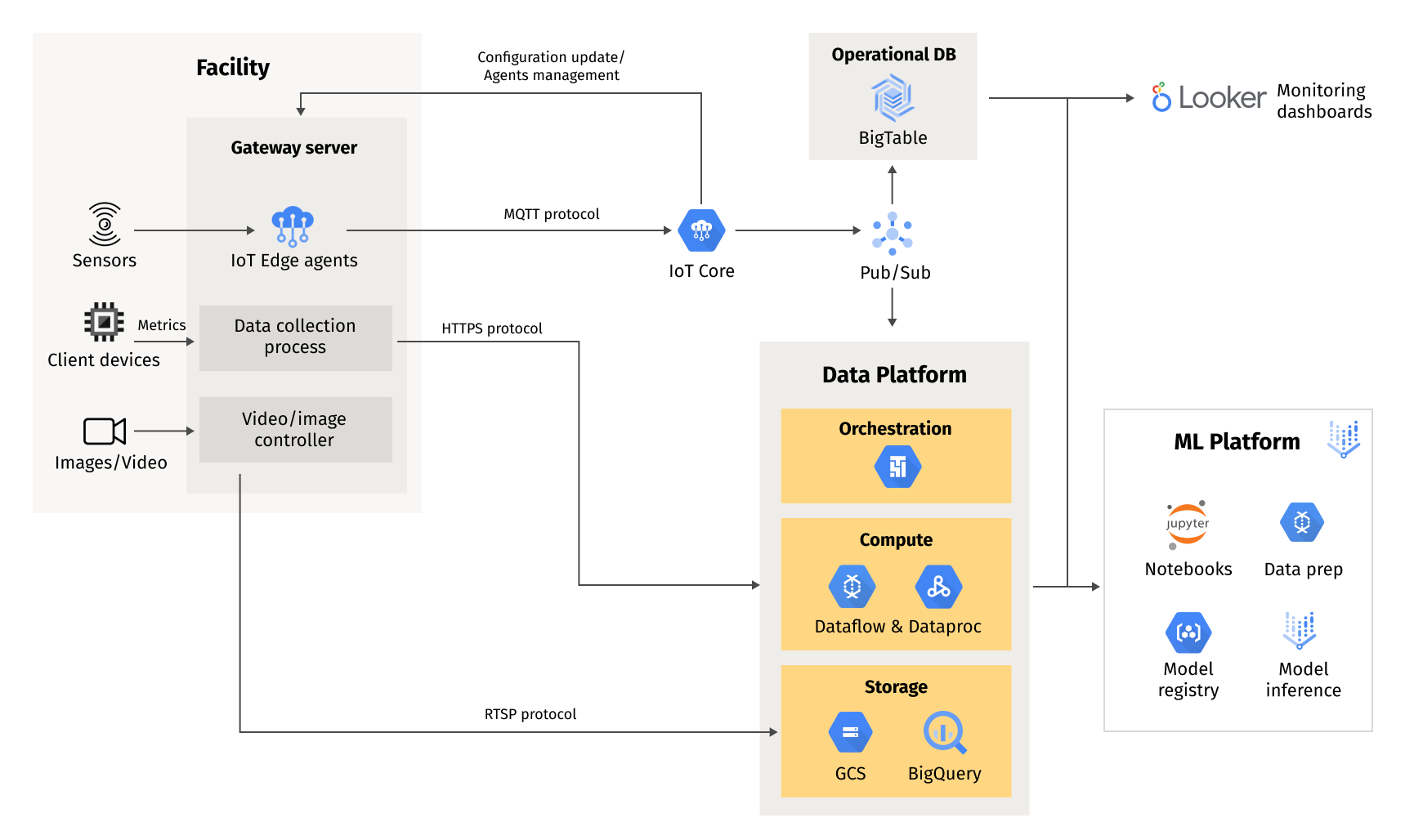

Data collection can be implemented in various ways, such as using MQTT to integrate with facility devices and streaming data to the data and ML platform through IoT Core. If this is not possible, a data collection agent can send data to the cloud in batch mode.
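For the batch-mode case, a data collection agent might buffer readings and release them as a single payload once enough have accumulated or enough time has passed. The sketch below is one possible shape for such a buffer; the `BatchBuffer` class, its parameters, and the JSON-lines format are assumptions rather than the starter kit's design.

```python
import json
import time
from typing import Dict, List, Optional


class BatchBuffer:
    """Collects readings and releases them as one JSON-lines batch."""

    def __init__(self, max_items: int, max_age_s: float, clock=time.monotonic):
        self.max_items = max_items
        self.max_age_s = max_age_s
        self._clock = clock
        self._items: List[Dict] = []
        self._started = 0.0

    def add(self, reading: Dict) -> Optional[str]:
        """Add a reading; return a serialized batch if a flush is due, else None."""
        if not self._items:
            self._started = self._clock()  # age is measured from the first item
        self._items.append(reading)
        too_many = len(self._items) >= self.max_items
        too_old = self._clock() - self._started >= self.max_age_s
        return self.flush() if (too_many or too_old) else None

    def flush(self) -> Optional[str]:
        """Serialize and clear whatever is buffered; None if the buffer is empty."""
        if not self._items:
            return None
        batch = "\n".join(json.dumps(item, sort_keys=True) for item in self._items)
        self._items = []
        return batch


buffer = BatchBuffer(max_items=3, max_age_s=60.0)
batches = [buffer.add({"seq": i}) for i in range(4)]
print(batches)
```

Note that this version only checks the age limit when a new reading arrives; an agent with sparse data would also call `flush()` on a timer.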

Video processing can be challenging, and it is recommended to process video on premises and identify anomalies as soon as they appear. Streaming video to the cloud can be costly, and building an inference layer in the cloud would require a sophisticated architecture that can scale up or down. If video streaming is still necessary, RTSP can be used to stream data and store it in Google Cloud Storage.

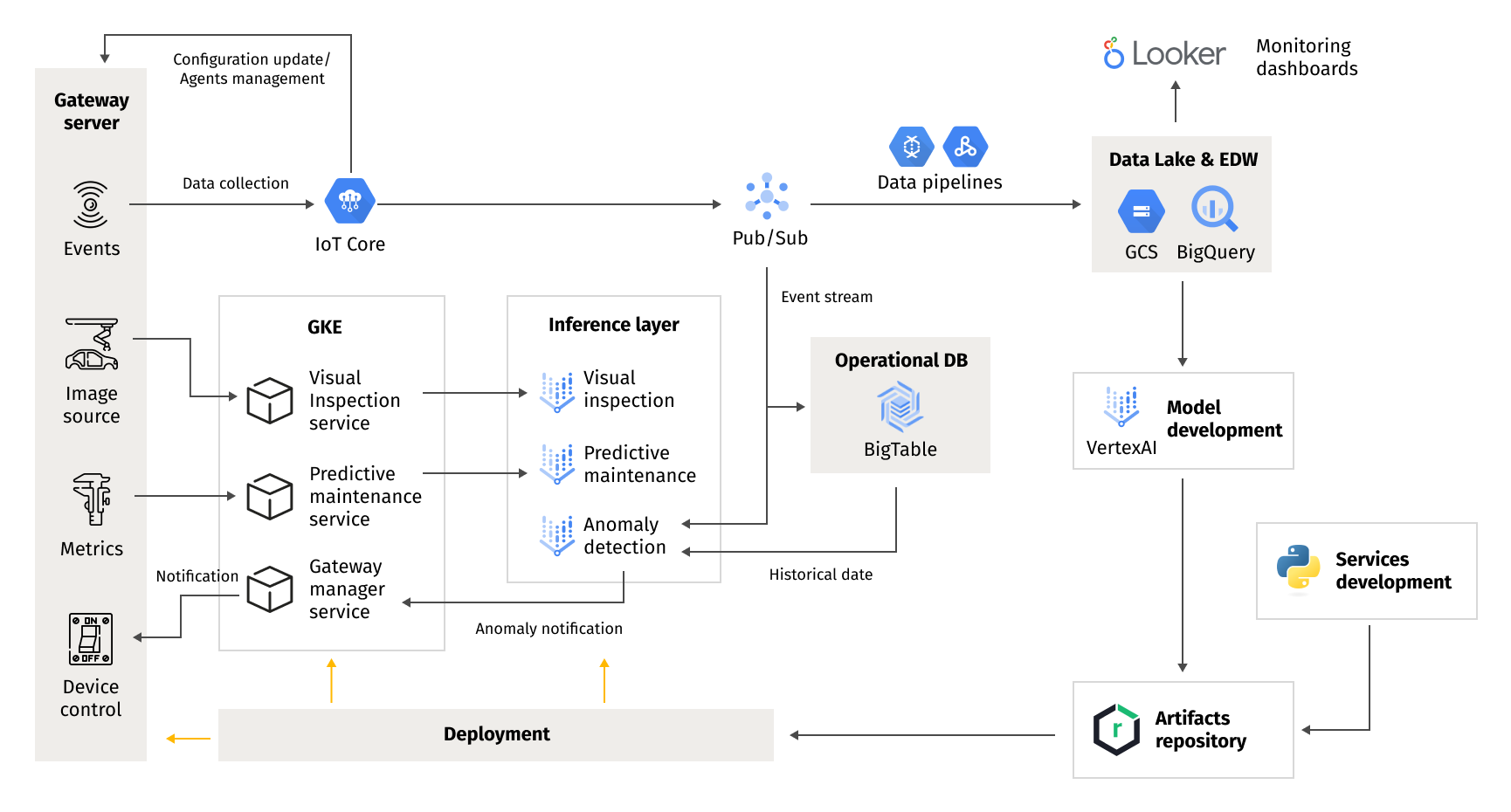

Inference in the cloud

Inference in the cloud is typically built in a traditional data platform/ML platform manner, with external data sources ingested into the platform and processed by ML models. The most challenging aspects of this architecture for manufacturing are latency and anomaly notification. Data collection begins with a connector on the edge layer, which streams data to the data platform or loads it in batch mode. The data is ingested, processed, and passed to an ML prediction service, which sends a notification if an anomaly is detected. The reference architecture for Google Cloud Platform provides an end-to-end perspective on this process:

Data collection is built on top of IoT Core, which is connected to gateway agents via the MQTT protocol. Data is streamed via Pub/Sub to Google Cloud Storage for archival purposes and loaded into BigQuery for specific use cases such as analysis, reporting, and visualizations.

Gateway agents invoke services running in Kubernetes, which process the request and call the model in Vertex AI. These services, which make up the intermediate layer, process the data, retrieve any historical time series, and invoke the model with the defined parameters. Any identified anomalies are passed to the gateway management service, which in turn has an MQTT connection with the gateway anomaly handler. This handler processes anomalies and manages the manufacturing facilities.
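The intermediate layer described above can be sketched as a small class that keeps a rolling history per device, invokes a model on each full window, and notifies a handler when the score crosses a threshold. Everything here is illustrative: the model is a local stand-in for a hosted prediction endpoint, and the class name, window size, and threshold are assumptions.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Optional

Model = Callable[[List[float]], float]  # takes a window, returns an anomaly score


class InferenceMiddleware:
    """Keeps recent values per device and scores each full window."""

    def __init__(self, model: Model, window: int, threshold: float,
                 notify: Callable[[str, float], None]):
        self._model = model
        self._threshold = threshold
        self._notify = notify
        self._history: Dict[str, Deque[float]] = defaultdict(
            lambda: deque(maxlen=window))

    def handle(self, device_id: str, value: float) -> Optional[float]:
        """Record a value; return the score, or None until the window is full."""
        history = self._history[device_id]
        history.append(value)
        if len(history) < history.maxlen:
            return None
        score = self._model(list(history))
        if score > self._threshold:
            self._notify(device_id, score)
        return score


def deviation_from_mean(series: List[float]) -> float:
    """Stand-in model: distance of the latest value from the mean of the rest."""
    *past, latest = series
    return abs(latest - sum(past) / len(past))


notified = []
middleware = InferenceMiddleware(
    deviation_from_mean, window=4, threshold=5.0,
    notify=lambda device, score: notified.append((device, score)))

scores = [middleware.handle("press-01", v) for v in [10.0, 10.0, 10.0, 10.0, 30.0]]
print(scores, notified)
```

In the architecture described here, the `notify` callback would hand the anomaly to the gateway management service instead of appending to a list.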

Gateway inference

Hosting on gateway nodes requires hardware that is capable of running multiple microservices, or potentially even a Kubernetes cluster. Modern conveyor manufacturing is a complex process that often requires extremely low latency (under 100ms). In cases where the manufacturing facilities are located in Asia and the IoT platform in the US or Europe, it may be difficult to achieve latency under 100ms, including routing and model inference, if the model is not running at the edge layer. Inference at the edge layer can help meet stringent SLAs and integrate anomaly detection of any complexity into the assembly process. Inference at the edge can be implemented in several ways:

- Statistical process control, which does not involve machine learning;

- Rule-based anomaly detection;

- Lightweight models running on the edge; and

- CPU/GPU intensive models running on the edge.

The appropriate approach will depend on the hardware capabilities: if it is not feasible to run models at the edge, then statistical process control can be used to identify outliers, anomalies, or defects. This approach involves defining the quality control process, creating run charts and control charts, and performing inspections. The primary advantage of statistical process control (SPC) is that it emphasizes early detection and prevention rather than correcting existing problems. It is the most commonly used approach in manufacturing in general.

While SPC can help to define and follow a data quality process, it does not provide real-time anomaly detection and cannot assist with identifying issues on the conveyor.
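As a small worked example of the control-chart idea, the sketch below computes limits for an individuals chart from a baseline sample, estimating sigma from the average moving range divided by the standard constant 1.128, and then flags later measurements that fall outside three sigma. The sample values and function names are made up for illustration.

```python
from statistics import mean
from typing import List, NamedTuple, Tuple

D2_FOR_N2 = 1.128  # control-chart constant for moving ranges of two points


class ControlLimits(NamedTuple):
    lower: float
    center: float
    upper: float


def individuals_limits(baseline: List[float]) -> ControlLimits:
    """Compute 3-sigma limits, estimating sigma from the average moving range."""
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / D2_FOR_N2
    center = mean(baseline)
    return ControlLimits(center - 3 * sigma, center, center + 3 * sigma)


def out_of_control(values: List[float], limits: ControlLimits) -> List[Tuple[int, float]]:
    """Return (index, value) for every point outside the control limits."""
    return [(i, v) for i, v in enumerate(values)
            if not limits.lower <= v <= limits.upper]


# Hypothetical in-control measurements used to establish the limits.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.3, 9.7]
limits = individuals_limits(baseline)

flagged = out_of_control([10.1, 10.9, 9.5, 9.1], limits)
print(limits, flagged)
```

With this baseline the limits come out at roughly 9.2 and 10.8, so the second and fourth new measurements are flagged.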

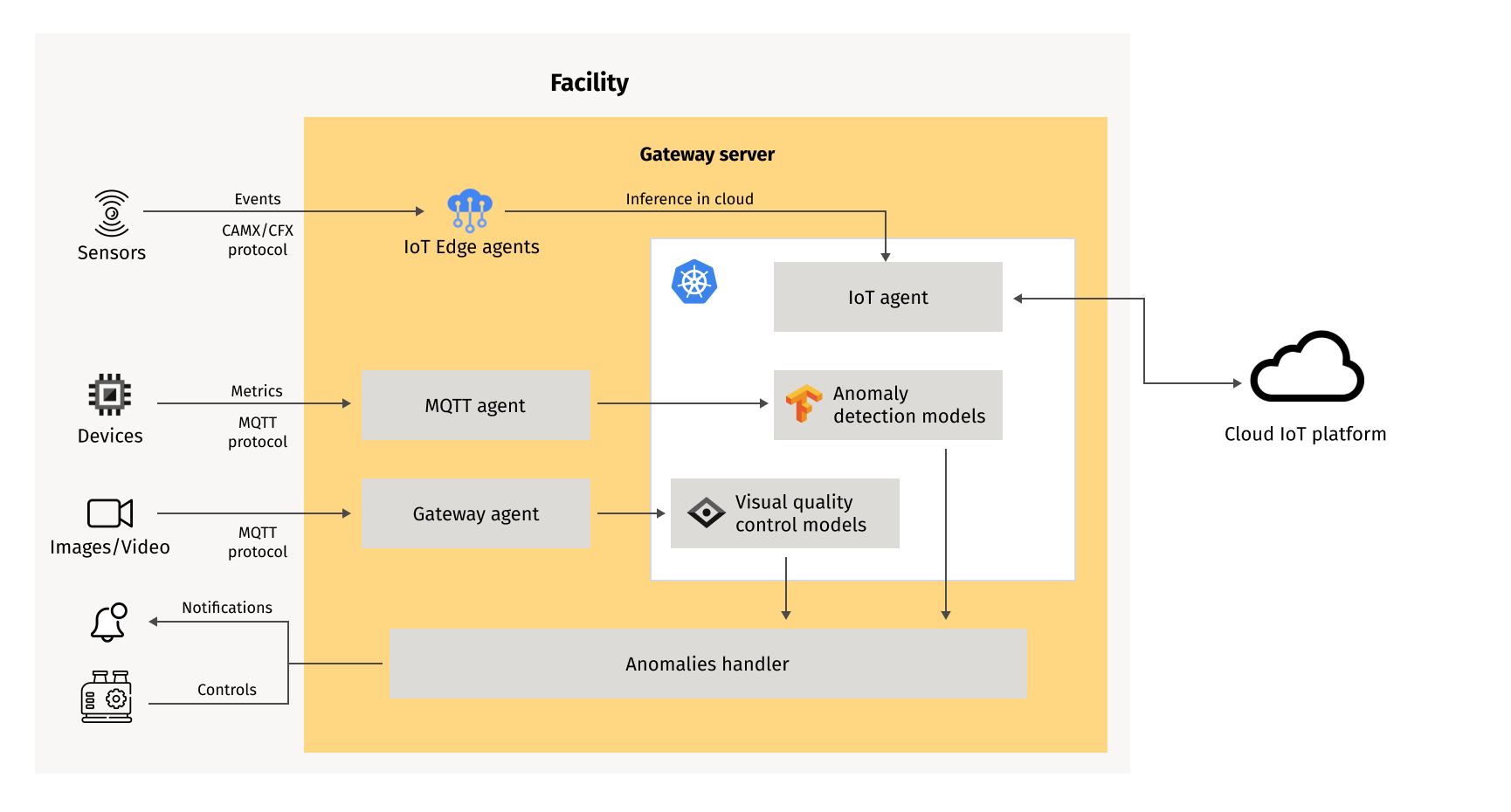

The reference architecture of the gateway layer is portrayed in the graphic below:

Models running on the gateway are the same as those running in the cloud and are typically packaged as Docker containers. These containers are deployed to the Kubernetes cluster on the gateway using Terraform, establish a connection with MQTT agents, and expose a REST interface if necessary. Scaling on the gateway is usually not required because the workload volumes are known in advance.

Business cases that require low-latency responses are handled by models running in Kubernetes, while the IoT agent is responsible for sending data to the cloud. IoT agents are connected to IoT Core via MQTT, sending data and receiving controls such as configuration updates or agent shutdown.
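To illustrate how an agent might separate outgoing data from incoming controls, the sketch below builds the MQTT client ID and topic names, assuming the path layout used by the Cloud IoT Core MQTT bridge. The helper names and the example project, registry, and device identifiers are hypothetical, and the layout should be checked against the service documentation before use.

```python
from typing import Dict


def client_id(project: str, region: str, registry: str, device: str) -> str:
    """Full device path used as the MQTT client ID (assumed layout)."""
    return (f"projects/{project}/locations/{region}"
            f"/registries/{registry}/devices/{device}")


def device_topics(device: str) -> Dict[str, str]:
    """Topics an agent publishes to or subscribes to (assumed layout)."""
    base = f"/devices/{device}"
    return {
        "telemetry": f"{base}/events",      # agent publishes sensor data
        "state": f"{base}/state",           # agent publishes its own state
        "config": f"{base}/config",         # agent receives configuration updates
        "commands": f"{base}/commands/#",   # agent receives one-off commands
    }


def classify_incoming(device: str, topic: str) -> str:
    """Tell configuration updates apart from commands such as a shutdown."""
    base = f"/devices/{device}"
    if topic == f"{base}/config":
        return "config"
    if topic.startswith(f"{base}/commands"):
        return "command"
    return "unknown"


topics = device_topics("gateway-agent-1")
print(client_id("example-project", "europe-west1", "plant-a", "gateway-agent-1"))
print(topics["telemetry"], classify_incoming("gateway-agent-1", "/devices/gateway-agent-1/commands/shutdown"))
```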

Models running in Kubernetes are invoked by the corresponding agents when an image or sensor reading is received. The model processes the signal and, if it is an anomaly, calls the anomaly handler. In various manufacturing deployments, this handler might be a conveyor API, Cogiscan adaptors, or custom-built services. Machine adaptors collect the data and send it to an agent running on the gateway using the MQTT, CAMX, or CFX protocol. The agent is responsible for preparing the data, which may include parsing, formatting, decrypting, or preprocessing before it is passed to the model.
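The flow from machine adaptor to anomaly handler can be sketched as a small pipeline: the agent parses and formats the raw message, passes the features to a model, and calls the handler when the model reports an anomaly. The message layout, the `make_agent` helper, and the threshold model below are placeholders, not the interfaces of any specific adaptor or conveyor API.

```python
import json
from typing import Callable, List, Tuple


def prepare(raw: bytes) -> Tuple[str, List[float]]:
    """Parse a JSON message and format its values as floats."""
    message = json.loads(raw.decode("utf-8"))
    return message["machine"], [float(v) for v in message["values"]]


def make_agent(model: Callable[[List[float]], bool],
               on_anomaly: Callable[[str, List[float]], None]) -> Callable[[bytes], bool]:
    """Build a message callback that prepares data, runs the model, and reacts."""
    def on_message(raw: bytes) -> bool:
        machine, features = prepare(raw)
        is_anomaly = model(features)
        if is_anomaly:
            on_anomaly(machine, features)
        return is_anomaly
    return on_message


stopped = []  # stands in for a call to a conveyor API or custom service
agent = make_agent(
    model=lambda features: max(features) > 100.0,  # placeholder for a real model
    on_anomaly=lambda machine, features: stopped.append(machine))

first = agent(b'{"machine": "smt-line-2", "values": ["42", 130]}')
second = agent(b'{"machine": "smt-line-2", "values": [42, 50]}')
print(first, second, stopped)
```

Passing the model and the handler in as callables keeps the agent independent of how the model is served and of which facility service reacts to the anomaly.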

Google Cloud IoT Platform Starter Kit: Conclusion

Developing a modern IoT platform can be challenging and may require a change in software development paradigms. The cloud offers a wide range of capabilities, which can sometimes create uncertainty about which solution to choose. To address this challenge, Grid Dynamics has developed a blueprint and starter kit for building an IoT platform on Google Cloud Platform. The starter kit includes infrastructure automation with Terraform, integration between services, proper security configuration, and an application layer that brings together data collection, data landing, sample anomaly detection models, and a serving layer in both batch and stream modes. It is worth noting that the starter kit requires integration with a specific edge layer and configuration to achieve proper automation for the cloud part. This automation helps accelerate time-to-market and avoid common pitfalls when building your first IoT platform in the cloud.

Interested in the Google Cloud IoT Platform Starter Kit? Get in touch with us to start a conversation with one of our tech experts.