Composable commerce essentials: Demystifying modern e-commerce platforms

For many years, e-commerce applications have been built from custom development services, leveraging great open-source frameworks and tools. However, making all these components work together as one ecosystem—from technical aspects to managing teams and aligning their milestones—is no easy feat.

What if there was a way to significantly reduce this effort without sacrificing flexibility? Imagine a new foundation that combines the benefits of traditional e-commerce platforms and contemporary approaches to building scalable applications. Enter composable commerce.

You may already be familiar with composable commerce, but how does it actually change the commerce game, and what components do we need to "compose" this "commerce" from? In this discussion, we'll delve beyond a surface-level understanding of composable commerce and explore the technical aspects, uncovering challenges and insights that can guide you in making informed e-commerce decisions.

Composable commerce is the prime strategy for building scalable e-commerce solutions today. This blog post will guide you through this complex landscape, ensuring you're well-prepared with answers to the following key questions:

- What is the primary value proposition of composable commerce and why does it matter for your business?

- How do you assess various packaged business capabilities and composable commerce solutions in the market, aligning them with your business requirements?

- What integration patterns are available to connect PBCs, and how do you determine the best fit? Explore examples like commercetools, Algolia, and more.

- How do you approach data modeling, importation, and validation effectively?

- When integrating new PBCs and establishing a connection to your storefront, how can you ensure data consistency throughout the process?

- What strategies can you employ to implement intricate business workflows using specialized PBCs?

Why opt for composable commerce?

Composable commerce is an emerging solution design approach to digital commerce. The trend is well supported and promoted by SaaS product companies that offer specialized packaged business capabilities (PBCs), as well as the MACH Alliance, which advocates for modular and agile business models. This approach extends to enterprises a value proposition centered around "flexibility," allowing for effortless customization and substitution of third-party PBCs—such as payment systems, search functionalities, shopping carts, or any feature that enhances the customer experience—on the backend, all while facilitating smooth integration with frontend applications. Constructing composable commerce solutions through adherence to MACH principles—namely, microservice-based, API-first, cloud-native, and headless—further bolsters this business adaptability.

Composable commerce naturally aligns with cloud-native strategies, while also encompassing broader possibilities. It benefits significantly from the serverless approach and event-driven architectures, enabling multi-channel experiences for customers at a global scale.

If you’re eager to learn more, check out these comprehensive whitepapers:

- Digital commerce transformation: A journey to composable architecture

- Achieving business flexibility with composable commerce

- Composable commerce 101: a guide for luxury retail brands

Although composable commerce is an abstract concept that can be implemented using a range of technologies and cloud providers, we'll focus on providing a clearer understanding through concrete examples (but keep in mind that you will always have the option to select different solutions for every specific business need). In this instance, we will center our discussion on Amazon Web Services (AWS) cloud and commercetools as the primary backend commerce platform, paired with Next.js & React for the frontend. Furthermore, we’ll showcase a few other integrated PBCs to demonstrate how the functionality can be extended. If you’re looking for practical steps to take and more hands-on examples, visit our Composable Commerce Starter Kit Page. Now, let’s dive into the considerations of building a composable commerce ecosystem using MACH architecture, as well as understand the key components and challenges.

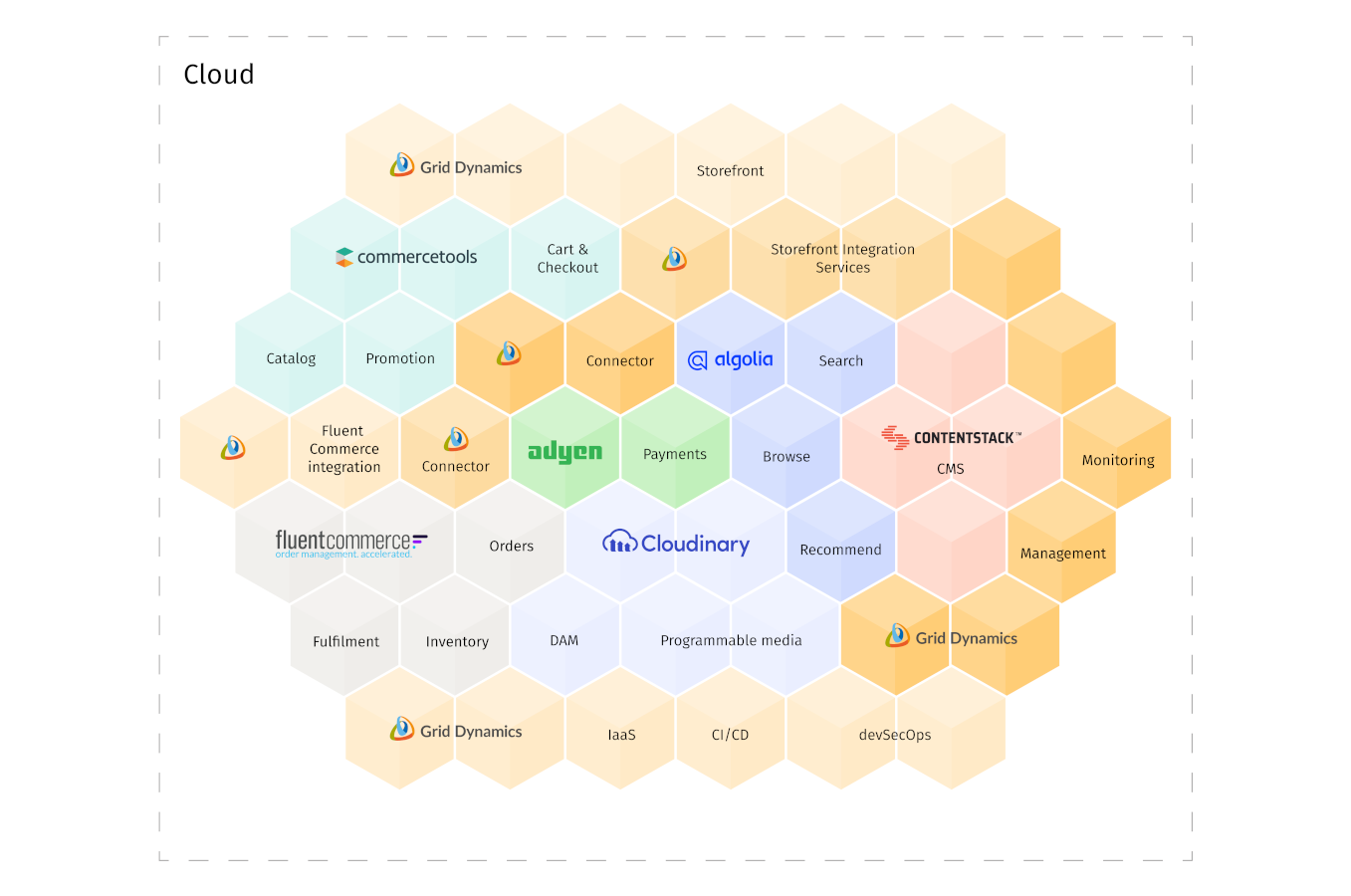

Bringing best-of-breed products together to design your composable solution

The fundamental steps of the composable commerce process include identifying your domain, and then selecting, combining and integrating various specialized products (PBCs) that cater to precise business functions or domains. These PBCs could include, for example, product information management (PIM), content management, and search functionality. After identifying your domain requirements, you can select the best solutions from various PBC vendors for each of these domains.

The initial focus is on establishing a transactional bedrock for the new architecture—the commerce platform. You can explore the marketed features provided by different commerce vendors and analyze if they effectively serve your business flows and requirements. If you conclude that your target solution lacks some features, you can easily look for a more feature-rich solution. The realm of available PBCs is expansive, yet your objective should remain to pinpoint the cream of the crop that perfectly aligns with your requirements.

In this context, commercetools stands out as a robust contender. In 2022, it was named a Leader in the Gartner Magic Quadrant for Digital Commerce for the third consecutive year. The platform distinguishes itself through a rich array of features within the composable commerce landscape, an arena where no single product can comprehensively encompass all the business capabilities, as older monolithic solutions once did.

commercetools is primarily designed to provide core commerce capabilities such as product catalog management, customer profile handling, shopping cart oversight, shipping alternatives, and orchestration of the checkout procedure. It also provides a rich collection of other basic capabilities such as full-text search, order management, digital asset management, and promotions. However, to access an even more advanced range of features within these domains, the inclusion of specialized PBCs could be a viable consideration.

As you proceed to build your composable commerce architecture, you can augment the commerce platform with the following domain-leading PBCs, integrating them on top of commercetools:

- Algolia - Search and Recommendations

- Contentstack - Content Management System

- Adyen - Payments

- Cloudinary - Digital Asset Management

- Fluent Commerce - Order Management System and Inventory Management System

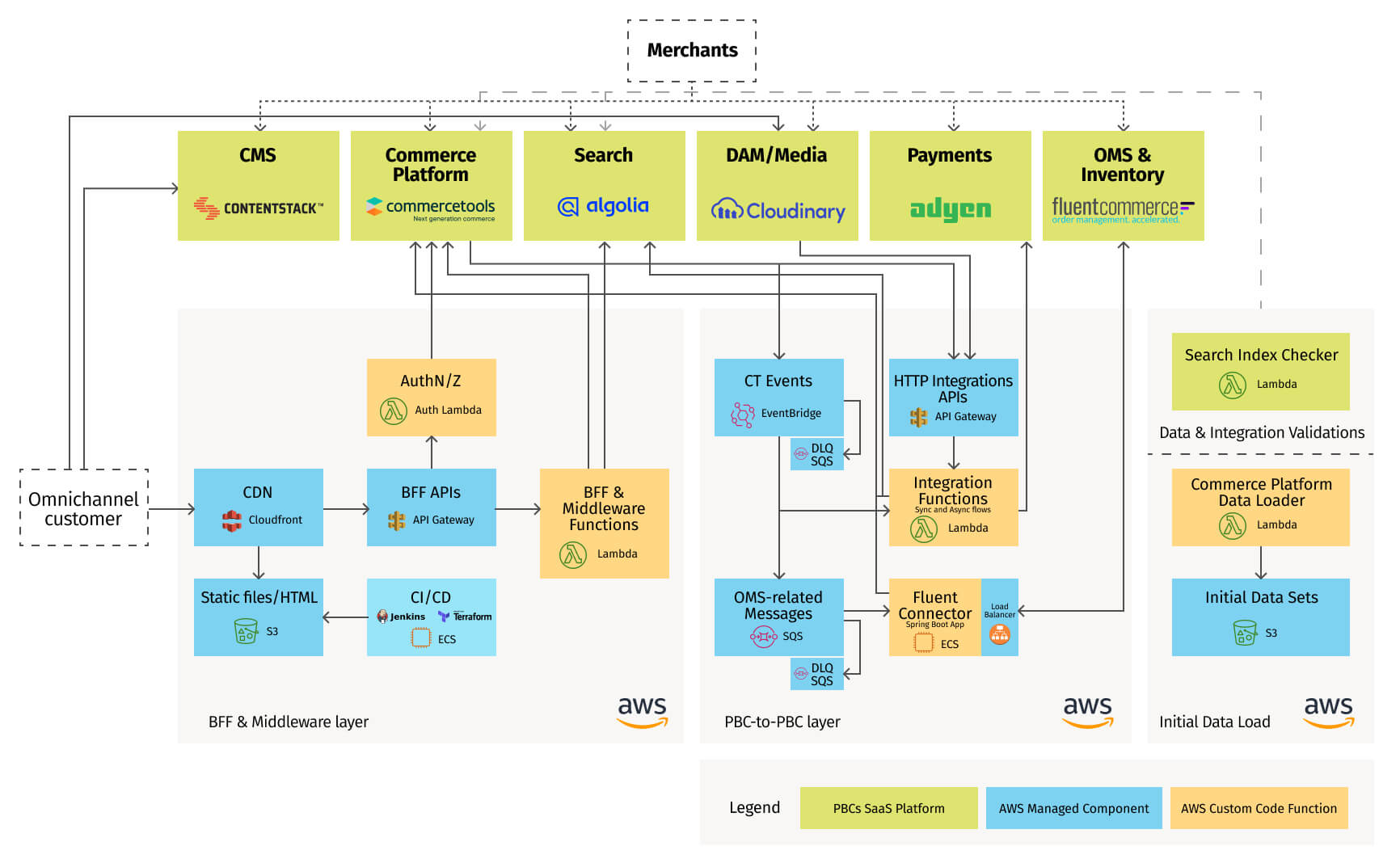

An essential aspect of the architecture is integration between PBCs, so that they can exchange data and notify each other of specific state changes. One way to implement these PBC-to-PBC integrations is with an event-driven and serverless approach. For platform events, such as updates to product information or changes in inventory levels, AWS Lambda functions transform and process the events, enabling asynchronous communication between the source system (e.g., commercetools or Fluent Commerce) and other PBCs.

As composable commerce continues to expand and evolve, new PBCs will emerge within the market. Simultaneously, existing ones will undergo enhancements, incorporating novel features and capabilities. Furthermore, it's worth acknowledging that the PBC vendor you favor might change, prompting the necessity for an adaptable solution design.

When evaluating potential PBCs for your solution, it's prudent to consider the following aspects in addition to pricing:

Exploring integration patterns for diverse components

As we delve into integration patterns for various components, the interplay between the composable commerce architecture and the chosen design pattern plays a pivotal role in determining how PBCs integrate within a solution. It's important to recognize that the inherent integration capabilities of the products themselves can impose certain limitations and guide this integration process to some extent. Traditionally, we consider three core integration patterns:

- Asynchronous messaging;

- API synchronization extensions; and

- Orchestration.

The breadth of integration options provided by the product vendor significantly impacts the flexibility available for constructing your solution. However, it remains incumbent upon you to harness these capabilities effectively.

With the orchestration pattern, you assume complete control over managing the workflows. API synchronization extensions, in turn, let you connect to the functionality of other PBCs by making synchronous HTTP endpoint calls, for example to trigger a specialized system for cart promotion calculations or inventory allocation. Because the call is synchronous, your response can intervene in the process, particularly when an error means the operation must be halted. However, it's vital to consider latency (the call typically has to complete within a few seconds), as well as standard concerns such as API availability and an effective HTTP retry mechanism.
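To make the synchronous extension pattern more concrete, here is a minimal sketch of the decision logic such an extension might run inside an AWS Lambda function. The input and response shapes (`action`, `resource.obj`, `responseType`) follow the commercetools API Extensions format as we understand it, but the cart shape is heavily simplified, and `StockLookup` is a hypothetical stand-in for a call to an inventory PBC.

```typescript
// Simplified, illustrative shapes; a real cart object carries far more fields.
interface LineItem { sku: string; quantity: number }

interface ExtensionInput {
  action: "Create" | "Update";
  resource: { typeId: string; id: string; obj: { lineItems: LineItem[] } };
}

type ExtensionResponse =
  | { responseType: "UpdateRequest"; actions: object[] }
  | { responseType: "FailedValidation"; errors: { code: string; message: string }[] };

// Hypothetical inventory lookup; replace with a call to your inventory PBC.
type StockLookup = (sku: string) => number;

// Rejects the cart change when any line item exceeds the available stock,
// otherwise lets the request through without additional update actions.
function validateCart(input: ExtensionInput, availableStock: StockLookup): ExtensionResponse {
  const errors = input.resource.obj.lineItems
    .filter((item) => item.quantity > availableStock(item.sku))
    .map((item) => ({
      code: "InvalidInput",
      message: `Only ${availableStock(item.sku)} unit(s) of ${item.sku} available`,
    }));

  if (errors.length > 0) {
    return { responseType: "FailedValidation", errors };
  }
  return { responseType: "UpdateRequest", actions: [] };
}
```

Keeping the decision logic in a pure function like this makes it easy to unit test, and keeps the Lambda handler itself a thin wrapper that must answer within the extension's time limit.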

While API synchronization extensions might be a requirement in your design (e.g., for authorizing payment during the order placement process), the asynchronous messaging capability commonly offered by PBCs fits well with event-driven architecture. It lets you subscribe to notifications about asynchronous changes in data state. Although an asynchronous notification can also be delivered as an HTTP call to a webhook (without the source system waiting for the result), in practice it usually means configuring a target message queue or topic to which events are published.

It's pertinent to mention that certain PBCs offer more extensive integration capabilities, while others might be limited to specific cloud or middleware products.

For example, commercetools subscriptions provide deployment-ready integrations for the major cloud providers, such as AWS, Google Cloud Platform (GCP), and Microsoft Azure Cloud, as well as a choice of cloud middleware services to pick as a destination. For AWS, these could be Amazon Simple Queue Service (Amazon SQS), Amazon Simple Notification Service (Amazon SNS), or Amazon EventBridge.

However, the selection of appropriate middleware technology raises a valid concern, contingent on the specific use case at hand. It’s important to recognize that distinct subscriptions with varying destinations can be established for each resource type (e.g., products, orders, etc.). Depending on the specific business flow you're implementing, you can set up a First-In-First-Out (FIFO) Amazon SQS or Amazon SNS configuration to achieve out-of-the-box exactly-once semantics. Alternatively, for idempotent or non-critical functionality, you may opt for at-least-once processing, utilizing standard Amazon SNS and SQS or Amazon EventBridge. The latter option also offers additional capabilities, such as the ability to enrich events before forwarding them for further processing.
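With at-least-once delivery, the same message can arrive more than once, so the consumer has to be idempotent. The sketch below shows one common way to achieve that by remembering processed message IDs. The `ChangeMessage` shape and the in-memory `Set` are illustrative assumptions; a real deployment would keep the IDs in a durable store shared by all function instances.

```typescript
// Illustrative message shape; real platform messages carry more fields.
interface ChangeMessage { id: string; resourceId: string; version: number }

class IdempotentConsumer {
  // In-memory store for the sketch only; use a durable store in production.
  private readonly seen: Set<string>;
  private readonly handle: (msg: ChangeMessage) => void;

  constructor(handle: (msg: ChangeMessage) => void) {
    this.seen = new Set<string>();
    this.handle = handle;
  }

  // Returns true when the message was processed, false when it was a duplicate.
  process(msg: ChangeMessage): boolean {
    if (this.seen.has(msg.id)) {
      return false;
    }
    this.handle(msg);
    // Mark as seen only after the handler succeeded, so a failed message is retried.
    this.seen.add(msg.id);
    return true;
  }
}
```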

In our example, we selected Amazon EventBridge to publish product and inventory changes from the commercetools platform to downstream systems such as a search and recommendations engine (Algolia).

The high-level diagram presented below outlines the key architecture components of a composable solution made up of the integrated PBCs. We will delve further into the details of the diagram, focusing on the PBCs and integration components, in the upcoming discussion.

Migrating your data

Once the overall vision is finalized, the high-level architecture is outlined, and the integration patterns are defined, the next phase entails the migration and assimilation of your data into the commerce platform and other PBCs. Some of the PBCs will act as a source of truth or a system of record for certain datasets, while others may need some derived data elements.

Taking this into consideration, a pivotal decision arises: the choice between executing an initial bulk data loading into these systems or instituting a data synchronization mechanism for the propagation of data from designated system of record PBCs, such as the commerce platform. Opting for the latter approach enables potential reusability of the synchronization process for subsequent data updates. However, it's important to note that not all data from the commerce platform may be required; for instance, a Product Information Management (PIM) PBC could hold a broader range of product data, necessitating targeted uploads.

Mastering data modeling

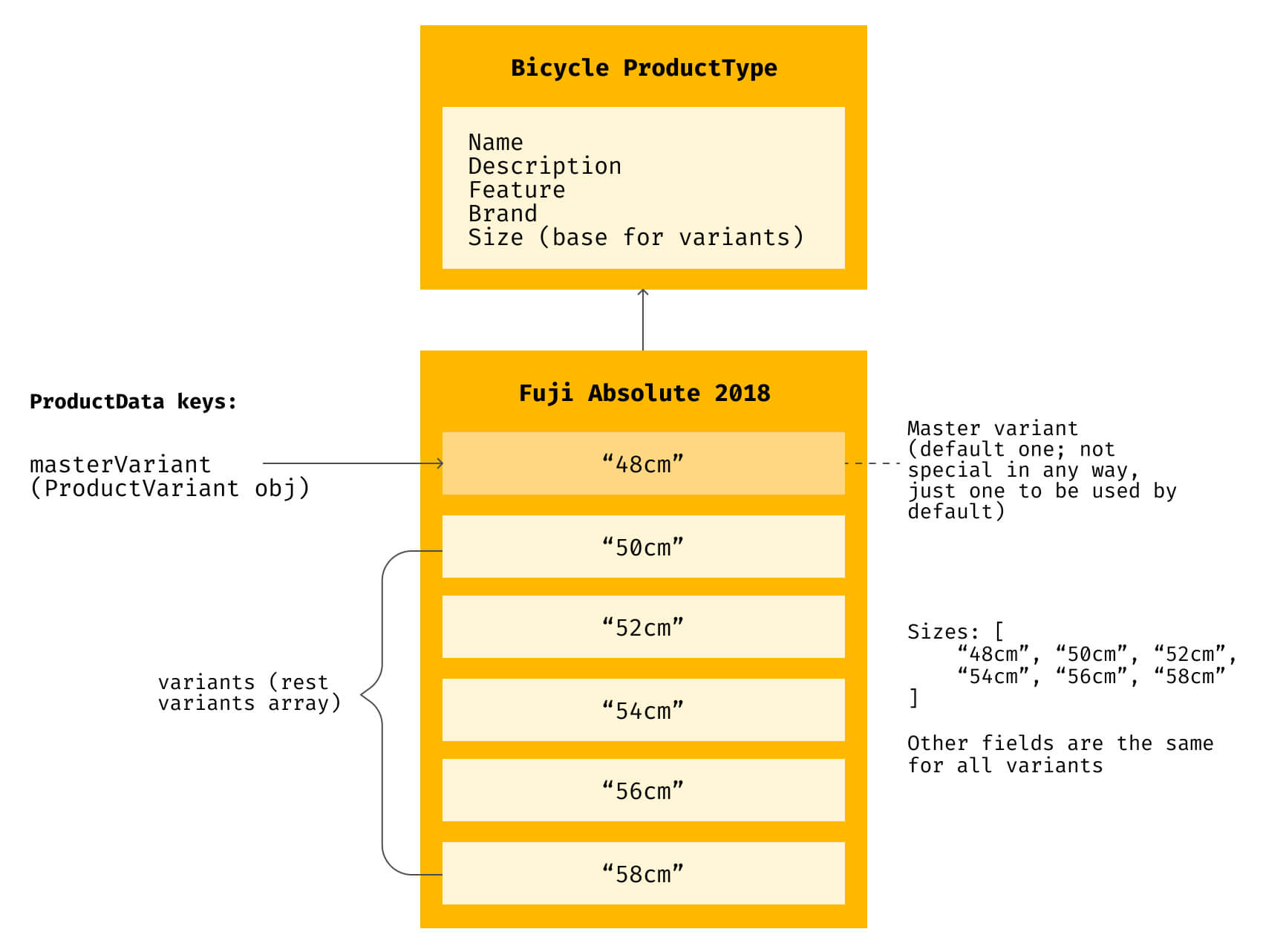

To give you an idea of what it takes to design a data model in a PBC, let's consider the example of commercetools and its extended approach to product modeling. Instead of creating a product as a set of attributes, commercetools considers products as abstract sellable goods with a set of Product Attributes defined by a Product Type. This means that each Product must have at least one Product Variant (which is called the Master Variant). Furthermore, a Product Variant represents a tangible sellable entity, usually linked to a specific stock-keeping unit (SKU). The modeling of inventory is granular, operating on a per-variant basis. Consequently, meticulous planning of your variants in advance is crucial, as this constitutes a pivotal decision.
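The relationship between products, variants, and per-variant inventory can be sketched with a few types. These shapes are deliberately simplified illustrations of the concepts described above, not the actual commercetools resource definitions.

```typescript
// Simplified, illustrative shapes (not the full commercetools resource types).
interface Attribute { name: string; value: string | number | boolean }

interface ProductVariant {
  id: number;
  sku: string;
  attributes: Attribute[];
}

interface Product {
  key: string;
  productType: string;           // reference to the Product Type that defines the attributes
  masterVariant: ProductVariant; // every product has at least this one variant
  variants: ProductVariant[];    // any additional variants
}

interface InventoryEntry { sku: string; quantityOnStock: number }

// Returns the master variant followed by all other variants.
function allVariants(product: Product): ProductVariant[] {
  return [product.masterVariant, ...product.variants];
}

// Inventory is tracked per variant (SKU), so stock is resolved variant by variant.
function stockByVariant(product: Product, inventory: InventoryEntry[]): Map<string, number> {
  const stock = new Map<string, number>();
  for (const variant of allVariants(product)) {
    const entry = inventory.find((e) => e.sku === variant.sku);
    stock.set(variant.sku, entry ? entry.quantityOnStock : 0);
  }
  return stock;
}
```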

It’s also worth highlighting an alternate approach offered by certain platforms—a more adaptable strategy for inventory planning and modeling. This methodology permits the direct combination of attributes to form variants. However, it's vital to grasp that this presents a fundamental trade-off between runtime performance and flexibility. Opting for pre-modeled variants considerably enhances query performance at runtime, in contrast to executing multiple intricate queries for each request. This is akin to envisioning the execution of numerous JOIN operations while querying a database.

Optimizing data import

After the data modeling phase, the focus shifts to transforming and importing your existing dataset. Depending on a multitude of factors, the structure of the dataset can vary and often appears as a blend of heterogeneous fragments scattered across diverse files (in varying formats) or stored within different data repositories (SQL tables, NoSQL documents, and the like). This mandates a customized approach to the data transformation process, tailored to harmonize with the unique context at hand. However, automating this process is imperative to minimize the potential for errors during the initial data load. Given that this process is typically a one-time endeavor, it's pragmatic to deploy a suite of self-developed command-line interface (CLI) tools. For instance, these tools can adeptly facilitate the conversion of data from numerous .csv files into .csv and/or .json output formats, rendering them ready for seamless upload to the commercetools platform.
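As a rough illustration of what such a CLI transformation step might do, the sketch below merges two CSV inputs into JSON-ready records keyed by SKU. The column names (`sku`, `name`, `price`) and the `ProductRecord` shape are assumptions made for this example, and the parser is intentionally naive: it does not handle quoted fields or embedded commas, for which a dedicated CSV library is the better choice.

```typescript
// Naive CSV parser for the sketch: no quoted fields, no embedded commas.
function parseCsv(text: string): Record<string, string>[] {
  const [headerLine, ...dataLines] = text.split(/\r?\n/).filter((line) => line.trim() !== "");
  if (headerLine === undefined) {
    return [];
  }
  const header = headerLine.split(",").map((name) => name.trim());
  return dataLines.map((line) => {
    const cells = line.split(",");
    const row: Record<string, string> = {};
    header.forEach((name, i) => {
      row[name] = (cells[i] ?? "").trim();
    });
    return row;
  });
}

// Hypothetical output shape, ready to be serialized with JSON.stringify.
interface ProductRecord { sku: string; name: string; priceCents: number | null }

// Joins product rows with price rows on the "sku" column.
function mergeBySku(productsCsv: string, pricesCsv: string): ProductRecord[] {
  const prices = new Map<string, number>();
  for (const row of parseCsv(pricesCsv)) {
    prices.set(row["sku"] ?? "", Math.round(Number(row["price"]) * 100));
  }
  return parseCsv(productsCsv).map((row) => {
    const sku = row["sku"] ?? "";
    const price = prices.get(sku);
    return { sku, name: row["name"] ?? "", priceCents: price === undefined ? null : price };
  });
}
```

Rows without a matching price keep `priceCents: null`, so gaps in the source data stay visible instead of being silently defaulted.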

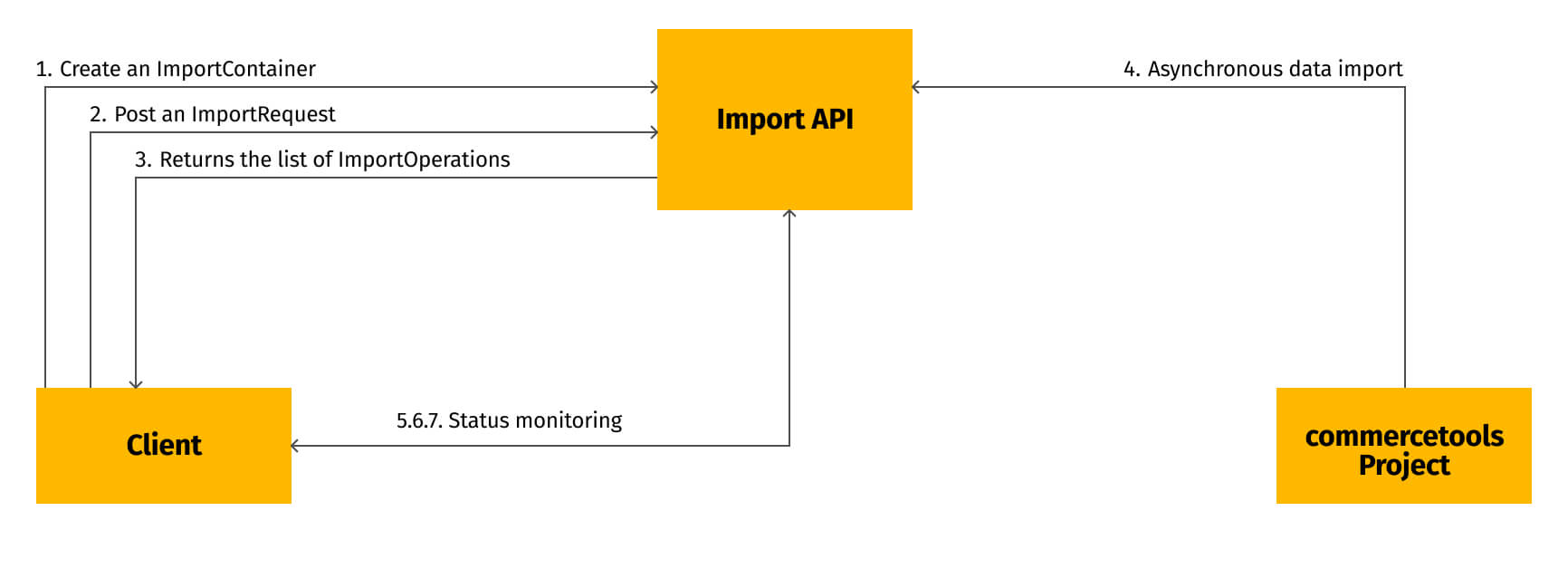

commercetools offers an asynchronous Import API capable of importing datasets of any size. The platform also provides the ImpEx (Import/Export) Tool with a Web UI that provides a list of different commands to perform with the main commercetools entities (product types, categories, products, inventories, etc). Alternatively, you have the option to interact directly with the Import API. For enhanced convenience, software development kits (SDKs) are accessible in multiple programming languages (such as TypeScript), streamlining the integration process.

The high-level steps to import the data resources include:

A clear advantage of the Import API lies in its asynchronous nature, allowing substantial volumes of data to be processed in the background.
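A typical flow with an asynchronous import API is to create a container, submit resources in batches, and then check the status of the resulting operations. The sketch below covers only the batching part. The batch size of 20 reflects the per-request limit of the Import API as we understand it and should be verified against the current documentation; `SubmitBatch` is a hypothetical transport function standing in for the SDK or HTTP call.

```typescript
// Assumed per-request limit; verify against the current Import API documentation.
const MAX_RESOURCES_PER_REQUEST = 20;

// Splits a list into consecutive batches of at most `size` items.
function chunk<T>(items: T[], size: number): T[][] {
  if (size <= 0) {
    throw new Error("size must be positive");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Hypothetical transport: sends one batch to an import container and
// resolves with the number of operations the platform accepted.
type SubmitBatch<T> = (containerKey: string, resources: T[]) => Promise<number>;

// Submits all resources batch by batch and returns the total accepted count.
async function importAll<T>(
  containerKey: string,
  resources: T[],
  submit: SubmitBatch<T>
): Promise<number> {
  let accepted = 0;
  for (const batch of chunk(resources, MAX_RESOURCES_PER_REQUEST)) {
    accepted += await submit(containerKey, batch);
  }
  return accepted;
}
```

Because the import itself runs in the background, an accepted batch is not yet an imported one; the final status still has to be checked afterwards.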

Data accuracy: Effective validation and synchronization techniques

Following the initial data import, two crucial aspects require attention:

- Validating the import process; and

- Maintaining data synchronization with the source.

The validation includes error analysis and an extra process or a tool to compare the source dataset with the result we obtained in commercetools. This includes proper information and error logging, accompanied by statistical data for in-depth analysis and predefined or "on-error" values. Consider a scenario where you've introduced a required CustomField in commercetools, but the corresponding data is absent in your source dataset for certain products. Alternatively, envision a situation where a complex data loading tool interfaces with remote services that experience a few seconds of unresponsiveness. A custom validation tool could carry out a thorough examination of the entire new dataset against the source. While sampling a portion of the data for validation is an option, conducting a comprehensive check is undeniably safer, unless dealing with relatively straightforward cases involving small catalogs. Simultaneously, a "sampling" approach could be advantageous when data synchronization is consistently maintained.
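A custom validation tool of this kind can be fairly small. The following sketch compares a flattened source dataset against the imported one and reports missing products and mismatched attributes. The `FlatProduct` shape is an assumption for illustration; in practice you would first flatten both the source records and the exported commercetools products into a comparable form.

```typescript
// Hypothetical flattened shape used for comparison on both sides.
interface FlatProduct { sku: string; attributes: Record<string, string> }

interface Mismatch { sku: string; attribute: string; expected: string; actual: string | undefined }

interface ValidationReport { checked: number; missing: string[]; mismatched: Mismatch[] }

// Compares every source product against the imported dataset (a full check, not a sample).
function validateImport(source: FlatProduct[], imported: FlatProduct[]): ValidationReport {
  const importedBySku = new Map<string, FlatProduct>();
  for (const product of imported) {
    importedBySku.set(product.sku, product);
  }

  const report: ValidationReport = { checked: source.length, missing: [], mismatched: [] };
  for (const expected of source) {
    const actual = importedBySku.get(expected.sku);
    if (actual === undefined) {
      report.missing.push(expected.sku);
      continue;
    }
    for (const name of Object.keys(expected.attributes)) {
      const expectedValue = expected.attributes[name] ?? "";
      const actualValue = actual.attributes[name];
      if (actualValue !== expectedValue) {
        report.mismatched.push({ sku: expected.sku, attribute: name, expected: expectedValue, actual: actualValue });
      }
    }
  }
  return report;
}
```

The counts in the report double as the statistical data mentioned above: a non-empty `missing` or `mismatched` list points directly at the products to re-import.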

Keeping data in sync with the source hinges on whether you intend to keep supporting the source system, even if only during a transitional phase, or whether commercetools should immediately become the source of truth. In the former scenario, plan how you will obtain data updates from the existing (source) system. For example, if it already supports event-driven flows or can be extended to do so, the best approach is to develop functions that listen for updates and synchronize them with commercetools in near real-time through entity APIs, such as the Product API. If that's not the case, you can set up a periodic delta export (including only updated products and attributes) that pushes data to commercetools via the entity APIs, or via the Import API to sync the updated attributes in bulk. Note: With both APIs, the entire product information isn't necessary for updates; only the attributes that have changed need to be provided.

Adding a new PBC to your solution

Whether you’re using an existing composable commerce solution or building a net new one, the need to integrate new PBCs can always arise—and this is where the beauty of composability lies. Embracing these new products offers various adoption pathways: direct connectors may exist between your selected PBCs, while in other cases, you might need to independently implement synchronization mechanisms to harmonize data across diverse systems.

As an illustrative example, we can consider a search engine. While commercetools provides a Search API, its capabilities might be relatively basic and could lack the desired level of search optimization and advanced features you need. You might find it necessary to incorporate advanced search rules, integrate a recommendations engine, leverage AI solutions, and more, to enhance your search capabilities. This is the juncture where selecting a specific PBC for search becomes crucial, and here Algolia, as our example search tool, comes into play. Algolia is an AI-powered search and analytics engine that enables your marketplace to quickly and efficiently present relevant results to your customers.

When integrating Algolia into your solution to activate its core functionalities, the process typically comprises several fundamental components:

The first step should involve importing your product data into Algolia. In general, the process is similar to the commercetools data import. However, when you start mapping your commercetools models to Algolia records, a pertinent question arises: should you treat the entire product (along with all its variants) as a single Algolia record, or create one record per variant/SKU? As discussed earlier, your commercetools data modeling choices influence your approach here as well.

Beyond the straightforward technical limitations associated with Algolia records (capped at 100KB per document), there's a pivotal decision to be made regarding whether you wish to enable "cross-variant" searchability for products. This decision hinges on factors like variant descriptions, colors, and other attributes. If you opt for a "per variant" strategy, it paves the way for a streamlined association process by storing either the variant's ID or the product ID within the record, creating a direct link to the corresponding product in the commercetools dataset.
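A "per variant" mapping could look roughly like the sketch below, which emits one search record per variant and stores both the product ID and the variant ID for linking back to commercetools. The `CatalogProduct` and `Variant` shapes are simplified assumptions, and using the SKU as `objectID` is one possible choice rather than a requirement.

```typescript
// Simplified, illustrative catalog shapes.
interface Variant { id: number; sku: string; attributes: Record<string, string> }
interface CatalogProduct { id: string; name: string; variants: Variant[] }

// One search record per variant; objectID is the unique record identifier.
interface SearchRecord {
  objectID: string;
  productId: string;
  variantId: number;
  name: string;
  [attribute: string]: string | number;
}

function toVariantRecords(product: CatalogProduct): SearchRecord[] {
  return product.variants.map((variant) => {
    const record: SearchRecord = {
      objectID: variant.sku,   // assumption: SKUs are unique across the catalog
      productId: product.id,   // link back to the product in commercetools
      variantId: variant.id,
      name: product.name,
    };
    // Copy variant attributes without overwriting the reserved fields above.
    for (const key of Object.keys(variant.attributes)) {
      if (!(key in record)) {
        record[key] = variant.attributes[key] ?? "";
      }
    }
    return record;
  });
}
```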

To upload your data from commercetools to Algolia, you need to export objects (e.g., in JSON format) and transform them into Algolia records. To simplify the orchestration of the product data model transformation, Grid Dynamics has developed custom tools and a reference implementation, which are available as part of our Composable Commerce Starter Kit.

However, if your goal is to enable result sorting based on different attributes, the story does not end here. For performance reasons, Algolia doesn’t support sorting parameters during searches, so it requires separate pre-sorted replica indexes. Achieving this entails a two-step process: creating a replica index and creating a sort-by attribute in a newly created replica index.
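The two steps can be expressed as two settings objects: one that registers the replicas on the primary index, and one that puts the sort attribute at the top of each replica's ranking. The sketch below only builds these objects; the index names and the `SortOption` type are illustrative assumptions, and the settings would then be applied through the Algolia client's set-settings call or the dashboard.

```typescript
// Algolia's default ranking criteria, which follow the sort attribute in a replica.
const DEFAULT_RANKING = ["typo", "geo", "words", "filters", "proximity", "attribute", "exact", "custom"];

interface SortOption { attribute: string; direction: "asc" | "desc" }

// Hypothetical naming convention for replica indexes.
function replicaName(primaryIndex: string, sort: SortOption): string {
  return `${primaryIndex}_${sort.attribute}_${sort.direction}`;
}

// Step 1: settings for the primary index, declaring its replicas.
function primarySettings(primaryIndex: string, sorts: SortOption[]): { replicas: string[] } {
  return { replicas: sorts.map((sort) => replicaName(primaryIndex, sort)) };
}

// Step 2: settings for one replica, sorting by the attribute before all other criteria.
function replicaSettings(sort: SortOption): { ranking: string[] } {
  return { ranking: [`${sort.direction}(${sort.attribute})`, ...DEFAULT_RANKING] };
}
```

For example, sorting a `products` index by ascending price would yield a replica named `products_price_asc` whose ranking starts with `asc(price)`; the storefront then queries that replica whenever the customer selects the "price: low to high" option.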

Enabling query suggestions entails the creation of a distinct index separate from your primary index. The setup is as simple as selecting the Query Suggestions tab in the Algolia dashboard, clicking on New Query Suggestions Index, and choosing the source index.

Algolia creates query suggestion indexes based on the analytics of the source index data to identify the most frequently searched terms. If you don’t have analytics data yet, you can use external analytics or faceted data to pre-populate the index. Keep in mind that generating suggestions through full-text search isn't feasible, and to ensure relevance, the index should be reconstructed at least once a day to assimilate new analytics data.

Data synchronization between commercetools and Algolia, which covers events such as product creation and removal, requires creating a subscription via the commercetools API. The Composable Commerce Starter Kit includes a Terraform module that facilitates declarative data synchronization through subscriptions. This mechanism establishes rules for subscribing solely to specific message types, like data change events and publication. Cloud-native middleware then transforms the data and transmits it to Algolia. By monitoring these messages and taking states such as the publication status into account, you can keep Algolia's data up to date. For example, commercetools's own search functionality exposes stock availability through an isOnStock boolean attribute, and you can maintain the same or a similar attribute in your Algolia records.
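To illustrate the idea, the sketch below shows what a subscription definition and the downstream message handling might look like. The draft is written as a plain object rather than Terraform, its field names follow the commercetools Subscriptions API as we understand it, and the key, region, and account ID are placeholders. The `SearchAction` type and the mapping function are hypothetical helpers for this example.

```typescript
// Illustrative subscription draft; key, region, and accountId are placeholders.
const subscriptionDraft = {
  key: "product-sync-to-search",
  destination: { type: "EventBridge", region: "us-east-1", accountId: "123456789012" },
  messages: [
    { resourceTypeId: "product", types: ["ProductPublished", "ProductUnpublished", "ProductDeleted"] },
  ],
};

// Minimal view of an incoming product message.
interface ProductMessage { type: string; resource: { typeId: string; id: string } }

// Hypothetical description of what to do in the search index.
type SearchAction =
  | { kind: "upsert"; productId: string }
  | { kind: "delete"; productId: string }
  | { kind: "ignore" };

// Maps a platform message to an index operation based on the publication status.
function toSearchAction(message: ProductMessage): SearchAction {
  switch (message.type) {
    case "ProductPublished":
      return { kind: "upsert", productId: message.resource.id };
    case "ProductUnpublished":
    case "ProductDeleted":
      return { kind: "delete", productId: message.resource.id };
    default:
      return { kind: "ignore" };
  }
}
```

Subscribing only to the message types you actually handle keeps the event volume, and therefore the number of function invocations, to a minimum.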

Connecting the storefront to your platform

Once the integration of backend flows is in place, the focus pivots to establishing a connection with the storefront.

Given our serverless orientation, Amazon API Gateway in tandem with AWS Lambda can serve as an intermediary layer, commonly referred to as the Backend For Frontend (BFF), for client UI operations. This arrangement effectively manages HTTP requests from end customers to the APIs of the PBCs. Leveraging the BFF concept yields several notable advantages, particularly pertinent to our potential use cases:

However, it's important to acknowledge that adopting this approach may introduce a slight increase in latency and add complexity to the solution. Therefore, consider your use cases and decide where it is beneficial and where it merely adds unnecessary complexity. For instance, in our example solution, we don't use the BFF for CMS calls from the frontend. On the other hand, with a serverless approach, both API Gateway and Lambda scale automatically with the request volume to support the needs of your application (refer to "Amazon API Gateway quotas and important notes" for scalability information). By combining these two services, you can create a tier that enables you to write only the code that matters to your application. This frees you from grappling with the many facets of implementing a multi-tiered architecture, which often include architecting for high availability, creating client SDKs, managing servers and operating systems, ensuring scalability, and implementing client authentication (AuthN) and authorization (AuthZ) mechanisms.

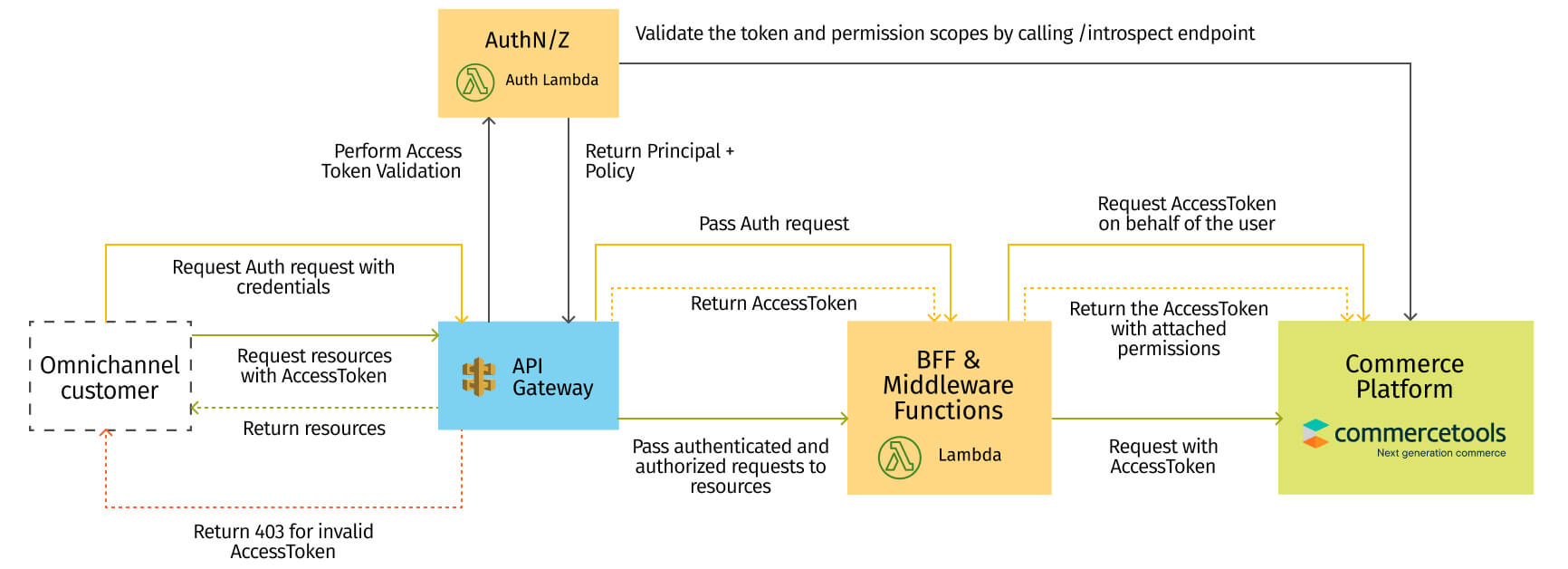

For the specific aspect of AuthN/AuthZ, configuring an API Gateway Authorizer becomes essential. This involves employing a simple Lambda function, which provides a conduit to connect with any Identity Provider. In our context, we harness commercetools token management for this purpose. This configuration ensures a robust and streamlined client authentication and authorization mechanism.
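A token-based Lambda authorizer can be sketched as follows. The event and policy shapes follow the API Gateway Lambda authorizer contract; `Introspect` is a hypothetical function standing in for a call to the identity provider (here, a token introspection request to commercetools), injected so the logic stays testable.

```typescript
interface TokenAuthorizerEvent { type: "TOKEN"; authorizationToken: string; methodArn: string }

interface AuthorizerResult {
  principalId: string;
  policyDocument: {
    Version: "2012-10-17";
    Statement: { Action: "execute-api:Invoke"; Effect: "Allow" | "Deny"; Resource: string }[];
  };
}

// Hypothetical identity provider call; resolves with whether the token is active.
type Introspect = (token: string) => Promise<{ active: boolean }>;

// Builds the IAM policy that API Gateway expects from an authorizer.
function buildPolicy(principalId: string, effect: "Allow" | "Deny", resource: string): AuthorizerResult {
  return {
    principalId,
    policyDocument: {
      Version: "2012-10-17",
      Statement: [{ Action: "execute-api:Invoke", Effect: effect, Resource: resource }],
    },
  };
}

// Extracts the token from an "Authorization: Bearer <token>" header value.
function extractBearer(header: string): string | null {
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match?.[1] ?? null;
}

// Denies the request unless the identity provider confirms the token is active.
async function authorize(event: TokenAuthorizerEvent, introspect: Introspect): Promise<AuthorizerResult> {
  const token = extractBearer(event.authorizationToken);
  if (token === null) {
    return buildPolicy("anonymous", "Deny", event.methodArn);
  }
  const result = await introspect(token);
  return buildPolicy("storefront-client", result.active ? "Allow" : "Deny", event.methodArn);
}
```

The principal IDs used here are placeholders; in practice you would derive the principal from the introspection result so that downstream functions know which customer or client is calling.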

Consequently, we obtain API endpoints that seamlessly integrate with various PBCs (such as Inventory Management, CMS, and Search). These endpoints act as the gateway for our storefront and other distribution channels.

Implementing complex business flows

The complexity of composable commerce solutions invariably increases as your business grows. Success begets a need for enhanced features to elevate user experiences and drive further business growth. As you progress, the need to establish more sophisticated interactions between PBCs becomes evident, potentially leading to a diversification of infrastructure components.

Order and inventory management solutions

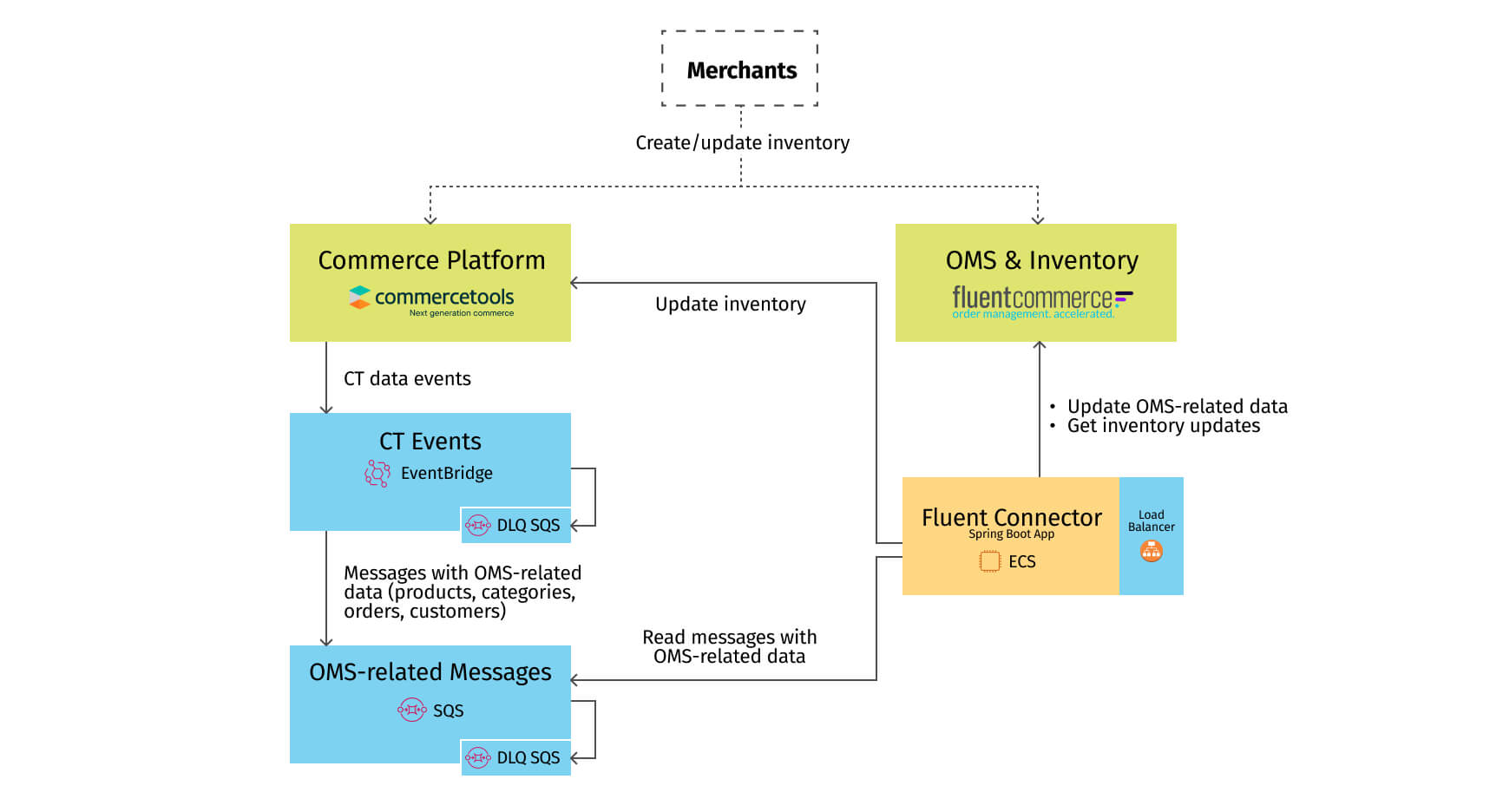

Let's explore the integration of the Order Management System (OMS) and Inventory Management platform, specifically Fluent Commerce, into our solution. Our objective is twofold: first, to establish a data flow for orders from commercetools to Fluent Commerce; and second, to enable inventory updates to flow from Fluent Commerce back into commercetools. While an event-driven approach appears ideal for achieving seamless data flow in both directions, it might not always be feasible, especially when dealing with high-frequency updates. Real-time synchronization may encounter challenges in such scenarios.

Depending on the capabilities of a given PBC and your business requirements, the solution could vary from sending filtered and pre-processed data in an event-driven manner to using polling to receive the changes in a batch. However, it’s important to recognize that any delay will only impact the display of stock information on product details pages (PDP) or within the shopping basket. At the same time, the checkout process maintains synchronous real-time interaction with the Inventory system, thereby preventing oversells in the checkout flow.
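When inventory changes arrive at a high frequency, one way to reduce the load is to collect them over a short interval and forward only the latest value per SKU. The sketch below shows that coalescing step; the `InventoryUpdate` shape is an assumption for illustration and does not reflect any particular PBC's event format.

```typescript
// Illustrative update shape; timestamp is in milliseconds since the epoch.
interface InventoryUpdate { sku: string; quantity: number; timestamp: number }

// Keeps only the most recent update per SKU from a batch of polled or buffered changes.
function coalesce(updates: InventoryUpdate[]): InventoryUpdate[] {
  const latest = new Map<string, InventoryUpdate>();
  for (const update of updates) {
    const current = latest.get(update.sku);
    if (current === undefined || update.timestamp >= current.timestamp) {
      latest.set(update.sku, update);
    }
  }
  return Array.from(latest.values());
}
```

The trade-off is the one described above: stock shown on the PDP or in the basket may lag by up to one batching interval, while checkout still verifies availability synchronously.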

Once again, the strength of the composable ecosystem lies in the availability of pre-built components for deployment and streamlined integration processes. Fluent Commerce provides a connector tailored for commercetools integration. This Java Spring Boot application can be deployed within Amazon Elastic Container Service (Amazon ECS), exposing the requisite endpoints via an Application Load Balancer (ALB). The connector manages the connections to both PBCs, facilitating communication between the systems. Being highly configurable and extensible, the connector significantly accelerates the integration, shifting the primary focus from API integration to configuration management.

Seamless payments

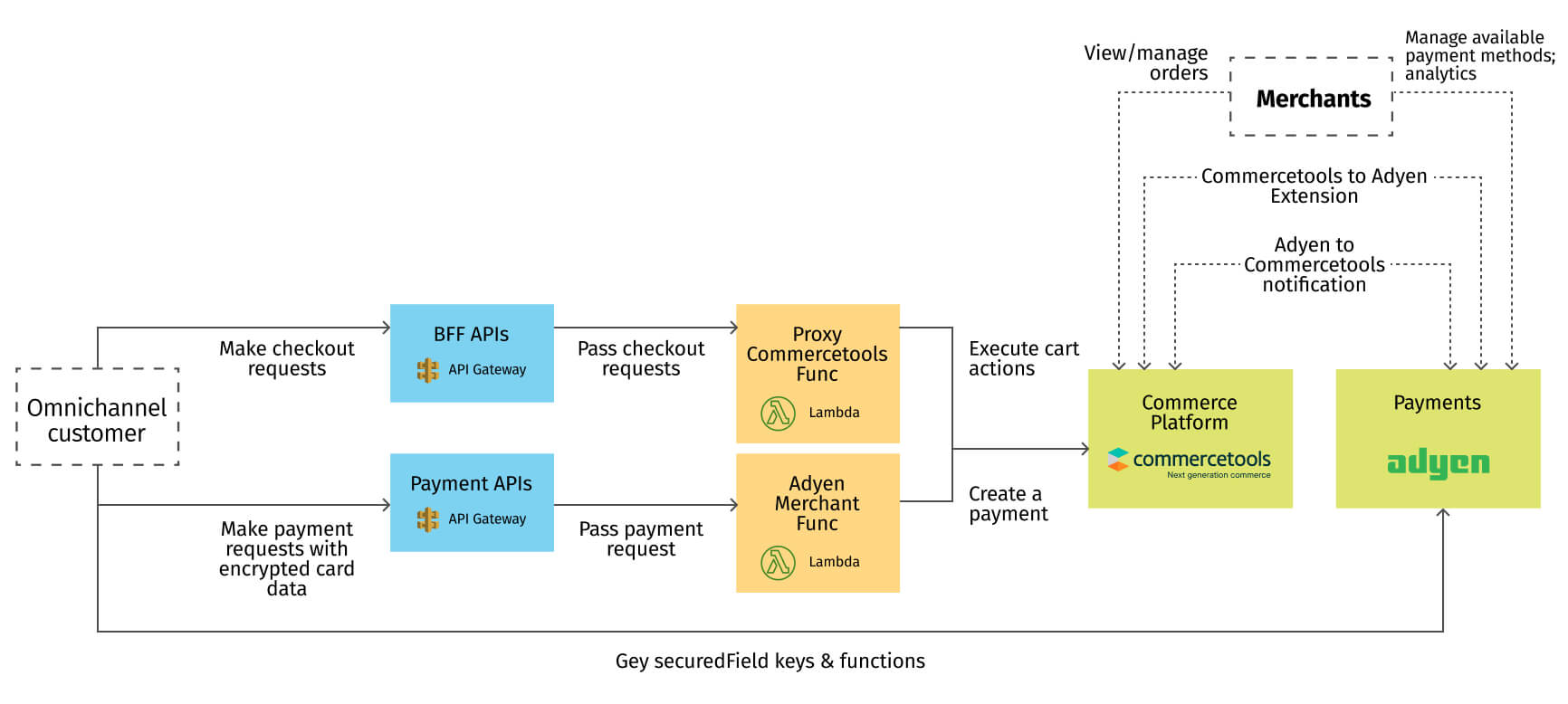

The implementation of the order-capturing process is greatly simplified thanks to payment providers, but understanding the process is critical for the business and warrants acknowledgment. To make our example tangible, we’ll focus on a specific provider, Adyen, to illustrate how your composable solution can continue to evolve.

Once the order transitions to the 'ready for payment' state, you need to collect payment information from the customer. This can encompass card details or other supported payment methods (such as PayPal, Apple Pay, etc.). To avoid dealing with raw payment method details, Adyen provides customizable UI components that are directly incorporated into your storefront to capture the card information from the customer, encrypt it, and send it directly to Adyen services. This approach ensures that the original card data is neither parsed nor stored by your own systems; instead, it is transmitted directly to the Adyen platform from the frontend application. Keeping raw card data out of your systems greatly simplifies Payment Card Industry Data Security Standard (PCI DSS) compliance.

What remains is the receipt and storage of payment tokens within the commercetools system. To facilitate this, an integration component serves as a two-way communication conduit between the systems. This includes an API extension that seamlessly integrates payment processing into your checkout workflow within commercetools. Additionally, notifications are employed to relay payment status updates from Adyen to commercetools, maintaining a synchronized ecosystem.
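The notification half of this integration essentially translates payment events into payment state in the commerce platform. The sketch below maps a simplified Adyen notification item to a commercetools-style transaction. The event codes and transaction types are ones we believe both platforms use, but the mapping itself is an illustrative assumption, not the logic of the official integration component.

```typescript
// Simplified view of one Adyen notification item (success arrives as a string).
interface AdyenNotificationItem {
  eventCode: string;
  success: "true" | "false";
  pspReference: string;
  merchantReference: string;
}

type TransactionType = "Authorization" | "Charge" | "Refund" | "CancelAuthorization";

interface PaymentTransaction {
  type: TransactionType;
  state: "Success" | "Failure";
  interactionId: string; // the payment provider's reference for this transaction
}

// Resolves the transaction type for an event code; unknown codes yield null.
function transactionTypeFor(eventCode: string): TransactionType | null {
  switch (eventCode) {
    case "AUTHORISATION": return "Authorization";
    case "CAPTURE": return "Charge";
    case "REFUND": return "Refund";
    case "CANCELLATION": return "CancelAuthorization";
    default: return null;
  }
}

// Returns the transaction to record, or null when the event is not handled.
function toTransaction(item: AdyenNotificationItem): PaymentTransaction | null {
  const type = transactionTypeFor(item.eventCode);
  if (type === null) {
    return null;
  }
  return {
    type,
    state: item.success === "true" ? "Success" : "Failure",
    interactionId: item.pspReference,
  };
}
```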

Prepared for action? Putting all the pieces together

At the end of the composable commerce implementation journey, our diligent efforts have brought all the pieces together to compose a working mechanism. By interconnecting the commerce platform with a range of additional PBCs, we've fortified the solution's capabilities and enhanced user experiences, all while preserving a commendable level of adaptability. The cloud-native architecture offers the flexibility to select optimal components based on load profiles, and the event-driven design ensures efficient load handling.

Composability serves as the backbone of the e-commerce solution evolution, granting us the agility to extend existing PBCs with new integrations, introduce novel PBCs, or replace existing ones, driven by both business and technological considerations. However, it's essential to recognize that all of this relies heavily on a well-managed infrastructure, which forms the cornerstone of composable architecture. A substantial portion of the custom work involves integration at the cloud component level: creating, managing, and establishing connectivity, and controlling access to and from PBCs. Robust infrastructure automation, therefore, emerges as a pivotal asset for continued growth, evolution, and reliable support.

If you're seeking expert guidance and assistance in implementing and optimizing your composable commerce solution, consider partnering with Grid Dynamics. With our proven expertise in cloud-native architecture, event-driven design, and seamless integrations, we can help you harness the full potential of your solution. Grid Dynamics also provides valuable insights into selecting the right PBCs, ensuring that your solution is tailored to meet your unique business needs.

Reach out to us to explore how we can elevate your business through the power of composable architecture. #PrepareToGrow