Creating training and test data sets and preparing the data

In the previous post we discussed how we created an appropriate data dictionary. In this post we’ll address the process of building the training data sets and preparing the data for analysis.

What are good and bad training and test data sets?

The training process aims to reveal hidden dependencies and patterns in the data that will be analyzed. Therefore, the training and test data sets must be representative samples of the target data. They have to be broad enough to cover all cases; e.g., if the data is a time series of sales, the training and test data sets ought to represent a reasonable business cycle that covers peak and off-peak times, weekends, etc.

Coming back to natural language processing, of which sentiment analysis is a subset, it’s important to cover as many words as possible that express sentiment and represent the lexicon used in the target texts. When we speak about supervised learning for natural language processing, the labeling has to be performed manually to guarantee correct classification of the training and test data. To be representative, the training dataset must contain enough entries for each value of the target variable, in reasonably balanced proportions. In our case, these are texts perceived as either positive or negative. There is no universal guidance for the size of the training data set; it depends on the number of variables used for training, and the set must contain several examples of every typical observation. In our case the variables are words from the dictionary. Every one of these words must be represented in several texts of the corresponding sentiment. Texts may contain several dictionary words of opposite sentiment, so we need several such examples to understand which word bears the stronger sentiment. Because of all that, the number of texts in our training dataset must be at least 10 times the number of words in our dictionary.

Exploring training and test data sets used in our sentiment analysis

As a training data set we use the IMDB Large Movie Review Dataset. The dataset consists of two subsets, training and test data, located in separate subfolders (train and test). Each subset contains 25,000 reviews: 12,500 positive and 12,500 negative. Every review is stored in a separate file in the subfolder that corresponds to its sentiment (for example, train/neg or train/pos). The size of a review varies from 100 bytes to 14 kilobytes, with a median size of about 850 bytes.
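The folder layout described above makes loading straightforward. Here is a minimal sketch, assuming the archive has been unpacked locally and that each review is a UTF-8 `.txt` file; the function name `load_reviews` and the path in the usage comment are our own illustrative choices, not part of the dataset.

```python
from pathlib import Path


def load_reviews(subset_dir):
    """Return a list of (text, label) pairs from one subset folder.

    subset_dir is expected to contain "pos" and "neg" subfolders.
    label is 1 for reviews under pos/ and 0 for reviews under neg/.
    """
    samples = []
    for folder_name, label in (("pos", 1), ("neg", 0)):
        # sorted() keeps the order stable between runs
        for path in sorted(Path(subset_dir, folder_name).glob("*.txt")):
            samples.append((path.read_text(encoding="utf-8"), label))
    return samples


# Usage (hypothetical local path):
# train = load_reviews("aclImdb/train")
```

Keeping the label next to the text from the very start makes it harder to shuffle one without the other later on.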

Here is a typical viewers’ movie review:

“Bromwell High is a cartoon comedy. It ran at the same time as some other programs about school life, such as "Teachers." My 35 years in the teaching profession lead me to believe that Bromwell High's satire is much closer to reality than is "Teachers." The scramble to survive financially, the insightful students who can see right through their pathetic teachers' pomp, the pettiness of the whole situation, all remind me of the schools I knew and their students. When I saw the episode in which a student repeatedly tried to burn down the school, I immediately recalled ......... at .......... High. A classic line: INSPECTOR: I'm here to sack one of your teachers. STUDENT: Welcome to Bromwell High. I expect that many adults of my age think that Bromwell High is far fetched. What a pity that it isn't!”

This dataset was used in a research paper titled Learning Word Vectors for Sentiment Analysis published by Stanford University, which also includes additional data related to the research.

We will use the 25,000 reviews from the “train” subfolder. As you can see from the movie review above, many of them don’t look like tweets. Tweets tend to be much shorter than typical IMDB reviews, emojis are used in tweets much more often than in IMDB reviews, and tweets often follow less rigorous grammar and spelling. All of that may reduce the effectiveness of a model trained on this set when it is applied to its actual target, namely tweets. On the positive side, the texts are about the same topic. Ideally, we would need an appropriate library of tweets. Unfortunately, there is no available labeled tweet library of a reasonable size (tens of thousands of tweets) pertaining to movie reviews. Therefore, IMDB became a reasonable tradeoff between the level of resources needed to get the data and the quality of the data set.

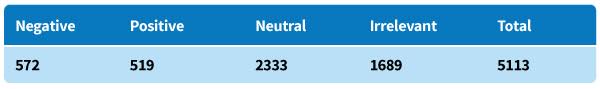

As a test dataset we will use 5000 manually-labeled tweets from Niek Sanders. It contains tweets labeled as positive, negative, neutral or irrelevant.

Many tweets labeled as irrelevant are in languages other than English, so we removed them from the test dataset. We also combined positive- and neutral-labeled tweets into one category: our task is specifically to identify negative tweets, so we must be able to separate negative from neutral tweets, but separating neutral from positive is beyond our scope. As a result, the two classes are heavily skewed: 572 negative and 2852 positive samples. When we evaluate the modeling results, it will be crucial to understand which part of the ROC curve matters more in our case.
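The relabeling can be sketched in a few lines. This is only an illustration: it assumes the tweets are already available as (text, label) pairs with the four label strings named above, and it assumes that every “irrelevant” tweet is dropped. The function name `to_binary_labels` is hypothetical.

```python
from collections import Counter


def to_binary_labels(rows):
    """Collapse four-way labels into negative vs. positive.

    rows: iterable of (text, label) pairs, where label is one of
    "positive", "negative", "neutral" or "irrelevant".
    """
    result = []
    for text, label in rows:
        if label == "irrelevant":
            continue  # assumption: all irrelevant tweets are removed
        # neutral is merged into the positive class
        binary = "negative" if label == "negative" else "positive"
        result.append((text, binary))
    return result


def class_counts(rows):
    """Count how many samples fall into each class."""
    return Counter(label for _, label in rows)
```

With the counts quoted above, negative tweets make up 572 of 3424 samples, or roughly 17% of the test set.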

Introducing user segmentation based on “follower numbers”

Twitter users have widely varying social power based on their reputations and number of followers. Reputation is a latent characteristic, while number of followers is observable, therefore especially important. Any Twitter user’s tweet or retweet produces light or heavy social impact directly linked to the number of the tweeter’s followers as that is the number of people who will see the user’s post.

It’s interesting to see who makes the main buzz around a movie. What segments should we explore? The “followers number” can range from zero to hundreds of thousands. About 50% of Twitter users have 300-600 followers.

In 2012 an average Twitter user had 208 followers. Unfortunately there is no more recent information, but we can guess that now, in 2016, an average Twitter user has somewhat more followers than that, though not dramatically more. So we will call users who have fewer than 500 followers regular users.

Another ~45% have up to 5000 followers. These users are likely not professional tweeters, but their influence is much greater than that of regular users, since their tweets or retweets will be seen by more than 500, possibly even thousands of people. Let’s call them opinion leaders.

Apparently, about 5% of Twitter users have more than 5000 followers. In many cases these Twitter accounts belong to businesses such as magazines, cinemas and movie studios. They are professionals. We will explore which of these groups (regular users, opinion leaders or professionals) makes the greatest buzz about a movie.
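The three segments translate directly into a small helper. This is a sketch using the thresholds from the text; the function name and the segment strings are our own.

```python
def follower_segment(followers):
    """Map a follower count to one of the three user segments."""
    if followers < 500:
        return "regular user"
    if followers <= 5000:
        return "opinion leader"
    return "professional"


# Example with made-up follower counts
for count in (120, 500, 5000, 25000):
    print(count, follower_segment(count))
```

The boundary values follow the wording above: exactly 500 followers already counts as an opinion leader, and exactly 5000 still does.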

Drawing conclusions on the data structures we’ll use to train, tune, and evaluate our model

Now it’s time to do the last task in the scope of the data understanding phase: drafting the solution. We will build the simplest but still effective model. By “effective,” I mean the model should work significantly better than random guessing. In other words the percentage of correctly classified tweets with the suggested solution ought to be at least 10% higher than random guessing.

Here is a list of the basic principles we will follow while building the solution:

- The feature vector will consist of dictionary words that are present in a tweet

- Multiple dictionaries will be used for the feature extraction process so that we can select the best one

- Dictionaries and tweets will be normalized via stemming to increase the rate of matched words

- Two classification models will be evaluated: a lexicon-based model we call “naive Plus/Minus” and logistic regression. The better-performing one will be selected for implementation at scale

- Three classes of Twitter users based on “number of followers” will be tracked to capture sentiment distribution by the “power” of their influence.

Data preparation

In order to train and later apply the models to the Twitter data it is first important to clean and prepare the data. This is the “dirty work” that occupies large swaths of data scientists’ time. In our specific case, models accept binary matrices indicating whether or not a word was present in the text as an input. So we have to transform every tweet into a vector of binary values. This operation is called “feature extraction.” This is accomplished in a number of steps.

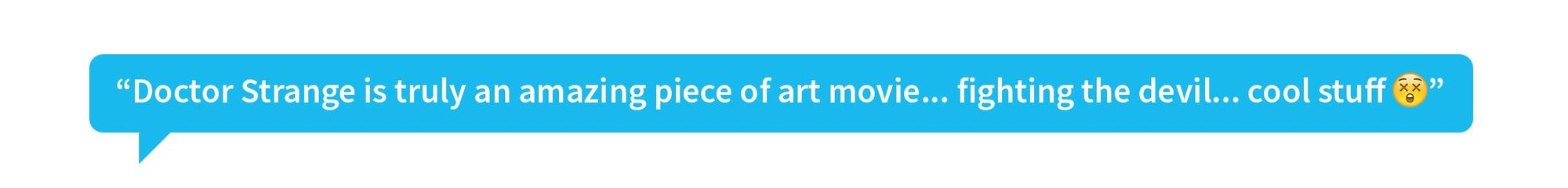

First the text is extracted (assume we got a text filtered by the keyword “Doctor Strange”):

Then several normalization steps are performed: removing capitalization, punctuation, the keywords used for tweet filtering, and Unicode emoticons. Ideally we should preserve emoticons by replacing them with simple “synonyms” like “:)” or “:(”.
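A minimal sketch of these normalization steps might look as follows. It is not the exact code behind this post: the function name `normalize` and the sample tweet are made up for illustration, and dropping every non-ASCII character is a deliberately crude way to remove Unicode emoticons (it also removes accented letters).

```python
import string

# Translation table that turns every ASCII punctuation mark into a space
PUNCT_TO_SPACE = str.maketrans(string.punctuation, " " * len(string.punctuation))


def normalize(text, filter_keywords=()):
    """Lowercase, drop filter keywords, emoticons and punctuation."""
    text = text.lower()
    for keyword in filter_keywords:
        # keywords are matched after lowercasing, so case does not matter
        text = text.replace(keyword.lower(), " ")
    # crude removal of Unicode emoticons: keep ASCII characters only
    text = text.encode("ascii", "ignore").decode("ascii")
    text = text.translate(PUNCT_TO_SPACE)
    # collapse the runs of spaces left behind by the previous steps
    return " ".join(text.split())


# Made-up tweet, filtered by the keyword "Doctor Strange"
print(normalize("Doctor Strange was COOL stuff!!! \U0001F600", ["Doctor Strange"]))
```

Preserving emoticons, as suggested above, would mean mapping them to ASCII “synonyms” before the non-ASCII characters are dropped.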

Leveraging synonyms, hyponyms, and hypernyms is a much more comprehensive natural language processing technique than we’re using here; indeed, this topic deserves its own dedicated article, so we’re consciously omitting it here for the sake of simplification.

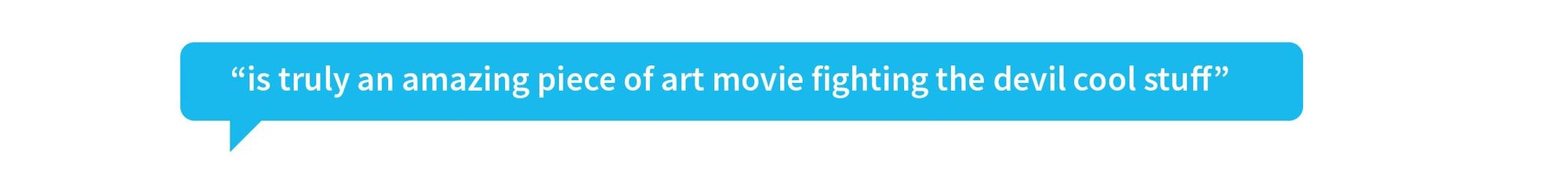

Now the text looks like this:

Next the text is tokenized, i.e. split into tokens. Pay attention to the “cool stuff” substring. While performing tokenization we should recall that some dictionaries contain n-grams like “cool stuff” or “does not work.” The tokenization process should not lose those tokens. The described cleansing and tokenization may be accomplished by many means, and the most comprehensive solution deserves a separate article. We do it here in a very straightforward way: unnecessary symbols are removed with a regular expression, and the text is split on spaces, taking exceptions (like “cool stuff”) into account.
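One simple way to keep dictionary n-grams intact is to check, at every position, whether a known phrase starts there before falling back to a single word. The sketch below assumes the text has already been normalized; the function name `tokenize` and the greedy longest-phrase-first strategy are our illustrative choices.

```python
def tokenize(text, ngrams=()):
    """Split text on whitespace, keeping known n-grams as single tokens."""
    words = text.split()
    # try longer phrases first so that the longest match wins
    phrases = sorted((tuple(p.split()) for p in ngrams), key=len, reverse=True)
    tokens = []
    i = 0
    while i < len(words):
        for phrase in phrases:
            n = len(phrase)
            if n > 1 and tuple(words[i:i + n]) == phrase:
                tokens.append(" ".join(phrase))
                i += n
                break
        else:
            # no phrase starts at this position: emit the single word
            tokens.append(words[i])
            i += 1
    return tokens


print(tokenize("was cool stuff", ["cool stuff", "does not work"]))
```

This scan compares every phrase at every position, which is fine for short tweets and small dictionaries; a larger dictionary would call for an index keyed on the first word.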

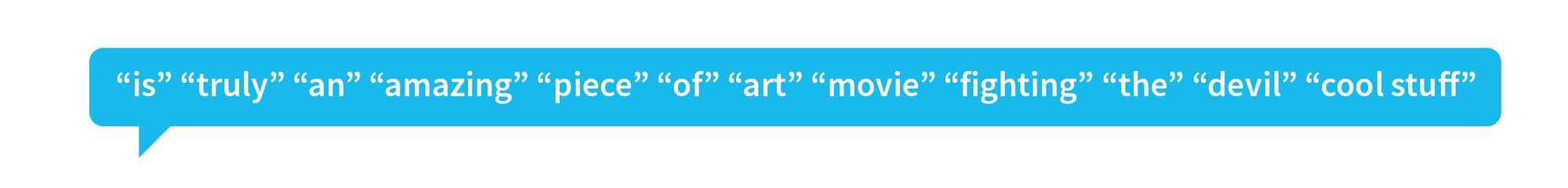

Now we are almost ready to search for word matches in texts and dictionary entries. The last processing step we are going to perform is stemming, the reduction of the various forms of a word to their so-called stem. In fact, this transformation is a type of dimensionality reduction that solves two challenges: it increases the matching rate and reduces the computational complexity of the machine learning algorithm. Stemming has to be performed on both the text and the dictionary. After this step the text from the tweet looks like this:

Once the words are stemmed, it is possible to search for dictionary words within the text.
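To make the last two steps concrete, here is a sketch that stems both the dictionary and the tokens and then builds the binary feature vector. Please note that `toy_stem` is a deliberately naive suffix stripper used only to keep the example self-contained; it is a stand-in for a real stemmer (for example, a Porter stemmer), not the one used for this post. All function names and sample words are made up.

```python
SUFFIXES = ("ing", "ly", "ed", "es", "s")


def toy_stem(word):
    """Naive stand-in for a real stemmer: strip one common suffix."""
    for suffix in SUFFIXES:
        # keep at least three characters so short words stay intact
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def stem_token(token):
    """Stem every word of a token; n-grams are stemmed word by word."""
    return " ".join(toy_stem(word) for word in token.split())


def build_vocabulary(dictionary_words):
    """Stem the dictionary entries and remove the resulting duplicates."""
    return sorted({stem_token(word) for word in dictionary_words})


def feature_vector(tokens, vocabulary):
    """Return 1 for each vocabulary entry present in the tokens, else 0."""
    present = {stem_token(token) for token in tokens}
    return [1 if entry in present else 0 for entry in vocabulary]


vocabulary = build_vocabulary(["amazing", "amazed", "boring", "cool stuff"])
print(vocabulary)
print(feature_vector(["was", "cool stuff", "amazing"], vocabulary))
```

Note how “amazing” and “amazed” collapse into a single vocabulary entry after stemming, which is exactly why the dictionary needs deduplication.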

To summarize, the simplified data preparation process is:

- selection of the dictionary to be used

- stemming dictionary entries, deduplication of the dictionary

- receiving tweets

- extraction of text from tweets

- removing capitalization, punctuation, keywords used for filtering, and Unicode symbols

- tokenization

- text stemming

- filtering tokens, matching dictionary entries

Now we have all data necessary and properly prepared to perform the modeling or, in other words, build a classifier that will tell whether a tweet is negative or positive. How to do that — that is, how to evaluate classification performance and determine which approach appears to be better — is what we will discuss in the next blog post.

References

Anton Ovchinnikov, Joseph Gorelik, Victoria Livschitz