Using CRISP-DM methodology for Twitter sentiment analysis

As we explained in our introduction to this series of posts, we are exploring the methods data scientists use to extract hidden patterns and meanings from big data in order to build better applications and services and make better business decisions. We will perform a simple sentiment analysis of a real public tweet stream and explain the data science process along the way.

In this blog post, we discuss the general-purpose scientific process behind data science and how it was applied to our project.

Definitions of general data science terms

A good place to start is a quick review of the glossary of basic terms from the data science domain we’ll be using throughout the project:

- Model: the predictive “black box” function that finds patterns in data.

- Dictionary: a list of words and expressions the model will encounter, with pre-assigned “value labels” that reflect the degree of positivity or negativity of that word or expression. We used existing open-source dictionaries where positivity or negativity was already established by the creators of that dictionary.

- Lexicography: the craft of compiling, editing, and creating dictionaries; the field of study analyzing the relationship between the various vocabularies of a language.

- Training and testing data sets: the data samples used to train the model and see how well it predicts.

- Labeled and unlabeled datasets: data for the analysis consists of two parts. The first part is the observations: a set of characteristics that can be measured or tracked directly. The second part is not observable; it consists of valuable characteristics that can't be measured directly and must instead be derived from the observations. A dataset containing both the observations and these non-observable characteristics is a labeled dataset, while a dataset containing only the raw observations is an unlabeled one.

- Supervised Learning: a process of inferring a function to adequately predict a specific result based on the labeled training dataset.

- Unsupervised Learning: a type of machine learning algorithm used to find patterns within an unlabeled dataset.
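To make the labeled/unlabeled and training/testing distinctions concrete, here is a minimal sketch. The tweets and their 1/0 sentiment labels are hypothetical toy data, and the helper `train_test_split` is our own illustration (in this project the training data comes from the IMDB reviews and the evaluation data from Niek Sanders' labeled tweets).

```python
import random

# Labeled data: each observation (the tweet text) is paired with a label,
# the non-observable characteristic (1 = positive, 0 = negative). Hypothetical examples.
labeled = [
    ("loved every minute of it", 1),
    ("what a waste of two hours", 0),
    ("brilliant cast and a great script", 1),
    ("boring and far too long", 0),
    ("the ending was fantastic", 1),
    ("worst sequel ever", 0),
]

# Unlabeled data: raw observations only, as they arrive from a live stream.
unlabeled = ["just saw it, not sure what to think"]


def train_test_split(rows, test_fraction=0.33, seed=42):
    """Shuffle a copy of the labeled rows and split them into (train, test)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


train, test = train_test_split(labeled)
print(len(train), len(test))  # 4 2
```

The model learns only from `train`; `test` is held back so that its labels can be compared with the model's predictions. The `unlabeled` tweets are what the finished model will score in production.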

In the context of our project, these terms take on the following specific meanings:

- Model: we will assume that every tweet attributed to movie X can be measured on a numeric scale between positive and negative sentiment. The scale depends on the model employed: it will be either a probability in the interval (0, 1) or a sentiment score in (-∞, +∞). Our models will produce that measure for every tweet.

- Dictionaries: existing open-source dictionaries were used where positivity or negativity was already established by the creators of that dictionary. The responsibility of our team was not to build its own dictionary from scratch, but to identify the most relevant of the freely available dictionaries published by multiple contributors, test their performance on our datasets, and choose which ones to include in our dictionary. In the end, three dictionaries will be used: the root analysis dictionary by WhileTrue, the MPQA Subjectivity Lexicon, and Jeffrey Breen's positive and negative unigram dictionary.

- Lexicography: our model will employ an external dictionary that assigns positive and negative scores to the words it contains. Such a dictionary-based model represents the simplest possible approach to classifying tweets.

- Training and testing data sets: we can use the Twitter streaming API to get the tweets attributed to the movies we’re tracking. We’ll use it to run the models in production and track sentiments in real time. The large IMDB movie review dataset provided by Stanford University will be used for training. For model evaluation purposes, we will use the dataset of manually labeled tweets from Niek Sanders.

- Supervised and unsupervised learning: we will employ a supervised learning model, logistic regression, to compute the probability of a tweet bearing a positive or negative sentiment.
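The dictionary-based approach described above can be sketched in a few lines: look up each word of a tweet in the dictionary and add up the scores. The `LEXICON` below is a tiny hypothetical stand-in for the real dictionaries (WhileTrue, MPQA, Breen), and summing scores is one simple, common scoring rule, not necessarily the exact rule our model uses.

```python
import re

# A tiny, hypothetical stand-in for an open-source sentiment dictionary.
# Positive values mark positive words, negative values mark negative ones.
LEXICON = {
    "love": 2, "loved": 2, "great": 2, "brilliant": 3, "fun": 1,
    "boring": -2, "awful": -3, "waste": -2, "worst": -3,
}


def tokenize(text):
    """Lowercase the tweet and split it into alphabetic tokens (apostrophes kept)."""
    return re.findall(r"[a-z']+", text.lower())


def lexicon_score(text, lexicon=LEXICON):
    """Sum the dictionary values of all known words; unknown words count as 0.

    The result is unbounded: it grows with the number of sentiment words.
    """
    return sum(lexicon.get(token, 0) for token in tokenize(text))


print(lexicon_score("Loved it! Brilliant cast, never boring"))  # 2 + 3 - 2 = 3
print(lexicon_score("What a waste. Worst movie of the year"))   # -2 - 3 = -5
```

The first example also shows the limits of this simplest approach: "never boring" is praise, but word-by-word lookup ignores the negation and counts "boring" as negative.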

The data scientist’s process: CRISP-DM methodology

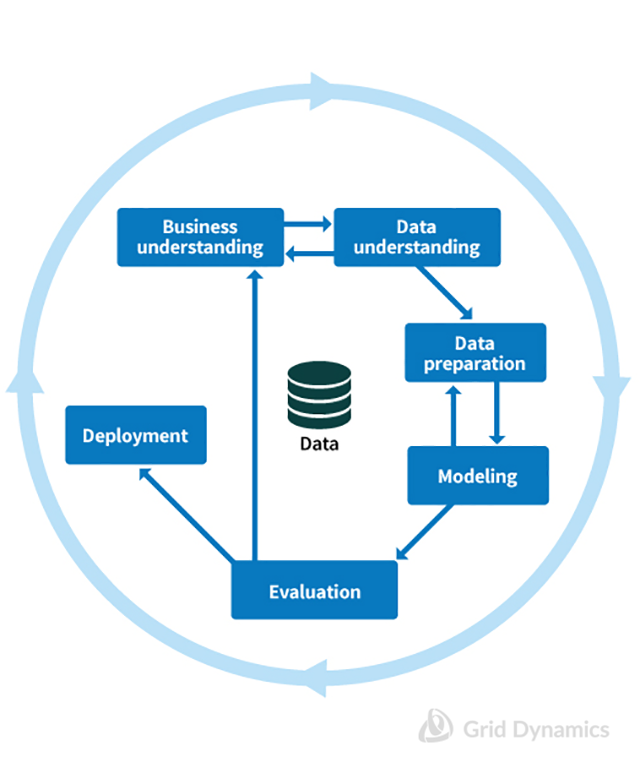

Before the trendy phrase “data science” captured the imagination of the industry, there was a mature discipline — data mining — that concerned itself with similar questions. Since every data mining project followed the same patterns, good folks created a Cross Industry Standard Process for Data Mining or simply CRISP-DM. It includes six phases: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, and Deployment. The diagram below shows the sequence of phases and reflects the iterative nature of the design process:

This approach is simple and pragmatic — and useful in explaining the scientific process behind the work of data scientists. This is the process we use in our project as well. Below, we’ll discuss how our understanding of social sentiment analysis has progressed from business understanding to data understanding to data preparation to data modeling, evaluation of the models, and, ultimately, a scalable implementation of machine learning.

Specifically, this process is applied in the following way:

Business understanding

To formulate a precise business problem that the predictive model will solve, it is critical to understand the data sources and the structure of the data itself, and to formulate a set of hypotheses about which business “questions” can be answered predictively. This is an iterative process: initial analysis of the data leads to an initial formulation of the business problem and a hypothesis of how that problem can be solved. As the project matures, more is known about the data and the model’s predictive power, and a more refined understanding of the business applications of predictive modeling emerges.

In our case, the team will begin by looking at samples of movie-related tweets to formulate a set of assumptions about which models can be used and which business questions these models can answer. For example, the decision to segment Twitter users into “power groups” by follower count, so that the business can distinguish the sentiments of highly influential individuals (over 5,000 followers) from those of non-influential ones (fewer than 500), came fairly late in the project. It altered the business understanding, and thus the way the models were used.
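The “power group” segmentation can be sketched as a simple mapping from follower count to group, followed by a per-group average of sentiment. The two thresholds come from the text; the name "intermediate" for users with 500 to 5,000 followers, the helper names, and the sample (followers, probability) pairs are our own hypothetical choices.

```python
from collections import defaultdict


def power_group(followers_count):
    """Map a follower count to a power group.

    Thresholds follow the text (over 5,000 = influential, fewer than 500 =
    non-influential); the "intermediate" label for the band in between is an assumption.
    """
    if followers_count > 5000:
        return "influential"
    if followers_count < 500:
        return "non-influential"
    return "intermediate"


def mean_sentiment_by_group(tweets):
    """Average sentiment per power group; tweets are (followers_count, score) pairs."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for followers, score in tweets:
        group = power_group(followers)
        totals[group] += score
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}


# Hypothetical scored tweets: (author's follower count, positive-sentiment probability).
sample = [(12000, 0.9), (8000, 0.7), (120, 0.2), (300, 0.4), (1500, 0.5)]
print(mean_sentiment_by_group(sample))
```

Because the segmentation only regroups model outputs, adding it late in the project changes how results are reported without requiring the model itself to be retrained.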

Data understanding

Study samples of the data to answer critical questions about its structure; sources; volumes; frequency; which fields are critical, promising, or irrelevant; security; accessibility; and so on. In our case, this means initially studying the structure of Twitter streams to learn enough to begin building data dictionaries and training datasets. As the project progresses, understanding of the data grows, and so do the quality of the training datasets and the performance of the model.
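One early data-understanding step is simply counting which fields actually appear in sampled tweets. The sketch below assumes newline-delimited JSON, as the Twitter streaming API delivers; the two records are hypothetical and heavily trimmed (real payloads carry many more fields), and `field_coverage` is an illustrative helper of our own.

```python
import json
from collections import Counter

# Two hypothetical, heavily trimmed tweets in the JSON shape of the
# Twitter streaming API (one JSON object per line).
SAMPLE_STREAM = [
    '{"id": 1, "text": "Loved the new movie!", "lang": "en", "user": {"followers_count": 820}}',
    '{"id": 2, "text": "Meh.", "lang": "en", "user": {"followers_count": 40}, "place": null}',
]


def field_coverage(lines):
    """Count how often each top-level field is present and non-null across raw tweets."""
    counts = Counter()
    for line in lines:
        tweet = json.loads(line)
        counts.update(key for key, value in tweet.items() if value is not None)
    return counts


coverage = field_coverage(SAMPLE_STREAM)
for field, n in sorted(coverage.items()):
    print(f"{field}: {n}/{len(SAMPLE_STREAM)}")
```

Run over a large sample, a report like this shows which fields (such as the author's follower count) are reliably available and which (such as location) are usually empty, which feeds directly into the business hypotheses.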

For starters, we will assume that every tweet attributed to movie X can be measured on a numeric scale between positive and negative sentiment. The scale depends on the model employed: it will be either a probability in the interval (0, 1) or a sentiment score in (-∞, +∞). Our models will produce that measure for every tweet.
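The two scales are related. Logistic regression internally computes an unbounded linear score (the log-odds) and converts it to a probability with the logistic (sigmoid) function, p = 1 / (1 + e^(-s)). The sketch below shows that conversion; whether our dictionary-based scores are ever mapped onto probabilities this way is not something the project specifies, so treat it as an illustration of how the two ranges correspond.

```python
import math


def score_to_probability(score):
    """Logistic function: maps a score in (-inf, +inf) to a probability in (0, 1).

    Split into two branches so math.exp never overflows for large |score|.
    """
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    z = math.exp(score)
    return z / (1.0 + z)


print(score_to_probability(0.0))            # 0.5: neutral
print(round(score_to_probability(2.0), 3))  # 0.881: leaning positive
```

A score of 0 maps to 0.5, positive scores map above 0.5, and negative scores map below it. For extreme scores the floating-point result can round to exactly 0.0 or 1.0 even though the mathematical range is open.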

Data preparation

Data is never as “clean” and error-free as we would like it to be. Common offenses include:

- Nominal values don’t match: for example, a “gender” field may be encoded as male/female, man/woman, or true/false.

- Missing or incorrect values

- Weak correlation with the “target variable”: for example, using a tweeter’s precise age when an age range (18-25, 26-35, etc.) would be more useful

- Issues with unification of data from multiple sources: when aggregating data from multiple sources into a single dataset, getting all the “useful” characteristics in while leaving all obviously useless ones out is hard — but must be done

The goal of the data preparation stage is to identify data quality issues and write code that automatically detects and corrects them.
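Here is a small sketch of such correction code for the issues listed above: inconsistent nominal values are mapped to one canonical form, missing values are flagged, and a precise age is replaced by a range. The field names, mapping table, and age bins are hypothetical examples, not part of our pipeline.

```python
# Hypothetical mapping of inconsistent nominal encodings onto one canonical value.
# "true"/"false" is deliberately left out: without documentation we can't tell
# which value means which, so such records are flagged rather than guessed.
GENDER_CANONICAL = {
    "male": "male", "man": "male", "m": "male",
    "female": "female", "woman": "female", "f": "female",
}

# Hypothetical age ranges replacing precise ages (inclusive bounds).
AGE_BINS = [(18, 25, "18-25"), (26, 35, "26-35"), (36, 50, "36-50")]


def normalize_gender(raw):
    """Return a canonical gender label, or None if missing or unrecognized."""
    if raw is None:
        return None
    return GENDER_CANONICAL.get(str(raw).strip().lower())


def age_range(age):
    """Replace a precise age with a coarser range label; None if missing or out of range."""
    if age is None:
        return None
    for low, high, label in AGE_BINS:
        if low <= age <= high:
            return label
    return None


def clean_record(record):
    """Apply the fixes to one record and list the fields that could not be repaired."""
    cleaned = {
        "gender": normalize_gender(record.get("gender")),
        "age_range": age_range(record.get("age")),
    }
    cleaned["issues"] = [field for field, value in cleaned.items() if value is None]
    return cleaned


print(clean_record({"gender": "Woman", "age": 29}))
print(clean_record({"gender": "true", "age": None}))
```

Flagging unrepairable fields in an `issues` list, instead of silently dropping records, lets the team measure how common each problem is before deciding how to handle it.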

Modeling

The process typically begins by choosing one or more models from a standard library of mathematical models that are deemed the most “promising” based on the hypotheses generated in the previous stages. Subsequently, systematic training and tuning gradually improve the models’ performance. This tends to be an iterative process. Based on the performance of the models, the team will likely need to go back and revisit the assumptions from the data understanding or even business understanding stages. After some number of iterations, the models start producing increasingly promising results until they eventually become good enough to be production-worthy.
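For the model our project selected, logistic regression, a from-scratch training loop looks roughly like the sketch below: bag-of-words features, stochastic gradient descent on the log-loss, and `epochs` and `learning_rate` as the knobs adjusted during tuning. This is a toy illustration on hypothetical data, not our production code; in practice a library implementation such as scikit-learn's `LogisticRegression` would normally be used.

```python
import math
import re
from collections import Counter


def features(text):
    """Bag-of-words features: a count of each lowercase word in the text."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def linear_score(weights, bias, x):
    """Log-odds of the positive class; words never seen in training have weight 0."""
    return bias + sum(weights[word] * count for word, count in x.items())


def train_logistic_regression(examples, epochs=200, learning_rate=0.1):
    """Fit weights with stochastic gradient descent on the log-loss.

    examples: (text, label) pairs, label 1 = positive, 0 = negative.
    """
    weights = Counter()  # a Counter returns 0 for unseen words
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = features(text)
            # Gradient of the log-loss with respect to the linear score is (p - y).
            error = sigmoid(linear_score(weights, bias, x)) - label
            bias -= learning_rate * error
            for word, count in x.items():
                weights[word] -= learning_rate * error * count
    return weights, bias


def predict_proba(weights, bias, text):
    """Probability in (0, 1) that the text carries positive sentiment."""
    return sigmoid(linear_score(weights, bias, features(text)))


# Hypothetical toy training data; the project itself trains on the IMDB reviews.
TRAIN = [
    ("loved it, great fun", 1),
    ("great acting and I loved the story", 1),
    ("boring and awful", 0),
    ("awful plot, boring dialogue", 0),
]
weights, bias = train_logistic_regression(TRAIN)
print(round(predict_proba(weights, bias, "great fun"), 2))
print(round(predict_proba(weights, bias, "so boring"), 2))
```

Each tuning iteration changes a knob (or the feature extraction), retrains, and re-evaluates on held-out data, which is the loop the paragraph above describes.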

Evaluation

Evaluation of the models’ performance and assessment of whether it is adequate to meet the business objectives. For example, a model that delivers 70% accuracy in predictions might be “good enough” for some applications but not for others. If the accuracy is deemed insufficient or there are still promising hypotheses on how the accuracy might be improved to deliver better business results, the team goes back to the data preparation / modeling stages. Once a model and its results have been validated and deemed acceptable by the business team, the data science team is ready to go further and productize the solution.
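The accuracy check described above can be sketched as follows: threshold the model's probabilities into positive/negative predictions, compare them with manually assigned labels, and test the result against a business target. The 70% bar comes from the example in the text; the probabilities and labels are hypothetical, standing in for a held-out labeled set such as Niek Sanders' tweets.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the manually assigned labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need equal-length, non-empty prediction and label lists")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def meets_business_bar(probabilities, labels, required_accuracy=0.70, threshold=0.5):
    """Turn probabilities into 0/1 predictions and compare accuracy with a business target."""
    predictions = [1 if p >= threshold else 0 for p in probabilities]
    acc = accuracy(predictions, labels)
    return acc, acc >= required_accuracy


# Hypothetical model outputs and the true labels of ten held-out tweets.
probs = [0.91, 0.35, 0.62, 0.48, 0.80, 0.15, 0.55, 0.30, 0.70, 0.45]
labels = [1, 0, 1, 1, 1, 0, 0, 0, 1, 0]
print(meets_business_bar(probs, labels))  # (0.8, True)
```

The same 80% result passes a 70% bar but would fail a stricter 90% requirement, which is why the acceptance decision belongs to the business team rather than to the metric alone.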

Deployment

Now the job of the data scientists is complete and the software developers’ job begins. In order to implement the model at scale, it needs to change from a data scientist’s tool into production-ready code running on a scalable platform. Developers can then use technologies like Hadoop, Kafka, Spark Streaming, Cassandra, Tableau and others to capture data streams, run thousands of concurrent events through the model, persist all intermediate and final results to survive any failures, and ultimately deliver resulting insights to different business systems that will use them.
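The core logic such a pipeline runs can be illustrated with a single-process, in-memory sketch: consume (movie, timestamp, text) events one at a time, score each tweet, and keep the mean sentiment per movie per hour. In production the event source, state, and storage would be handled by tools like Kafka, Spark Streaming, and Cassandra; this sketch has none of their scalability or fault tolerance. The hourly window, the helper names, the events, and the `toy_score` stand-in for a trained model are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime


def hour_bucket(timestamp):
    """Truncate a timestamp to the start of its hour (the aggregation window)."""
    return timestamp.replace(minute=0, second=0, microsecond=0)


def track_sentiment(events, score):
    """Aggregate mean sentiment per movie per hour from a stream of events.

    events: iterable of (movie, timestamp, text) tuples, consumed one at a time.
    score:  any function mapping tweet text to a number, e.g. a trained model.
    Returns {movie: {hour: mean score}} with hours in chronological order.
    """
    totals = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(lambda: defaultdict(int))
    for movie, timestamp, text in events:
        bucket = hour_bucket(timestamp)
        totals[movie][bucket] += score(text)
        counts[movie][bucket] += 1
    return {
        movie: {hour: totals[movie][hour] / counts[movie][hour] for hour in sorted(hours)}
        for movie, hours in counts.items()
    }


def toy_score(text):
    """Hypothetical stand-in for the real model: 1.0 if the tweet says "good", else 0.0."""
    return 1.0 if "good" in text.lower() else 0.0


EVENTS = [
    ("Movie A", datetime(2015, 6, 1, 20, 5), "So good!"),
    ("Movie A", datetime(2015, 6, 1, 20, 40), "Not for me"),
    ("Movie A", datetime(2015, 6, 1, 21, 10), "Good fun"),
    ("Movie B", datetime(2015, 6, 1, 20, 15), "Good but long"),
]
for movie, hours in track_sentiment(EVENTS, toy_score).items():
    for hour, mean in hours.items():
        print(movie, hour.strftime("%H:%M"), mean)
```

Keeping running totals and counts rather than raw tweets means the state per movie grows with the number of time windows, not the number of tweets, which is the property that lets this kind of aggregation scale out in a streaming system.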

The cycle continues

After the model is up and running, delivering the algorithmic discovery of business insights and application of those insights to marketing, sales and operations, the data science team continues to refine the model, identify more insights, and drive new business applications.

Armed with the CRISP-DM methodology, we are ready to take you step by step through the process of creating a predictive sentiment analytics system for real-time social movie reviews and to tackle the following business problem:

Based on tweets from the English-speaking population of the United States related to selected new movie releases, can we identify patterns in the public’s sentiments towards these movies in real time and track the progression of these sentiments over time?

Our quest begins with the data understanding part of our data science process.

References

- CRISP-DM

- IMDB Large Movie Review Dataset

- Learning Word Vectors for Sentiment Analysis, Stanford University, 2011

- 5K manually labeled tweets from Niek Sanders

- A new ANEW: Evaluation of a word list for sentiment analysis in microblogs, Finn Årup Nielsen

- Dictionary of root-words with sentiment scores

- Jeffrey Breen's positive and negative unigram dictionary

- MPQA Subjectivity Lexicon

- Sentiment analysis approaches overview

Victoria Livschitz, Anton Ovchinnikov, Joseph Gorelik