DevOps stack for In-Stream Processing Service using AWS, Docker, Mesos, Marathon, Ansible and Tonomi

This post is about the approach to the “DevOps” part of our In-Stream Processing blueprint — namely, deploying the platform on a dynamic cloud infrastructure, making the service available to its intended users and supporting it through the continuous lifecycle of development, testing, and roll-out of new features.

Doing “DevOps” right or wrong can bring shine or shame to the project, so it is important to pay close attention to this aspect of the platform architecture and delivery. Let’s begin the discussion of the design, implementation, and maintenance of the infrastructure-related aspects of our blueprint with the selection of the operational technology stack.

Chosen DevOps technology stack

We have analyzed a number of technology choices to use for the reference implementation of the service and chose the following stack:

- Cloud: AWS

- Deployment unit: Docker container

- Container management: Mesos + Marathon

- Bootstrapping Mesos + Marathon on bare cloud infrastructure: Ansible

- Application management and orchestration of Docker containers over Mesos + Marathon: Tonomi

Let’s discuss each technology in some detail and explain our rationale for the choice.

Bare cloud infrastructure: AWS

Since we are designing a service that can process massive amounts of events and scale easily with growing loads, it is obvious that the computing infrastructure should reside in a cloud. Faced with a cloud choice for the reference implementation, we considered a number of popular platforms including AWS, Google Cloud and Azure, and chose AWS as the most mature, most commonly used, best-documented, and most feature-rich cloud service on the market.

It is important to point out that we consider a choice of the specific cloud a matter of convenience, not architectural significance. Moreover, we fully expect each customer to make their own choice of the cloud platform, which may differ from AWS. The blueprint goes to great lengths to make sure a specific cloud can be simply “plugged in,” with the rest of the system working without any modification. This is a bit idealistic, perhaps, since the choice of a particular cloud carries a number of operational considerations. Still, we purposefully avoided relying on any AWS-specific APIs that would lock the solution into a single platform.

In a nutshell, cloud portability is achieved by placing the responsibility for application deployment and management on other layers of the stack — Docker, Mesos, Marathon, Ansible and Tonomi. AWS simply provides the VMs that are used by Mesos to place and manage containers. Any cloud that can provide its VMs to Mesos should work equally well, at least conceptually.

Porting our reference implementation to another cloud would require replacing AWS APIs with the equivalent APIs from a different cloud provider. We will discuss this in detail later on, when we deal with deploying and configuring Mesos on AWS.

Deployment unit: Docker

We had a choice between two approaches for deployment and management of our platform: “classic” VM-based and “modern” container-based. These two approaches can be roughly described as follows:

- “Classic” deployment is where an application is configured to run directly on the hardware or VM. There are many tools on the market that can help support this approach — like Chef, Puppet, Ansible, SaltStack, and other configuration management utilities.

- “Modern” container-based deployment aims to provide one more layer of isolation and independence by packaging applications as containers and deploying the containers on top of hardware or VMs. Docker is the most popular container technology choice.

The advantage of the “classic” approach is its maturity and wide usage in production today. We can rely on many existing operational practices and cookbooks, but it has two principal weaknesses:

- Lack of portability between different cloud platforms. VM management is usually tightly integrated into the core cloud platform, along with image management, user access, security, metering, etc. Launching a VM on a different cloud requires changing all this machinery.

- Difficulty in launching and scaling applications on demand. While clouds make it possible to request yet-another-VM on-demand, actually adding a new VM to a running production application dynamically is anything but trivial. In fact, most companies still rely on manually-processed tickets to request adding capacity to an existing production cluster rather than auto-scaling the existing footprint.

By contrast, containers in general, and Docker specifically, take application portability and on-demand launching and scaling to a different level:

- Docker containers can be deployed on any cloud infrastructure unchanged. A class of middleware products — like Mesos (described below) — takes care of that.

- There is no need to provision new VMs and other parts of the infrastructure in order to spin up more containers. Provisioning a new container on an existing infrastructure takes minutes or even seconds. Most of the time, there is no need for an actual deployment either, as the containers are often pre-loaded with everything required for them to be operational.

For these reasons, containers are quickly becoming the mainstream technology of choice for new application deployment. We decided that the benefits of containers outweigh their challenges, and implemented operational support using Docker and related technologies from the Docker ecosystem.

Container management: Mesos + Marathon

Once we decided to go ahead with Docker, we had to choose how to manage the container fleet at scale. Several options on the market required consideration, the most notable being:

- Docker Swarm

- Kubernetes

- Mesos + Marathon

- AWS ECS/Elastic Beanstalk for AWS cloud

- Google Container Engine for Google cloud

Docker Swarm is a “native” solution that offers portability across a wide range of infrastructure choices, from laptops to production clusters. Unfortunately, as of mid-2016 the technology is still too young and, according to many reports from early adopters, suffers from “childhood issues”. While we decided to pass on this technology for the time being due to its current maturity level, we will follow its progress and reevaluate in the future. Here are some links to discussions of Docker’s “teething pains”:

- Docker 1.12 swarm mode load balancing not consistently working

- Lessons learned from using Docker Swarm mode in production

- Docker Not Ready for Prime Time

- 5 Problems with Docker Swarm

- Containers are unable to communicate on overlay network

- Docker Swarm - Multiplatform Containers Will Not Start in Windows Server 2016

- Swarm unstable if host's address is different from the public IP address

- ElasticSearch Cluster with Docker Swarm 1.12

The last two choices, AWS ECS/Elastic Beanstalk and Google Container Engine, have to be eliminated since they are cloud-specific and we are looking for a portable solution that can run on any cloud.

So, in reality, we are down to two main competitors: Mesos + Marathon and Kubernetes. Both technologies are relatively new, yet reasonable choices. Several differences between the two make each somewhat more suitable for different use cases. Since this is a hotly debated topic in the industry, there is no shortage of opinions. Here are a few quality white papers and articles that describe the differences, if you would like to read more about this topic:

- Mesos and Kubernetes

- A Brief Comparison of Mesos and Kubernetes

- Apache Mesos turns 1.0, but it’s no Kubernetes clone

- Container Orchestration: Docker Swarm vs Kubernetes vs Apache Mesos

- Docker, Kubernetes, and Mesos: Compared.

- Container Orchestration Tools: Compare Kubernetes vs Mesos

We chose Mesos + Marathon because it is more mature, in wider use, and has a good track record regarding production use cases — and also because our team has more experience with Mesos and its popular container fleet management framework, Marathon, than with Kubernetes.

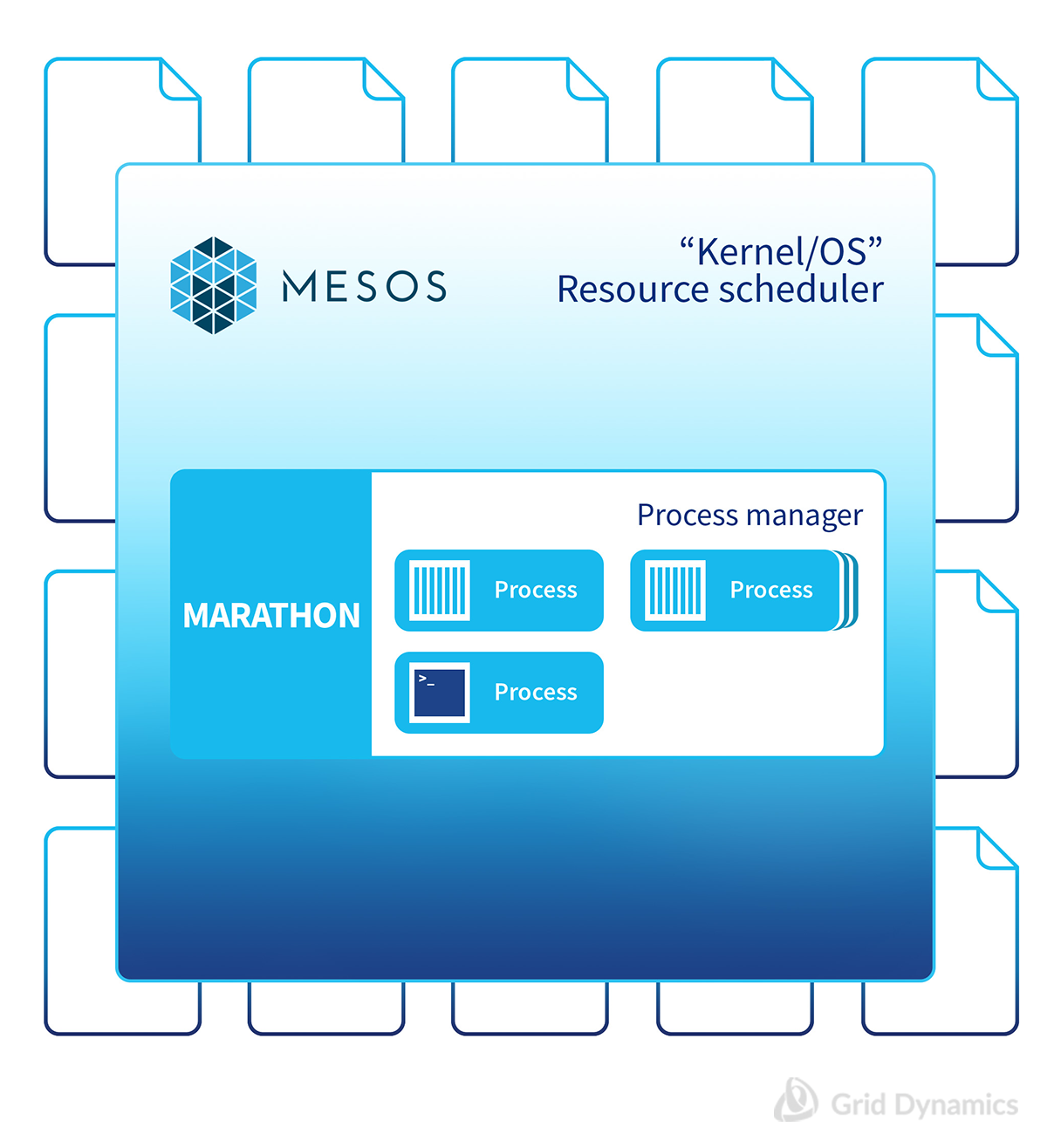

What is Mesos?

Mesos is designed to manage a fleet of individual compute units (hardware, VMs) as a whole. It gives access to their joint resources (CPU, RAM, disk) as if they were one huge VM, and provides resource allocation with load balancing and migration of individual tasks across the entire cluster when needed, at least to a large extent. There are some exceptions: for example, you cannot allocate a single chunk of resources larger than what is available on an individual compute unit. If we continue drawing parallels between the cluster as a whole and a single VM, Mesos is the kernel in charge of resource allocation.
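The single-node limit is easy to see in a toy model. The sketch below is not Mesos's actual offer-based allocator; it is a simple first-fit placement over hypothetical nodes (`vm-1`, `vm-2`), meant only to show why a task larger than any one compute unit cannot be placed even when the cluster as a whole has enough free resources.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """One compute unit (hardware or VM) with its currently free resources."""
    name: str
    cpus: float
    mem_mb: int


def place_task(nodes: List[Node], cpus: float, mem_mb: int) -> Optional[str]:
    """First-fit placement (an illustrative assumption, not Mesos's algorithm).

    Returns the name of the first node that can hold the whole task and
    deducts the task's resources from it, or None if no single node fits.
    """
    for node in nodes:
        if node.cpus >= cpus and node.mem_mb >= mem_mb:
            node.cpus -= cpus
            node.mem_mb -= mem_mb
            return node.name
    return None


# Hypothetical two-VM cluster: 8 CPUs in total, 4 per VM.
cluster = [Node("vm-1", cpus=4, mem_mb=8192), Node("vm-2", cpus=4, mem_mb=8192)]

# A 6-CPU task fits the cluster total but not any single VM.
too_big = place_task(cluster, cpus=6, mem_mb=1024)

# Two 3-CPU tasks need the same total and are placed one per VM.
first = place_task(cluster, cpus=3, mem_mb=1024)
second = place_task(cluster, cpus=3, mem_mb=1024)
```

In practice this means services should be sized so that each container fits comfortably within one VM, and scaled by adding instances rather than by growing a single one.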

What is Marathon?

It’s hard to run modern software using only the capabilities of the OS kernel, so in order to extend its functionality, Mesos has a powerful extension mechanism called frameworks. One of the most commonly used Mesos frameworks is Marathon — which in our analogy will substitute for a process manager. It allows us to easily run and manage Mesos tasks, and one of the supported task types is running a Docker container.
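As a rough illustration of what "running a Docker container as a Marathon task" looks like, here is a minimal sketch that builds an app definition as a Python dictionary and serializes it to JSON. The field names follow the Marathon 1.x-era app definition format as we understand it and may differ in other versions; the app id `/in-stream/redis`, the image tag, and the resource figures are assumptions chosen for the example, not values from our reference implementation.

```python
import json


def docker_app(app_id, image, cpus, mem_mb, instances):
    """Build a Marathon-style app definition that runs a Docker image.

    Field names are assumed from the Marathon 1.x app format; verify them
    against the Marathon version you actually run.
    """
    return {
        "id": app_id,
        "cpus": cpus,
        "mem": mem_mb,
        "instances": instances,
        "container": {
            "type": "DOCKER",
            "docker": {"image": image, "network": "BRIDGE"},
        },
    }


# Hypothetical app id, image and sizing, for illustration only.
app = docker_app("/in-stream/redis", "redis:3.2", cpus=0.5, mem_mb=512, instances=1)
payload = json.dumps(app, indent=2)
```

The resulting `payload` is the kind of document that would be submitted to Marathon to create the application.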

Marathon also provides some very handy features, such as easy scaling and integration with load balancers for running tasks, which we are going to leverage.
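Scaling, for instance, comes down to changing the desired instance count of a running app. The sketch below prepares (but does not send) such a request against Marathon's `/v2/apps` REST endpoint using only the Python standard library. The Marathon address and the app id are hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical Marathon endpoint; replace with the address of your cluster.
MARATHON_URL = "http://marathon.example.com:8080"


def scale_request(app_id, instances):
    """Prepare a PUT request asking Marathon to run `instances` copies of an app."""
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url=f"{MARATHON_URL}/v2/apps/{app_id.strip('/')}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


# Hypothetical app id; the request is only built here, not sent.
req = scale_request("/in-stream/kafka", 5)
```

Passing `req` to `urllib.request.urlopen` would submit the change; Marathon would then start or stop containers until the running count matches the requested one.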

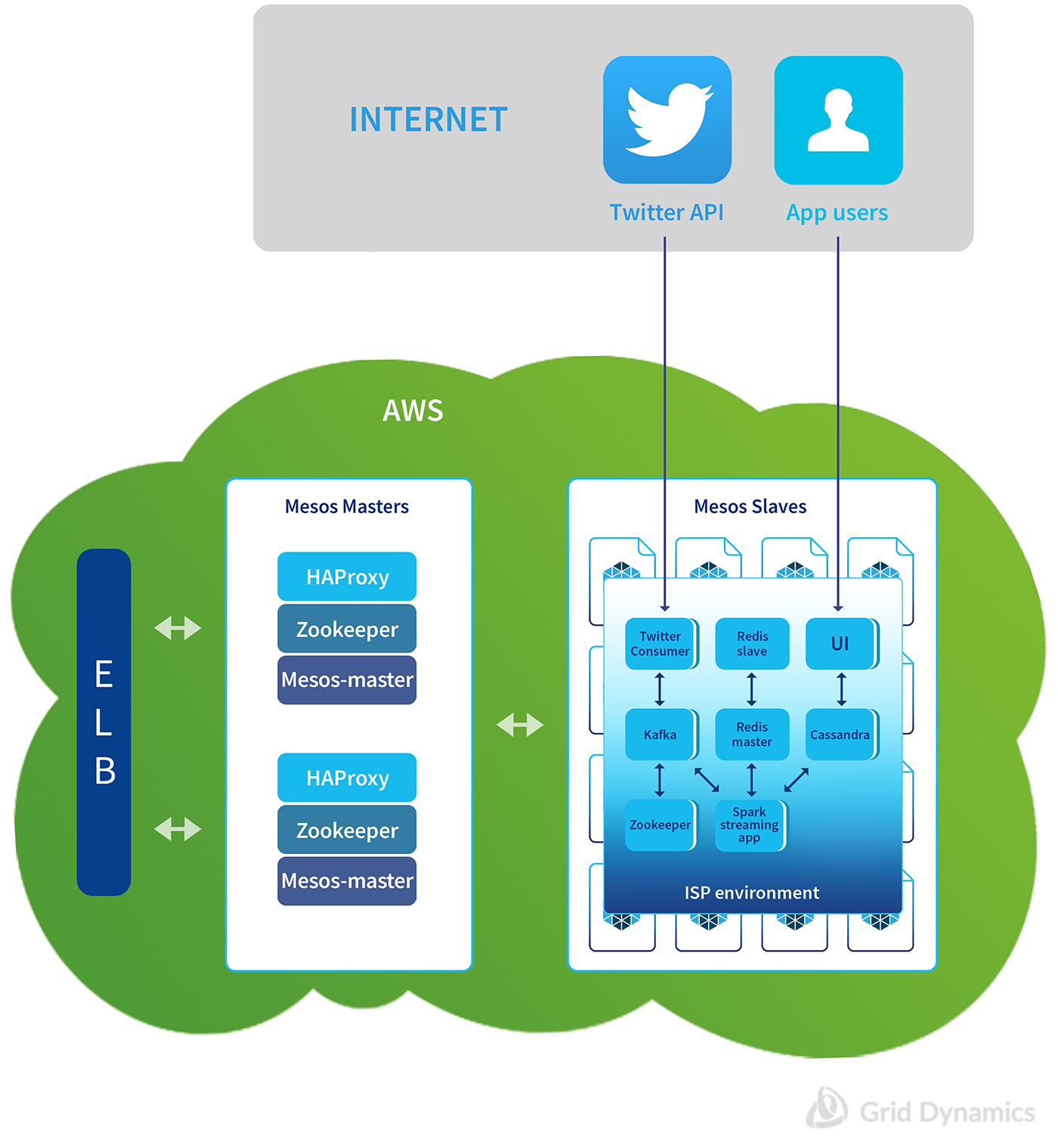

The desired deployment topology of the In-Stream Processing platform we want to get from the DevOps platform looks like this:

To achieve this, Mesos will manage the VMs underneath all these application containers so that Marathon can launch and place the containers in the respective groups during the initial application deployment, or in response to scaling or failover situations.

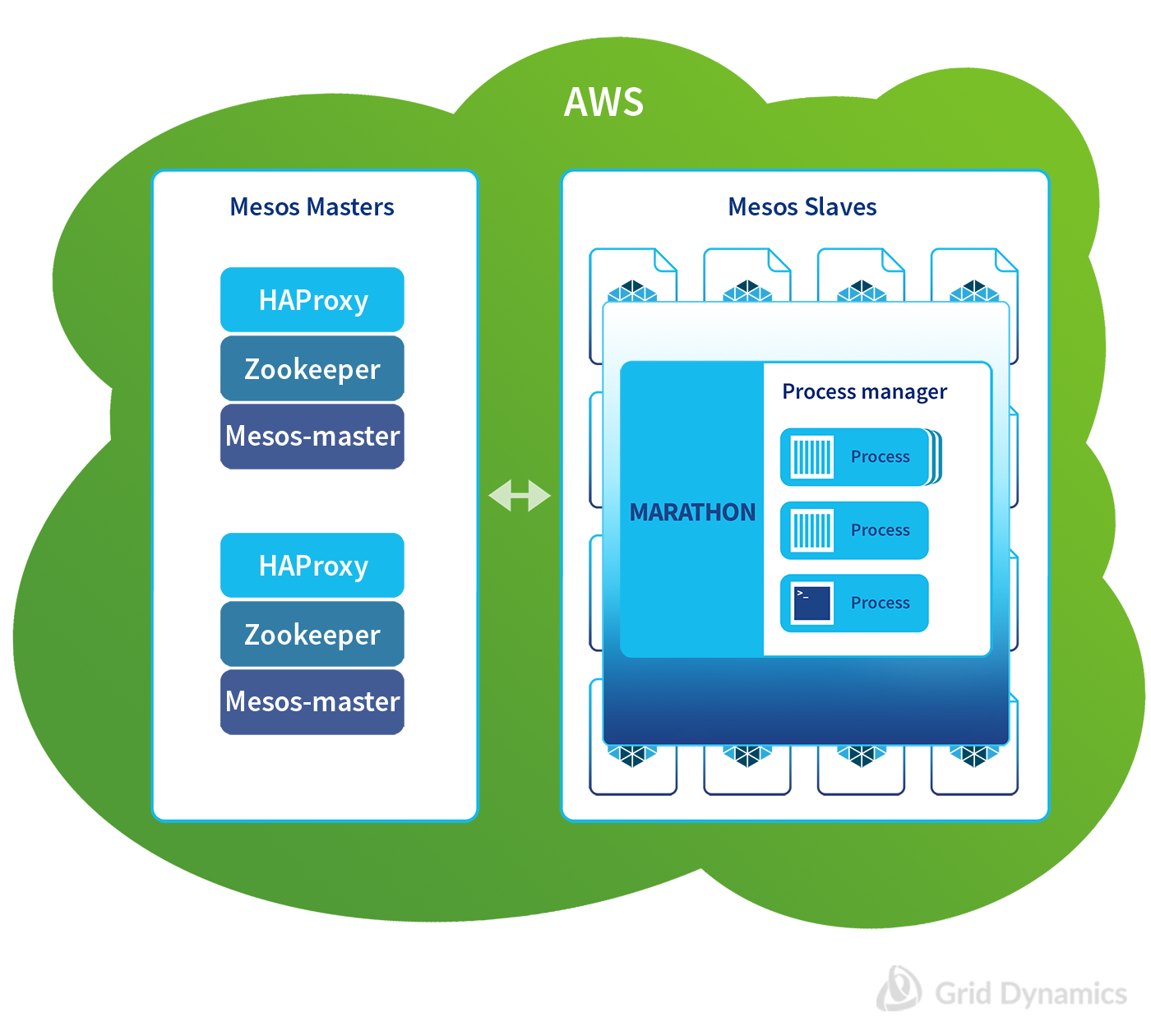

Bootstrapping Mesos + Marathon on bare cloud infrastructure: Ansible

A Mesos cluster must be deployed once to bootstrap the cloud deployment of any containerized application service before the application environments can be provisioned with Mesos. This is pretty obvious when you think about it, so we have to answer the question, “How do we automate bootstrapping the initial Mesos deployment on the pristine cloud infrastructure?”

In this case, Mesos runs directly on VMs, not inside Docker containers. We chose Ansible as our configuration management tool for this bootstrapping. Alternatives include Chef, Puppet, and SaltStack, but we chose Ansible for its simplicity and popularity, and because we could easily reuse deployment scripts we had already written in-house for other projects.

The goal of using the Ansible scripts is to achieve the following state of the system after bootstrapping is complete:

Let’s stop here for a second to reflect on the following idea: Deploying the Mesos platform as part of the bootstrapping operation is somewhat specific to every cloud service because we are working with VM-based infrastructure. But once Mesos is up and running, the remaining application deployment and management is handled in a totally cloud-independent way, relying only on the APIs of Mesos and its framework, Marathon.
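One way to picture the boundary between the cloud-specific and cloud-independent parts: the only thing the bootstrapping needs from the cloud is a list of VM addresses. The sketch below, which is not taken from our in-house scripts, renders an Ansible INI-style inventory from such lists. The group names `mesos_masters`, `mesos_agents`, and `mesos`, as well as the IP addresses, are hypothetical.

```python
def render_inventory(masters, agents):
    """Render an Ansible INI inventory with separate master and agent groups.

    Group names are illustrative assumptions; the playbooks that consume
    the inventory would have to use the same names.
    """
    lines = ["[mesos_masters]"]
    lines += masters
    lines += ["", "[mesos_agents]"]
    lines += agents
    # A parent group so that common tasks can target every Mesos host.
    lines += ["", "[mesos:children]", "mesos_masters", "mesos_agents"]
    return "\n".join(lines) + "\n"


# Hypothetical addresses, as they might be returned by a cloud API after VM creation.
inventory = render_inventory(
    masters=["10.0.0.10", "10.0.0.11", "10.0.0.12"],
    agents=["10.0.1.20", "10.0.1.21"],
)
```

Moving to another cloud would change how the address lists are obtained, while the inventory format and the playbooks that configure Mesos and Marathon could stay largely the same.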

Application management and orchestration of Docker containers over Mesos + Marathon: Tonomi

Finally, with the Mesos infrastructure in place and able to provision any Docker container on demand, it is time to ask one last question:

“How will we automate the complex orchestration workflows that will deploy different application clusters for Kafka, Spark Streaming, Redis, Cassandra, Twitter API and social analytics visualization web application, configure all these services, resolve their dependencies on each other, and later handle complex re-configuration change management requests such as scaling and failover?”

Put even more simply, we need something to take care of the interactions between the services in our platform, tie them together, and provide the ability to easily deploy the complete In-Stream Processing platform to the Mesos cluster.
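A small part of this problem, deciding the order in which services come up, can be sketched as a dependency-ordered traversal. The code below is not Tonomi's API or workflow language; it is a generic depth-first topological sort, and the dependency edges between services are our assumptions for the sake of the example.

```python
def deployment_order(depends_on):
    """Return services ordered so each one comes after everything it depends on."""
    order, visiting, done = [], set(), set()

    def visit(service):
        if service in done:
            return
        if service in visiting:
            raise ValueError(f"dependency cycle at {service}")
        visiting.add(service)
        for dep in depends_on.get(service, []):
            visit(dep)
        visiting.discard(service)
        done.add(service)
        order.append(service)

    for service in depends_on:
        visit(service)
    return order


# Hypothetical dependency graph for the platform's services.
DEPENDS_ON = {
    "kafka": [],
    "cassandra": [],
    "redis": [],
    "twitter-ingest": ["kafka"],
    "spark-streaming": ["kafka", "cassandra", "redis"],
    "web-ui": ["cassandra"],
}

order = deployment_order(DEPENDS_ON)
```

A real orchestrator also has to pass connection details between services, wait for health checks, and handle reconfiguration, which is why we rely on a dedicated tool rather than a script like this.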

It is worth pointing out that, unlike the bootstrapping of the Mesos cluster that only happens once, spinning up application environments holding the In-Stream Processing platform, or its reconfiguration, will happen all the time, sometimes on a daily basis. Events that lead to fresh deployment or reconfiguration include:

- Spinning up yet another test environment for some part of the CI process, or tearing one down. There could be dozens of test instances of the streaming platform up at once, used by different groups of developers or for different stages of the CI pipeline. In a well-automated CI pipeline, the number of test environments is dynamic and may change throughout the day.

- Pushing out new changes to the platform that require an infrastructure upgrade

- Scaling one of the services

- Recovering from node failure

We will use Tonomi to provide application management automation. Sadly, the market doesn’t have a robust selection of open source tools capable of application management automation of this type. Various PaaS solutions, like Cloud Foundry, are not suitable for this use case so we chose Tonomi, which is a SaaS-based DevOps platform.

Admittedly, this is an in-house choice since Grid Dynamics acquired Tonomi in 2015 to provide these capabilities. There is a free version of Tonomi available on AWS that has all the features we need to provide one-click deployment of the complete In-Stream Processing service over Mesos infrastructure. Tonomi supports Ansible, and runs on AWS itself.

We also happen to know that Grid Dynamics has plans to open source the next version of Tonomi as soon as practical. While we cannot commit our colleagues to any specific schedule, these intentions played a role in our decision to use Tonomi in this reference implementation.

In the next post we’ll delve into the details of how Docker, Mesos and Marathon are used together to deliver fully-automated portable management of application containers.

Sergey Plastinkin, Victoria Livschitz