Grid Genie: the beginning of conversational commerce

Retail is on the brink of yet another technological breakthrough. Voice assistants and devices such as Siri, Alexa, and Google Home, along with virtual assistants of all kinds, are rapidly becoming trusted and dependable aides to many people. Technology companies led by Amazon, Google, Apple, and Microsoft are rushing to grab the market of AI devices, applications, and platforms.

Each of these tech giants is hurriedly trying to build an AI ecosystem around its devices that is integrated with its backend cloud. This will set up the latest, and most likely largest ever, platform war for conversational commerce. The prize is control over consumers’ hearts, minds, and wallets in the brave new world of digital assistant-curated Internet experiences.

In a lot of ways, the arrival of voice interfaces with AI capabilities resembles the early days of the mobile revolution. This was a time when many tech companies were fiercely fighting for dominance in the emerging mobile market by pushing forward and promoting their platforms. All of this happened while retailers tried to wrap their heads around how to reach their customers through this new mobile channel and iron out the bumps and glitches of their immature mobile platforms. Those who persisted on this journey eventually became the successful omnichannel retailers of the mobile age.

Today’s retailers who want to deliver their services to consumers via new AI channels face the similar challenge of adopting a new platform, except that this time the playing field is uneven. The mobile age was driven by software and device companies that were neutral toward the retail vertical, so the playing field was level for small and large retailers alike. This is not the case with today’s AI platforms.

Take Alexa for example. Alexa is not an impartial AI device that would be equally happy to sell items from either Amazon or its competitors. Instead, it is designed to be strongly preferential to Amazon services and is deeply integrated with them. You want to check the delivery status of an item sold on Amazon? Sure thing! You want to check the delivery status of an item sold on Acme.com? I don’t think so...

In addition, targeting each device individually, together with its unique ecosystem of services, is expensive to build and maintain. This is especially true as AI devices proliferate beyond two or three major players, as has already happened with IM conversational channels such as Facebook Messenger, WhatsApp, and WeChat.

So how can retailers level the playing field and keep the cost of AI under control? When the mobile revolution happened and mobile platforms started to diverge, web technology responded with responsive and adaptive frameworks that made it possible to build device-agnostic, omni-channel applications. We believe the same should happen with AI and conversational commerce. What needs to emerge is an omni-channel conversational user interface (CUI) platform, based on open source technologies, that treats Google Home, Amazon Echo, Messenger, and other upcoming voice and IM agents as endpoints responsible only for input and output, such as voice-to-text and text-to-voice conversion.

The rest of the conversational system’s capabilities, such as natural language understanding, dialog management, and action fulfillment, should work the same way across all channels, so that a dialog started in the morning with Amazon Echo can be picked up in the afternoon through Google Assistant and finished in the evening via Facebook Messenger. These capabilities can be backed by in-house implementations or connected to the ecosystems of third-party conversational services.
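To make the "devices as endpoints" idea concrete, here is a minimal Python sketch. All names and event payload shapes are hypothetical (they do not come from Grid Genie or any vendor SDK), and it assumes device identity has already been mapped to a store customer ID. Each channel adapter turns a channel-specific event into the same `Utterance`, and dialog context is stored per customer rather than per device, so a conversation can move between channels.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Utterance:
    """Channel-neutral message that every channel adapter produces."""
    customer_id: str  # assumes device identity was already mapped to the store customer
    channel: str
    text: str


@dataclass
class DialogContext:
    """Conversation state shared across all channels a customer uses."""
    history: List[Tuple[str, str]] = field(default_factory=list)


class ContextStore:
    """In-memory stand-in for a shared store keyed by customer, not by device."""

    def __init__(self) -> None:
        self._contexts: Dict[str, DialogContext] = {}

    def get(self, customer_id: str) -> DialogContext:
        return self._contexts.setdefault(customer_id, DialogContext())


def echo_adapter(event: dict) -> Utterance:
    # Hypothetical payload: the voice device has already performed speech-to-text.
    return Utterance(event["customer_id"], "echo", event["transcript"])


def messenger_adapter(event: dict) -> Utterance:
    # Hypothetical payload: IM channels deliver text directly.
    return Utterance(event["customer_id"], "messenger", event["message"]["text"])


def handle(store: ContextStore, utterance: Utterance) -> DialogContext:
    # Channel-agnostic processing starts here; NLU and dialog management would follow.
    ctx = store.get(utterance.customer_id)
    ctx.history.append((utterance.channel, utterance.text))
    return ctx
```

Keying the context by customer rather than by device is what lets a morning Echo dialog resume in Messenger; a production store would also need persistence and expiry.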

Introducing Grid Genie

At Grid Dynamics Labs, we are developing this vision with Grid Genie, an experimental open omni-channel conversational commerce platform. We are designing Grid Genie to support a wide range of conversational applications. The feature set will include the ability to guide the customer through product selection and associated research, facilitate purchases and returns, keep track of order shipments, and more.

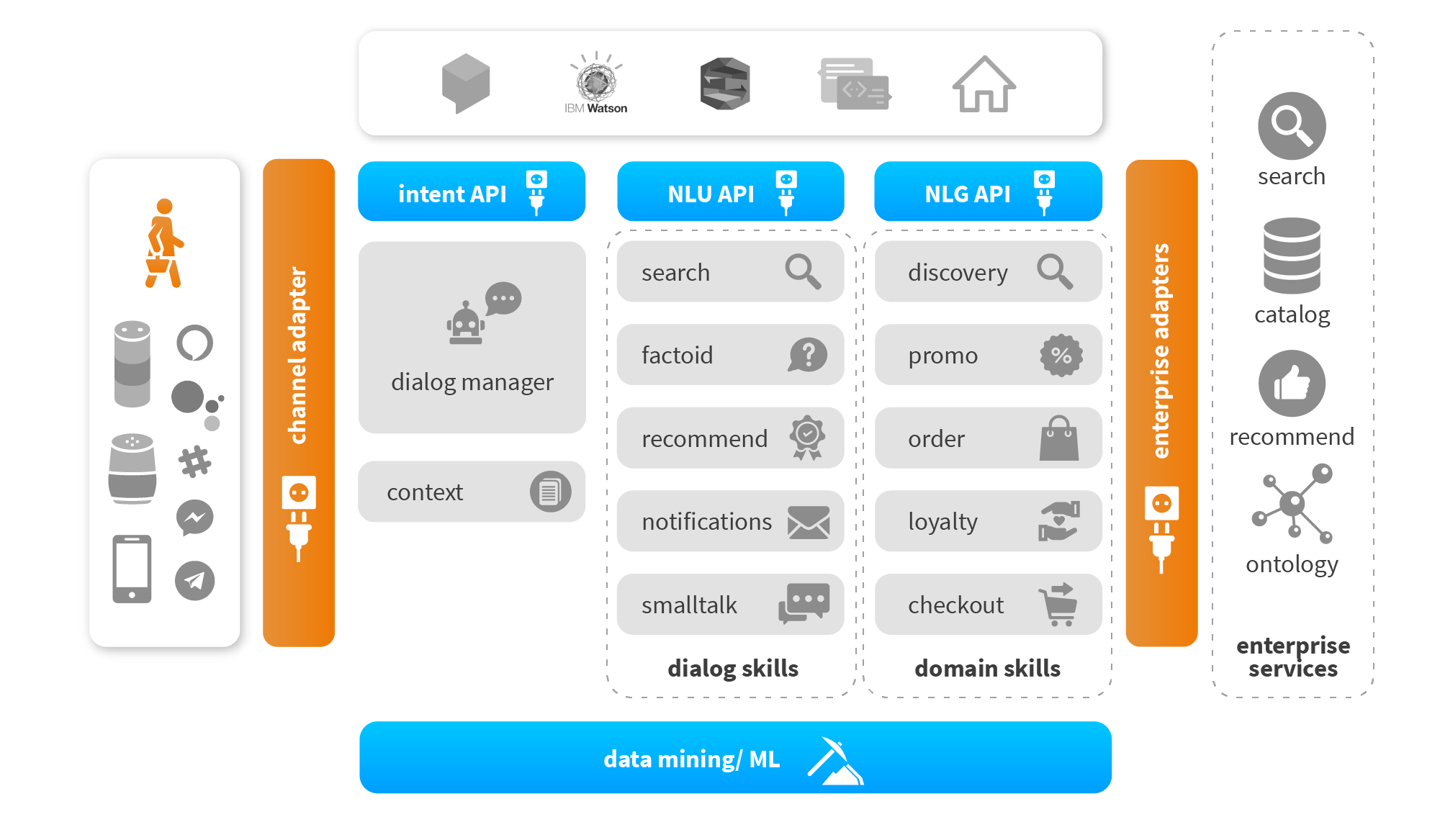

Here is the conceptual architecture of the CUI platform:

There are quite a few components in the diagram, so let’s take a look at them one by one:

- Channel adapters - additional devices can be incorporated into the ecosystem with minimal effort via these adapters. Voice devices are typically responsible for speech-to-text and text-to-speech, with the rest of the processing happening within the Grid Genie platform. Authentication, SSO, and user management between devices and Grid Genie are also handled by the framework.

- Context sharing between devices - regardless of which device is being used, the user experience stays the same, and conversations held on one device carry over to the others. This allows a dialog started with Alexa at home to continue in a midday Facebook Messenger chat at work.

- Dialog manager - controls the conversation flow and coordinates the platform’s activities to provide a seamless conversational experience to the user. The dialog manager maintains conversation context, detects the customer’s broader intent, and delegates tasks to an appropriate dialog agent within the platform.

- Pluggable dialog agents - different types of conversation often require differently designed and trained dialog agents. For example, small talk, factual question answering, product recommendations, and order tracking require significantly different NLU/NLG models and external dependencies. Grid Genie’s architecture allows multiple dialog agents with different skills to coexist within the same platform. All dialog agents can use platform services to improve their language understanding and to fulfill actions on the user’s behalf.

- Dialog services - dialog agents need to understand the intent behind what the user asks in natural language, so that they can respond with well-formed natural language. In Grid Genie, this function is performed by NLU/NLP/NLG dialog services within the platform. These services can be backed by cloud services or by custom models trained within the platform.

- Enterprise adapters - to be useful, dialog agents need to communicate with enterprise services such as catalog, search, order, and shipment APIs. They do so through platform services, which are integrated with each retailer’s particular enterprise services through a layer of enterprise adapters.

- Domain ontology - intelligent NLU processing depends on a deep understanding of the business domain: its terminology, slang, and the relationships between terms. This information is captured by ontology services, which combine predefined ontologies (available publicly or commercially) with knowledge trained on the specific retailer’s data.
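The relationship between the dialog manager and pluggable dialog agents can be sketched as a small registry. This is an illustrative Python sketch, not Grid Genie’s actual interface: the `DialogAgent` protocol, the agent classes, and the fallback message are all hypothetical, and intent detection is assumed to have happened already.

```python
from typing import Dict, Protocol


class DialogAgent(Protocol):
    """Hypothetical plug-in contract: each agent declares the intent it serves."""
    intent: str

    def respond(self, text: str) -> str: ...


class SmallTalkAgent:
    intent = "small_talk"

    def respond(self, text: str) -> str:
        return "Hi there! How can I help?"


class OrderTrackingAgent:
    intent = "order_tracking"

    def respond(self, text: str) -> str:
        # A real agent would call NLU, order search, and NLG platform services here.
        return "Let me look up that order for you."


class DialogManager:
    def __init__(self, fallback: str = "Sorry, I didn't catch that.") -> None:
        self._agents: Dict[str, DialogAgent] = {}
        self._fallback = fallback

    def register(self, agent: DialogAgent) -> None:
        # New skills plug in without any change to the dialog manager itself.
        self._agents[agent.intent] = agent

    def dispatch(self, intent: str, text: str) -> str:
        # Delegate to the agent that owns this intent, or fall back gracefully.
        agent = self._agents.get(intent)
        return agent.respond(text) if agent else self._fallback
```

Using a structural `Protocol` means an agent only has to expose `intent` and `respond`; it does not need to inherit from a platform base class.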

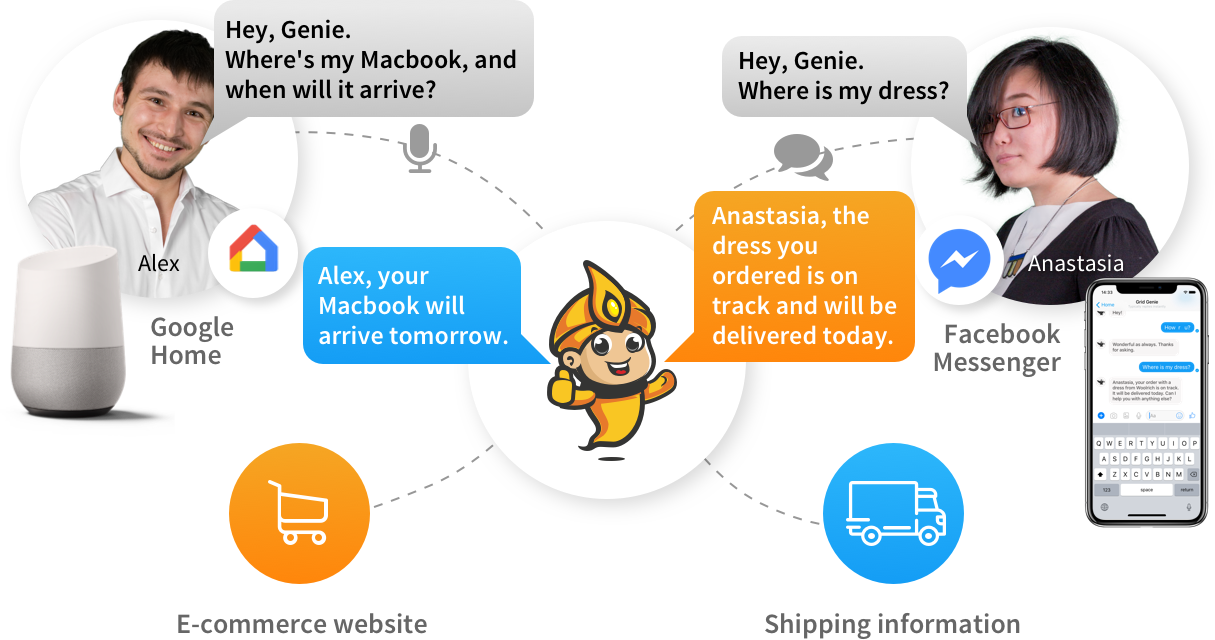

Let’s look at this architecture in action based on a simple example: order tracking.

Grid Genie, where is my order?

One of the first use cases we addressed with the Grid Genie architecture is order tracking. Order tracking is a good example of a relatively simple infobot experience, characterized by a “shallow” conversation in which the customer can specify what she is looking for in one question and receive all the information she needs in one answer. Here is an infographic of how this works.

However, even for such a simple conversation to work seamlessly across multiple devices, a lot has to happen under the hood. First and foremost, the device-specific identity has to be mapped to the customer’s identity within the store, so that the conversational agent knows who it is talking to. Next, the customer’s voice needs to be accurately converted to text. Luckily, the speech-to-text models deployed by Google Assistant and Alexa are maturing quickly and are already very good.
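The identity-mapping step can be pictured as a lookup table populated when a customer links a channel account to the store account. This Python sketch is an assumption for illustration: `IdentityMap` and the sample IDs are hypothetical, and real account linking would go through each platform’s own OAuth-based flow.

```python
from typing import Dict, Optional, Tuple


class IdentityMap:
    """Hypothetical account-linking table: (channel, channel user ID) -> store customer ID."""

    def __init__(self) -> None:
        self._links: Dict[Tuple[str, str], str] = {}

    def link(self, channel: str, channel_user_id: str, customer_id: str) -> None:
        # Recorded once, e.g. after the customer completes account linking on that channel.
        self._links[(channel, channel_user_id)] = customer_id

    def resolve(self, channel: str, channel_user_id: str) -> Optional[str]:
        # None means the user is unknown and should be asked to link an account.
        return self._links.get((channel, channel_user_id))
```

Because several channel identities can resolve to the same customer ID, every later step (context, order search) can work with one identity regardless of the device in use.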

Once the text, known as an utterance, is received, we need to understand its broader intent. Is it an attempt at small talk? Is it related to order tracking? Or product discovery? Maybe it is an answer to a previous question? Or is it just genuine gibberish? The dialog manager needs to perform intent classification and delegate the utterance to the appropriate agent.
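As a rough illustration of the questions above, here is a keyword-scoring intent classifier in Python. It is a deliberately simple stand-in for a trained model: the intent names and keyword lists are hypothetical, and treating an unmatched reply as an answer whenever a question is pending is a simplifying assumption.

```python
import re
from typing import Dict, Optional, Set

# Toy keyword lists standing in for a trained intent classifier.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "small_talk": {"hi", "hello", "thanks", "joke"},
    "order_tracking": {"order", "ordered", "shipping", "delivered", "package", "track"},
    "product_discovery": {"looking", "recommend", "need", "buy", "find"},
}


def classify_intent(text: str, pending_question: Optional[str] = None) -> str:
    """Return the best-matching intent, 'answer', or 'unknown'."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    if scores[best] > 0:
        return best
    # Nothing matched: if the platform just asked a question, assume this is the
    # answer to it; otherwise treat the utterance as gibberish or out of scope.
    return "answer" if pending_question else "unknown"
```

The dialog manager would then pass the returned intent to whichever agent is registered for it.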

Let’s assume a customer has asked an AI device about the shipping status of the Fisher-Price toy that she ordered the day before. After routing by the dialog manager, this utterance reaches the order tracking agent. It now has to perform natural language analysis and named entity extraction to understand what action is expected (search for an order), and what exactly the customer is looking for (a Fisher-Price toy ordered yesterday).
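Named entity extraction for this utterance can be sketched with a tiny, hypothetical slice of the domain ontology: brand and category surface forms mapped to canonical values, plus relative dates resolved against a reference day. This Python sketch is an assumption for illustration; a production agent would rely on the platform’s NLU services and the full ontology.

```python
import re
from datetime import date, timedelta
from typing import Any, Dict

# Hypothetical slice of a domain ontology: surface forms mapped to canonical values.
BRANDS = {"fisher-price": "Fisher-Price", "fisher price": "Fisher-Price", "lego": "LEGO"}
CATEGORIES = {"toy": "toys", "toys": "toys", "dress": "dresses", "shoes": "shoes"}
RELATIVE_DAYS = {"today": 0, "yesterday": 1}


def extract_entities(text: str, today: date) -> Dict[str, Any]:
    """Extract brand, product category, and order date slots from an utterance."""
    lowered = text.lower()
    entities: Dict[str, Any] = {"brand": None, "category": None, "order_date": None}
    for surface, brand in BRANDS.items():
        # Word boundaries stop "lego" from matching inside unrelated words.
        if re.search(rf"\b{re.escape(surface)}\b", lowered):
            entities["brand"] = brand
            break
    for word in re.findall(r"[a-z]+", lowered):
        if entities["category"] is None and word in CATEGORIES:
            entities["category"] = CATEGORIES[word]
        if entities["order_date"] is None and word in RELATIVE_DAYS:
            # Resolve relative dates against the reference day passed in.
            entities["order_date"] = today - timedelta(days=RELATIVE_DAYS[word])
    return entities
```

Passing `today` explicitly keeps the function deterministic and easy to test, instead of reading the system clock.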

The agent then invokes a platform service to search for a Fisher-Price toy ordered yesterday, which returns the matching orders.

Often the agent has to go back and forth with the search service on the customer’s behalf, for example when the first results are unsatisfactory for whatever reason. Once satisfactory results come back from the order search service, the agent has to decide which template to use for the natural language response. This decision can be made with rules that depend on the order results, or by running a predictive model that ranks all available templates against this particular result.
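The search-then-select step can be sketched in Python with the rule-based option described above. The `Order` fields, status values, and template IDs are hypothetical; a ranking model could replace `select_template` without changing the rest.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Order:
    order_id: str
    brand: str
    category: str
    ordered_on: date
    status: str  # hypothetical statuses: "processing", "shipped", "delivered"
    eta: Optional[date] = None


def search_orders(
    orders: List[Order],
    brand: Optional[str] = None,
    category: Optional[str] = None,
    ordered_on: Optional[date] = None,
) -> List[Order]:
    # Each extracted slot narrows the result set; a missing slot matches everything.
    return [
        o for o in orders
        if (brand is None or o.brand == brand)
        and (category is None or o.category == category)
        and (ordered_on is None or o.ordered_on == ordered_on)
    ]


def select_template(results: List[Order]) -> str:
    # Rule-based template choice driven by the shape of the search results.
    if not results:
        return "order_not_found"
    if len(results) > 1:
        return "ask_which_order"  # triggers another round of clarification
    return {"shipped": "order_in_transit", "delivered": "order_delivered"}.get(
        results[0].status, "order_processing"
    )
```

The `ask_which_order` branch is one way the back-and-forth could surface: the agent asks a follow-up question and searches again with the extra slot.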

The natural language response is then constructed by filling the slots of the selected template with order data. Finally, the response has to be rendered for the target channel, taking into account the device’s capabilities. If we are dealing with a voice agent, we also have to include speech synthesis markup to add color and intonation to the response, or to provide phonemes for unusual words such as brand names. If we are working in an IM channel, adding pictures or richer content may also be required.
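Slot filling and channel-specific rendering might look like the following Python sketch. The template text, the pronunciation lexicon (including its IPA string), and the IM payload shape are hypothetical. The voice branch uses the SSML `<speak>` and `<phoneme>` elements; support for `<phoneme>` varies between voice platforms.

```python
from typing import Dict, Union
from xml.sax.saxutils import escape, quoteattr

TEMPLATES = {
    "order_in_transit": "Your {brand} {item} is on its way and should arrive on {eta}.",
}

# Hypothetical pronunciation lexicon for brand names a TTS engine might mispronounce.
LEXICON = {"Fisher-Price": "ˈfɪʃər praɪs"}


def fill_template(template_id: str, slots: Dict[str, str]) -> str:
    # Slot filling: plug order data into the selected response template.
    return TEMPLATES[template_id].format(**slots)


def render(text: str, channel: str, image_url: str = "") -> Union[str, Dict[str, str]]:
    if channel == "voice":
        ssml = escape(text)  # escape &, <, > before adding markup
        for word, ipa in LEXICON.items():
            # <phoneme> tells the synthesizer exactly how to pronounce the word.
            tagged = f"<phoneme alphabet=\"ipa\" ph={quoteattr(ipa)}>{escape(word)}</phoneme>"
            ssml = ssml.replace(escape(word), tagged)
        return f"<speak>{ssml}</speak>"
    # IM channels get plain text plus optional rich content such as a product image.
    return {"text": text, "image_url": image_url}
```

Keeping slot filling separate from rendering means the same filled response can be sent to any channel, with only the last step differing.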

As you can see, even a relatively simple infobot contains many interacting components that have to work seamlessly together to achieve a natural conversational experience. This includes support for dialog context, where the customer may refer to a previous result (“when will it be delivered again?”) or omit the intent altogether (“what about my dress?”). In both cases, the conversational agent needs to understand what the customer is referring to. Check out our Grid Genie order tracking in action in this demo video.
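A simplified Python sketch of context carry-over for the two cases above. It assumes, hypothetically, that the intent classifier returns `None` when no intent is stated and that the previous turn’s intent, slots, and results are kept in a `TurnContext`. Real systems use richer coreference and ellipsis resolution.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class TurnContext:
    """What the platform remembers from the previous turn."""
    intent: Optional[str] = None
    slots: Dict[str, Any] = field(default_factory=dict)
    results: List[Any] = field(default_factory=list)


def resolve_turn(
    intent: Optional[str], slots: Dict[str, Any], ctx: TurnContext
) -> Tuple[Optional[str], Dict[str, Any], Optional[List[Any]]]:
    """Return (intent, slots, reused_results), filling gaps from the previous turn."""
    if intent is None:
        # "What about my dress?": no intent stated, so carry over the previous
        # intent but search with the newly mentioned slots only.
        return ctx.intent, slots, None
    if intent == ctx.intent and not slots and ctx.results:
        # "When will it be delivered again?": same intent and no new entities, so the
        # customer is referring to the previous result; reuse it instead of searching.
        return intent, ctx.slots, ctx.results
    # Anything else is treated as a fresh request.
    return intent, slots, None
```

Not merging the old slots into the "what about my dress?" turn is deliberate: carrying over the Fisher-Price brand would produce a search for a Fisher-Price dress.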

The road ahead

Infobots, while already useful, only scratch the surface of the complexity of the conversational channel. Fully fledged conversational commerce must be able to handle far more challenging “deep” conversations. This involves remembering large amounts of context-dependent information that the customer has provided, dealing with ambiguous intent, asking questions to refine the customer’s needs, summarizing and analyzing large result sets, providing factoids about selected products, assisting with product comparisons, and coming up with relevant recommendations. In other words, it has to support the customer at every stage of the sales funnel, from discovery to post-purchase support.

As you can see, this involves a huge amount of complexity, but we are working hard to teach Grid Genie new tricks every day. Stay tuned for more updates from Grid Labs!

Eugene Steinberg