How ARKit and ARCore recognize vertical planes despite limited depth perception

In a previous blog post, we explored how retail companies are using emerging augmented reality (AR) technologies to close the “imagination gap” between the showroom and the digital experience and improve their online sales. In this post, we look at the two leading AR frameworks, from Apple and Google, review their features, and discuss how they approach one of augmented reality’s current limitations: vertical plane recognition.

ARKit and ARCore overview

In the summer of 2017, Google released the ARCore platform, which enables AR capabilities on mobile devices without the need for specialized hardware. The platform makes it easy for developers to incorporate augmented reality technology into their Android apps. The ARCore platform replaces Google’s previous AR framework, Google Tango. The announcement of a newly named framework was also a clever marketing move in response to the release around the same time of Apple’s AR framework, ARKit.

Apple’s ARKit platform has been made a central part of iOS 11. Like ARCore for Android, it makes it straightforward for developers to create AR-friendly apps for the Apple platform. Both frameworks have very similar functionality, capabilities, and feature sets. ARKit and ARCore can both track position, orientation, and planes for many meters before users notice any inaccuracies.

Common features of augmented reality platforms

These frameworks use four key features to detect and map the real world environment:

- Motion tracking - allows the phone to understand and track its position relative to the real world.

- Environmental understanding - allows the phone to detect the location and size of flat horizontal surfaces.

- Light metering - allows the phone to accurately estimate the environment's current lighting conditions.

- TrueDepth Camera (ARKit only) - allows the phone to detect the position, topology, and expression of the user’s face in real time.

ARKit requires a device with iOS 11+ and an A9 or later processor. This includes iPhone SE, 6s/6s Plus, 7/7 Plus, 8/8 Plus, X, iPad 5th gen, and all iPad Pro variations.

ARCore requires Android 7.0+ devices, which includes Google Pixel, Pixel XL, Pixel 2, Pixel 2 XL, Samsung Galaxy S8, Lenovo Phab 2 Pro, and Asus ZenFone AR.

Here is a more detailed overview of the ARKit and ARCore frameworks.

Of the devices mentioned above, only the iPhone X has a front camera with some depth perception functionality; however, it is not optimized for use with AR experiences. As a result, AR still has trouble measuring depth to track vertical surfaces and recognizing the shape topology of the user’s surroundings.

Both ARKit and ARCore have limited depth perception

ARKit and ARCore can analyze the environment visible in the camera view and detect the location of horizontal planes such as tables, floors, or the ground. The ARKit and ARCore frameworks cannot, however, directly detect vertical planes such as walls.

Vertical plane detection is limited because the current generation of smartphones does not have the additional sensors needed to measure depth accurately. Most likely, vertical plane detection will be implemented in the next generations of mobile devices. Until then, AR capabilities will remain somewhat limited by this missing functionality.

Solutions to limited depth perception

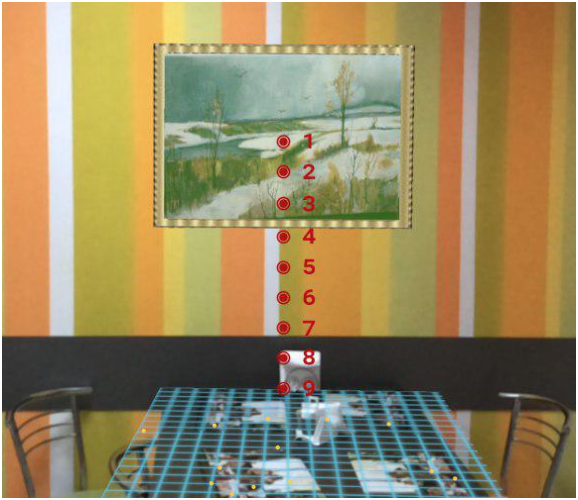

The Grid Dynamics mobile team has expertise in AR app development and was tasked with implementing a program to allow people to hang virtual pictures on walls in real rooms. The app allows users to change the position, orientation, and frame texture of virtual pictures, so they can see for themselves exactly how a picture would appear in the rooms of their home. Scanning of the real world environment is done using ARKit or ARCore.

Considering that the ARKit and ARCore frameworks lack true depth perception capabilities, it may initially seem impossible to achieve vertical surface detection. However, a variety of approaches have been used to address this problem, each with its own advantages and disadvantages.

1. Using anchor clouds

If a wall contains contrasting colors or patterns, or already has high-contrast objects hanging on it, these provide good features that can be scanned.

Whenever feature points are detected (shown in yellow in the above screenshots), ARKit can place anchors in the real world scene. This makes it possible to obtain the position of a single point, or even of an entire surface, using a point cloud.

The above example shows that wall recognition works well when the wall surface is strongly contrasting, so additional vertical plane detection capabilities are not required in that case.

How is it done?

Option #1: Use the ARSCNView method hitTest(_:types:) to detect intersections with one of the points using the .featurePoint type:

    // Hit-test the center of the view against the detected feature points.
    if let hitResult = sceneView.hitTest(sceneView.center, types: .featurePoint).first {
        // The fourth column of worldTransform holds the hit position in world space.
        pictureCreator.addPicture(at: SCNVector3Make(
            hitResult.worldTransform.columns.3.x,
            hitResult.worldTransform.columns.3.y,
            hitResult.worldTransform.columns.3.z
        ), to: sceneView)
    }

Option #2: Calculate wall position and orientation by using identified feature point clouds of the current frame

    guard let currentFrame = sceneView.session.currentFrame else {
        return
    }

    // rawFeaturePoints is optional, so featurePoints is nil when no point cloud is available.
    let featurePoints = currentFrame.rawFeaturePoints?.points

However, the above option is ineffective for plain walls, meaning it will not work for any surface that has only a single, solid color.
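To give a feel for what Option #2 involves, here is a minimal sketch of one possible way to estimate a wall from a point cloud. It is not the code used in the app: it assumes the wall is perfectly vertical, projects the points onto the horizontal XZ plane, and takes the direction of least spread as the wall normal. `Point3`, `VerticalPlane`, and `fitVerticalPlane(to:)` are hypothetical names, and plain `Double` values stand in for the vectors ARKit returns.

```swift
import Foundation

/// A minimal 3D point (hypothetical type standing in for ARKit's vectors).
struct Point3 {
    var x: Double
    var y: Double
    var z: Double
}

/// A vertical plane: a point on it plus a horizontal unit normal (hypothetical type).
struct VerticalPlane {
    var center: Point3
    var normalX: Double
    var normalZ: Double
}

/// Fits a vertical plane to the points by projecting them onto the XZ plane
/// and using the direction of least variance as the wall normal.
/// Returns nil for fewer than three points or when the points have no
/// horizontal spread at all.
func fitVerticalPlane(to points: [Point3]) -> VerticalPlane? {
    guard points.count >= 3 else { return nil }
    let n = Double(points.count)

    // Centroid of the cloud.
    let cx = points.reduce(0.0) { $0 + $1.x } / n
    let cy = points.reduce(0.0) { $0 + $1.y } / n
    let cz = points.reduce(0.0) { $0 + $1.z } / n

    // Entries of the 2x2 covariance matrix of the XZ projection (unnormalized).
    var sxx = 0.0
    var sxz = 0.0
    var szz = 0.0
    for p in points {
        let dx = p.x - cx
        let dz = p.z - cz
        sxx += dx * dx
        sxz += dx * dz
        szz += dz * dz
    }
    guard sxx + szz > 1e-12 else { return nil }

    // Angle of the axis with the largest variance; the wall runs along it.
    let theta = 0.5 * atan2(2 * sxz, sxx - szz)

    // The normal is perpendicular to that axis within the XZ plane.
    return VerticalPlane(center: Point3(x: cx, y: cy, z: cz),
                         normalX: -sin(theta),
                         normalZ: cos(theta))
}
```

In an app, the input would be converted from `rawFeaturePoints?.points`, ideally after filtering out points that clearly do not belong to the wall. The sign of the normal is arbitrary in this sketch, so it may need flipping to face the camera.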

2. Searching a frame plane by point of scene view

This approach is based on a search of a detected plane’s borders, assuming that this plane is the actual wall.

Any 2D point in the scene view’s coordinate space can refer to any point along a line segment in the 3D coordinate space. The approach assumes it is possible to find a plane of the current frame in the world that is located at an intersection point normal to this frame.

This process involves going through the scene along the Y-axis pixel by pixel to find an intersection point with the detected plane.

How is it done?

Option #1: Use the ARSCNView method hitTest(_:types:) to detect intersections with a plane using the .existingPlaneUsingExtent type:

    // Start at the center of the view and walk down the screen pixel by pixel.
    var point = sceneView.center
    var hitResult: ARHitTestResult?

    repeat {
        guard let nextHitResult = sceneView.hitTest(point, types: .existingPlaneUsingExtent).first else {
            // No detected plane under this point: move one pixel down and try again.
            point = CGPoint(x: point.x, y: point.y + 1)
            continue
        }
        hitResult = nextHitResult
    } while hitResult == nil && screenBounds > point.y

This method runs into problems when there is no visible plane in the current frame. An example of this occurs when standing too close to the wall as shown below.
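The same scan can be written as a small reusable helper. The sketch below is an illustration rather than the app's code: `firstHit(fromY:toY:step:probe:)` is a hypothetical function, and the `probe` closure stands in for a call to `hitTest(_:types:)` at a given screen y-coordinate. A `step` larger than 1 trades accuracy for fewer hit tests.

```swift
/// Walks down a screen column from `startY` up to (but not including) `maxY`
/// in increments of `step` and returns the first y for which `probe`
/// reports a hit, together with that hit. Returns nil if nothing is hit.
/// Hypothetical helper; `probe` is expected to wrap the real hit test.
func firstHit<Hit>(fromY startY: Double,
                   toY maxY: Double,
                   step: Double = 1,
                   probe: (Double) -> Hit?) -> (y: Double, hit: Hit)? {
    precondition(step > 0, "step must be positive")
    var y = startY
    while y < maxY {
        if let hit = probe(y) {
            return (y, hit)
        }
        y += step
    }
    return nil
}
```

Checking the bound before each probe guarantees that the loop ends with `nil` when no plane is visible in the frame, which is exactly the case this method cannot handle.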

3. Searching a plane normal using ray casting

Ray casting is the use of line intersection tests to locate the first object intersected by a ray in the scene. This allows a point to be located that is normal to the plane outside the screen (current frame), in order to hang a picture.
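At its core, each such test is an intersection between a ray segment and a surface. SceneKit handles this internally; the sketch below only illustrates the underlying math for the simplest case, an infinite plane. `Vector3` and `intersectSegment(from:to:planePoint:planeNormal:)` are hypothetical names, not SceneKit APIs.

```swift
/// A minimal 3D vector (hypothetical type, standing in for SCNVector3).
struct Vector3 {
    var x: Double
    var y: Double
    var z: Double

    static func - (a: Vector3, b: Vector3) -> Vector3 {
        return Vector3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z)
    }

    func dot(_ other: Vector3) -> Double {
        return x * other.x + y * other.y + z * other.z
    }
}

/// Returns the point where the segment from `start` to `end` crosses the
/// infinite plane through `planePoint` with normal `planeNormal`, or nil if
/// the segment is parallel to the plane or does not reach it.
func intersectSegment(from start: Vector3,
                      to end: Vector3,
                      planePoint: Vector3,
                      planeNormal: Vector3) -> Vector3? {
    let direction = end - start
    let denominator = planeNormal.dot(direction)

    // A (near) zero denominator means the segment runs parallel to the plane.
    guard abs(denominator) > 1e-9 else { return nil }

    // Fraction of the segment at which the plane is crossed.
    let t = planeNormal.dot(planePoint - start) / denominator
    guard t >= 0 && t <= 1 else { return nil }

    return Vector3(x: start.x + direction.x * t,
                   y: start.y + direction.y * t,
                   z: start.z + direction.z * t)
}
```

A real scene test also has to respect the extent of each object and return the nearest hit first, which is why the implementation below relies on SceneKit rather than hand-written math.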

The approach involves casting rays along the Y-axis until a hit is found or the angle reaches perpendicular to the ground, meaning there are no planes in front. This method is shown in the image below.

How is it done?

Option #1: Use the SCNNode method hitTestWithSegment(from:to:options:) to detect intersections with a plane object in the scene. This implementation is provided by the SceneKit framework.

    var currentAngle: Float = 0
    var hitResult: SCNHitTestResult?

    repeat {
        …
        guard let nextHitResult = sceneView.scene.rootNode.hitTestWithSegment(from: startPoint, to: endPoint, options: options).first else {
            // Nothing hit at this angle: tilt the ray by one more step and try again.
            currentAngle += raycastAngleStepDegrees
            continue
        }
        hitResult = nextHitResult
    } while hitResult == nil && (cameraAngleY - currentAngle) > 0

This approach requires various calculations to be undertaken by the software in order to obtain the coordinates of the rays in 3D space.
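As an illustration of those calculations, the sketch below derives the end points of one ray from the camera position and a yaw and pitch angle, and lists the pitch angles of a downward sweep. It assumes the SceneKit convention that an unrotated camera looks along the negative Z-axis with Y pointing up. `Vec3`, `raySegment(from:yawDegrees:pitchDegrees:length:)`, and `sweepPitches(fromDegrees:stepDegrees:)` are hypothetical helpers, not the app's actual code.

```swift
import Foundation

/// A minimal 3D vector (hypothetical type, standing in for SCNVector3).
struct Vec3 {
    var x: Double
    var y: Double
    var z: Double
}

/// Returns the start and end points of a ray segment of the given length.
/// Yaw is the rotation about the Y-axis; pitch is measured from the
/// horizontal, so 0 is level and -90 points straight down.
/// Assumes an unrotated camera looks along -Z with Y up.
func raySegment(from origin: Vec3,
                yawDegrees: Double,
                pitchDegrees: Double,
                length: Double) -> (start: Vec3, end: Vec3) {
    let yaw = yawDegrees * Double.pi / 180
    let pitch = pitchDegrees * Double.pi / 180

    // Unit direction of the ray in world space.
    let dx = -sin(yaw) * cos(pitch)
    let dy = sin(pitch)
    let dz = -cos(yaw) * cos(pitch)

    let end = Vec3(x: origin.x + dx * length,
                   y: origin.y + dy * length,
                   z: origin.z + dz * length)
    return (origin, end)
}

/// Pitch angles to try, from the starting pitch down to straight down (-90).
func sweepPitches(fromDegrees start: Double, stepDegrees: Double) -> [Double] {
    precondition(stepDegrees > 0, "stepDegrees must be positive")
    return Array(stride(from: start, through: -90, by: -stepDegrees))
}
```

Each resulting segment could then be converted to `SCNVector3` values and passed as `startPoint` and `endPoint` to `hitTestWithSegment(from:to:options:)`.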

Summary

These frameworks can track horizontal planes in the world out of the box, but there is currently no direct implementation of vertical surface recognition. This may, however, be added in the near future. In the meantime, there are effective workarounds for this limitation, as outlined above, and each of these approaches can be used on iOS via ARKit and on Android via ARCore.

To summarize the advantages and disadvantages of each approach:

- The first method, using anchor clouds, doesn’t require any plane recognition to track vertical surfaces, but the wall must contain contrasting features.

- The second method, searching a frame plane by point of scene view, does not require contrasting wall features but does require that the user have recognizable horizontal surfaces in front of them and that these surfaces be within the field of view of the camera.

- The third method, searching a plane normal using ray casting, requires additional calculations for the ray casting but has the advantage of being effective even if the wall is plain or if there are no horizontal surfaces in front of the user.

The whole concept behind augmented reality is the blending together of the physical objects around us with virtual ones that can be added dynamically to the world. To make this possible and realistic, the technology needs to be able to recognize the geometry of our surroundings. In other words, it needs to be able to identify ceilings, walls, and physical objects with a high degree of accuracy.

While Google and Apple have made huge breakthroughs in creating engaging AR experiences, the accuracy and capabilities of their frameworks are not yet ideal. Currently, ARKit and ARCore rely on a single device camera to track, measure, and build a model of the real world. However, both companies are working on additional AR capabilities for their next generation of devices. These may include extra sensors, such as additional laser systems on the rear side of phones, which would considerably improve depth measurement of the environment and translate into much better AR capabilities.

Egor Zubkov