How In-Stream Processing works

Now that we have introduced the high-level concepts behind In-Stream Processing and how it fits into the Big Data and Fast Data landscapes, it is time to dive deeper and explain how In-Stream Processing works.

In-Stream Processing service architecture

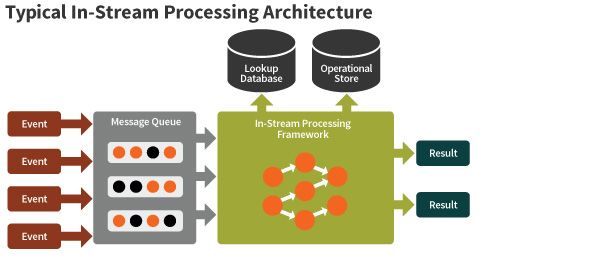

As we already know, In-Stream Processing is a service that takes events as input and produces results that are delivered to other systems.

Typical In-Stream Processing Architecture

The architecture consists of several components:

- Message queues capture the events coming in and act as a buffer.

- In-Stream Processing frameworks pick up unprocessed messages — either one-by-one or in micro-batches of a few hundred events — and put them through a processing pipeline.

- Lookup databases hold simple data structures, such as reference data, used by In-Stream Processing algorithms. These databases must be fast enough to be queried on every event, but the volume of data they hold is relatively small.

- Operational stores hold complex data structures passed down to In-Stream Processing by external systems. They are also used to persist the intermediate state of In-Stream Processing that will be needed for processing future events. These stores may also save the results of an In-Stream Processing pipeline, such as updated user information derived from a clickstream.

- Results are delivered to target destinations like databases, microservices, and messaging systems via supported APIs. The events are also nearly always loaded into the data store in the batch processing system for further analysis.
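The difference between picking up messages one by one and in micro-batches can be sketched in a few lines of Python. This is only an illustration and not the API of any particular framework: the `micro_batches` helper, the event shape, and the batch size are assumptions made for the example.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(events: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Group an incoming event stream into lists of at most batch_size events."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the (finite) example stream is exhausted
        yield batch


# Hypothetical input: seven events pulled from a message queue.
events = [{"id": i} for i in range(7)]
batch_sizes = [len(batch) for batch in micro_batches(events, batch_size=3)]
```

Setting `batch_size=1` in this sketch corresponds to one-by-one processing; larger batches trade a little latency for lower per-event overhead.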

Message queue

A message queue serves several purposes for In-Stream Processing: it smooths out peak loads, provides persistent storage for all events, and allows several independent processing units to consume the same stream of events.

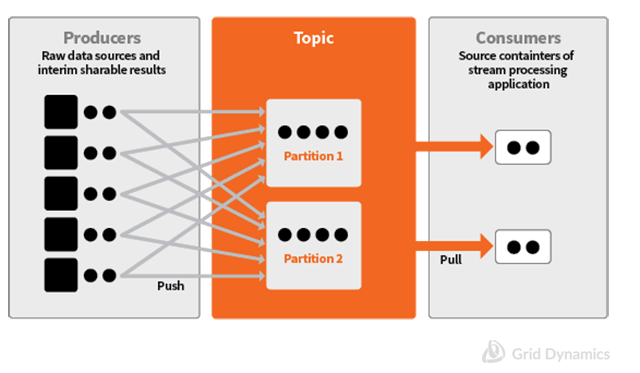

Since a message queue initially collects events from all raw data sources, it has to be performant, scalable, and fault tolerant. That’s why it commonly runs on several dedicated servers which form the message queue cluster. The main concepts essential for understanding how highly scalable message queues work are Topics, Partitions, Producers, and Consumers.

- Topics are logical streams of events intended for particular business needs. A single message queue can serve many topics simultaneously.

- Partitions are mechanisms of parallelization. Topics are divided into a number of partitions that each receive, store, and deliver a specific part of the topic to its consumer. Any partition can be run on any node in the cluster, leading to near-perfect scalability.

- Producers are data sources that emit messages related to certain topics. Producers distribute events across partitions using a customizable partitioning function, such as simple round-robin or hash partitioning.

- Consumers are final recipients of messages. In our case the consumer is the In-Stream Processing framework.

Message Queue Basic Concepts, Parallelization Approach

Event ordering is guaranteed only within a single partition. Therefore, correct partition design for the original event stream is vital for business applications where message ordering is important.
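The two partitioning functions mentioned above can be sketched as follows. The function names and the choice of CRC32 as the hash are assumptions for illustration; real message queues ship their own partitioners. The point of the sketch is that hash partitioning keeps all events with the same key in one partition, which is what preserves their relative order, while round-robin does not.

```python
import zlib
from itertools import count
from typing import Callable


def hash_partitioner(num_partitions: int) -> Callable[[str], int]:
    """Send every event with the same key to the same partition."""
    def choose(key: str) -> int:
        # crc32 is stable across processes, unlike Python's built-in hash().
        return zlib.crc32(key.encode("utf-8")) % num_partitions
    return choose


def round_robin_partitioner(num_partitions: int) -> Callable[[str], int]:
    """Spread events evenly, ignoring the key (no per-key ordering)."""
    counter = count()
    def choose(key: str) -> int:
        return next(counter) % num_partitions
    return choose


# Hypothetical usage: all events of one user land in a single partition.
choose_by_user = hash_partitioner(4)
same_partition = choose_by_user("user-42") == choose_by_user("user-42")
```

If ordering matters per user, account, or device, the partition key in this sketch should be that identifier.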

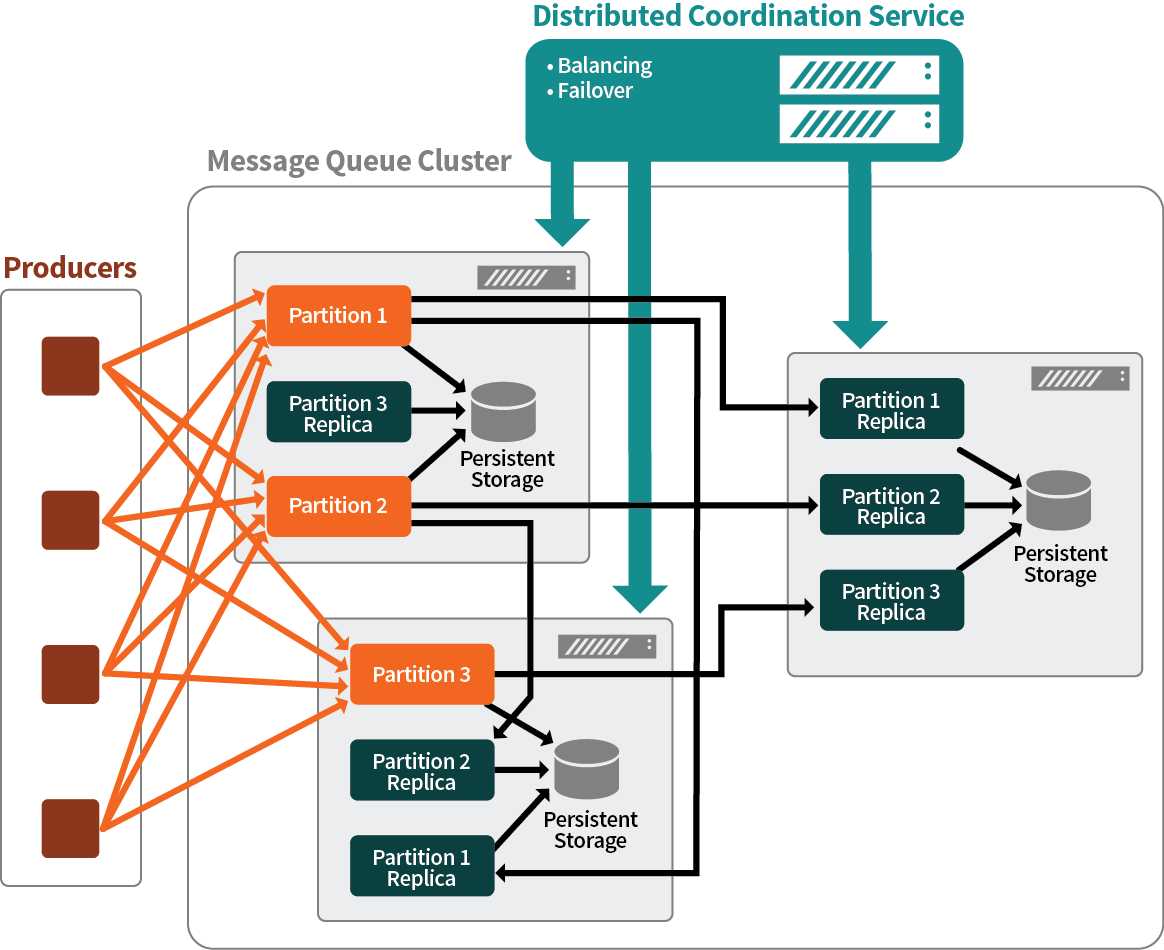

Another important aspect of queue capabilities is reliability. Message queues must remain available to producers and consumers despite server failures or network issues, with minimal risk of data loss. To achieve that, the data in every partition is replicated to multiple nodes of the cluster and persisted several times per minute; see the diagram below. These features must be designed efficiently so that they do not compromise the performance of the message queue.

Message Queue Reliability

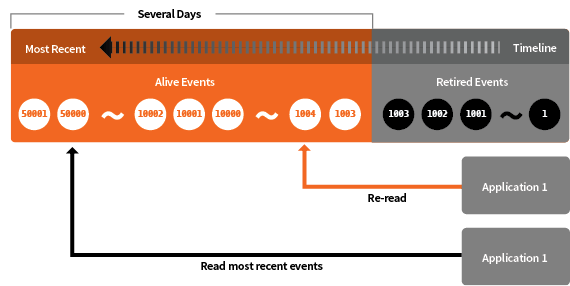

If a failure occurs on the consumer side, it might be necessary to re-process data that was already read from the queue. Therefore, the capability to replay the stream starting at some point in the past becomes an essential component of overall reliability in a stream processing service.

Event Re-Read Capability
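A minimal sketch of the replay idea, assuming that each partition is an append-only log addressed by offsets and that the consumer remembers the last offset it processed safely. The `PartitionLog` class is a hypothetical in-memory stand-in, not the interface of any real message queue.

```python
from typing import List, Tuple


class PartitionLog:
    """Append-only log for one partition; events are addressed by offset."""

    def __init__(self) -> None:
        self._events: List[str] = []

    def append(self, event: str) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the newly stored event

    def read_from(self, offset: int) -> List[Tuple[int, str]]:
        """Return (offset, event) pairs starting at the given offset."""
        return list(enumerate(self._events[offset:], start=offset))


log = PartitionLog()
for name in ["e0", "e1", "e2", "e3"]:
    log.append(name)

# Assumption: the consumer had safely processed e0 and e1 before it crashed.
committed_offset = 2
replayed = log.read_from(committed_offset)  # resume from the saved position
```

Because the queue keeps events after they are read, the consumer can restart from any retained offset rather than only from the newest event.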

In-Stream Processing framework

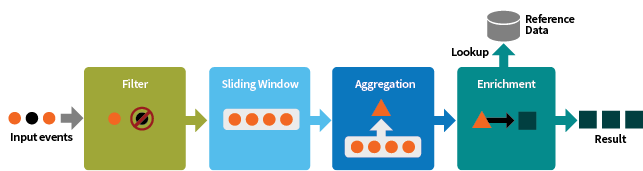

An In-Stream Processing application can be represented as a sequence of transformations, as shown in the next diagram. Every individual transformation must be simple and fast. Because these transformations are chained together in a pipeline, the resulting algorithms are both powerful and fast.

A Stream Processing Application As a Sequence of Transformations

New processing steps can be added to existing pipelines over time to improve the algorithms with little effort. This leads to a fast development cycle for stream applications and makes the stream processing service easy to extend.
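The idea of a pipeline built from small transformations can be sketched with Python generators. The step names (`parse`, `only_large`, `tag`), the order events, and the threshold are hypothetical; real frameworks expose their own operators, but the composition principle is the same.

```python
from typing import Callable, Iterable, Iterator, List

Transformation = Callable[[Iterator[dict]], Iterator[dict]]


def parse(events: Iterator[dict]) -> Iterator[dict]:
    """Convert the textual amount into a number."""
    for event in events:
        yield {**event, "amount": float(event["amount"])}


def only_large(events: Iterator[dict]) -> Iterator[dict]:
    """Keep only orders of 100 or more."""
    return (event for event in events if event["amount"] >= 100.0)


def tag(events: Iterator[dict]) -> Iterator[dict]:
    """Attach a label to every event that reaches this step."""
    for event in events:
        yield {**event, "tag": "large-order"}


def run_pipeline(source: Iterable[dict], steps: List[Transformation]) -> List[dict]:
    stream: Iterator[dict] = iter(source)
    for step in steps:
        stream = step(stream)  # each step lazily wraps the previous one
    return list(stream)


orders = [{"id": 1, "amount": "250"}, {"id": 2, "amount": "40"}]
results = run_pipeline(orders, [parse, only_large])
# Extending the pipeline later only means appending another step.
tagged = run_pipeline(orders, [parse, only_large, tag])
```

Each step sees one event at a time and holds no shared state, which is what keeps the individual transformations simple and fast.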

At the same time, the transformations must be efficiently parallelizable to run independently on different nodes in a cluster, leading to a massively scalable design.

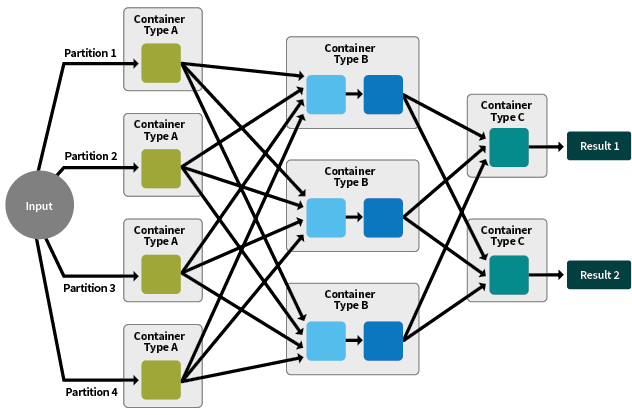

To assure this efficient parallelization, stream developers operate with two logical instruments: partitions and containers.

- Partitions have already been discussed in message queue architecture. Once again, they are parts of the original input stream that have been split up for parallel processing. Partitioning In-Stream applications must be done correctly to avoid interdependencies between partitions that would require a single transformation to access more than one partition.

- Containers encapsulate a sequence of transformations that can be processed on a cluster as a unit of execution. Some containers receive initial data streams and produce interim data streams while others receive interim streams and produce results.

Parallelization of a Streaming Application

Developers need to define the logical model of parallelization by breaking computations into embarrassingly parallel steps, that is, steps that can each run on a single partition without coordinating with the others. The process is illustrated in the diagram above. Sometimes it is necessary to rearrange the stream data into different partitions for different containers. This is done by re-partitioning. Developers should beware, however: re-partitioning is an expensive operation that slows the pipeline considerably and should be avoided, or at least minimized, wherever possible.
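To make the cost of re-partitioning concrete, here is a small sketch under simplifying assumptions: partitions are plain Python lists, the partition key is hashed with CRC32, and the `partition_by` and `repartition` helpers are hypothetical names. In a real cluster the second step would move events between nodes over the network, which is why it is expensive.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def partition_by(events: List[dict], key: str, num_partitions: int) -> Dict[int, List[dict]]:
    """Assign each event to a partition based on a hash of event[key]."""
    partitions: Dict[int, List[dict]] = defaultdict(list)
    for event in events:
        index = zlib.crc32(str(event[key]).encode("utf-8")) % num_partitions
        partitions[index].append(event)
    return dict(partitions)


def repartition(partitions: Dict[int, List[dict]], key: str, num_partitions: int) -> Dict[int, List[dict]]:
    """Shuffle: every event may have to move to a different partition."""
    all_events = [event for part in partitions.values() for event in part]
    return partition_by(all_events, key, num_partitions)


clicks = [
    {"user": "u1", "country": "US"},
    {"user": "u2", "country": "DE"},
    {"user": "u3", "country": "US"},
]
# First container: per-user logic, so events are partitioned by user.
clicks_by_user = partition_by(clicks, "user", num_partitions=2)
# Second container: per-country logic, which forces a re-partitioning.
clicks_by_country = repartition(clicks_by_user, "country", num_partitions=2)
```

After the shuffle, all events for one country sit in the same partition, so a per-country transformation never needs to look at more than one partition.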

Once the model is defined, the application is written using APIs of a particular In-Stream Processing framework, usually in a high-level programming language such as Java, Scala or Python. The stream processing engine will do the rest.

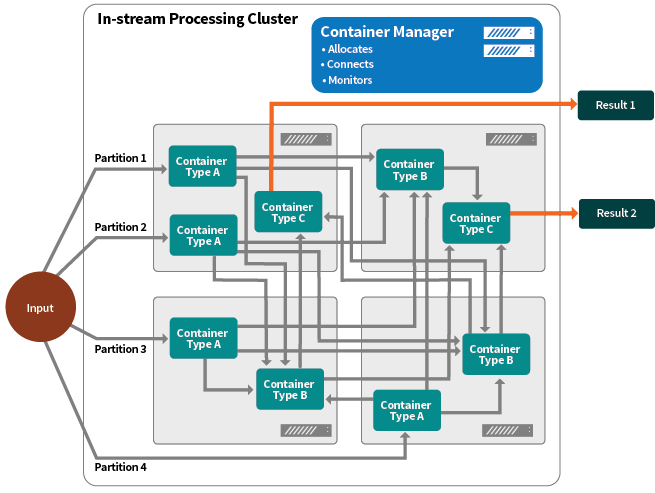

Scalability and availability via container management

While there are many different In-Stream Processing engines on the market, they mostly follow a very similar design and architecture. Typically, the streaming cluster consists of one highly available container manager and many worker nodes.

Containers are allocated to nodes based on resource availability, so new containers may be launched on any available node. If a node fails, the Container Manager starts replacement containers on the remaining nodes and re-processes any events that may have been lost.
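The allocation and failover behavior can be illustrated with a toy scheduler. This sketch assumes that "resource availability" simply means the node currently running the fewest containers, and it does not model the replay of lost events; the `ContainerManager` class here is hypothetical and much simpler than a real one.

```python
from typing import Dict, List


class ContainerManager:
    """Toy scheduler: places containers on the least-loaded live node."""

    def __init__(self, nodes: List[str]) -> None:
        self.placement: Dict[str, List[str]] = {node: [] for node in nodes}

    def launch(self, container: str) -> str:
        # Assumption: load is measured by the number of running containers.
        node = min(self.placement, key=lambda n: len(self.placement[n]))
        self.placement[node].append(container)
        return node

    def node_failed(self, node: str) -> List[str]:
        """Drop the failed node and relaunch its containers elsewhere."""
        orphaned = self.placement.pop(node)
        for container in orphaned:
            self.launch(container)
        return orphaned


manager = ContainerManager(["node-a", "node-b", "node-c"])
for name in ["c1", "c2", "c3", "c4"]:
    manager.launch(name)

moved = manager.node_failed("node-a")  # node-a was running c1 and c4
```

From the manager's point of view it does not matter which application a container belongs to, so the same mechanism serves any number of applications.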

It is very important that one stream processing cluster can run many streaming applications simultaneously. To the Container Manager, an application is simply a set of containers. More applications lead to a bigger set of containers being served by the Container Manager.

Executing Multiple Streaming Applications on the Same Cluster

Machine Learning

Machine Learning involves “training” the algorithms, called models, on representative datasets to “learn” the correct computations. The quality of the models depends on the quality of the training datasets and suitability of the chosen models for the use case.

The general approach to machine learning involves three steps:

- Identify the training set and the model to be trained. This is a job for data scientists.

- Train the model. This is the job of machine learning algorithms.

- Use the trained model to perform assigned business tasks.

In-Stream Processing can use the trained models to discover insights. It is rarely used for the training process itself, as the majority of training algorithms do not perform well in a streaming architecture. There are some exceptions, for example k-means clustering in Spark Streaming.
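Applying an already trained model inside a stream can be as simple as scoring each event as it arrives. The sketch below assumes a logistic regression model; the weights, feature names, and threshold are made up for illustration and stand in for values that would come from offline training.

```python
import math
from typing import Dict, Iterable, Iterator

# Hypothetical parameters, as if produced earlier by offline training.
WEIGHTS = {"amount": 0.004, "foreign": 1.5}
BIAS = -3.0


def fraud_probability(event: Dict[str, float]) -> float:
    """Score one event with the fixed (pre-trained) logistic model."""
    score = BIAS + sum(WEIGHTS[name] * event[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-score))


def flag_suspicious(events: Iterable[Dict[str, float]], threshold: float = 0.5) -> Iterator[Dict[str, float]]:
    """Pass through only the events the model considers suspicious."""
    for event in events:
        if fraud_probability(event) >= threshold:
            yield event


payments = [
    {"amount": 1000.0, "foreign": 1.0},
    {"amount": 20.0, "foreign": 0.0},
]
flagged = list(flag_suspicious(payments))
```

Scoring needs only the current event and the fixed parameters, so it fits the per-event processing model well; training, by contrast, usually needs repeated passes over a large dataset.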

Time Series Analysis is an area of machine learning for which In-Stream Processing is a natural fit, since it is based on sliding windows over data series. A complication that must be considered carefully is data ordering, since streaming frameworks usually don’t guarantee ordering between partitions, and time series processing is typically sensitive to it.
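A sliding window over a data series can be sketched with a fixed-size buffer. This example assumes the values arrive in time order within one partition, which is exactly the ordering concern raised above; the function name and the sample readings are illustrative.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def sliding_mean(values: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the last `window` values once the window is full."""
    buffer: Deque[float] = deque(maxlen=window)  # oldest value drops out
    for value in values:
        buffer.append(value)
        if len(buffer) == window:
            yield sum(buffer) / window


readings = [10.0, 12.0, 14.0, 40.0]
means = list(sliding_mean(readings, window=3))
```

If the same readings arrived out of order, the windows would contain different values and the means would change, which is why ordering matters for this kind of analysis.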

In-Stream Machine Learning is a young, but highly promising, domain of computer science that is getting a lot of attention from the research community. It is likely that new machine learning algorithms will emerge that can be run efficiently by the stream processing engines. This would allow In-Stream systems to train the models at the same time as running them, on the same machinery, and improve them over time.

Data Ingestion and Data Enrichment

Data Ingestion is the process of bringing data into the system for further processing. Data Enrichment adds simple quality checks and data transformations, such as translating an IP address into a geographical location, or a User Agent HTTP header into the operating system and browser type used by a visitor to a web site. Historically, data was first loaded into batch processing systems and transformed afterwards. Nowadays, more and more designs unite Data Ingestion and Data Enrichment into a single In-Stream process, because a) enriched data can be used by other In-Stream applications; and b) end users of batch analysis systems see ready-to-use data much sooner.
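An enrichment step of this kind might look like the sketch below. The network-to-country table is invented for the example (it uses address ranges reserved for documentation), and the browser detection is deliberately naive; a production system would query a lookup database and use a proper User Agent parser.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical reference data; a real service would query a lookup database.
GEO_BY_NETWORK = {
    ipaddress.ip_network("203.0.113.0/24"): "AU",
    ipaddress.ip_network("198.51.100.0/24"): "US",
}


def country_for(ip: str) -> Optional[str]:
    """Translate an IP address into a country code, if the network is known."""
    address = ipaddress.ip_address(ip)
    for network, country in GEO_BY_NETWORK.items():
        if address in network:
            return country
    return None


def browser_for(user_agent: str) -> str:
    # Order matters: Chrome's User-Agent string also contains "Safari".
    for name in ("Firefox", "Chrome", "Safari"):
        if name in user_agent:
            return name
    return "unknown"


def enrich(event: Dict[str, str]) -> Optional[Dict[str, Optional[str]]]:
    """Return an enriched copy, or None if the simple quality check fails."""
    if not event.get("ip") or not event.get("user_agent"):
        return None
    return {
        **event,
        "country": country_for(event["ip"]),
        "browser": browser_for(event["user_agent"]),
    }
```

Because the enriched event is a superset of the raw one, both downstream In-Stream applications and the batch system can consume it without repeating the lookups.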

Delivering results to downstream systems

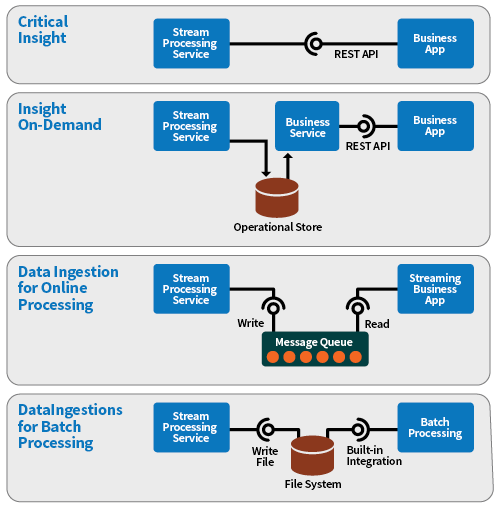

The results of all preceding phases are either individual actionable insights picked out of the original stream or a whole data stream, transformed and enriched by the processing. As we have already discussed, the In-Stream Processing service is one component of a wider Big Data landscape. In the end, it produces data used by other systems. Here are several generic use cases that require different interfaces to deliver results of the In-Stream Processing service:

- Critical insight: Rare but impactful business insights, such as potential fraud alerts, are usually delivered to the downstream application by invoking REST APIs exposed by that application.

- Insight on demand: Identified insights are stored in the operational store of the In-Stream Processing service and are available to external applications either directly through the operational store or via REST APIs. A good use case is an eCommerce system where In-Stream Processing constantly updates user profiles based on clickstreams, which the commerce service pulls to personalize a coupon or promotional offers.

- Data Ingestion for further In-Stream online processing: When the resulting output is itself a stream of events designed to be consumed by another In-Stream Processing system, a message queue such as Kafka is the best way to connect the two systems.

- Data Ingestion for batch processing: In most cases, the original streams will be transformed, then loaded into a Data Lake for further batch processing. If the Data Lake and batch processing technologies are based on a Hadoop stack, HDFS is the ideal location for storing the post-processed events.

Common Downstream Systems Interfaces
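The four delivery paths can be pictured as a small router. Everything in this sketch is a stand-in: the in-memory lists and dictionary replace a REST call, an operational store, an output topic, and a Data Lake, and the `kind` field is an assumed way of classifying results.

```python
from typing import Dict, List

# Hypothetical in-memory stand-ins for the four delivery interfaces.
rest_calls: List[dict] = []                # critical insights pushed via REST
operational_store: Dict[str, dict] = {}    # insights available on demand
output_topic: List[dict] = []              # stream for another In-Stream system
data_lake: List[dict] = []                 # batch storage, such as HDFS


def deliver(result: dict) -> None:
    """Route one result to a sink based on its (assumed) `kind` field."""
    kind = result["kind"]
    if kind == "critical":
        rest_calls.append(result)
    elif kind == "profile":
        operational_store[result["user"]] = result
    elif kind == "stream":
        output_topic.append(result)
    # Events are nearly always also loaded into the batch system.
    data_lake.append(result)


deliver({"kind": "critical", "user": "u1", "alert": "possible fraud"})
deliver({"kind": "profile", "user": "u2", "segment": "bargain-hunter"})
```

Keeping the routing decision in one place makes it easier to add a new downstream interface without touching the processing logic.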


Sergey Tryuber, Anton Ovchinnikov, Victoria Livschitz