How to implement Block Join Faceting in Solr/Lucene

In a previous post, we talked about the business motivations behind supporting structured documents in a Solr/Lucene index and the unique requirements that this approach to modeling data creates for a faceting engine. We also introduced SOLR-5743. Now it is time to take a deep dive into the implementation details of Block Join Faceting in Solr.

First, let’s recap how Solr/Lucene search deals with structured data. Solr supports the search of hierarchical documents using BlockJoinQuery (BJQ). Using this query requires a special way of indexing documents, based on their positioning in the index. All documents belonging to the same hierarchy have to be indexed together, starting with child documents followed by their parent documents.

BJQ works as a bridge between levels of the document hierarchy; e.g. it transforms matches on child documents to matches on parent documents. When we search using BJQ, we provide a child query and a parent filter as parameters. A child query represents what we are looking for among child documents, and a parent filter tells BJQ how to distinguish parent documents from child documents in the index.

For each matched child document, BJQ scans ahead in the index until it finds the nearest parent document, which is sent into the collector chain instead of the child document. This trick of relying on relative document positioning in the index, or “index-time join,” is the secret behind BJQ’s high performance.
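The scan-ahead step can be illustrated with a small sketch. It assumes the parent filter has already been materialized as a bit set over index positions. The class and method names are hypothetical, and the real Lucene scorer is considerably more involved.

```java
import java.util.BitSet;

/** Illustrative only: resolves a child hit to its parent in a block-indexed segment. */
class ParentLookupSketch {

    /**
     * Children precede their parent within a block, so the parent of a child
     * is the first parent bit after the child's position.
     * Returns -1 if no parent follows (a malformed block).
     */
    static int parentOf(BitSet parentBits, int childDoc) {
        return parentBits.nextSetBit(childDoc + 1);
    }

    /** Hypothetical helper: builds a parent bit set from explicit positions. */
    static BitSet parents(int... positions) {
        BitSet bits = new BitSet();
        for (int p : positions) {
            bits.set(p);
        }
        return bits;
    }
}
```

With two blocks laid out as children 0 and 1 followed by parent 2, then children 3 to 5 followed by parent 6, `parentOf(parents(2, 6), 4)` resolves child 4 to parent 6 without any lookup table.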

Now we are ready to look into faceting BJQ results. We will use the example of an e-commerce catalog with Product-SKU parent-child relationships as our modeling example.

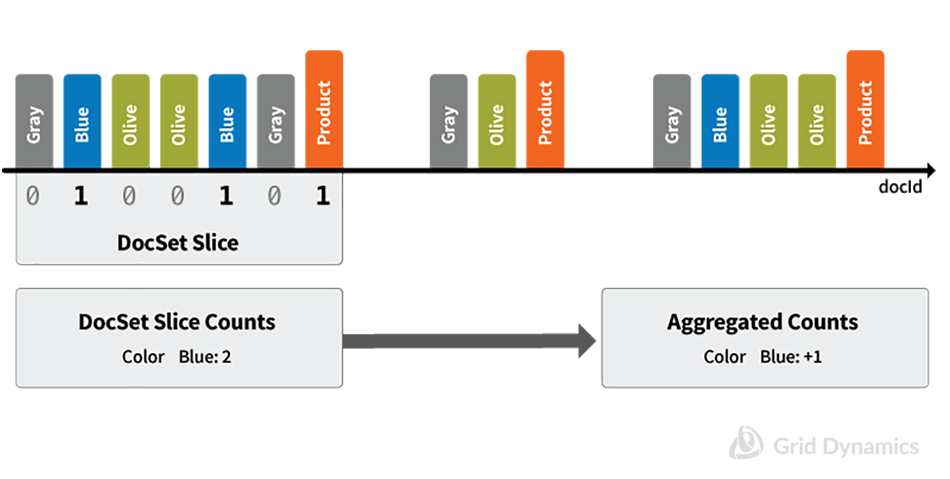

The main idea is quite straightforward. We consider each hierarchy of matched documents separately. As we are using BJQ, each hierarchy is represented in the Solr index as a document block, or DocSet slice as we call it.

First, we calculate facets based on matched SKUs from our block. Then we aggregate obtained SKU counts into Product-level facet counts, increasing the product level facet count by only one for every matched block, irrespective of the number of matched SKUs within the block. For example, if we are searching by COLOR:Blue, even though two Blue SKUs were found within a block, aggregated product-level counts will be increased only by one.
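The counting rule can be sketched in plain Java, independent of Solr. The input here is a hypothetical in-memory representation: each inner list holds the facet values of the matched SKUs of one product block.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Illustrative only: product-level facet counts from per-block SKU matches. */
class BlockFacetSketch {

    /**
     * Each inner list holds the facet values (for example COLOR) of the
     * matched SKUs of one product. A value is counted at most once per block.
     */
    static Map<String, Integer> productLevelCounts(List<List<String>> blocks) {
        Map<String, Integer> counts = new TreeMap<>();
        for (List<String> matchedSkuValues : blocks) {
            // De-duplicate within the block: two Blue SKUs count as one product.
            Set<String> distinct = new HashSet<>(matchedSkuValues);
            for (String value : distinct) {
                counts.merge(value, 1, Integer::sum);
            }
        }
        return counts;
    }
}
```

For two products, one with matched SKUs Blue, Blue and Red and the other with a single Blue SKU, this yields Blue=2 and Red=1 rather than Blue=3.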

This solution is implemented inside the BlockJoinFacetComponent, which extends the standard Solr SearchComponent. BlockJoinFacetComponent validates the query and injects a special BlockJoinFacetCollector into the Solr post-filter collectors chain. When BlockJoinFacetCollector is invoked, it receives a parent document, since the BlockJoinFacetComponent ensures that only ToParentBlockJoinQuery is allowed as a top level query.

Now, to adjust facet counts based on this parent, we need to identify the child documents which matched within the current block. To do that we request BlockJoinScorer to trackPendingChildHits() and to swapChildDocs(). Our next step is to calculate facets on the obtained doc slice. Initially, we invoked a standard faceting routine: we called DocValuesFacet.getCounts() with a DocSet representing the matched child documents from the current index block. Finally, the facet counts calculated on the slice were aggregated into global ones, counting multiple hits on the child documents as one hit on the parent document.

We developed BlockJoinFacetRandomTest to ensure the functional correctness of this implementation. This test checks facet calculation with different combinations of Products, SKUs and random filters.

Also, BlockJoinFacetComponent was extended to work with Solr Cloud, and supplied with BlockJoinFacetDistribTest as well.

This all looked good except for one issue: faceting was relatively slow.

Profiling showed that the utility DocValuesFacet.getCounts() was developed under the assumption that it should be called only once for each particular field. However, with BlockJoinFacetComponent we invoked this method for each matched parent document. As a result, lots of work was unnecessarily repeated, such as determining field type, allocating in-memory objects, determining top and segment SortedSetDocValues, and migration from segment facet counts to global ones. Thus, the first step of performance optimization was the introduction of BlockJoinFieldFacetAccumulator, which is responsible for accumulating facet counts for a particular field. It performs all mentioned tasks during initialization or switching to the next index reader. Those improvements showed a 25X performance gain in local benchmarks.

Our next improvement was inspired by Elasticsearch’s reverse nested aggregation. Suppose we need to calculate facets for a DocValues field. For each segment we have the field’s SortedSetDocValues, which contains the field’s unique values (terms). We track a facet count for each term in a parallel array of long counters, one entry per term. Here we use another trick to save memory and improve CPU cache locality: each long value in the counter array combines the aggregated (parent-level) facet count for a particular term (highest 4 bytes) with the position of the most recently matched parent document whose block contains a hit for this term (lowest 4 bytes).
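The packing of two 32-bit values into one long can be sketched as follows. The helper names are hypothetical and are not taken from the Solr source; only the bit layout follows the description above.

```java
/** Illustrative only: count in the high 32 bits, last parent position in the low 32 bits. */
class PackedCounterSketch {

    static long pack(int count, int parentDoc) {
        // Mask the low half so a negative parentDoc cannot spill into the count.
        return ((long) count << 32) | (parentDoc & 0xFFFFFFFFL);
    }

    static int count(long packed) {
        return (int) (packed >>> 32);
    }

    static int lastParent(long packed) {
        // A narrowing cast keeps exactly the low 32 bits.
        return (int) packed;
    }
}
```

Keeping both values in a single array slot means one memory access reads the counter and the de-duplication marker together.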

When BlockJoinFieldFacetAccumulator is asked to update facet counts within a particular parent document and an array of matched child documents, we:

- iterate over matched child documents

- for each child document we identify terms contained in the document

- for each term we find the corresponding array entry and check whether its encoded parent position matches the provided parent document. If it matches, we have already counted a hit for this term within the same block, so we ignore it and continue iterating over terms. If the positions do not match, this is the first hit in a new block, so we update the encoded long value to increment the facet counter and store the current parent position as the last seen one.

Thus, when all matched parent documents are processed, the higher halves of the long values in our accumulator array will contain the required facet counts. This optimization delivers a 2X performance boost.
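The update procedure can be sketched as a small standalone class. This is a simplified model rather than the actual BlockJoinFieldFacetAccumulator: the hypothetical `termOrdsByDoc` array stands in for the per-document term ordinals that SortedSetDocValues would supply, and the class name is made up.

```java
import java.util.Arrays;

/** Illustrative only: per-term counters that count each parent block at most once. */
class FacetAccumulatorSketch {

    // One packed entry per term ordinal: count (high 32 bits), last parent (low 32 bits).
    private final long[] counters;

    FacetAccumulatorSketch(int termCount) {
        counters = new long[termCount];
        // Count 0, last parent -1, so no real parent position matches initially.
        Arrays.fill(counters, 0xFFFFFFFFL);
    }

    /** termOrdsByDoc[doc] lists the term ordinals of that document (assumed input). */
    void update(int parentDoc, int[] matchedChildren, int[][] termOrdsByDoc) {
        for (int child : matchedChildren) {
            for (int ord : termOrdsByDoc[child]) {
                long packed = counters[ord];
                if ((int) packed == parentDoc) {
                    continue; // term already counted for this block
                }
                int count = (int) (packed >>> 32) + 1;
                counters[ord] = ((long) count << 32) | (parentDoc & 0xFFFFFFFFL);
            }
        }
    }

    int count(int ord) {
        return (int) (counters[ord] >>> 32);
    }
}
```

Calling `update` once per matched parent, in index order, leaves the product-level count of each term in the upper half of its entry.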

A third optimization was suggested by Grid Dynamics Engineer Mikhail Khludnev. He proposed calculating doc slice as an intersection of all matched child documents with all children of the current parent document. This approach is implemented as a separate BlockJoinDocSetFacetComponent. On local benchmarks it’s faster than BlockJoinFacetComponent by 40%, but we believe that learning the exact circumstances in which one component is better than another requires further analysis. We recommend experimenting with macro benchmarks using both implementations.
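The intersection idea can be sketched with java.util.BitSet standing in for Solr DocSets. The class and method names are hypothetical, and the sketch does not reproduce the actual BlockJoinDocSetFacetComponent code.

```java
import java.util.BitSet;

/** Illustrative only: the matched children of one parent as a bit set intersection. */
class DocSetSliceSketch {

    /**
     * The children of a parent occupy the positions between the previous
     * parent (exclusive) and the parent itself (exclusive).
     */
    static BitSet matchedChildrenOf(BitSet allMatchedChildren, BitSet parentBits, int parentDoc) {
        // previousSetBit returns -1 when this is the first block in the segment.
        int prevParent = parentBits.previousSetBit(parentDoc - 1);
        BitSet slice = new BitSet();
        slice.set(prevParent + 1, parentDoc); // all children of the current block
        slice.and(allMatchedChildren);        // keep only those that matched the query
        return slice;
    }
}
```

The matched child set is computed once for the whole query, so each parent only pays for a range fill and a bitwise AND.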

(Components described in this blog post were committed into the Solr codebase with SOLR-5743 in December 2015 and have been available since Solr 5.5. Documentation is available at Solr Wiki.)

In order to utilize one of the BJQ faceting components, you need to configure them in solrconfig.xml and introduce appropriate search handlers, for example:

```xml
<searchComponent name="blockJoinFacet"
                 class="org.apache.solr.search.join.BlockJoinFacetComponent"/>

<requestHandler name="/blockJoinFacetRH"
                class="org.apache.solr.handler.component.SearchHandler">
  <lst name="defaults">
    <str name="shards.qt">blockJoinFacetRH</str>
  </lst>
  <arr name="last-components">
    <str>blockJoinFacet</str>
  </arr>
</requestHandler>

<searchComponent name="blockJoinDocSetFacet"
                 class="org.apache.solr.search.join.BlockJoinDocSetFacetComponent"/>

<requestHandler name="/blockJoinDocSetFacetRH"
                class="org.apache.solr.handler.component.SearchHandler">
  <lst name="defaults">
    <str name="shards.qt">blockJoinDocSetFacetRH</str>
  </lst>
  <arr name="last-components">
    <str>blockJoinDocSetFacet</str>
  </arr>
</requestHandler>
```

Since only docValues fields can be used for BJQ faceting, you need to update the corresponding field configuration in the schema.xml file. For example:

```xml
<field name="COLOR_s" type="string" indexed="true"
       stored="true" docValues="true"/>
<field name="SIZE_s" type="string" indexed="true"
       stored="true" docValues="true"/>
```

Then, after indexing a set of hierarchical documents like:

```xml
<doc>
  <field name="id">10</field>
  <field name="type_s">parent</field>
  <field name="BRAND_s">Nike</field>
  <doc>
    <field name="id">11</field>
    <field name="type_s">child</field>
    <field name="COLOR_s">Red</field>
    <field name="SIZE_s">XL</field>
  </doc>
  <doc>
    <field name="id">12</field>
    <field name="type_s">child</field>
    <field name="COLOR_s">Blue</field>
    <field name="SIZE_s">XL</field>
  </doc>
</doc>
```

you need to pass the required ToParentBlockJoinQuery to the configured request handler and request calculating facets using the child.facet.field parameter. For example:

```
http://localhost:8983/solr/collection1/blockJoinFacetRH?q={!parent+which%3D%22type_s%3Aparent%22}type_s%3Achild&wt=json&indent=true&facet=true&child.facet.field=COLOR_s&child.facet.field=SIZE_s
```

If everything is configured correctly, the Solr response should look like:

```json
{
  "responseHeader":{
    "status":0,
    "QTime":82
  },
  "response":{
    "numFound":1,
    "start":0,
    "docs":[
      {
        "id":"10",
        "type_s":["parent"],
        "BRAND_s":["Nike"],
        "_version_":1526159505694392320
      }
    ]
  },
  "facet_counts":{
    "facet_queries":{},
    "facet_fields":{
      "COLOR_s":[
        "Blue",1,
        "Red",1
      ],
      "SIZE_s":[
        "XL",1
      ]
    },
    "facet_dates":{},
    "facet_ranges":{},
    "facet_intervals":{},
    "facet_heatmaps":{}
  }
}
```

You may also consider an alternative way of calculating facets on hierarchical product structures. The Solr JSON Facet API, introduced by Yonik Seeley, allows us to solve the same task in a slightly different way. This is a new and really powerful feature that appeared in Solr 5.4. It provides a very flexible way to control the facets to be calculated. In particular, it makes it possible to calculate facets on child documents with aggregation by the unique _root_ field. For example, for the request:

```shell
curl http://localhost:8983/solr/collection1/query -d 'q=(type_s:child)&rows=0&
json.facet={
  colors:{
    type : terms,
    field : COLOR_s,
    facet: {
      productsCount: "unique(_root_)"
    }
  },
  sizes:{
    type : terms,
    field : SIZE_s,
    facet: {
      productsCount: "unique(_root_)"
    }
  }
}'
```

Solr returns a response that contains the expected counts (check the productsCount field):

```json
"facets":{
  "count":2,
  "colors":{
    "buckets":[
      {
        "val":"Blue",
        "count":1,
        "productsCount":1
      },
      {
        "val":"Red",
        "count":1,
        "productsCount":1
      }
    ]
  },
  "sizes":{
    "buckets":[
      {
        "val":"XL",
        "count":2,
        "productsCount":1
      }
    ]
  }
}
```

This method has obvious advantages: it’s easier to programmatically control which facets are to be calculated and how they should be presented in the response, to include statistics and nested facet commands, etc. The drawback here is that the search query in the above example, i.e. q=(type_s:child), yields child documents, while facets are calculated on parent ones. So in order to present parent documents in both search results and calculated facets, we need to execute two separate requests.

Another reason to choose one of the BlockJoinFacetComponents is better performance. In my local tests, BlockJoinDocSetFacetComponent was about twice as fast as the JSON Facet API.

BlockJoinFacetComponent can be further improved by replacing the iteration over matched child documents with bitwise operations over entire DocSets. The main idea here is to be able to obtain, for each term, a DocSet of matched parents with a particular term in child documents. In this case, the facet count of the term should correspond to the size of this DocSet.
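One possible reading of this idea is sketched below, again with java.util.BitSet standing in for DocSets. It is an assumption about how such an optimization might look, not an existing Solr implementation: the per-term child set, the names, and the child-to-parent mapping loop are all hypothetical.

```java
import java.util.BitSet;

/** Illustrative only: the facet count of a term as the size of its parent doc set. */
class TermParentDocSetSketch {

    static int parentCountForTerm(BitSet childrenWithTerm, BitSet matchedChildren, BitSet parentBits) {
        // Children that both carry the term and matched the query.
        BitSet hits = (BitSet) childrenWithTerm.clone();
        hits.and(matchedChildren);

        // Map the surviving children to their parents, one bit per block.
        BitSet parentsWithTerm = new BitSet();
        int child = hits.nextSetBit(0);
        while (child >= 0) {
            int parent = parentBits.nextSetBit(child + 1);
            if (parent < 0) {
                break; // malformed block: child without a following parent
            }
            parentsWithTerm.set(parent);
            // Jump past the rest of this block; further hits in it add nothing.
            child = hits.nextSetBit(parent + 1);
        }
        return parentsWithTerm.cardinality();
    }
}
```

In this sketch only the filtering step is a pure bitwise operation. The mapping from children to parents still walks the set bits, although it skips a whole block after the first hit in it.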