IoT Platform: A Starter Kit for AWS

The global market for the Internet of Things (IoT) is expected to reach $413.7 billion by 2031, according to a recent report published by Allied Market Research. The key industries driving this growth include manufacturing, supply chain and logistics, energy, and smart cities. The digital transformation of Industry 4.0, including the trend towards smart infrastructure, has been a significant driver of this growth. By integrating smart IoT systems, we can apply machine learning to implement smart maintenance, smart quality analysis, and even predictive and autonomous fixing protocols for potentially disruptive issues. The industries with the highest demand for artificial intelligence (AI) are described below.

- Factories with an IoT gateway: Typically, devices in manufacturing rely on a wide range of network and application protocols. As such, gathering data for third-party integration can be a challenge. To reduce overall complexity, the most popular approach is using an IoT gateway as a single data access point. Integrating with an IoT gateway encapsulates the complexity of edge interactions and lets the integration focus on enhancing the manufacturing system's existing functionality.

- Factories without an IoT gateway: In the case of manufacturing without an IoT gateway, edge data ingestion and inference can be a complex and expensive task. For example, when gathering data manually, you can only perform batch inference processing in the cloud. Nevertheless, machine learning brings value by leveraging existing processes and removing manual work.

- Energy industry: Wind energy is the most popular choice for green economic recovery. According to the Global Wind Energy Council (GWEC), wind power capacity has been growing rapidly over the years. Energy companies now face the challenge of managing and maintaining device fleets. What’s more, a commercial wind turbine costs around $3-4 million, leading to high demand for longer turbine lifetimes and lower operational costs.

- Smart cities: Using artificial intelligence for traffic management, detecting water supply anomalies, surveying crowd density, and measuring non-green energy usage enhances and optimizes city services. The primary source of data for these use cases is video feeds, which makes computer vision an integral machine learning approach at the edge site.

Building an IoT platform in AWS for the first time can be challenging due to the many available services, coupled with the complexities of integrating data collection, IoT device management, and Machine Learning (ML) platforms. Our AWS IoT Platform Starter Kit makes it quick and easy to provision the platform in the cloud, integrate it with on-premise facilities, and onboard business-critical applications such as predictive maintenance or visual quality control.

The AWS IoT Platform Starter Kit is published in the AWS Marketplace and can be found here.

Why is building an IoT platform a challenge?

An IoT platform is a complex and sophisticated system that requires multiple processes, such as ingestion, storage, processing, and analytics of data from edge devices. Modern smart platforms also require machine learning algorithms for anomaly detection, forecasting, classification, and computer vision at the edge and in the cloud. Companies must have a competent IT department with expertise in cloud service capabilities to successfully utilize these features, which can be especially challenging for critical systems that require low latency, robustness, and security. The complex solution can be broken down into three main architecture building blocks: an edge computing platform, a data platform, and a machine learning platform, each of which presents its own challenges.

- The data platform must be able to handle data ingestion from many geographically distributed sources using a variety of network protocols such as OPC-UA, MQTT, TCP/IP, and Modbus. Connecting IoT devices to the cloud services securely and reliably with an acceptable latency is a complex task, and it can be difficult to find the optimal solution. In addition to data ingestion, the data platform must also handle data processing and storage tasks as part of preparing the data for machine learning and visualization.

- The edge computing platform manages edge devices, including CI/CD for edge components, the runtime, edge processing, version control, and secure and reliable connectivity with the cloud.

- The machine learning platform covers model training, model delivery, and an inference layer. The inference logic can be located at the edge layer or in the cloud. Choosing the most appropriate machine learning approach for a particular task and implementing it requires advanced data science expertise.

Having implemented these parts, companies can achieve several operational benefits:

- Predictive maintenance analytics helps identify potential breakdowns before they can impact production systems. Manufacturing companies often lose up to 6% of revenue due to unplanned downtime, and medical device companies can lose approximately $50-150 per hour. Predictive maintenance can reduce maintenance costs, improve overall equipment effectiveness, and increase worker safety.

- Predictive quality analytics can help improve pattern investigation, predict future outcomes, and enhance product quality. Poor quality can lead to a loss of approximately 20% of a manufacturing company’s annual sales.

- Mitigating failure is a crucial part of manufacturing processes. A faster incident response can save money and prevent breakdowns, and it can be automated without human intervention.

- Visual quality control using computer vision models can detect product defects.

- Logistics tracking can improve supply chain management and prevent bottlenecks in critical supply chain networks.

The capabilities mentioned above are sophisticated and require the integration of data, ML, and IoT platforms to provide end-to-end functionality. AWS Cloud significantly reduces the effort required to build and integrate a system into the platform. In the following sections, we will discuss a solution using AWS Cloud that simplifies building a data platform, managing edge devices, and implementing machine learning at the edge and in the cloud layers.

Data ingestion at the edge layer

AWS Cloud provides a robust IoT ecosystem of services, including AWS IoT Greengrass and AWS IoT Core for data ingestion and processing, as well as data and machine learning services such as AWS Glue and Amazon SageMaker. These services can be used to build a complex IoT system with minimal effort to meet business goals.

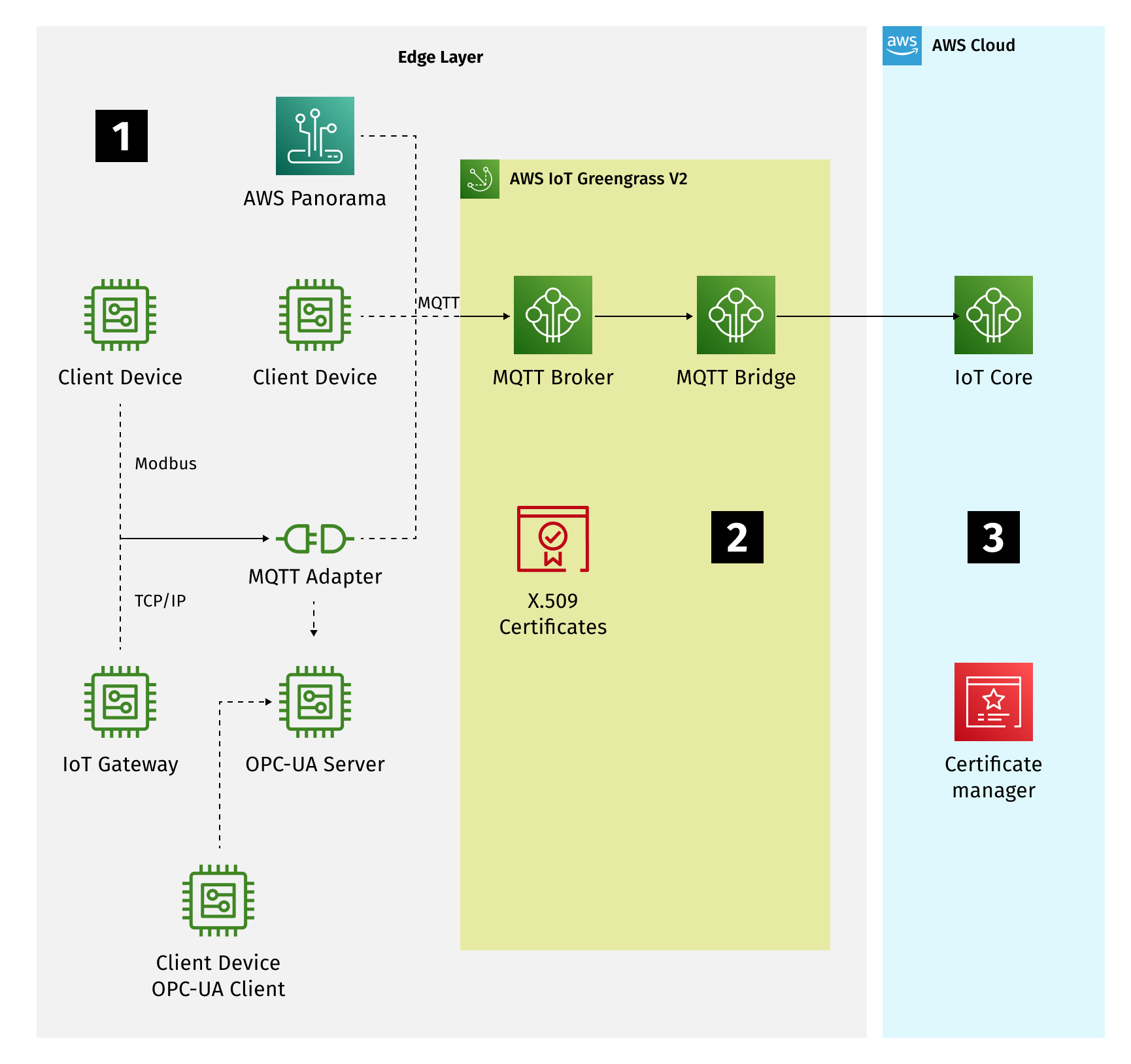

The most challenging tasks in this solution involve data ingestion from IoT devices and data processing logic at the edge layer. To address these tasks, AWS IoT Solutions provides a unified platform called AWS IoT Greengrass V2 for edge sites. It is a container for the AWS-provided and business-specific components written in Java, C++ or Python, and it includes an AWS-provided Java application that local engineers must install on the edge device.

The primary method for collecting data from IoT devices to AWS Greengrass is to run the AWS MQTT Broker component in AWS Greengrass and transfer data from the IoT devices via MQTT. In this case, local engineers are responsible for adapting the devices’ protocols to MQTT. After data collection, edge processing tasks such as aggregation, filtering, buffering, and machine learning inference can be performed within AWS Greengrass components.
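As a rough illustration of the adaptation step, the sketch below maps a raw device reading to an MQTT topic and a JSON payload. The `Reading` type, the topic layout (`factory/<site>/<device>/telemetry`), and the payload field names are assumptions made for this example and are not part of any AWS API. The actual publish call is left to whichever MQTT client the device or gateway already uses.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Reading:
    """One raw measurement taken from a device (hypothetical shape)."""
    site: str
    device_id: str
    metric: str
    value: float
    timestamp_ms: int


def to_mqtt_message(reading: Reading) -> Tuple[str, bytes]:
    """Translate a device reading into an (MQTT topic, JSON payload) pair."""
    # Hypothetical topic layout: one telemetry topic per device.
    topic = f"factory/{reading.site}/{reading.device_id}/telemetry"
    payload = json.dumps(
        {
            "metric": reading.metric,
            "value": reading.value,
            "ts": reading.timestamp_ms,
        },
        separators=(",", ":"),  # compact encoding to keep messages small
    ).encode("utf-8")
    return topic, payload
```

Keeping the translation in a pure function like this makes the adapter easy to test without a broker, whatever the source protocol happens to be.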

In terms of security, the AWS IoT ecosystem supports zero trust by default. As such, IoT Greengrass components establish trust through authentication using X.509 certificates, security tokens, and custom authorizers. All communication between client devices, Greengrass core devices, and IoT Core is secured by TLS 1.2.
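On the client side, the TLS 1.2 and X.509 requirements could be enforced with something like the following sketch, which uses only Python's standard `ssl` module. The function name and the file-path parameters are hypothetical; how certificates are provisioned to a device depends on the deployment.

```python
import ssl
from typing import Optional


def build_tls_context(ca_file: Optional[str] = None,
                      cert_file: Optional[str] = None,
                      key_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a client-side TLS context that refuses anything below TLS 1.2."""
    # Verifies the server certificate against ca_file (or the system store).
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        # Present the device's own X.509 certificate for mutual authentication.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

The resulting context can be handed to most Python MQTT or socket clients that accept an `ssl.SSLContext`.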

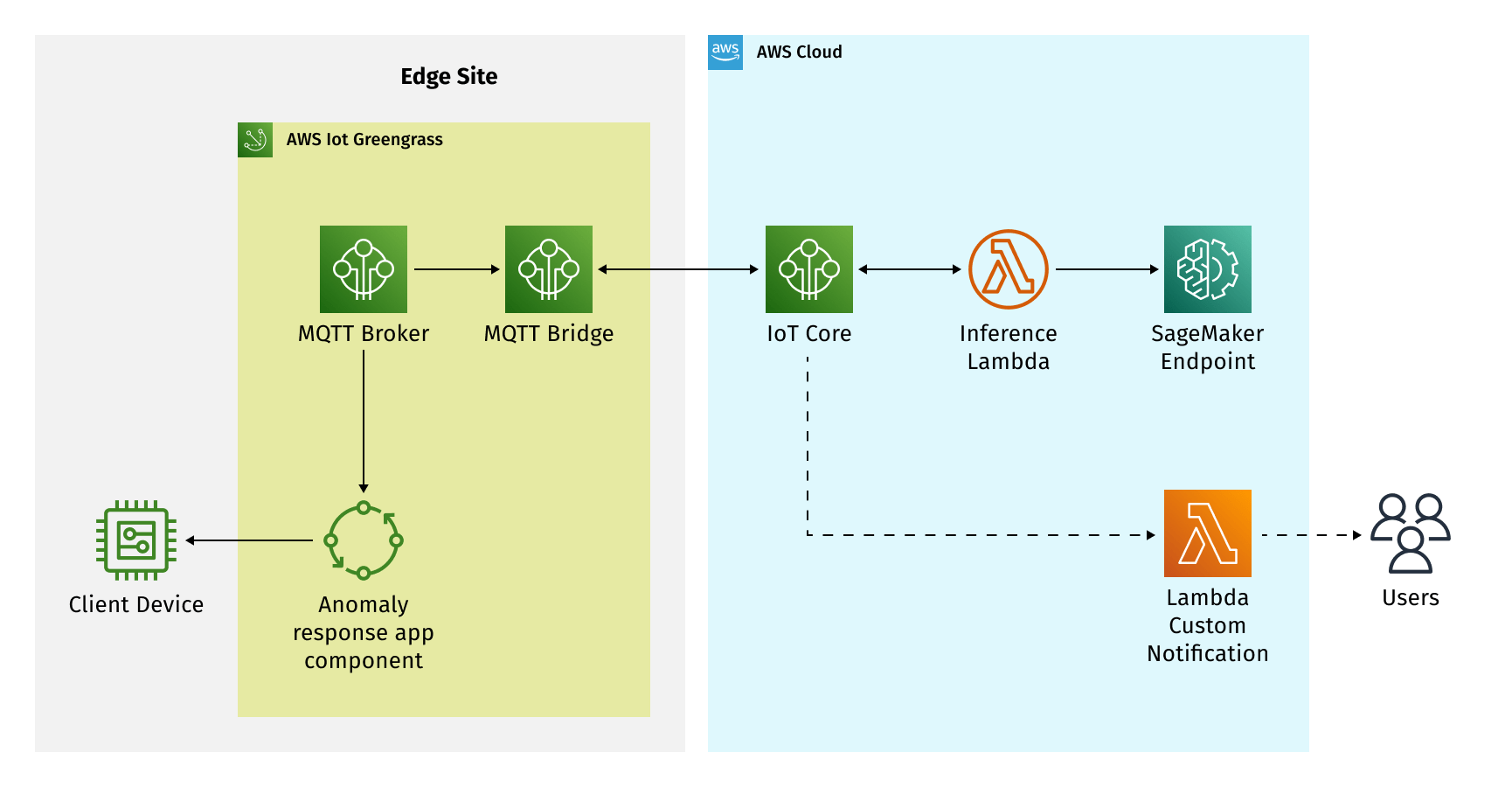

This is conveyed in Figure 1-1 below.

Further on, we cover each stage of data ingestion.

- The connection between client devices and the MQTT Broker Greengrass component requires using MQTT as transport, which involves some effort in writing adapters. However, this approach allows us to encapsulate all business-specific protocols, and focus the Greengrass components on edge processing tasks.

Manufacturers often use IoT gateways to gather device data from on-premise infrastructure, so a focus on adapting this data to the MQTT Broker-specific format is important.

Another popular approach to gathering data is using OPC-UA servers, where an adapter application is implemented to stream the gathered device data to the MQTT Broker.

AWS IoT Solutions also provides an edge device specifically for video feeds called AWS Panorama. It enables capturing video streams, applying machine learning algorithms at the edge site, and sending the results via MQTT. This can be used to effectively apply artificial intelligence to evaluate manufacturing quality, improve supply chain logistics, and support smart cities.

- The AWS IoT Ecosystem provides the components for hosting MQTT Brokers, such as the Moquette MQTT 3.1.1 and the EMQX MQTT 5.0 broker components. Connections to these brokers must only be established using TLS 1.2 in accordance with zero-trust principles.

- Finally, the selected MQTT topics are routed to IoT Core using the MQTT Bridge component.
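To make the last stage more concrete, here is a small sketch of a topic mapping in the shape we understand the MQTT Bridge component's `mqttTopicMapping` configuration to take (a topic filter plus a source and a target), together with a helper that approximates MQTT topic-filter matching. The mapping name and the topic layout are hypothetical, and the matcher is a simplification that ignores special cases such as `$`-prefixed topics; the component documentation remains the authority on the exact configuration.

```python
# Hypothetical mapping: relay device telemetry from the local broker to IoT Core.
BRIDGE_CONFIG = {
    "mqttTopicMapping": {
        "TelemetryToCloud": {
            "topic": "factory/+/+/telemetry",
            "source": "LocalMqtt",
            "target": "IotCore",
        }
    }
}


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if a topic matches a filter using the + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches everything from here on.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


def is_bridged(topic: str) -> bool:
    """Check whether any configured mapping would relay the given topic."""
    mappings = BRIDGE_CONFIG["mqttTopicMapping"].values()
    return any(topic_matches(m["topic"], topic) for m in mappings)
```

A helper like this is mainly useful in tests, to confirm that only the intended topics leave the edge site.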

Data processing in the cloud

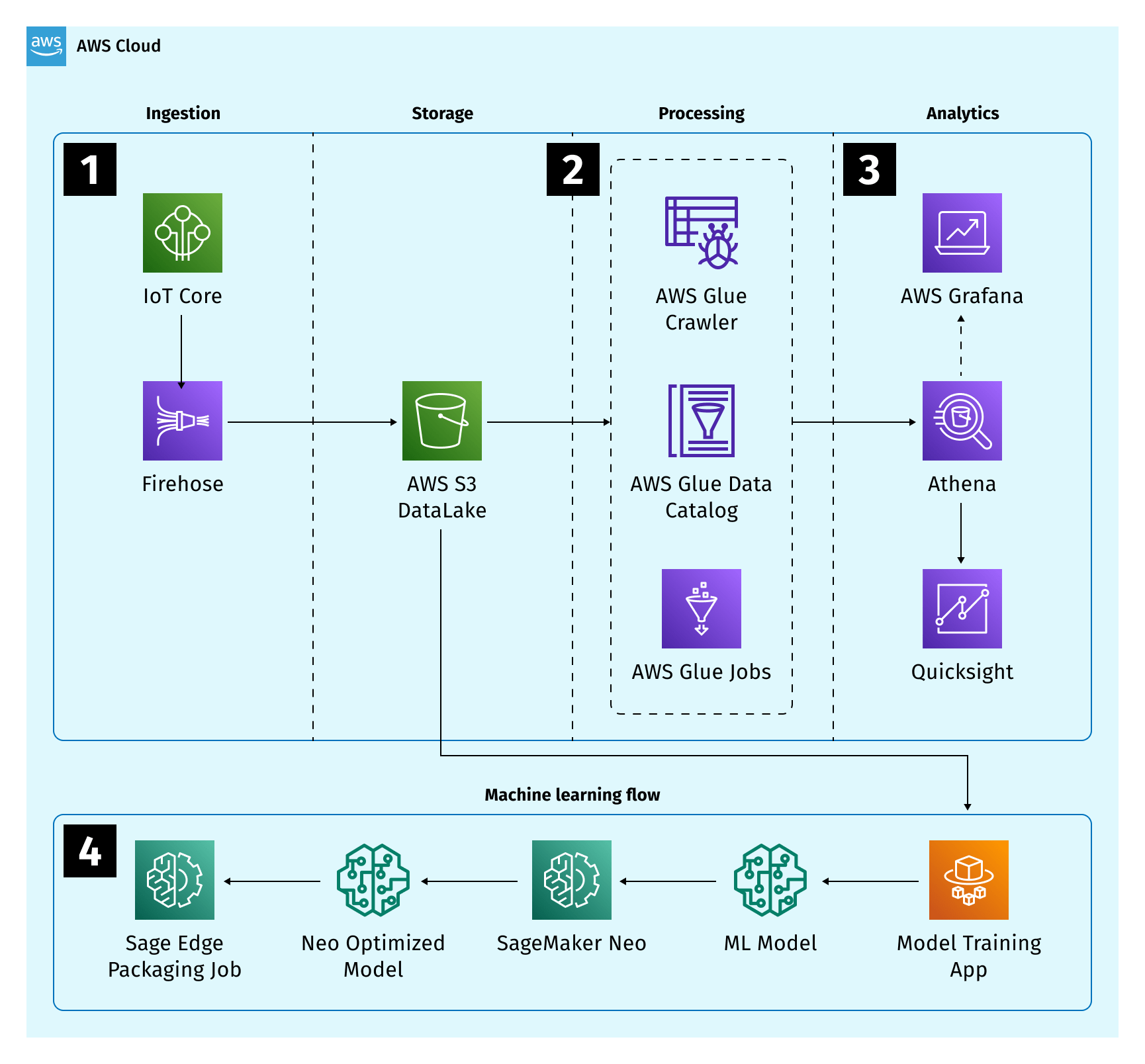

After data are ingested from the edge layer, the next tasks are storing and processing the data, and implementing machine learning models.

- After ingesting device events from the edge site, we can use Amazon Data Firehose to accumulate the events into batches, with each batch covering at least one minute of data, and store them in an appropriate format such as Parquet or ORC. It is advisable to process data at the edge layer as much as possible before sending it to the cloud.

- Data processing and storage are important components of a data platform and are necessary for preparing data for training machine learning models and visual quality control. We can use AWS Glue to build an ETL pipeline and use S3 as a storage system to implement this functionality.

- For visualization, we have at least two options: the business intelligence tool Amazon QuickSight, and Amazon Managed Grafana, a managed service for the open-source visualization tool Grafana.

- Once the data is prepared for machine learning, we can use Amazon SageMaker components to train models. The pipeline is scalable and can handle hundreds of models per device type.

We can use options such as the SageMaker API with AWS Lambda, SageMaker Pipelines, or any business-specific method for model training.

SageMaker also provides a component for model compilation, which optimizes the model for a specific platform or device type using the SageMaker Neo compiler. Finally, IoT Core packages the compiled model for deployment in the edge layer.
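The batching idea from the ingestion step can be pictured with a small buffer that flushes when either a record count or an age limit is reached. This is only a conceptual sketch: the managed delivery service performs its own buffering, and the class name, limits, and `flush` callback below are all hypothetical. The same pattern can also be applied in an edge component to reduce the number of messages sent to the cloud.

```python
import time
from typing import Callable, List, Optional


class BatchBuffer:
    """Collect records and flush them when a size or age limit is reached."""

    def __init__(self, flush: Callable[[List[dict]], None],
                 max_records: int = 500, max_age_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._flush = flush
        self._max_records = max_records
        self._max_age_s = max_age_s
        self._clock = clock
        self._records: List[dict] = []
        self._opened_at: Optional[float] = None  # time the current batch started

    def add(self, record: dict) -> None:
        now = self._clock()
        if self._opened_at is None:
            self._opened_at = now
        self._records.append(record)
        # The age limit is only checked when a record arrives (a simplification).
        if (len(self._records) >= self._max_records
                or now - self._opened_at >= self._max_age_s):
            self.flush()

    def flush(self) -> None:
        if self._records:
            self._flush(self._records)
        self._records = []
        self._opened_at = None
```

Injecting the clock keeps the time-based behaviour testable without waiting for real time to pass.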

The AWS data platform described above allows us to build a fully automated pipeline for continuous learning and timely improvement of machine learning models. After training the model, the next step is implementing the inference logic and edge processing.

Inference at the edge layer

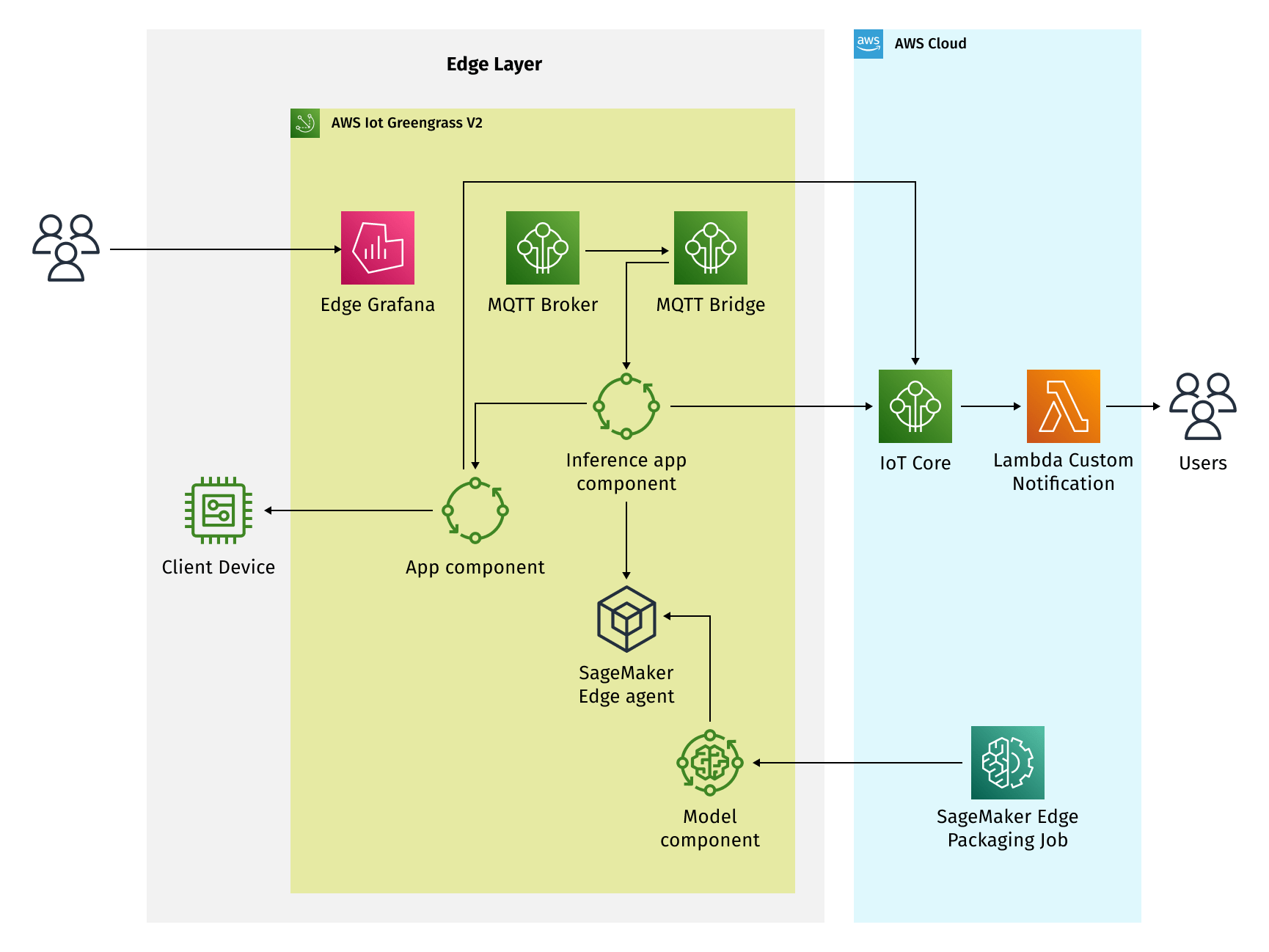

AWS IoT Greengrass also allows developers to build machine learning inference at the edge site with minimal effort using AWS-provided components such as the SageMaker Model Component and the SageMaker Edge Agent, also known as the inference engine. However, the business-specific logic still has to be implemented and encapsulated in two custom components: the inference app component and the app component.

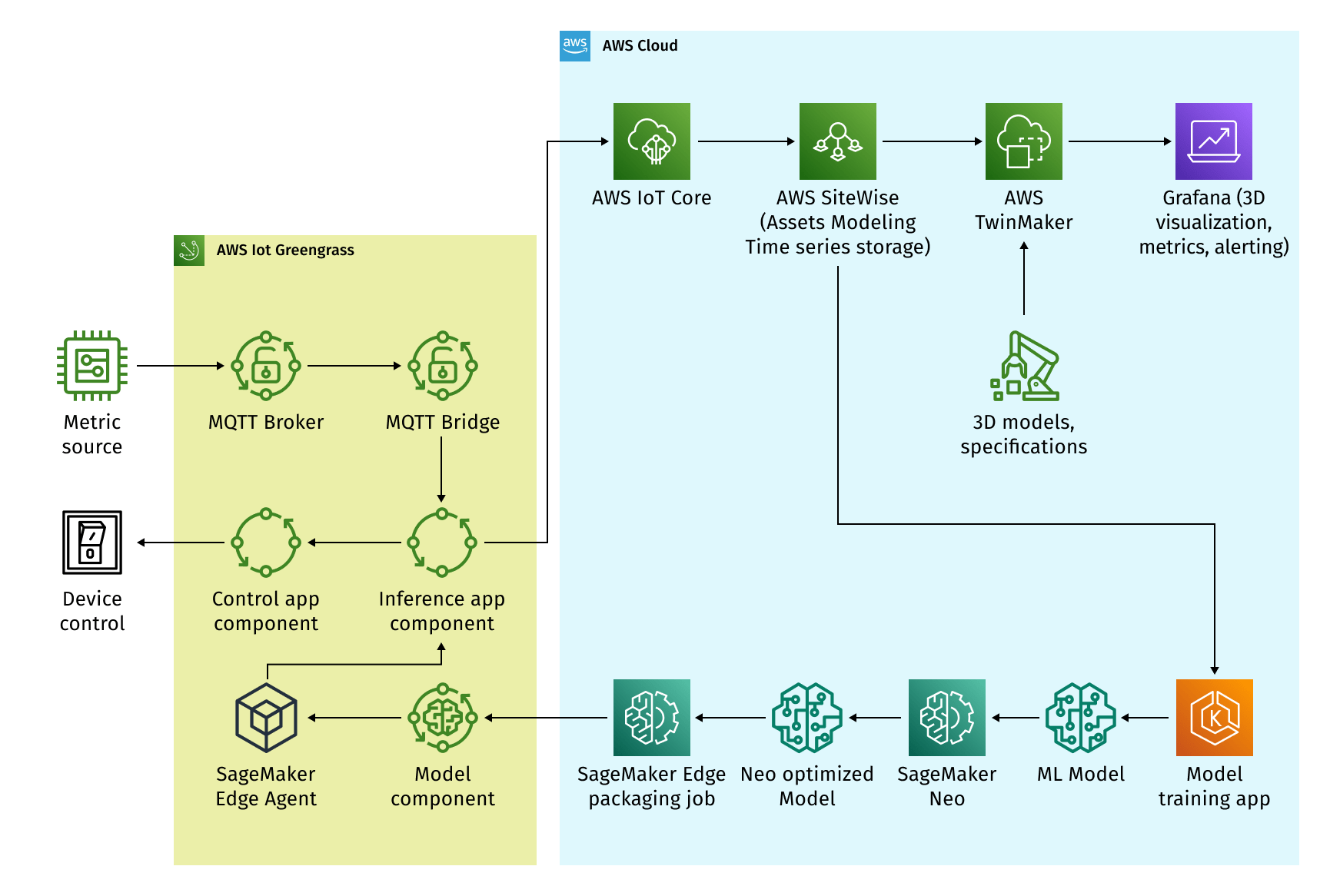

See Figure 1-3 below.

Once we have trained a new machine learning model, compiled it into an optimized build for an IoT device, and deployed it as a new version of the model component, the SageMaker Edge Agent can load the new version and use it for inference without any manual work.

The core logic at the edge site is in the inference app component, which reads data from the AWS IoT Greengrass Core IPC service (local pubsub), processes the data sample, and invokes the SageMaker Edge Agent API to perform edge inference. This leads to secure and reliable edge processing for critical tasks such as anomaly detection, visual quality control, and logistics tracking, and it can also off-load cloud services and save costs.

We can also use the received values to provide edge analytics with AWS Greengrass community components, such as Grafana components.

The responsibilities of the inference app components include:

- Listening to local PubSub;

- Preprocessing data to build an input sample for inference;

- Interpreting the inference result; and

- Performing edge processing before sending the data to AWS IoT Core.
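The responsibilities listed above could be wired together roughly as in the following sketch. The `predict` callable stands in for the call to the inference engine and `publish` for the local pubsub or IoT Core publish call; both are hypothetical placeholders rather than AWS APIs, as are the mean-centering step and the 0.8 threshold.

```python
from typing import Callable, Dict, List, Sequence


def preprocess(window: Sequence[float]) -> List[float]:
    """Center a window of raw readings on zero (hypothetical feature step)."""
    mean = sum(window) / len(window)
    return [x - mean for x in window]


def interpret(score: float, threshold: float = 0.8) -> Dict[str, object]:
    """Turn a raw model score into a decision other components can act on."""
    return {"score": score, "anomaly": score >= threshold}


def handle_message(window: Sequence[float],
                   predict: Callable[[List[float]], float],
                   publish: Callable[[Dict[str, object]], None]) -> None:
    """Run one message through preprocess -> inference -> interpret -> publish."""
    sample = preprocess(window)   # build the input sample
    score = predict(sample)       # placeholder for the inference engine call
    publish(interpret(score))     # hand the interpreted result onwards
```

In a real component, `handle_message` would be invoked from the subscription callback of the local pubsub topic.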

To optimize performance, various techniques are used:

- Implementing the component in low-overhead languages like C++;

- Reducing network communications by executing inference without the SageMaker Edge Agent;

- Using a faster machine learning model;

- Applying batching to the input samples; or

- Upgrading the hardware.

The AWS-provided inference engine supports both CPU-based and GPU-based inference, for example on NVIDIA Jetson devices.

Overall, the benefits of using inference at the edge site include:

- Increased throughput due to local connections;

- Low latency due to the close proximity of computing units; and

- Increased security due to the lack of outbound traffic from the local network during critical processes.

To implement edge processing of the result, we create the app component, another AWS Greengrass component for the edge site. Its primary responsibilities include:

- Listening to local PubSub;

- Choosing the appropriate processing for specific results;

- Communicating with IoT device management systems; and

- Notifying users about the executed commands through IoT Core.

Notifications from AWS IoT Core can be sent to AWS Lambda, Amazon SNS, Amazon CloudWatch, or even an HTTP endpoint.
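One simple way to structure the app component's "choose the appropriate processing" step is a dispatch table keyed by result type, as sketched below. The result types, the handlers, and the command strings are invented for illustration; a real component would call the device management system and publish through IoT Core where this sketch uses the `notify` callback.

```python
from typing import Callable, Dict, Optional

Handler = Callable[[dict], str]


def stop_machine(result: dict) -> str:
    """Hypothetical command issued when an anomaly is reported."""
    return f"stop:{result['device_id']}"


def reject_item(result: dict) -> str:
    """Hypothetical command issued when a visual defect is reported."""
    return f"reject:{result['device_id']}"


# Maps an inference result type to the processing it should trigger.
HANDLERS: Dict[str, Handler] = {
    "anomaly": stop_machine,
    "defect": reject_item,
}


def process_result(result: dict,
                   notify: Callable[[str], None]) -> Optional[str]:
    """Pick the handler for a result, run it, and report the executed command."""
    handler = HANDLERS.get(result["type"])
    if handler is None:
        return None  # unknown result types are ignored in this sketch
    command = handler(result)
    notify(command)
    return command
```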

While this is a powerful solution, it is also complex and requires a deep understanding of edge computing. Therefore, it may be easier to implement an approach based on inference in the cloud.

Inference in the cloud

Machine learning inference can be easily implemented using serverless technology with AWS Lambda and Amazon SageMaker endpoints. This approach is especially useful for quickly prototyping and testing new models, as they can be easily deployed to SageMaker endpoints after training. However, this solution does have some drawbacks, including increased latency and added security challenges. If the channel between the edge and IoT Core is compromised, it can lead to incorrect decisions about anomalies. The revised architecture is illustrated in Figure 1-4.

In this design, the logic from the inference app component is implemented in the Inference Lambda function. The responsibilities of this function include:

- Preprocessing data to create an input sample for inference;

- Performing the actual inference;

- Sending the results to IoT Core.
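A minimal sketch of such a function is shown below, assuming the endpoint accepts a CSV row and returns a single numeric score as text. The endpoint name, the result topic, and the event shape are hypothetical. The boto3 clients are passed in so the three steps can be exercised without AWS access.

```python
import json
from typing import Any, Dict

ENDPOINT_NAME = "anomaly-detector"          # hypothetical endpoint name
RESULT_TOPIC = "factory/inference/results"  # hypothetical MQTT topic


def run_inference(event: Dict[str, Any], sm_runtime: Any,
                  iot_data: Any) -> Dict[str, Any]:
    """Preprocess the event, call the endpoint, and publish the result."""
    # 1. Preprocess: build a CSV input sample from the readings.
    sample = ",".join(str(v) for v in event["readings"]).encode("utf-8")
    # 2. Inference: invoke the SageMaker endpoint.
    response = sm_runtime.invoke_endpoint(
        EndpointName=ENDPOINT_NAME, ContentType="text/csv", Body=sample)
    # Assumes the model responds with one number encoded as text.
    score = float(response["Body"].read().decode("utf-8"))
    # 3. Send the result to IoT Core.
    result = {"device_id": event["device_id"], "score": score}
    iot_data.publish(topic=RESULT_TOPIC, qos=1,
                     payload=json.dumps(result).encode("utf-8"))
    return result


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda entry point; creates real clients and delegates to run_inference."""
    import boto3  # imported here so run_inference stays testable offline
    return run_inference(event,
                         boto3.client("sagemaker-runtime"),
                         boto3.client("iot-data"))
```

In practice the clients would usually be created once at module load time rather than per invocation; they are created inside the handler here only to keep the sketch importable without boto3.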

This fully-managed solution offers several benefits, including simplicity, maintainability, and ease of integration with third-party tools. All of these factors make it a strong consideration for our approach.

Adoption of AWS IoT for manufacturing

An IoT platform for manufacturers based on AWS technologies can be built using advanced AWS services, such as AWS IoT SiteWise and AWS IoT TwinMaker with the TwinMaker Knowledge Graph.

The integration of the described services takes place on the data platform layer, as shown in Figure 1-5.

AWS IoT Core routes messages to AWS IoT SiteWise using AWS IoT Rules. AWS IoT SiteWise then stores the ingested time series data as a hierarchy of industrial assets. The service also processes the data to calculate important metrics, giving us the ability to evaluate the reliability and efficiency of equipment in real time.

Each asset relates to a certain physical device that can be represented as a digital twin. A digital twin is a virtual representation of industrial equipment that enhances monitoring, maintenance, and simulation. With the use of AWS IoT TwinMaker, we can associate data, equipment specifications, 3D models of real-world devices, and even rooms to create immersive digital twins.

One potential challenge of adopting this technology is the difficulty of creating 3D models of real-world rooms and equipment in manufacturing facilities. However, we can overcome this challenge by utilizing various cameras and CAD systems to capture 3D space and accurately model real-world environments.

To summarize, the proposed solution addresses several key challenges in the manufacturing industry, such as:

- Modeling industrial assets using data streams from industrial equipment;

- Calculating industrial metrics, such as mean time between failures (MTBF) and overall equipment effectiveness (OEE), in real time;

- Building digital twins with comprehensive monitoring capabilities, like real-time Grafana dashboards with 3D visualization of industrial equipment, assets, metrics, and alerts; and

- Visualizing the physical or logical relationships between assets using the AWS IoT TwinMaker Knowledge Graph.
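For reference, the two metrics named in the list above follow standard formulas: MTBF is operating time divided by the number of failures, and OEE is the product of availability, performance, and quality. The sketch below shows the arithmetic in plain Python; the function and parameter names are our own, and on the platform itself these values would be defined as metrics on the asset model rather than computed in application code.

```python
def mtbf_hours(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time divided by failure count."""
    if failures <= 0:
        raise ValueError("MTBF is undefined without at least one failure")
    return operating_hours / failures


def oee(planned_time: float, run_time: float, ideal_cycle_time: float,
        total_count: int, good_count: int) -> float:
    """Overall equipment effectiveness = availability * performance * quality.

    Times must share one unit (for example minutes); ideal_cycle_time is the
    ideal time needed to produce a single unit.
    """
    availability = run_time / planned_time
    performance = (ideal_cycle_time * total_count) / run_time
    quality = good_count / total_count
    return availability * performance * quality
```

For example, a 480-minute shift with 400 minutes of run time, an ideal cycle time of 0.5 minutes, and 665 good units out of 700 gives an availability of about 0.83, a performance of 0.875, and a quality of 0.95, for an OEE of roughly 0.69.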

Although this system is complex and requires integration between manufacturing facilities, the cloud, and applications, a well-designed end-to-end system is an essential starting point for integration. It may be complex, but it is a crucial part of digital transformation, and is likely to become an industry standard in the not-so-distant future.

AWS IoT Platform Starter Kit: Conclusion

The digital transformation of modern enterprises often involves integrating on-premise facilities with cloud-native or cloud-agnostic solutions. This process can be challenging and may require a partner who can avoid over-engineering and provide industry-standard solutions. The benefits of cloud solutions, including a lower TCO and a faster time-to-market, should be considered in the initial design, with a clear plan for how they will be achieved.

Interested in the AWS IoT Platform Starter Kit? Get in touch with us to start a conversation.