Istio Service Mesh Beyond Kubernetes

Cloud migration has been a hot topic for many years, but for many organizations it is far from complete. According to a recent Forrester State of Public Cloud Migration report, about two-thirds of firms plan to move their workloads to public clouds in the next 12 months.

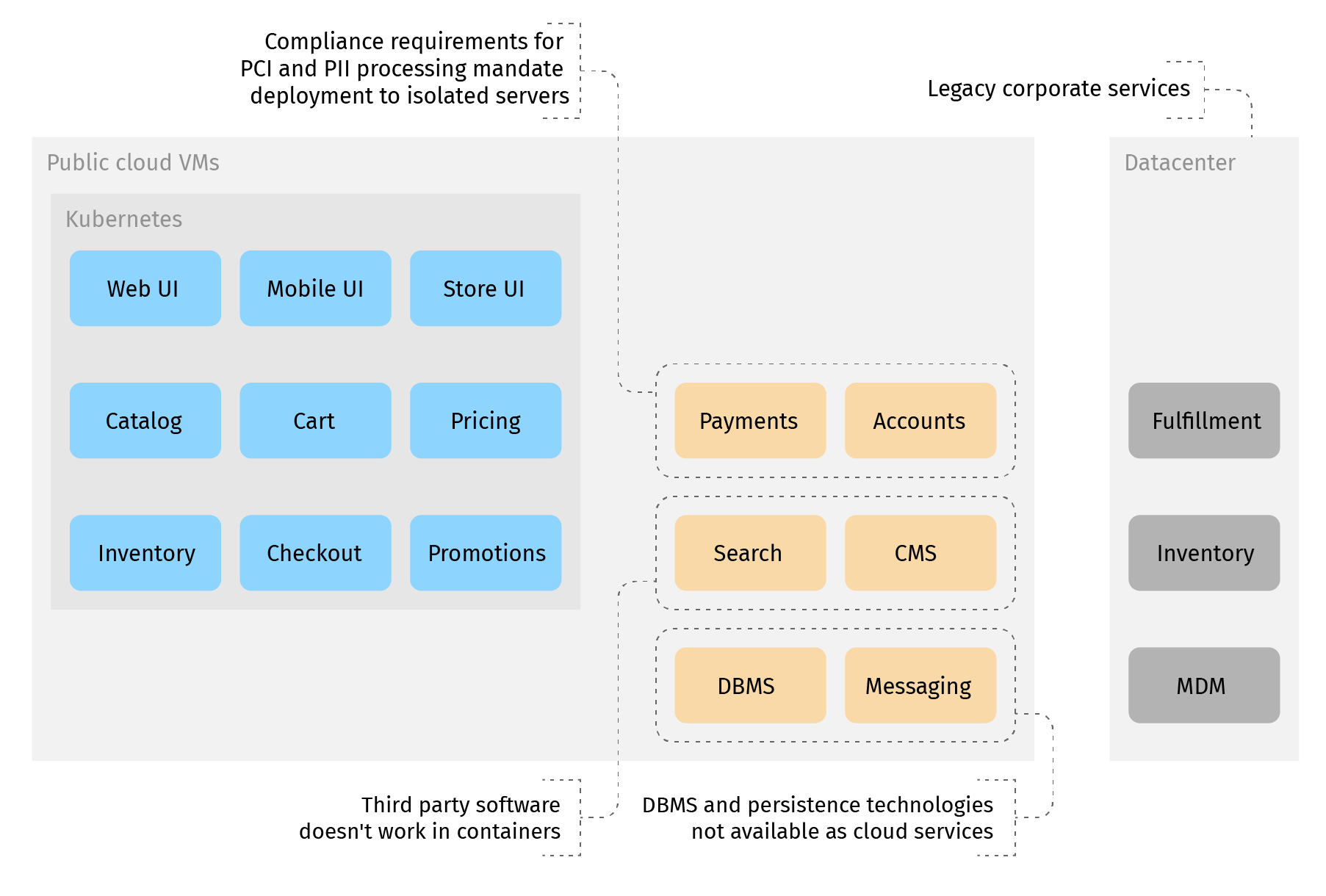

When we design a cloud migration of an enterprise application system, we usually find that some of its parts can be safely moved to Kubernetes. But many others cannot - either because they need too much rework, because they are stateful, or because of entangled interactions. Custom persistence services (DBMS, file management services, messaging services) have to be retained because too many things depend on them. So a large chunk of the application system has to go to VMs.

Service mesh is a class of networking middleware helping with such an evolution. Istio is the default choice of service mesh technology because it is the best known and because currently there are no other options that are as feature-rich and future-proof. But it doesn't mean that Istio is perfect or can solve every problem that is thrown at it.

A mix of Kubernetes workloads and VMs is how a migration usually plays out, and that is quite different from the typical Istio service mesh demonstrations.

Service mesh across K8s and VMs - conceptual model

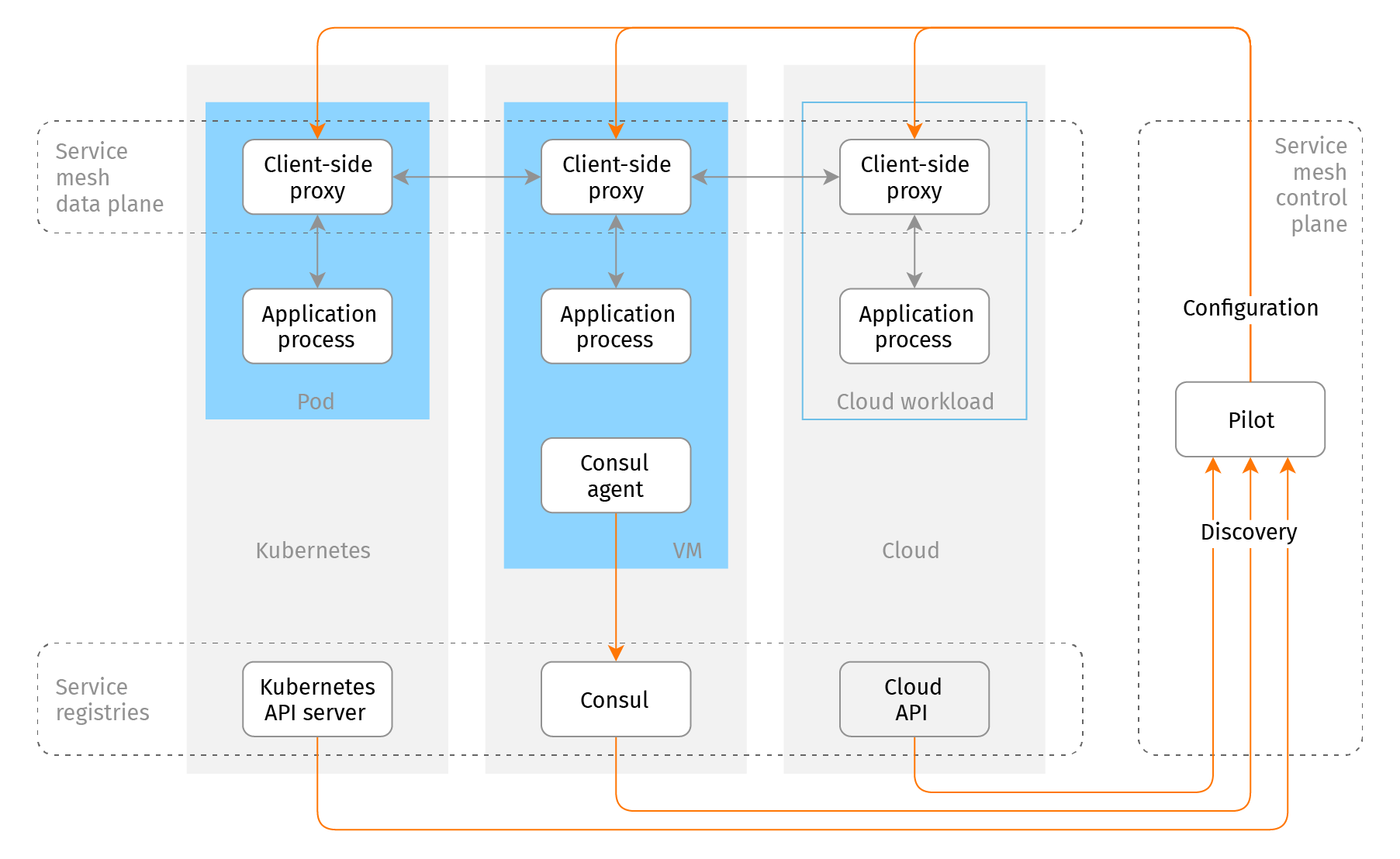

The main pieces of service mesh networking middleware are transport (referred to as "data plane") and configuration (or "control plane") facilities. The data plane is uniform - it consists of client-side proxies for every running unit of the application. The proxies communicate with each other and are agnostic to the actual runtime environment - whether it is a Kubernetes cluster, a mixture of VMs, or physical machines. But the control plane depends heavily on the environment because it configures the data plane according to the system layout.

Roughly speaking, the control plane can be represented as a combination of a service registry control circuit and a configuration service. The service registry (or registries) aggregates the information about the application deployment, while the configuration service prepares routing rules for the data plane proxies.

What is a service registry? For the needs of a service mesh (and service discovery in general), it's a facility with an API that maintains a mapping from application services to their network endpoints. To be useful, a service registry should be (almost) real-time, work for reasonably big deployments, handle updates efficiently, and support custom metadata for services and endpoints. For example:

- Kubernetes implements a service registry for Kubernetes services

- HashiCorp Consul implements a service registry for any services that either register with it or have a Consul agent

- Certain proprietary cloud APIs may be utilized as a service registry for services running in the cloud
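To make the definition above concrete, here is a minimal in-memory sketch of such a mapping. It is not how Kubernetes or Consul implement their registries; the `ServiceRegistry` and `Endpoint` names, the `version` counter, and the sample addresses are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Endpoint:
    address: str
    port: int
    # Custom metadata kept as sorted (key, value) pairs so the endpoint stays hashable.
    metadata: Tuple[Tuple[str, str], ...] = ()


class ServiceRegistry:
    """Hypothetical registry: maps a service name to its set of network endpoints."""

    def __init__(self) -> None:
        self._services: Dict[str, Set[Endpoint]] = {}
        self.version = 0  # bumped on every change so a watcher can notice updates

    def register(self, service: str, address: str, port: int, **metadata: str) -> Endpoint:
        endpoint = Endpoint(address, port, tuple(sorted(metadata.items())))
        self._services.setdefault(service, set()).add(endpoint)
        self.version += 1
        return endpoint

    def deregister(self, service: str, endpoint: Endpoint) -> None:
        self._services.get(service, set()).discard(endpoint)
        self.version += 1

    def lookup(self, service: str) -> List[Endpoint]:
        # Sorted so callers get a stable order regardless of set iteration order.
        return sorted(self._services.get(service, set()), key=lambda e: (e.address, e.port))


registry = ServiceRegistry()
primary = registry.register("orders-db", "10.0.1.15", 5432, zone="zone-a")
registry.register("orders-db", "10.0.1.16", 5432, zone="zone-b")
registry.deregister("orders-db", primary)  # e.g. the VM was terminated
print(registry.lookup("orders-db"))
```

A production registry adds what this sketch leaves out: persistence, health checking, and a way to push changes to consumers instead of having them poll `version`.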

DNS looks like a service registry because its SRV records map services to network endpoints. But DNS is not real-time, cannot handle either reasonably large deployments or massive updates, and does not support service metadata. Any one of these limitations excludes DNS from the list of service registry implementations.

The service mesh configuration facility should query the service registries, aggregate the information about services and their endpoints, apply operator-defined rules, and supply configurations for the data plane proxies. For service mesh implementations using Envoy proxy (most of the active and popular service mesh toolkits do), the configuration facility exposes the configuration data via the xDS protocol family. Specifically, this is done by CDS/EDS (Cluster and Endpoint Discovery Services) protocols.
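The aggregation step described above can be sketched as follows. This is an illustration under assumptions, not Istio's implementation: the `aggregate` and `to_cluster_load_assignment` helpers and the two sample sources are hypothetical, and the output only mirrors the JSON shape of Envoy's `ClusterLoadAssignment` message that EDS delivers, without speaking the xDS protocol itself.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple

EndpointList = List[Tuple[str, int]]
# A registry source is any callable returning {service_name: [(address, port), ...]}.
RegistrySource = Callable[[], Dict[str, EndpointList]]


def aggregate(sources: Iterable[RegistrySource]) -> Dict[str, EndpointList]:
    """Merge endpoints from all registries, de-duplicating per service."""
    merged: Dict[str, Set[Tuple[str, int]]] = {}
    for source in sources:
        for service, endpoints in source().items():
            merged.setdefault(service, set()).update(endpoints)
    return {service: sorted(eps) for service, eps in merged.items()}


def to_cluster_load_assignment(service: str, endpoints: EndpointList) -> dict:
    """Shape the endpoints like the JSON form of Envoy's ClusterLoadAssignment."""
    return {
        "cluster_name": service,
        "endpoints": [{
            "lb_endpoints": [
                {"endpoint": {"address": {"socket_address": {
                    "address": address, "port_value": port}}}}
                for address, port in endpoints
            ]
        }],
    }


# Two hypothetical sources: one standing in for Kubernetes, one for a VM registry.
def kubernetes_source() -> Dict[str, EndpointList]:
    return {"web": [("10.4.0.7", 8080)]}


def vm_source() -> Dict[str, EndpointList]:
    return {"orders-db": [("10.0.1.15", 5432), ("10.0.1.16", 5432)]}


snapshot = aggregate([kubernetes_source, vm_source])
for name, eps in snapshot.items():
    print(to_cluster_load_assignment(name, eps))
```

Operator-defined rules (traffic splitting, retries, mTLS policy) would be applied between the two steps; they are omitted here to keep the data flow visible.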

A real application deployment runs some things on Kubernetes and some on VMs that will be migrated to Kubernetes at a later time, while others are still sitting in an on-premise datacenter. For such a mixed setup we would like to use Kubernetes for Kubernetes service discovery and Consul for services on cloud VMs and physical machines. Not so long ago, Istio seemed to be doing exactly that:

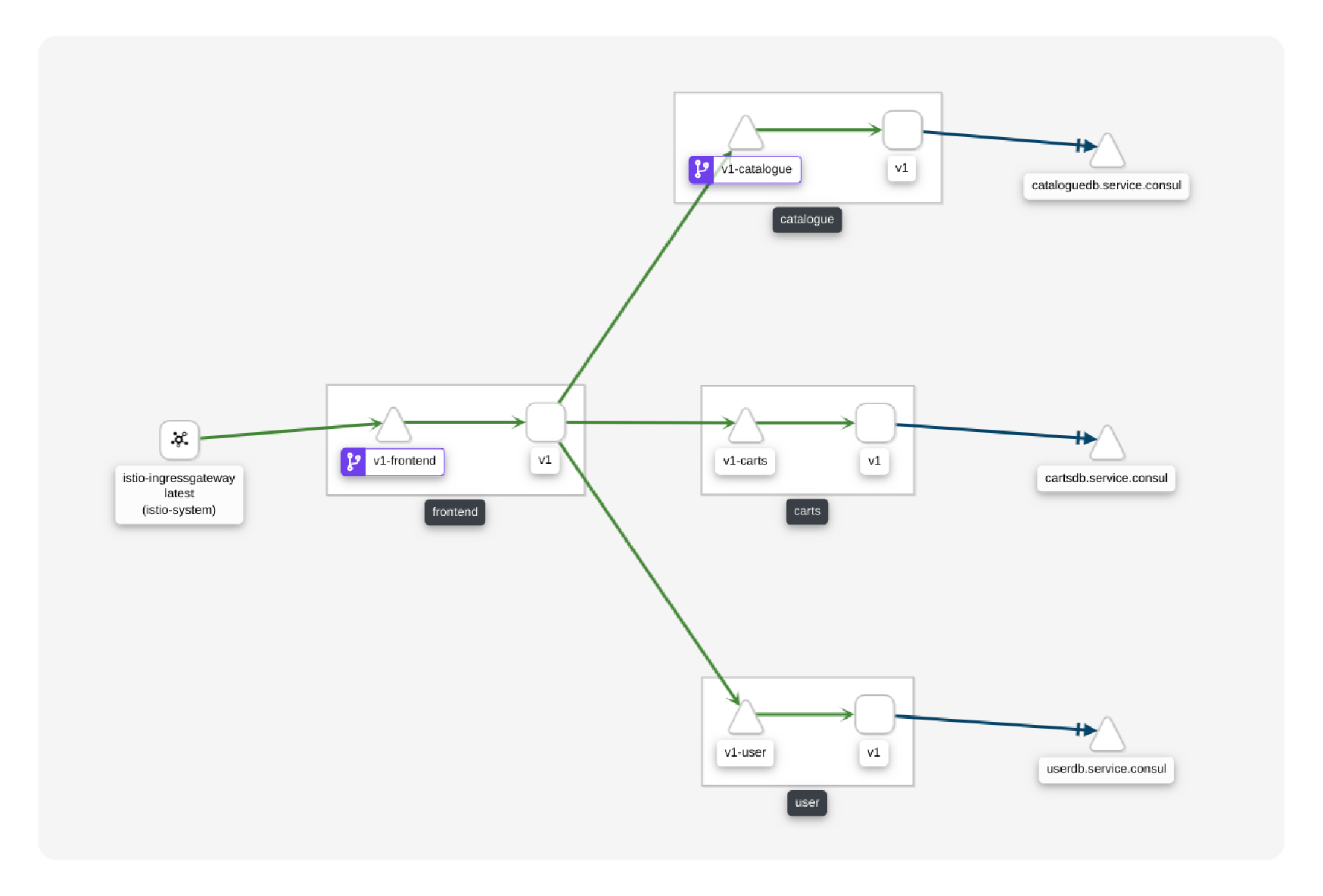

Istio service mesh connecting services on Kubernetes and DBMS on VMs (a sample application, Istio 1.6, screenshot of Kiali dashboard).

Unfortunately, it was just a prototype of the desired functionality. In Istio 1.3 the documentation about the Consul connection was removed, and the remaining Consul support was removed from the source code in Istio 1.8.

How Istio actually works at VMs

Let's see what is actually available in the Istio service mesh toolkit.

The Istio data plane implementation for standalone Linux machines is in pretty good shape. The core of the data plane consists of Envoy reverse proxies. Envoy is a regular piece of networking software. Lyft, the company that originally developed Envoy, had run it in production for a while before it became the core of Istio. By itself, Envoy only communicates with the service mesh control plane via the xDS protocols and isn't aware of the exact environment - whether it is Kubernetes or not. Everything it needs (such as TLS certificates and routing configuration) it gets from the control plane service. But to connect to the control plane services, Envoy has to do a few things first - bootstrap mTLS and authenticate itself. This has to be done out of process, by calls to an API server, which is usually the Kubernetes API server hosting the Istio control plane. A set of shell scripts and the `pilot-agent` utility do the job. Everything (Envoy proxy, bootstrapping tools, and systemd configuration) is shipped as standard Debian and Red Hat packages. The packages have only basic dependencies, don't overlap with other tools, and can be installed on almost any DEB- or RPM-based Linux OS.

The missing part is authentication to the API server (the Kubernetes API server). Because a standalone machine isn't a part of the Kubernetes cluster, it doesn't have Kubernetes credentials out of the box. The operator has to provision them somehow at machine start time, because Istio doesn't handle this case. However, it can be automated using an external credentials management service such as HashiCorp Vault.

So once the Envoy reverse proxy is started and configured on a VM or a physical Linux machine, it will intercept the outgoing traffic and route it according to the service mesh rules - as long as the target service endpoints are on the same network. The endpoints can be either other VMs or Kubernetes Pods. Pod connectivity assumes the cluster network uses the same address space as the standalone machines. For cloud-managed Kubernetes (Google GKE, Amazon EKS) this is the default networking mode. Self-managed clusters need a networking subsystem (such as Calico) that implements a flat routable network address space.
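One precondition of the flat address space mentioned above is easy to check up front: the Pod range and the VM subnet must not overlap, otherwise an address is ambiguous and cannot be routed without NAT. The sketch below uses Python's standard `ipaddress` module; the function names and the CIDR values are hypothetical, and passing this check is necessary but not sufficient, since actual reachability still depends on the routes configured by the cloud network or the CNI plugin.

```python
import ipaddress


def ranges_are_disjoint(pod_cidr: str, vm_cidr: str) -> bool:
    """True when the Pod range and the VM subnet do not overlap."""
    pods = ipaddress.ip_network(pod_cidr)
    vms = ipaddress.ip_network(vm_cidr)
    return not pods.overlaps(vms)


def owner_of(address: str, pod_cidr: str, vm_cidr: str) -> str:
    """Classify an endpoint address as belonging to a Pod, a VM, or neither."""
    ip = ipaddress.ip_address(address)
    if ip in ipaddress.ip_network(pod_cidr):
        return "pod"
    if ip in ipaddress.ip_network(vm_cidr):
        return "vm"
    return "unknown"


# Hypothetical ranges for illustration only.
POD_CIDR = "10.4.0.0/14"
VM_CIDR = "10.128.0.0/20"

print(ranges_are_disjoint(POD_CIDR, VM_CIDR))
print(owner_of("10.128.0.12", POD_CIDR, VM_CIDR))
```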

But in the most relevant use cases, the standalone machines are servers, not service clients. For this case the Envoy proxy terminates the incoming TLS connections to the local services - but only if the endpoints of the local services are correctly advertised in the service mesh registry. And that is the caveat.

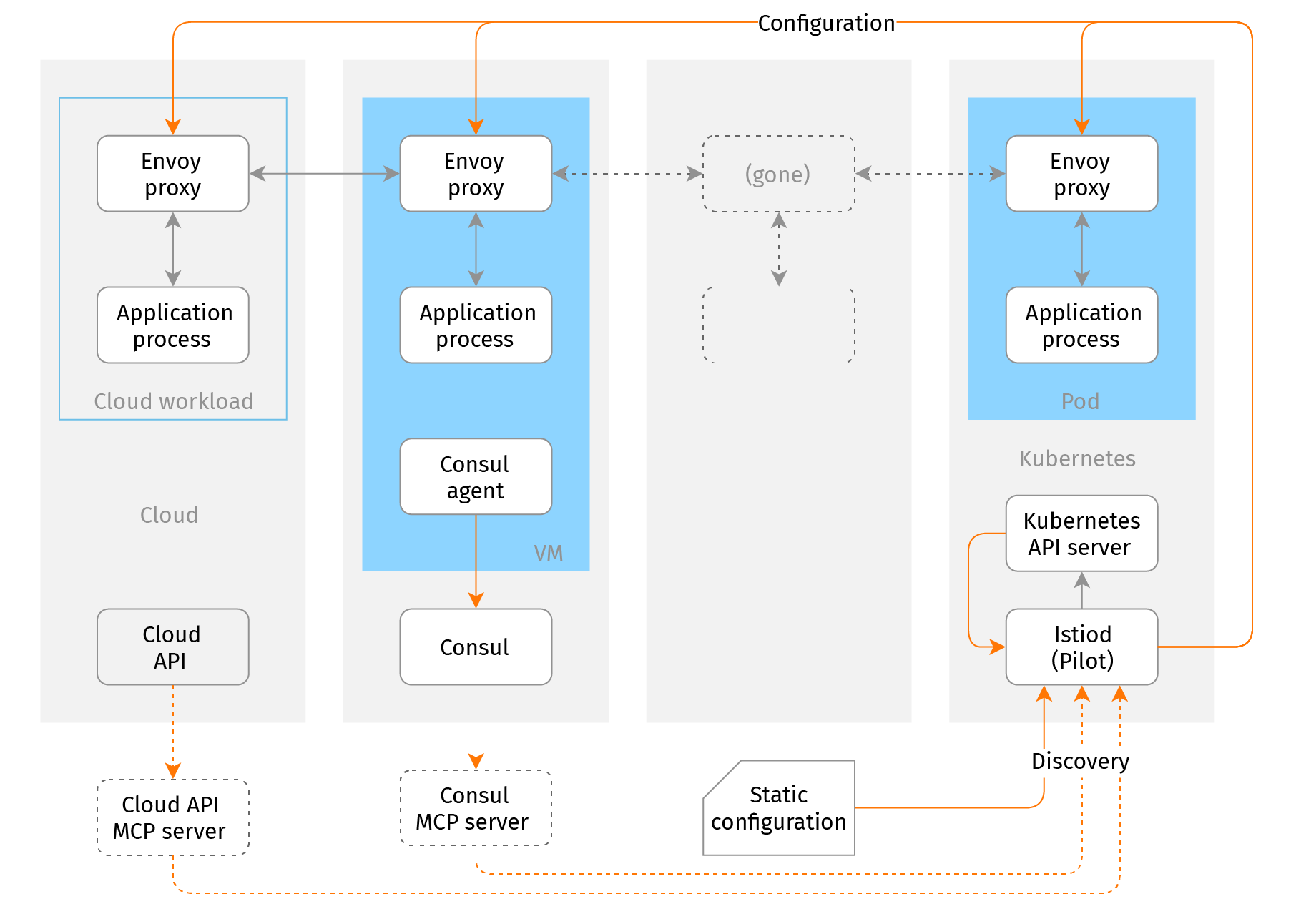

As of Istio 1.7, support for standalone Linux machines is still in the Alpha stage. We attribute this to the control plane implementation, and in particular to service discovery. Because the in-tree service discovery implementations have been removed from the Istio source code, only two non-Kubernetes discovery options remain:

- Static configuration - the operator has to explicitly configure service endpoints (network address and port) by means of WorkloadEntry/ServiceEntry Istio API resources.

- MCP (Mesh Configuration Protocol) - the operator needs to provide an MCP adapter to whatever service discovery facility they have - either HashiCorp Consul, or cloud APIs.

Neither is immediately usable. Static configuration doesn't match well with the ephemeral nature of cloud resources. Existing MCP adapters are just prototypes.
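For reference, the static option boils down to resources like the ones generated below. This is a sketch under assumptions: the helper functions, names, namespace, labels, and address are hypothetical, and the `networking.istio.io/v1alpha3` API version reflects the Istio releases discussed here, so check the API reference for the version you run. The output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json
from typing import Dict, Optional

API_VERSION = "networking.istio.io/v1alpha3"  # assumption: may differ in newer releases


def workload_entry(name: str, namespace: str, address: str,
                   labels: Dict[str, str],
                   service_account: Optional[str] = None) -> dict:
    """Describe one VM (or physical machine) endpoint."""
    spec: dict = {"address": address, "labels": labels}
    if service_account:
        spec["serviceAccount"] = service_account
    return {
        "apiVersion": API_VERSION,
        "kind": "WorkloadEntry",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


def service_entry(name: str, namespace: str, host: str, port: int,
                  protocol: str, selector: Dict[str, str]) -> dict:
    """Describe the service and select its WorkloadEntry endpoints by label."""
    return {
        "apiVersion": API_VERSION,
        "kind": "ServiceEntry",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hosts": [host],
            "location": "MESH_INTERNAL",
            "resolution": "STATIC",
            "ports": [{"number": port,
                       "name": f"{protocol.lower()}-{port}",
                       "protocol": protocol}],
            "workloadSelector": {"labels": selector},
        },
    }


labels = {"app": "orders-db"}
manifests = [
    workload_entry("orders-db-vm1", "legacy", "10.0.1.15", labels, "orders-db"),
    service_entry("orders-db", "legacy", "orders-db.legacy.internal", 5432, "TCP", labels),
]
print(json.dumps(manifests, indent=2))
```

The weakness named above is visible in the data: the `address` field is a literal, so every VM replacement or rescheduling means another resource update.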

Another obstacle is Istio control plane deployment. By itself it consists of a single, stateless, horizontally scalable service - `istiod`. It can run anywhere. But this service relies on the Kubernetes API server for persistence, authentication, and access control. The API server in turn relies on etcd, a distributed, strongly consistent key-value database. So while technically an Istio control plane doesn't need a Kubernetes cluster and "just" needs its API server, in practice it's easier and safer to provision a cluster just for the control plane. As a result, Istio service mesh currently is neither viable nor sustainable outside of Kubernetes infrastructure.

Workarounds

At this point we should realize that to solve our pretty common business problem we'll have to create a critical piece of infrastructure software - an MCP adapter for service discovery at standalone machines. Building and maintaining custom infrastructure software is one of the last things a regular (non-software) company wants to do. So we have to explore alternative options on how to span a service mesh across application components on Kubernetes, VMs, and on-prem machines.
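To give a sense of what such an adapter has to do, here is a sketch of its core loop body: compare what the external discovery facility reports with what is currently registered in the mesh, and compute the difference. It does not implement MCP or call any Istio or Consul API; the `reconcile` function and the sample data are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Change = Tuple[str, str]  # (service name, endpoint address)


def reconcile(discovered: Dict[str, Set[str]],
              registered: Dict[str, Set[str]]) -> Tuple[List[Change], List[Change]]:
    """Return (to_add, to_remove) so that the mesh matches the discovered state."""
    to_add: List[Change] = []
    to_remove: List[Change] = []
    for service in sorted(set(discovered) | set(registered)):
        want = discovered.get(service, set())
        have = registered.get(service, set())
        to_add += [(service, address) for address in sorted(want - have)]
        to_remove += [(service, address) for address in sorted(have - want)]
    return to_add, to_remove


# Hypothetical snapshot: one database VM was replaced, one queue disappeared.
discovered = {"orders-db": {"10.0.1.16", "10.0.1.17"}}
registered = {"orders-db": {"10.0.1.15", "10.0.1.16"}, "queue": {"10.0.2.4"}}

additions, removals = reconcile(discovered, registered)
print("add:", additions)
print("remove:", removals)
```

The diff itself is the easy part. What makes this critical infrastructure is everything around it: watching the registry reliably, surviving restarts and partial failures, and never removing healthy endpoints because of a transient discovery error.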

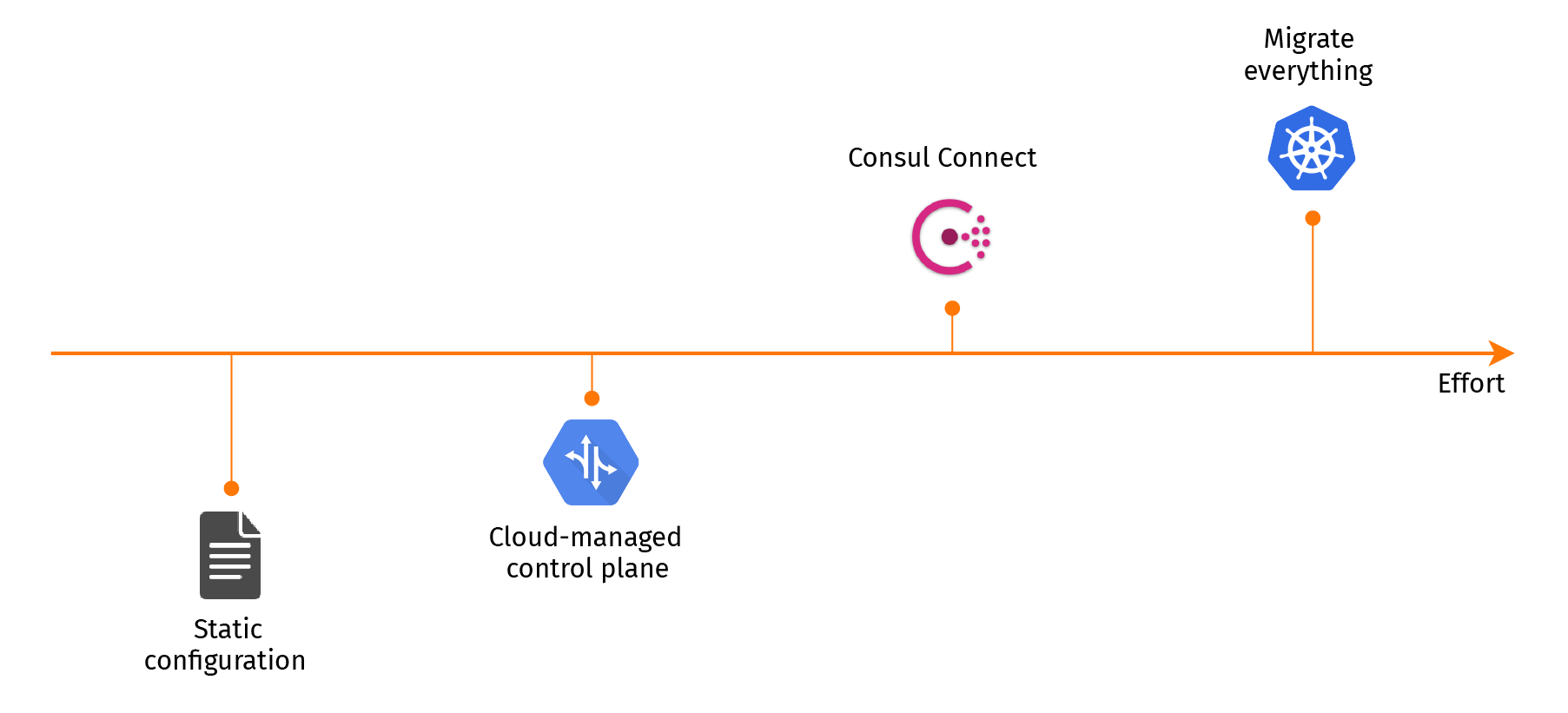

1. Static configuration, fixed network addresses

If Istio cannot handle this kind of complexity, push the complexity to somebody else - this time to the networking infrastructure. If the VM-based deployments can be configured so that their services are accessible at static network addresses (VIPs), registration in the Istio service mesh by static WorkloadEntry resources may be reliable enough. But it complicates and constrains the operation of VM-based workloads so much that it may outweigh the service mesh benefits.

2. Managed service mesh control plane (GCP Traffic Director, AWS App Mesh)

If everything is in one specific public cloud, it's a compelling option. The cloud provider runs a managed service mesh control plane exposing the xDS protocols, so the user only needs to supply compatible client-side Envoy proxies. These managed control planes use cloud APIs for service discovery, so they have first-hand information about what's running in the cloud. These service mesh solutions usually support a variety of workloads equally - not just Kubernetes, but also VMs and serverless runtimes. They leverage cloud IAM for service and client authentication, so an operator doesn't need to supply Kubernetes credentials at VM launch time. It all sounds too good to be true, and it does come with some "fine print". First and foremost, these managed solutions require specific proprietary methods for deployment and service mesh configuration. They don't expose the Istio API, which means they are incompatible with the existing Istio control, visualization, and diagnostic tools and integrations. Given that service mesh management and troubleshooting is a difficult task, this limitation alone may be a deal breaker. Also, these implementations often lack features of contemporary Istio versions and are constrained to the specific public cloud.

3. Use another service mesh toolkit (Consul Connect)

Consul Connect is another Envoy-based service mesh toolkit. It originated on VMs and only later came to Kubernetes, so it supports standalone machines pretty well. However, it is much less widely adopted, which means a higher chance of hitting a defect or an edge case. It also shares the main drawback of cloud-managed service meshes - it's incompatible with the ecosystem of Istio tools. It also needs a standalone distributed stateful service, Consul, which is quite complex to maintain.

4. Migrate everything

Databases, message queues, and similar general purpose stateful components are often the things that remain at VMs during the cloud migration. If the application team can afford migration from these self-hosted services to cloud-managed ones, it eliminates the need for service mesh at VMs altogether. Yes, it creates a cloud lock-in, but otherwise it's usually the most reliable path.

Of course the best solution to a problem is to eliminate the problem. If the cloud migration can be arranged so the well-established use patterns (such as Istio on Kubernetes) are enough, or even better if the platform migration can be substituted with architecture migration so a service mesh is no longer necessary, we shouldn't look any further.

What should I plan for?

Service mesh is here to stay for the coming years. It became a class of enterprise application middleware somewhat similar to what Enterprise Service Bus (ESB) used to be for SOA over a decade ago. Istio is not the first implementation but it has much more development and marketing resources than any other service mesh toolkit. Thus it effectively becomes the reference implementation - the default choice for many business organizations. It's still the case despite the recent governance issues around the Istio project. It means that if the project is about a cloud migration, Istio will be a part of the agenda regardless of other technical justifications.

Istio is developed and promoted by a group of large service providers, primarily Google and IBM/Red Hat. Their natural interests shape an "open core" model for Istio, in which the core infrastructure components are open source while extra services are specific to the provider. On the other side of the service mesh, the service registries come from either the same cloud providers or HashiCorp. But HashiCorp actively develops its own Consul Connect service mesh product. Hence we shouldn't expect robust and well-supported integration between Istio and external (to Kubernetes) service registries any time soon.

So some might say, "Ok, that’s an interesting discussion, but I need to move to the cloud today, what should I do?" There are no canned answers, only a thought process for finding a solution to the specific problem. First, try to simplify the problem - replace generic application components (such as DBMS) with cloud services while mitigating the risks of cloud lock-in, take the opportunity to re-implement the legacy software with a modern architecture, and reduce inter-component dependencies through microservice design. Then, if that doesn't eliminate the issue or doesn't fit the budget, build a professional team to create a runtime platform for the application that solves the specific design problems of the application system and the business. Such a platform may holistically leverage a concert of technologies (including Istio) and make viable trade-offs. Istio is a great tool, but alone it is not a silver bullet.