Take your apps to the next level with 3D augmented reality

Cool AR apps were possible before, but new features in Google's ARCore and Sceneform have made them far more sophisticated. Objects can now be placed on vertical planes such as walls or doors, 3D models render faster and with less effort, and augmented reality scenes can be shared between devices. Overall, AR is both more capable and easier to build than in earlier versions.

In previous posts, we discussed what augmented reality is and how it can help e-commerce, and how to get AR to recognize vertical planes. In this post, we dissect our demo app, which virtually furnishes a room with customized, detailed 3D models so that you can see how a piece of furniture will look in real life. We break this process down into three parts:

- How to create, render and load 3D models onto an augmented reality device, which is the prerequisite for any AR experience.

- The basics of augmented reality, to give some context for how the app works.

- A step-by-step guide to furnishing a room with our app using the new ARCore features.

With that overview in place, let's discuss how to create 3D objects and make them usable in our augmented reality app.

How to create 3D objects for augmented reality

The first step in the augmented reality process for an e-commerce app is getting a 3D model of a physical object. There are several ways to accomplish this:

- Perform a 3D scan on a real-world object

- Get the 3D model from the object manufacturer

- Create your own 3D model

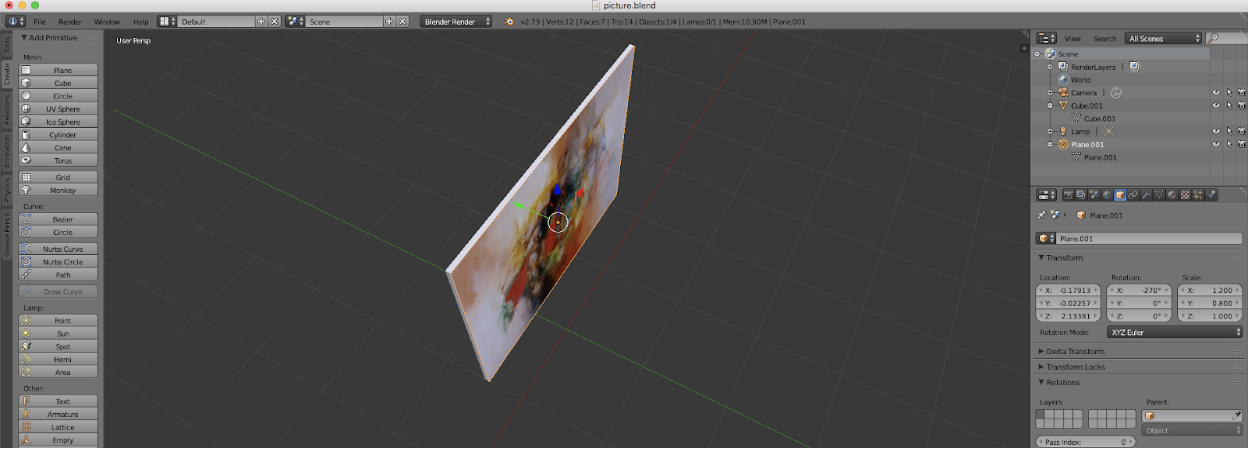

For our example, we will create a 3D piece of art with Blender. Here is our artwork in the Blender editor:

Our next step was choosing a file format for the model. Because our app runs on both iOS and Android, the format had to work on both platforms. After a short investigation, we chose the ".obj" format, because it is a universal format supported by 3D editors. We then worked out how to scale the 3D object: for example, an object in 3ds Max might be three meters long and look normal in the editor, but it will be far too large when shown through the AR app.

Therefore, we formulated rules that adjust the size of any 3D object that will be displayed on a device screen, so that an object's size in the editor matches its size in real life. We implemented these rules only for 3ds Max, but the same practice can be applied to any other 3D editor.
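Our actual rules are editor-specific and not reproduced here, but the core idea can be sketched as a scale-factor calculation. This is a hypothetical helper: `ModelScale`, `scaleFactor` and the "editor units per meter" parameter are illustrative assumptions, and it relies only on the fact that ARCore measures the world in meters.

```java
/**
 * Hypothetical helper: converts a model's size in editor units into the
 * uniform scale factor needed to display it at its real-world size,
 * assuming one rendered unit equals one meter.
 */
final class ModelScale {
    private ModelScale() {}

    /**
     * @param editorLength     the model's longest side, in editor units
     * @param unitsPerMeter    editor units per meter (assumed known from the export settings)
     * @param realLengthMeters the physical object's longest side, in meters
     * @return factor to multiply the model's size by so that it matches reality
     */
    static double scaleFactor(double editorLength, double unitsPerMeter, double realLengthMeters) {
        if (editorLength <= 0 || unitsPerMeter <= 0 || realLengthMeters <= 0) {
            throw new IllegalArgumentException("all lengths must be positive");
        }
        // Size the model would have if exported as-is, in meters.
        double modelLengthMeters = editorLength / unitsPerMeter;
        return realLengthMeters / modelLengthMeters;
    }
}
```

For the example above, an armchair modeled as 300 units at 100 units per meter (3 m) that is really 0.9 m long needs a factor of about 0.3. The factor could be baked into the exported asset or applied at runtime, for example with Sceneform's `Node.setLocalScale`.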

Rendering 3D objects with Sceneform

Now that we have created a 3D model and sized it correctly, the next step is rendering it so that it can be displayed virtually. Before the ARCore updates, the best option for rendering was OpenGL, a low-level graphics API that works separately from ARCore. Now we can use Sceneform, a framework Google released alongside the ARCore SDK (Software Development Kit) that renders 3D objects directly. It comprises a high-level scene graph API, a physically based renderer, and an Android Studio plug-in for importing, viewing, and building 3D assets. These tools integrate easily with ARCore and simplify the process of building AR apps.

Before we continue with the rendering process, here is an explanation of how the various elements of Sceneform work:

Sceneform components:

- ArFragment — This can be added to an Android layout file, like any Android Fragment. It checks whether a compatible version of ARCore is installed, and prompts the user to install or update ARCore as necessary. Additionally, the fragment determines whether the app has access to the camera, and asks the user for permission, if it has not been granted yet. The fragment also creates an ArSceneView and an ARCore Session (the scene and session are maintained and handled automatically).

- The ArSceneView renders the camera images from the session onto its surface and highlights planes that ARCore has detected in front of the camera. As its name suggests, ArSceneView also has a scene attached to it. The scene is a tree-like data structure that holds nodes, which are the virtual objects that will be rendered.

- The ARCore Session manages the augmented reality experience. It keeps track of all the anchors that have been created and all the planes that have been discovered.

- Renderables — These are 3D models, consisting of meshes, materials and textures, that Sceneform can render on the screen. Sceneform provides three ways to create renderables: from standard Android widgets, from basic shapes and materials, and from 3D asset files (OBJ, FBX, glTF). For example, the piece of art that we created above is an .obj file, so Sceneform can turn it into a renderable.

- Sceneform also includes an Android Studio plug-in, which imports 3D models, converts them into a format Sceneform can load, and lets you preview them; the converted models are then loaded asynchronously at runtime. For more information, check out this page on importing and previewing 3D assets.
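The scene described above is a tree of nodes, and the code later in this post builds exactly such a tree: an AnchorNode attached to the scene, a TransformableNode attached to the anchor node, and sometimes a child Node below that. To show why parenting matters, here is a deliberately simplified, hypothetical node class (translation only, no rotation or scale). It is not Sceneform's `Node`, although its `setParent` mirrors the calls used later.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/**
 * Hypothetical, simplified scene-graph node: it stores only a local
 * translation, so a node's world position is the sum of its own offset
 * and the offsets of all of its ancestors.
 */
final class SceneNode {
    private final String name;
    private final double[] localPosition = new double[3];
    private final List<SceneNode> children = new ArrayList<>();
    private SceneNode parent;

    SceneNode(String name) {
        this.name = name;
    }

    String getName() {
        return name;
    }

    /** Sets this node's offset relative to its parent. */
    void setLocalPosition(double x, double y, double z) {
        localPosition[0] = x;
        localPosition[1] = y;
        localPosition[2] = z;
    }

    /** Attaches this node to a new parent, or detaches it when newParent is null. */
    void setParent(SceneNode newParent) {
        if (parent != null) {
            parent.children.remove(this);
        }
        parent = newParent;
        if (newParent != null) {
            newParent.children.add(this);
        }
    }

    List<SceneNode> getChildren() {
        return Collections.unmodifiableList(children);
    }

    /** Walks up the tree, accumulating each ancestor's local offset. */
    double[] getWorldPosition() {
        double[] world = localPosition.clone();
        for (SceneNode p = parent; p != null; p = p.parent) {
            for (int i = 0; i < 3; i++) {
                world[i] += p.localPosition[i];
            }
        }
        return world;
    }
}
```

Because a model's node is a child of its anchor node, moving the anchor moves everything beneath it, which is why the app attaches models to anchors rather than placing them in absolute coordinates.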

Now that Sceneform has been explained, let’s look at how you can use it to put a 3D model onto the augmented reality app.

Using the project build.gradle files to create a renderable resource

The app's build.gradle includes the two Sceneform dependencies, applies the plug-in, and adds a rule for converting sampledata assets such as our 3D models (here, an armchair). The rule generates a resource in the .sfb format that is packaged with your augmented reality app and that Sceneform can load at runtime:

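```groovy
…

dependencies {
    …
    implementation 'com.google.ar.sceneform:core:1.4.0'
    implementation 'com.google.ar.sceneform.ux:sceneform-ux:1.4.0'
}

apply plugin: 'com.google.ar.sceneform.plugin'

sceneform.asset(
        'sampledata/models/armchair.obj',  // source asset
        'default',                         // material ('default' uses Sceneform's default material)
        'sampledata/models/armchair.sfa',  // generated .sfa asset description
        'src/main/res/raw/armchair')       // output location of the .sfb resource
```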

The next step is to load the resource at runtime as a ModelRenderable:

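```java
// Build a ModelRenderable from the .sfb resource generated by the Gradle rule above.
// build() returns a CompletableFuture, so the model loads asynchronously.
ModelRenderable.builder()
        .setSource(this, R.raw.armchair)
        .build()
        .thenAccept(renderable -> armchairRenderable = renderable)
        .exceptionally(
                throwable -> {
                    Log.e(TAG, "Unable to load Renderable.", throwable);
                    return null;
                });
```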
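Because `build()` hands back a `CompletableFuture`, the renderable is not available immediately after this call. The following SDK-free sketch reproduces the same `thenAccept`/`exceptionally` pattern with plain Java futures; the `RenderableSlot` class and its simulated `build` method are hypothetical stand-ins, with strings in place of real renderables.

```java
import java.util.concurrent.CompletableFuture;

/**
 * Hypothetical stand-in for the ModelRenderable loading pattern: build()
 * returns a future, thenAccept() stores the result on success, and
 * exceptionally() handles failures.
 */
final class RenderableSlot {
    private volatile String renderable;
    private volatile Throwable error;

    /** Simulates an asynchronous build that fails for an empty resource name. */
    private static CompletableFuture<String> build(String resourceName) {
        return CompletableFuture.supplyAsync(() -> {
            if (resourceName.isEmpty()) {
                throw new IllegalArgumentException("missing resource");
            }
            return "renderable:" + resourceName;
        });
    }

    CompletableFuture<Void> load(String resourceName) {
        return build(resourceName)
                .thenAccept(result -> renderable = result)
                .exceptionally(throwable -> {
                    // Runs only if an earlier stage failed (the original code logs here).
                    // Returning null completes the returned future normally.
                    error = throwable;
                    return null;
                });
    }

    String getRenderable() {
        return renderable;
    }

    Throwable getError() {
        return error;
    }
}
```

Any code that reads the stored renderable (for example, a tap handler) must therefore cope with it still being null while loading is in progress.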

With the 3D object created and rendered, we will review some basic augmented reality concepts and terms to make the walkthrough of our app a little easier to follow.

Discussing the basics of ARCore

Before we proceed with the step-by-step walkthrough of how to furnish a room with an augmented reality app, we need to understand five main concepts of the ARCore SDK:

- Features — When you move your device around, ARCore uses the camera to detect "visually distinct features" in each captured image. These are called feature points, and ARCore combines them with data from the device's sensors to determine your location in the room and estimate your pose.

- Pose — In ARCore, a pose is a position together with an orientation; the camera's pose describes where the device is and which way it faces. ARCore aligns the pose of the virtual camera with the pose of your device's camera so that virtual objects are rendered from the correct perspective. This is what lets you place a virtual object on top of a plane, walk around it and view it from behind.

- Plane — When processing the camera input stream, ARCore looks for surfaces such as tables, desks, or the floor, in addition to finding feature points. These detected surfaces are called planes. We’ll later demonstrate how you can use these planes to anchor virtual objects to the scene.

- Anchor — For a virtual object to be placed, it needs to be attached to an anchor. An anchor describes a fixed location and orientation in the real world. By attaching the virtual object to an anchor, we ensure ARCore tracks the object's position and orientation correctly over time. In our app, an anchor is created from the result of a hit test, which runs when the user taps the screen.

- Hit test - When the user taps the device's screen, ARCore runs a hit test from that (x, y) coordinate. Imagine a ray starting at the tapped point and extending straight out into the scene the camera sees. ARCore returns any planes or feature points that this ray intersects, together with the pose of each intersection. The result is a list of hit results, each pairing a trackable (such as a plane) with a pose, which we can use to create anchors and attach virtual objects to real-world surfaces.
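The geometry at the heart of a plane hit test is a ray-plane intersection. The sketch below is a hypothetical illustration, not ARCore's implementation: it treats the plane as infinite, whereas a detected ARCore plane has a bounded polygon, which is why app code such as ours later checks `isPoseInPolygon` on the hit pose.

```java
/**
 * Hypothetical sketch of the geometry behind a hit test: intersect the ray
 * origin + t * direction (t >= 0) with an infinite plane given by a point
 * on the plane and the plane's normal.
 */
final class RayPlane {
    private RayPlane() {}

    private static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /** Returns the intersection point, or null if the ray misses the plane. */
    static double[] intersect(double[] origin, double[] direction,
                              double[] planePoint, double[] planeNormal) {
        double denom = dot(planeNormal, direction);
        if (Math.abs(denom) < 1e-9) {
            return null; // ray runs parallel to the plane
        }
        double[] toPlane = {
            planePoint[0] - origin[0], planePoint[1] - origin[1], planePoint[2] - origin[2]
        };
        double t = dot(planeNormal, toPlane) / denom;
        if (t < 0) {
            return null; // plane lies behind the ray's origin
        }
        return new double[] {
            origin[0] + t * direction[0], origin[1] + t * direction[1], origin[2] + t * direction[2]
        };
    }
}
```

For example, a camera 1.5 m above the floor looking down and forward along (0, -1, -1) hits the floor plane (y = 0, normal (0, 1, 0)) at (0, 0, -1.5); the pose at such a point is what an anchor is created from.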

Recent updates in ARCore

Since 2017, Google has invested heavily in ARCore. Here is a brief summary of the recent improvements:

- Support for many more mobile devices: In 2017, the list of AR-capable Android devices was short and came from only a few manufacturers: Google, Samsung, Lenovo and Asus. Since then, the range of AR-capable devices has expanded substantially, with HMD Global, Huawei, LG, Motorola, OnePlus, Vivo and Xiaomi among the more notable manufacturers added. The full list can be seen here.

- Vertical plane recognition: The initial release of the ARCore SDK in August 2017 lacked vertical plane recognition, so developers had to create elaborate workarounds whenever an app needed to detect a wall. Check out our previous blog post for more information on these workarounds. ARCore 1.2, however, fixes this problem.

- Rendering 3D objects without OpenGL: In 2017, OpenGL was required to render virtual objects. ARCore itself was limited to environmental understanding and motion tracking, so a developer had to feed AR data, such as the camera's coordinates and orientation, to OpenGL to perform the actual rendering of 3D objects. Now we have Sceneform, whose high-level scene graph API and physically based renderer hide the low-level operations and work directly with 3D asset files (OBJ, FBX, glTF).

- Google Play Store support for augmented reality: Users no longer need to install AR libraries explicitly before running an AR application. When an application is marked as "AR Required" or "AR Optional", Google Play helps ensure that the proper version of ARCore (Google Play Services for AR) is installed.

- Cloud Anchors API: Cloud Anchors let developers build shared AR experiences across iOS and Android devices. An anchor created on one device can be hosted as a cloud anchor and then shared with users on other devices, which means several mobile devices can share the same AR scene. For more information, check out the developer page here.

- Augmented images: This feature lets ARCore recognize 2D images (e.g. posters) from a set of reference images and track them in the user's environment, so that virtual content, including 3D objects, can be attached to them.

- New API and other features:

- The API can now acquire an image from the camera frame synchronously (in the same frame), without manually copying the image buffer from the GPU to the CPU. This makes it possible to run custom image-processing algorithms on camera images.

- The API can provide color correction information for the images captured by the camera. This allows virtual objects to be rendered in a way that matches real-world lighting conditions.

- Significant performance improvements: ARCore now tracks more than a dozen anchors smoothly, which was nearly impossible just a year ago.

Adding AR to our application

The final setup step is adding an ArFragment to an Android layout file:

```xml
<FrameLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context="com.google.ar.sceneform.samples.hellosceneform.HelloSceneformActivity">

    <fragment android:name="com.google.ar.sceneform.ux.ArFragment"
        android:id="@+id/ux_fragment"
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

</FrameLayout>
```

Having explained how to build 3D models, render them with Sceneform and add AR to an app, it's time to talk about how to actually use our augmented reality app.

A step-by-step guide to using our AR app

If we run the demo application, we see a hand holding a phone, which is the app's equivalent of a cursor:

The app immediately starts detecting feature points to establish world coordinates, and shows the detected point cloud on surfaces. When enough points belong to one surface, a plane has been identified:

The next step is selecting an object to place on the selected plane. In this case, we will use furniture to demonstrate what our app can do for e-commerce purposes.

Choosing a piece of furniture

We embedded several 3D objects into our demo application: a piece of artwork and a TV set to hang on the wall; furniture items such as a sofa, chair and table; a chandelier to attach to the ceiling; and other decorative objects such as vases. In a real-world application, all of these objects would be stored on a remote server, and there might be hundreds of thousands of them.

We also created a helper class, Model, to simplify operations with 3D objects stored in .sfb files. Model is defined below:

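```java
import com.google.ar.core.Plane;

/** Describes one catalog item: its compiled .sfb resource, display name and target plane type. */
public class Model {

    private int modelSfbRes;

    // Optional second resource; this constructor never sets it, so it may be null.
    private Integer modelSfbRes2;

    private String displayableName;

    // The kind of surface the model belongs on (floor, wall or ceiling).
    private Plane.Type planeType;

    public Model(int modelSfbRes, String displayableName, Plane.Type planeType) {
        this.modelSfbRes = modelSfbRes;
        this.displayableName = displayableName;
        this.planeType = planeType;
    }

    public int getModelSfbRes() {
        return modelSfbRes;
    }

    public String getDisplayableName() {
        return displayableName;
    }

    public Integer getModelSfbRes2() {
        return modelSfbRes2;
    }

    public Plane.Type getPlaneType() {
        return planeType;
    }
}
```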

After we have selected a piece of furniture and created a corresponding Model for it, the next step is to actually place the object in the room. To do so, when the user taps on the screen, the app needs to:

- Determine the type of the selected surface.

- Compare the type of the selected object to that of the surface.

- Display the object if the object type corresponds to the selected surface.

The following tap listener implements all of these steps:

```java
arFragment.setOnTapArPlaneListener(
        (HitResult hitResult, Plane plane, MotionEvent motionEvent) -> {
            Model chosen = modelInteractor.getChosenModel();

            // Steps 1 and 2: compare the tapped surface's type with the type the model requires.
            if (plane.getType() != chosen.getPlaneType()) {
                Toast toast = Toast.makeText(
                        this, "Model plane doesn't match tapped plane", Toast.LENGTH_LONG);
                toast.setGravity(Gravity.CENTER, 0, 0);
                toast.show();
                return;
            }

            // Step 3: load the model asynchronously and display it at the hit location.
            ModelRenderable.builder()
                    .setSource(this, chosen.getModelSfbRes())
                    .build()
                    .thenAccept(model -> placeModel(model, hitResult, chosen.getPlaneType()))
                    .exceptionally(this::showModelBuildError);
        });
```
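The matching rule in this listener can be tested without a device. In the following hypothetical sketch, `SurfaceType` is a stand-in enum that reuses the constant names of ARCore's `Plane.Type`, and `PlacementRules` and its catalog entries are illustrative rather than part of our app.

```java
import java.util.Map;
import java.util.Optional;

/** Stand-in for ARCore's Plane.Type so the sketch runs without the SDK. */
enum SurfaceType { HORIZONTAL_UPWARD_FACING, HORIZONTAL_DOWNWARD_FACING, VERTICAL }

/**
 * Hypothetical placement rules: each catalog item declares the surface it
 * belongs on, and a tap is accepted only when the tapped surface matches.
 */
final class PlacementRules {
    private final Map<String, SurfaceType> requiredSurface;

    PlacementRules(Map<String, SurfaceType> requiredSurface) {
        this.requiredSurface = Map.copyOf(requiredSurface);
    }

    /** Returns a message explaining why the item can't go there, or empty if it can. */
    Optional<String> rejectionReason(String item, SurfaceType tapped) {
        SurfaceType required = requiredSurface.get(item);
        if (required == null) {
            return Optional.of("Unknown model: " + item);
        }
        if (required != tapped) {
            return Optional.of("Model plane doesn't match tapped plane");
        }
        return Optional.empty();
    }
}
```

Keeping the rule in a plain class like this makes it easy to unit-test, while the listener only has to translate a rejection into a Toast.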

There are two steps to displaying an object. First, we create an anchor, which fixes a point of reference in the real world. Next, we create a TransformableNode, which binds the object to the anchor and lets the user move it. Doing so results in an image like the armchair below:

```java
private void placeModel(ModelRenderable renderable, HitResult hitResult, Plane.Type planeType) {
    // Size of the model's bounding box (assumes the collision shape is a Box).
    Vector3 size = ((Box) renderable.getCollisionShape()).getSize();

    // Create the anchor at the hit location and attach it to the scene.
    Anchor anchor = hitResult.createAnchor();
    changeAnchorForPainting(anchor); // app-specific helper, not shown in this post
    AnchorNode anchorNode = new AnchorNode(anchor);
    anchorNode.setParent(arFragment.getArSceneView().getScene());

    // Create the transformable node and add it to the anchor.
    TransformableNode node = new TransformableNode(arFragment.getTransformationSystem());
    node.setParent(anchorNode);

    if (planeType == Plane.Type.HORIZONTAL_DOWNWARD_FACING) {
        // Ceiling: offset the model by its height and flip it 180 degrees around Z.
        Node downward = new Node();
        downward.setParent(node);
        downward.setLocalPosition(new Vector3(0, size.y, 0));
        downward.setLocalRotation(new Quaternion(0, 0, 1, 0));
        downward.setRenderable(renderable);
    } else if (planeType == Plane.Type.VERTICAL) {
        // Wall: point the model along the inverted plane normal.
        // curPlaneNormal is a field maintained elsewhere in the activity (not shown).
        Node wallChild = new Node();
        wallChild.setParent(node);
        wallChild.setLookDirection(curPlaneNormal.negated());
        wallChild.setRenderable(renderable);
    } else {
        // Floor or table: render directly on the transformable node.
        node.setRenderable(renderable);
    }

    // Only allow dragging across planes of the same type the model was placed on.
    TranslationController controller = node.getTranslationController();
    controller.setAllowedPlaneTypes(EnumSet.of(planeType));
    node.select();
}
```

Vertical plane recognition allows objects to be hung on walls and doors

Vertical plane recognition, introduced in ARCore 1.2, enables the placement of objects on vertical planes such as walls or doors without lengthy workarounds or separate algorithms. It also helps with depth perception: for example, a sofa can now be placed up against a wall, allowing users to see how far from the wall the sofa extends. Ceiling objects such as chandeliers, by contrast, are placed on horizontal downward-facing planes and need to be rotated so that they hang correctly. Here is the code for rotating and placing a ceiling object, with the resulting chandelier below:

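```java
// Excerpt from placeModel(): renderable and size are defined there, and the
// node is attached to the anchor node as shown above.
TransformableNode node = new TransformableNode(arFragment.getTransformationSystem());
Node downward = new Node();
downward.setParent(node);
// Offset the flipped model by its own height.
downward.setLocalPosition(new Vector3(0, size.y, 0));
// Quaternion(x, y, z, w) = (0, 0, 1, 0): a 180-degree rotation around the Z axis.
downward.setLocalRotation(new Quaternion(0, 0, 1, 0));
downward.setRenderable(renderable);
```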
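Why does `Quaternion(0, 0, 1, 0)` flip the model? A rotation by angle θ about a unit axis n is the quaternion (n·sin(θ/2), cos(θ/2)), so θ = 180° about the Z axis gives (0, 0, 1, 0) in (x, y, z, w) order. The hypothetical helper below (not Sceneform's `Quaternion` class) rotates a vector by such a quaternion to show that the model's up axis ends up pointing down.

```java
/**
 * Hypothetical helper that rotates a vector by a unit quaternion stored in
 * (x, y, z, w) order, the same component order as Quaternion(0, 0, 1, 0) above.
 */
final class QuatRotate {
    private QuatRotate() {}

    private static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    /** Computes q * v * q^-1 via v + w*t + (u x t), where u = (x, y, z) and t = 2 * (u x v). */
    static double[] rotate(double x, double y, double z, double w, double[] v) {
        double[] u = {x, y, z};
        double[] c = cross(u, v);
        double[] t = {2 * c[0], 2 * c[1], 2 * c[2]};
        double[] c2 = cross(u, t);
        return new double[] {
            v[0] + w * t[0] + c2[0],
            v[1] + w * t[1] + c2[1],
            v[2] + w * t[2] + c2[2]
        };
    }
}
```

Rotating the up vector (0, 1, 0) by (0, 0, 1, 0) yields (0, -1, 0), and (1, 0, 0) becomes (-1, 0, 0), while the Z axis is unchanged: the model is turned upside down, as a chandelier hanging from the ceiling should be.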

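ARCore often cannot detect a vertical plane when the wall is a uniform solid color, because such a surface offers too few visual feature points to track. In these cases, we can use the algorithm described in our last blog post on augmented reality: starting from the user's tap, the app shifts the tap position step by step until a hit test lands on a horizontal, upward-facing plane (such as the floor), and then places the model there: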

```java
// Shifts the tap leftward until a hit test lands on an upward-facing horizontal
// plane (such as the floor), then places the chosen model at that hit.
private MotionEvent getNewTapOnBottomCloud(MotionEvent tap, Frame frame) {
    if (tap == null) {
        return null;
    }
    for (int i = 0; i < 70; i++) {
        for (HitResult hit : frame.hitTest(tap)) {
            Trackable trackable = hit.getTrackable();
            if (trackable instanceof Plane
                    && ((Plane) trackable).isPoseInPolygon(hit.getHitPose())
                    && ((Plane) trackable).getType() == Plane.Type.HORIZONTAL_UPWARD_FACING) {
                // PlaneRenderer.calculateDistanceToPlane comes from the ARCore Java sample code.
                float distance = PlaneRenderer.calculateDistanceToPlane(
                        hit.getHitPose(), frame.getCamera().getPose());
                scale = distance; // field declared elsewhere in the activity
                ModelRenderable.builder()
                        .setSource(this, modelInteractor.getChosenModel().getModelSfbRes())
                        .build()
                        .thenAccept(model ->
                                placeModel(model, hit, modelInteractor.getChosenModel().getPlaneType()))
                        .exceptionally(this::showModelBuildError);
                return tap;
            }
        }
        // No suitable plane under the tap: move it 15 px to the left and try again.
        tap.offsetLocation(-15f, 0f);
        if (tap.getX() < -100) {
            break; // well past the left edge of the screen; give up
        }
    }
    return tap;
}
```
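The search strategy itself does not depend on ARCore. The following hypothetical, SDK-free version keeps the same constants (70 attempts, 15 px steps, stop below x = -100) and replaces the hit test with a `DoublePredicate` that reports whether a tap at a given x coordinate would land on the floor; `TapScan` and `findFloorX` are illustrative names.

```java
import java.util.OptionalDouble;
import java.util.function.DoublePredicate;

/** Hypothetical, SDK-free version of the tap-shifting search above. */
final class TapScan {
    static final int MAX_ATTEMPTS = 70;
    static final double STEP_PX = 15;
    static final double MIN_X = -100;

    private TapScan() {}

    /**
     * Tests startX, then shifts left by STEP_PX until hitsFloor accepts a
     * position, MAX_ATTEMPTS positions have been tried, or x drops below MIN_X.
     */
    static OptionalDouble findFloorX(double startX, DoublePredicate hitsFloor) {
        double x = startX;
        for (int i = 0; i < MAX_ATTEMPTS; i++) {
            if (hitsFloor.test(x)) {
                return OptionalDouble.of(x);
            }
            x -= STEP_PX;
            if (x < MIN_X) {
                break;
            }
        }
        return OptionalDouble.empty();
    }
}
```

For instance, if the floor is only detected for x ≤ 400 and the user taps at x = 460, the search tries 460, 445, 430 and 415 before succeeding at 400. Unlike the Android method, this sketch reports failure explicitly with an empty result instead of returning the shifted tap.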

Move and rotate

After a piece of furniture has been placed, we can move and rotate it. TransformableNode provides this functionality: when you select an object to move, the setAllowedPlaneTypes method of its TranslationController specifies the types of surface across which the object can be moved. We can also select a different object to modify. Objects are moved by dragging them and rotated with a two-finger twist:

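```java
TransformableNode node = new TransformableNode(arFragment.getTransformationSystem());
node.setRenderable(renderable);

// Only allow the node to be dragged across planes of the given type.
TranslationController controller = node.getTranslationController();
controller.setAllowedPlaneTypes(EnumSet.of(planeType));

node.setParent(anchorNode);
// Mark the node as selected so the user can transform it right away.
node.select();
```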
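TransformableNode handles the rotation gesture internally. To illustrate the general idea, here is a hypothetical sketch (not Sceneform's implementation) of how a two-finger twist can be turned into a rotation angle: the change in direction of the line joining the two touch points.

```java
/**
 * Hypothetical sketch of the math behind a two-finger twist: the rotation
 * is the change in angle of the line joining the two touch points.
 */
final class TwistAngle {
    private TwistAngle() {}

    /**
     * @param a1 first finger at the start of the gesture, {x, y}
     * @param a2 second finger at the start of the gesture, {x, y}
     * @param b1 first finger now, {x, y}
     * @param b2 second finger now, {x, y}
     * @return signed rotation in degrees, normalized to (-180, 180]
     */
    static double deltaDegrees(double[] a1, double[] a2, double[] b1, double[] b2) {
        double before = Math.atan2(a2[1] - a1[1], a2[0] - a1[0]);
        double after = Math.atan2(b2[1] - b1[1], b2[0] - b1[0]);
        double delta = Math.toDegrees(after - before);
        // atan2 wraps at +/-180 degrees, so bring the difference back into range.
        while (delta <= -180) {
            delta += 360;
        }
        while (delta > 180) {
            delta -= 360;
        }
        return delta;
    }
}
```

On Android, screen y coordinates grow downward, so a positive result here corresponds to a clockwise twist on screen. The angle would then be applied as a rotation around the model's vertical axis.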

Changing the color and texture of augmented reality images

Now that the object is positioned correctly in the room, the next step is to adjust its appearance. We implemented this as a dialog that lets the user pick a color for the selected object; the chosen color is applied with the material's setFloat3 method, which sets the model's base color tint. You can see the results of the color change in the two images below:

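```java
private void changeColor() {
    ColorPickerDialog.Builder dialog = new ColorPickerDialog.Builder(this);
    dialog.setTitle("Choose a color");
    dialog.setNegativeButton(R.string.cancel, (dialog1, which) -> {
        // Nothing to do when the user cancels.
    });
    dialog.setPositiveButton(getString(R.string.ok), colorEnvelope -> {
        BaseTransformableNode node = arFragment.getTransformationSystem().getSelectedNode();
        // Ceiling and wall models keep their renderable on a child node (see placeModel),
        // so getRenderable() can be null here.
        if (node != null && node.getRenderable() != null) {
            // Tint the model's base color with the picked color.
            node.getRenderable().getMaterial()
                    .setFloat3("baseColorTint", new Color(colorEnvelope.getColor()));
        }
    });
    dialog.show();
}
```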
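The color picker hands back a single packed ARGB `int`, while `setFloat3` sets three float components. The hypothetical sketch below shows only the bit manipulation that separates such an int into normalized red, green and blue values (alpha is dropped); Sceneform's own `Color` class does this conversion for you and may apply further color-space handling, so treat this as an illustration rather than a replacement.

```java
/**
 * Hypothetical sketch: unpack a packed ARGB color int into three
 * normalized floats in [0, 1]. Alpha is ignored.
 */
final class ArgbToFloat3 {
    private ArgbToFloat3() {}

    static float[] toRgb(int argb) {
        // Shift each 8-bit channel down and mask it; the mask also discards
        // the sign bits introduced by shifting a negative int.
        float r = ((argb >> 16) & 0xFF) / 255f;
        float g = ((argb >> 8) & 0xFF) / 255f;
        float b = (argb & 0xFF) / 255f;
        return new float[] {r, g, b};
    }
}
```

For example, opaque red `0xFFFF0000` unpacks to {1, 0, 0}, and `0x80336699` unpacks to roughly {0.2, 0.4, 0.6}.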

In the same way, you can replace a model's texture using the material's setTexture method, as seen in the two couches below:

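```java
Texture.builder()
        .setSource(this, texturesInteractor.getRes(position))
        .build()
        .thenAccept(t -> {
            BaseTransformableNode node = arFragment.getTransformationSystem().getSelectedNode();
            // As with color changes, the renderable may live on a child node.
            if (node != null && node.getRenderable() != null) {
                // Replace the model's base color texture with the newly loaded one.
                node.getRenderable().getMaterial().setTexture("baseColor", t);
            }
        })
        .exceptionally(this::showModelBuildError);
```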

Our demo video

We have put together a demo video that demonstrates all of the features described above. Seeing the app work as it would for a regular user really puts into perspective how useful augmented reality apps will be for shoppers. Check out the video and see for yourself!

Conclusion: the future of augmented reality is bright

ARCore’s new features have allowed us to build a more sophisticated augmented reality app. Augmented reality apps like ours will open up all kinds of doors for retailers, mostly because of the power of visualization over browsing. AR can blend products with the environment in which they will be used, all from the comfort of a shopper's home. An entire room can now be furnished virtually, and customized to the user's specifications with ease, enabling convenient shopping and a pleasant experience.

Developments in AR over the past year have surpassed the predictions we made a year ago. And while AR-capable devices have already expanded substantially, we expect even greater growth in the year to come. Additional features, such as face detection, could also arrive by combining ARCore with Google's ML (Machine Learning) Kit. Finally, we hope to soon see custom chips on mobile devices that implement 3D algorithms at the hardware level. These would greatly speed up the processing of real-time video, making for a superior customer experience.

We are excited to see where augmented reality goes over the years to come, and look forward to exploring further updates with blog posts as they become available. If you want to learn more about our augmented reality application, or about the new features in ARCore, please contact us — we will be happy to help!