Building a question answering system for an online store

NLP technologies are rapidly improving, changing our user experience, and increasing the efficiency of working with text data. For instance, web search and language translation innovations changed our world, and now Deep Learning enters more and more areas. While writing these sentences, the editor corrects my grammar, suggests synonyms, analyzes the tone of the text, autocompletes words, and even whole sentences depending on the context.

Search is one of the main tools for everyday tasks, and it has also evolved quickly in recent years. We are moving from word matching to a deep understanding of queries, which also changes our habits: we start typing questions in search boxes instead of simple keywords. Google already answers questions with instant cards and recently started to highlight the answers on a particular web page when you open it from the search results. The same works even for YouTube: the search engine can redirect you to a specific part of the video to answer your questions.

We call such systems Question Answering (QA) systems. QA systems help to find information more efficiently in many cases and go beyond the usual search, answering questions directly instead of searching for content similar to the query.

Besides web search, there are many areas where people work with domain-specific documents and need efficient tools to deal with business, medical, and legal documents. Recently, the COVID-19 crisis significantly increased the interest in QA systems. Hundreds of research papers, reports, and other medical documents are published every day, and we need efficient information retrieval tools for them; QA systems provide an excellent addition to the classic search engines here. Another good use for QA systems is a conversational system. A dialog with a digital assistant contains a lot of questions and answers, and here modern QA systems can help to replace hundreds of manually configured intents with a single model. We believe that QA is the next logical step for information retrieval systems and that it will be widely integrated in the near future.

In this blog post, we describe our experience of building such systems and adapting them to a specific domain, especially when we don’t have the resources to label a lot of data. We also explore how we can leverage unlabeled data and how knowledge distillation may help here. Finally, we touch on the performance aspects of solutions based on popular Transformer models like BERT.

Finding answers in customer Q&As and reviews

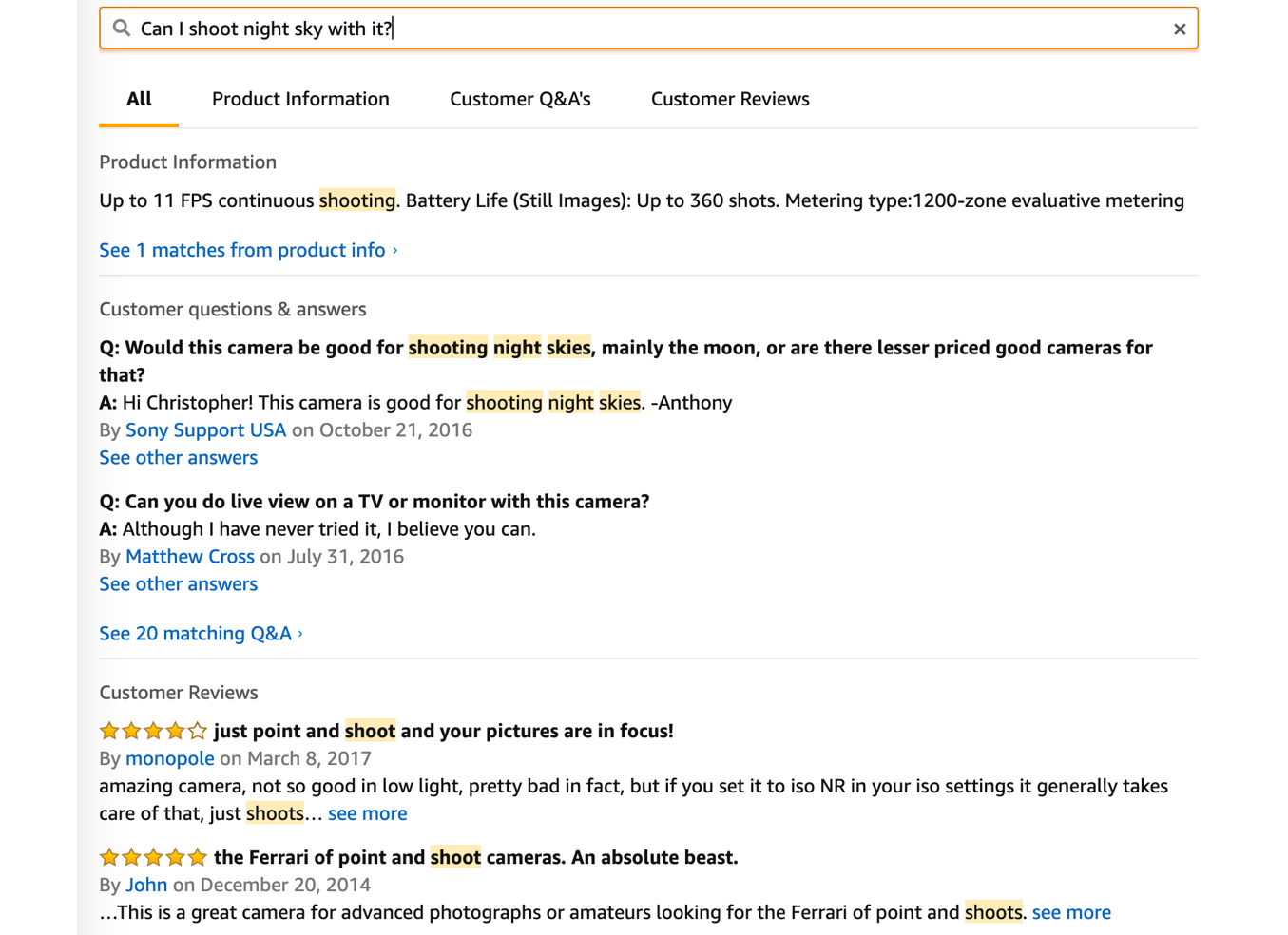

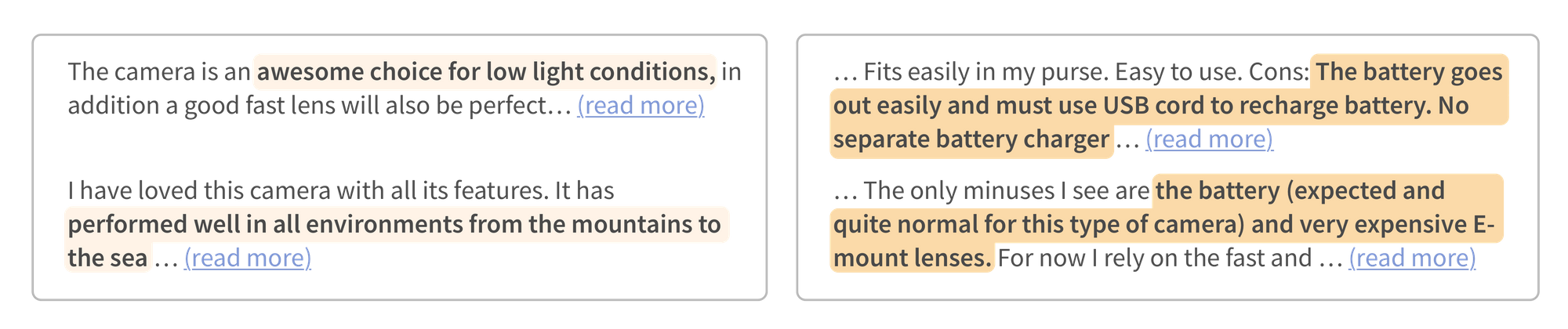

In our project, we are going to build a QA system that finds answers in customer Q&As and reviews for a particular type of product: photo & video cameras. You can find a similar system at amazon.com:

For relatively complex and technical products, like cameras, such a QA system is very useful because many questions arise during product discovery and selection. Customers study the market by comparing different options across many criteria depending on their use cases and preferences, and the information from customer Q&A and reviews is especially important. For popular cameras, you may see up to a thousand reviews.

Amazon uses a classic keyword search algorithm to find something relevant, yet you still need to sift through the results to find the relevant response. It does not work well for all questions, because what you want is a direct answer, not just text similar to your question; keyword search falls short here.

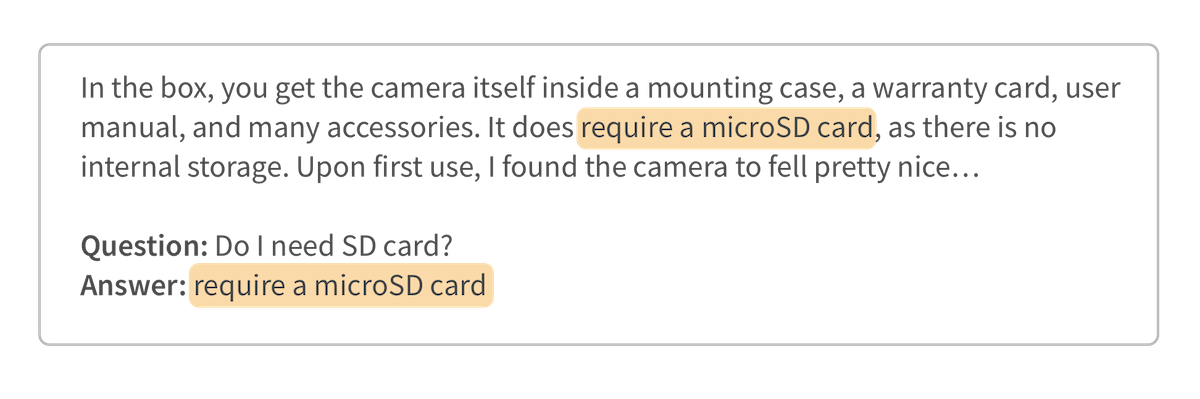

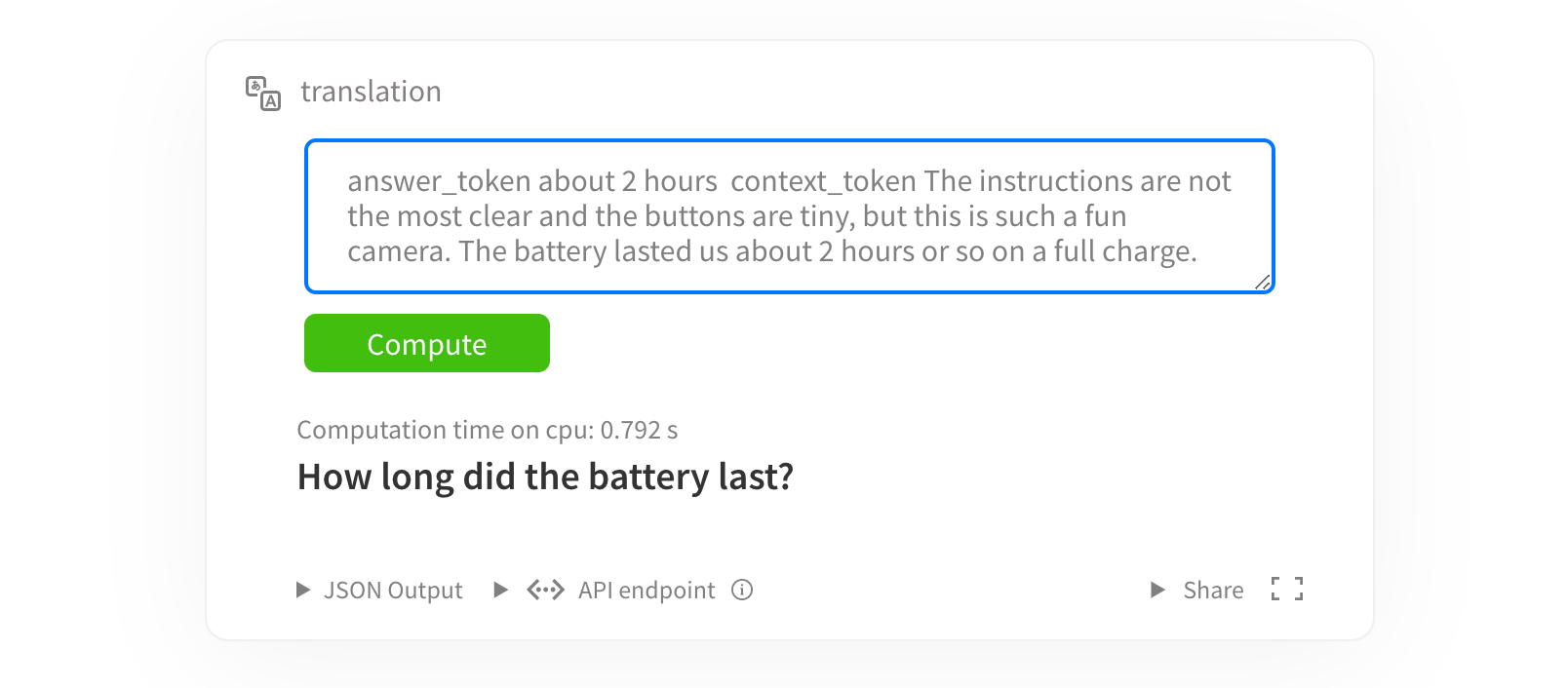

Below, you can see a few examples from our question answering system, which goes beyond simple keyword matching:

Semantic Question Answering

We believe that such QA systems can be of much more use in this and similar scenarios.

Let’s look at the typical architecture of QA systems, models, and how we can improve the quality of available pre-trained models adapting to our photo & video cameras domain.

Reading comprehension as a question answering system

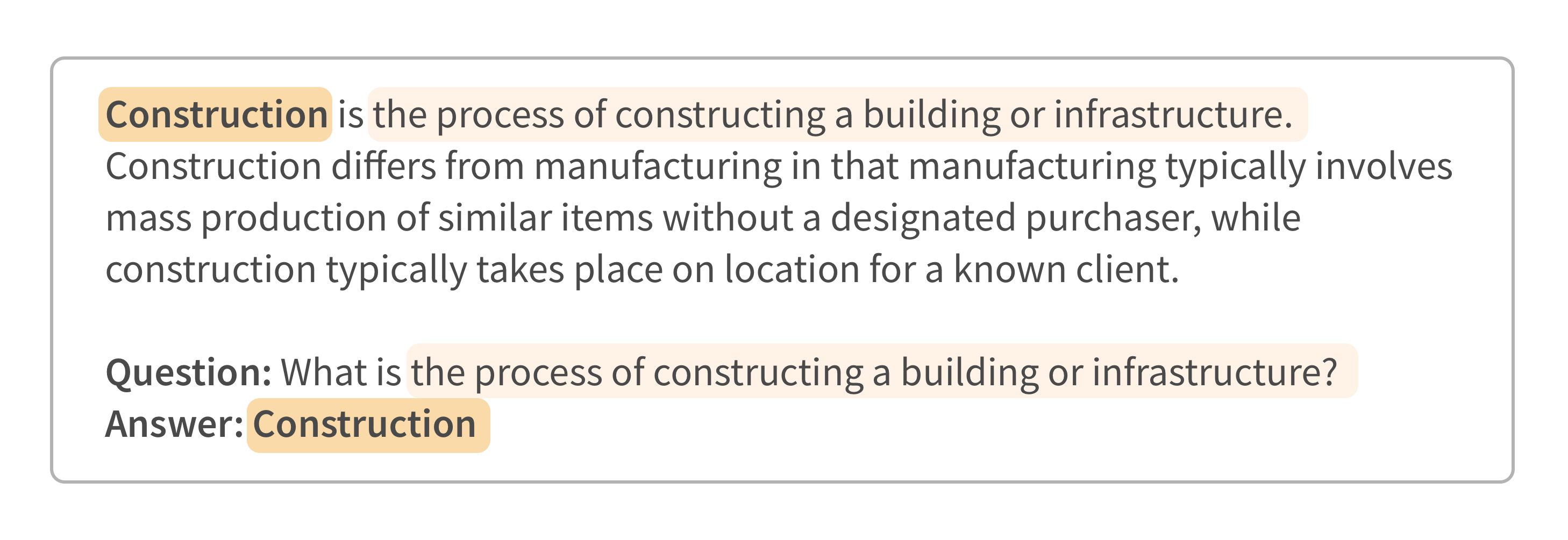

One of the simplest forms of QA, and the one we'll talk about in this post, is machine reading comprehension (MRC), where the task is to find a relatively short answer to a question in an unstructured text.

Of course, there are many additional tasks, such as finding both short and long answers, answering yes/no questions, response generation, multi-modal QA, etc. Conversational systems also add additional complexity, as you need to consider the dialog context.

This post will focus on the most common case when we need to extract a short answer from unstructured text. The nice thing about MRC is that it covers many types of questions out of the box and doesn’t require structured data. The only requirement is that the answer should exist in the text. Even when you have semi-structured information such as product specifications, you still can easily convert it to plain text and use the QA model with it.
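As a minimal sketch of the last point, semi-structured specifications can be flattened into plain sentences before they are passed to an MRC model. The product name, the attribute names, and the sentence template below are illustrative assumptions, not the format used in our project.

```python
def specs_to_text(product_name, specs):
    """Flatten a {attribute: value} mapping into plain sentences for an MRC model."""
    sentences = []
    for attribute, value in specs.items():
        # Lists (e.g. supported card types) are joined into one readable phrase.
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(v) for v in value)
        sentences.append(f"The {attribute} of the {product_name} is {value}.")
    return " ".join(sentences)


# Hypothetical product data, used only for illustration.
example_specs = {
    "sensor type": "CMOS",
    "battery life": "about 400 shots",
    "supported memory cards": ["SD", "SDHC", "SDXC"],
}
text = specs_to_text("Example Camera X", example_specs)
print(text)
```

The resulting text can then be treated like any other document, so a question such as "What memory cards does it support?" has a span to point at.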

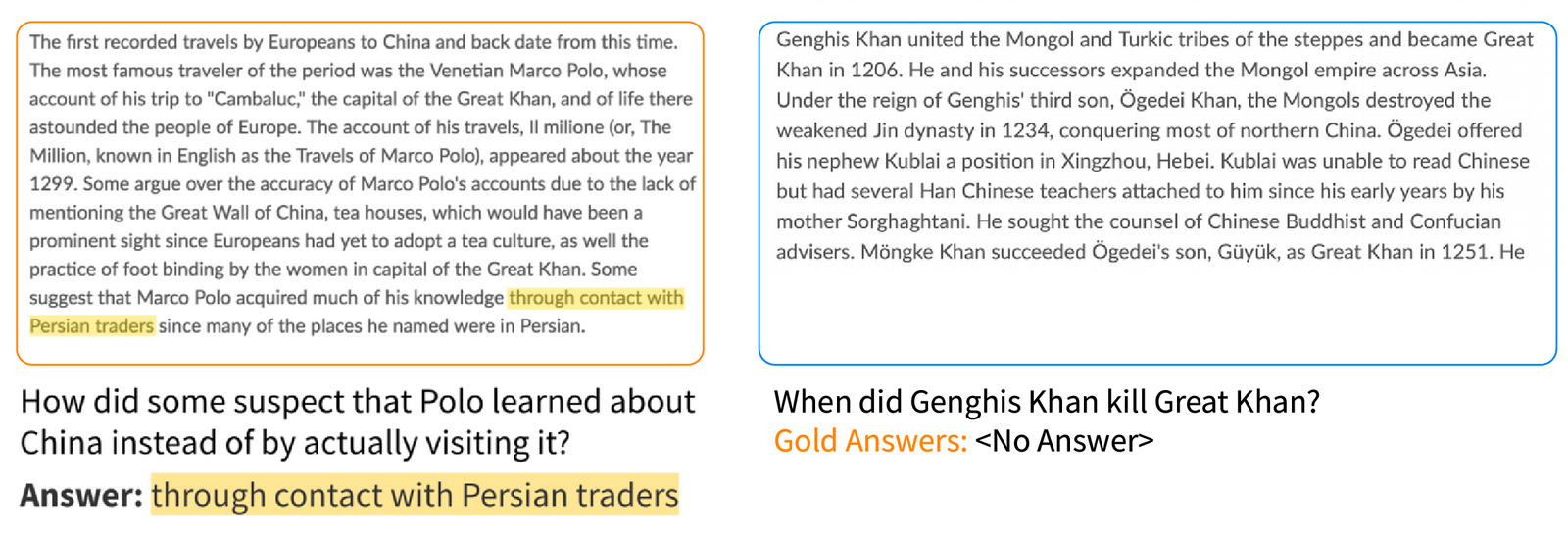

Stanford Question Answering Dataset

When it comes to machine reading comprehension, one cannot fail to mention the Stanford Question Answering Dataset (SQuAD). There are plenty of QA or MRC datasets available (Natural Questions, MS MARCO, CoQA, etc.), but SQuAD is one of the most used. We will not go into details in this blog post, but here are several facts about SQuAD:

- SQuAD 1.1 (2016) contains 100,000 question-answer pairs (536 articles);

- SQuAD 2.0 (2018) adds 53,775 new unanswerable questions about the same paragraphs;

- Dataset collection: 536 articles were sampled uniformly at random from the top 10,000 articles of English Wikipedia; these 536 articles yielded 23,215 paragraphs covering a wide range of topics, from musical celebrities to abstract concepts; Amazon Mechanical Turk was used to label the data; many examples have some sort of lexical or syntactic divergence between the question and the answer in the passage.

- You can explore the dataset here.

Examples from SQuAD 2.0 dataset

Unanswerable questions (that were added to SQuAD 2.0) require the model to determine whether an answer exists in the document. Further, we will refer to that task as the “no answer” classification. It turned out that this is a rather challenging task, as it requires a deeper understanding of the context. As stated in the SQuAD 2.0 paper: “a strong neural system that gets 86% F1 on SQuAD 1.1 achieves only 66% F1 on SQuAD 2.0”.

The SQuAD dataset has several limitations:

- Only span-based answers: no yes/no, counting, implicit why;

- Generally, greater lexical and syntactic matching between questions and answer span than you get in real life (the questions were created by the annotators after seeing the passages), and it often doesn’t force the model to use domain knowledge and common sense.

The main problem of almost all available QA datasets (SQuAD, NaturalQuestions, and others) is that they contain many easy examples and similar questions between the train and test data. Also, the models trained on one big QA dataset don’t perform so well on another. The high score that we see at the leaderboards near “human performance” results doesn’t mean that the models are learning reading comprehension.

We used SQuAD 2.0 to train a baseline model and help with some of our experiments. Despite its limitations, SQuAD is a well-structured, clean dataset, and still a good benchmark. It showed pretty good results on out-of-domain data, and there are many pre-trained models and various research papers around it.

Question answering system architecture

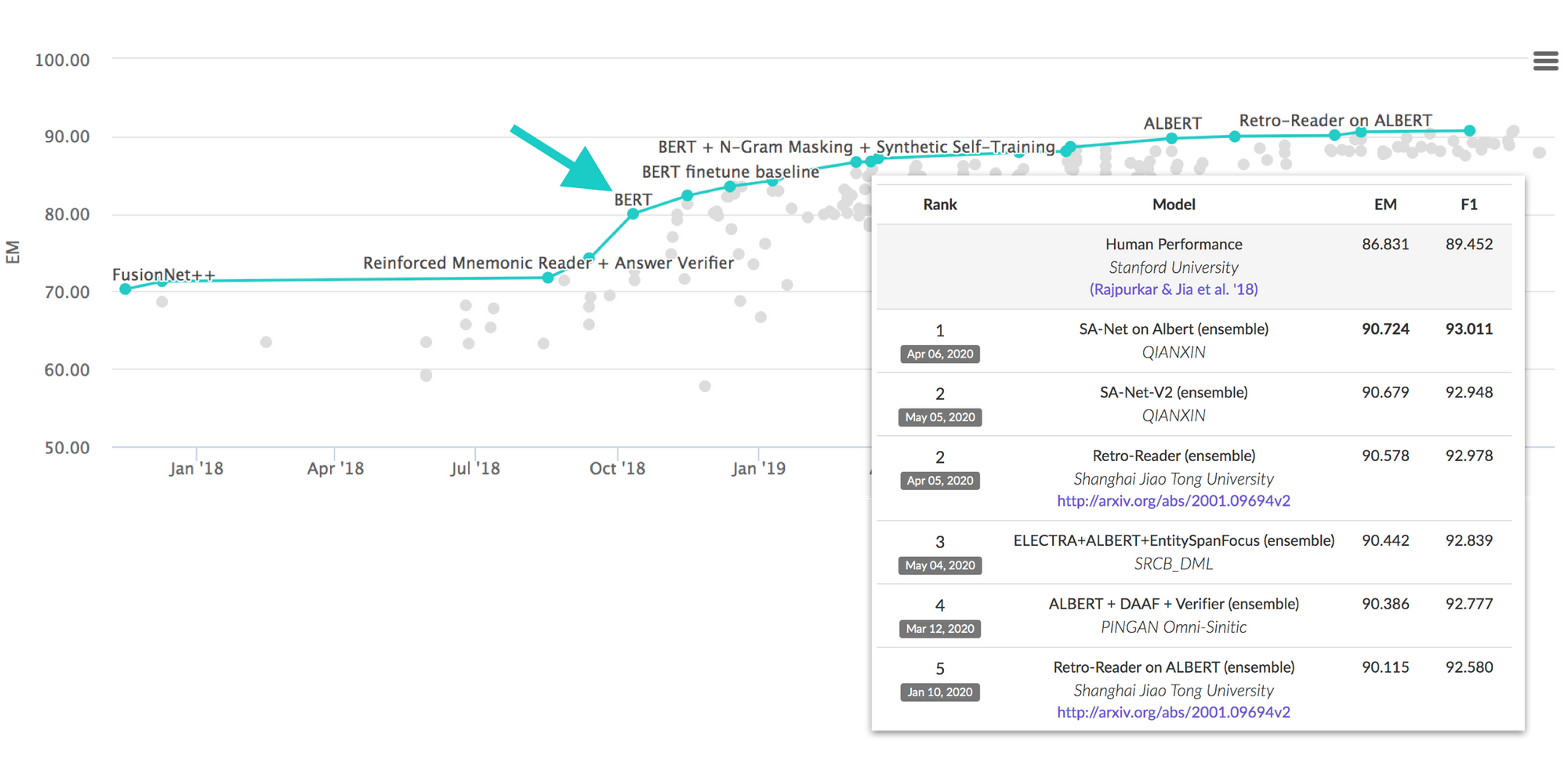

The rise of Transformers

The attention mechanism has become one of the key components in solving the MRC problem. In 2017, Stanford Attentive Reader used BiLSTM + Attention to achieve 79.4 F1 on SQuAD 1.1, then BiDAF used the idea that attention should flow both ways — from the context to the question and from the question to the context. There were many other solutions based on attention mechanisms, but the last few years’ key breakthrough was achieved with Transformer architectures like BERT. Currently, the latest models solve this pretty complex task really well.

Of course, even the best models are not truly intelligent: you can easily find examples where they fail, and there are also the limitations of the datasets described earlier. But as an instrument for question answering tasks, these models already have good quality, and they can surprise in some cases.

Typical architecture of the QA system

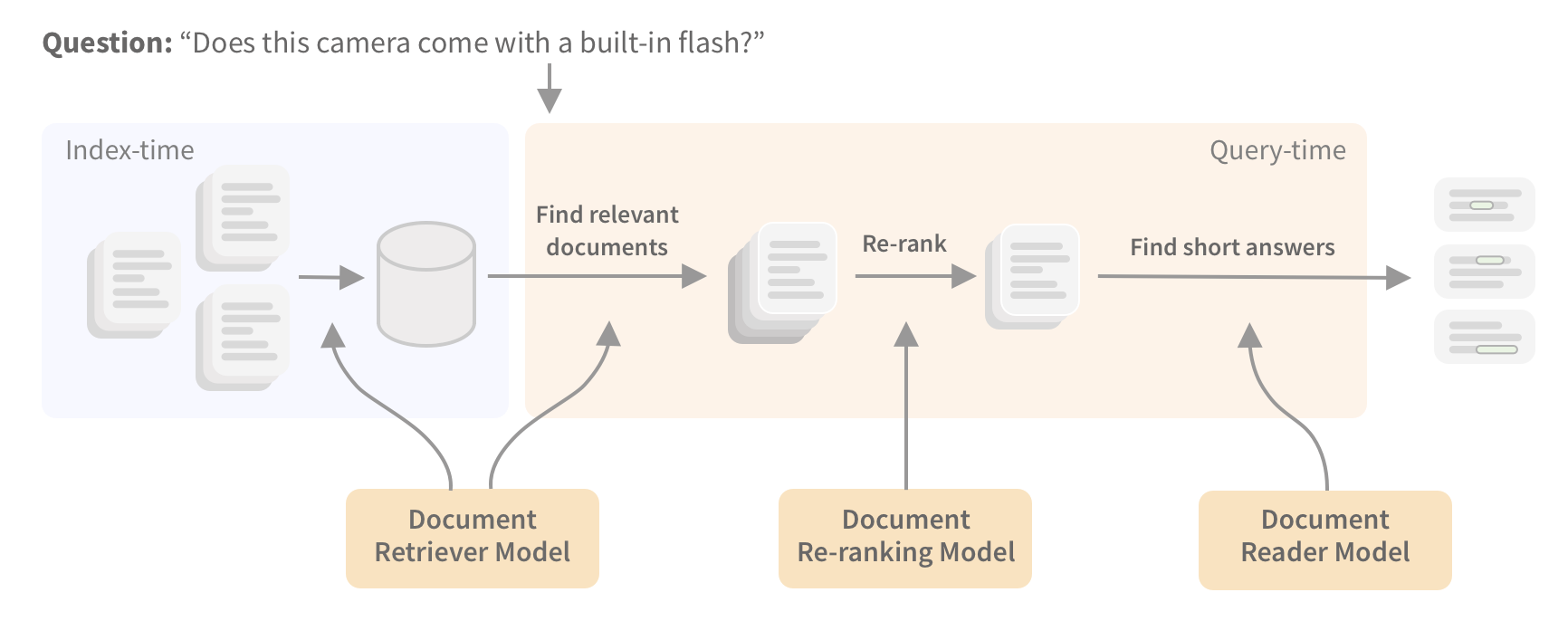

The task that involves finding an answer in multiple documents is often referred to as open-domain question answering. There are two main approaches to such systems: retrieval-only and using MRC models. The MRC-based approach usually includes several stages:

- Retrieval stage: find a set of relevant documents for MRC. Due to the MRC model’s performance limitations, we cannot apply it to many documents for each request. The retrieval stage should be fast, so we care more about recall and less about precision. Usually, sparse retrieval (bigram TF-IDF or BM25) is used, but dense retrieval models like Universal Sentence Encoder (USE) can also be fast enough here.

- Re-rank stage: use a more robust ranking model (e.g., BERT) to re-rank retrieval stage results to reduce the number of selected documents. The retrieval and re-ranking stages could be reduced to a single one based on the number of documents, quality, and performance requirements.

- Document reader: apply the MRC model to each document to find the answer.

Such an approach has several disadvantages. First of all, you have several models, so no matter how well MRC works, it still depends on the retrieval stage results. Another significant limitation is the MRC performance. For example, BERT-base models may be slow even for ten documents per request. On the other hand, smaller models may not be good enough, but it depends on the desired quality and your requirements.
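As a rough illustration of the sparse retrieval stage, here is a tiny Okapi BM25 index in plain Python. It is a sketch under simplifying assumptions (whitespace tokenization, unigrams only, the commonly used defaults `k1=1.5` and `b=0.75`, made-up review texts), not the retrieval component from our pipeline.

```python
import math
from collections import Counter


def tokenize(text):
    # Simplification: lowercase whitespace tokenization, no stemming.
    return text.lower().split()


class BM25:
    """Tiny Okapi BM25 index over whitespace-tokenized documents."""

    def __init__(self, documents, k1=1.5, b=0.75):
        self.k1, self.b = k1, b
        self.docs = [Counter(tokenize(d)) for d in documents]
        self.doc_len = [sum(c.values()) for c in self.docs]
        self.avg_len = sum(self.doc_len) / len(self.docs)
        # Document frequency: number of documents containing each term.
        self.df = Counter(term for c in self.docs for term in c)

    def idf(self, term):
        n = len(self.docs)
        return math.log(1 + (n - self.df[term] + 0.5) / (self.df[term] + 0.5))

    def score(self, query, index):
        tf, length = self.docs[index], self.doc_len[index]
        total = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            norm = tf[term] + self.k1 * (1 - self.b + self.b * length / self.avg_len)
            total += self.idf(term) * tf[term] * (self.k1 + 1) / norm
        return total

    def top_k(self, query, k=10):
        ranked = sorted(range(len(self.docs)),
                        key=lambda i: self.score(query, i), reverse=True)
        return ranked[:k]


# Made-up review snippets, used only for illustration.
reviews = [
    "the battery lasts about two days of shooting",
    "great lens but the strap is uncomfortable",
    "battery charging takes three hours",
]
index = BM25(reviews)
candidates = index.top_k("how long does the battery last", k=2)
print(candidates)
```

Note that "last" in the query does not match "lasts" in the first review; this kind of lexical gap is exactly where keyword-based retrieval depends on recall-oriented settings or on a later, stronger stage.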

Another approach is to reduce all those components to a dense-retrieval task, where we vectorize documents and the query separately (i.e., we don’t use query-document attention) and then use kNN search. Even though research has moved towards applying the attention mechanism between query and document, solutions without it can still produce good results, especially when we want to find long answers (sentences). Recently, we started to build search engines this way; you can find more information here. This post will focus on the multi-stage approach, especially on how we can adapt the MRC model to our domain.
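To make the dense-retrieval idea concrete, here is a minimal brute-force kNN search by cosine similarity. The three-dimensional vectors are toy stand-ins for the output of a sentence encoder; a real system would use an encoder model and, most likely, an approximate nearest-neighbour index, both of which are outside this sketch.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn_search(query_vector, document_vectors, k=3):
    """Brute-force k-nearest-neighbour search by cosine similarity."""
    scored = [(cosine(query_vector, vec), i) for i, vec in enumerate(document_vectors)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:k]]


# Toy embeddings (assumed values, not produced by any real model).
document_vectors = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.2],
    [0.7, 0.3, 0.1],
]
query_vector = [1.0, 0.2, 0.0]
nearest = knn_search(query_vector, document_vectors, k=2)
print(nearest)
```

Because documents are vectorized independently of the query, their vectors can be computed once offline, which is what makes this approach fast at request time.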

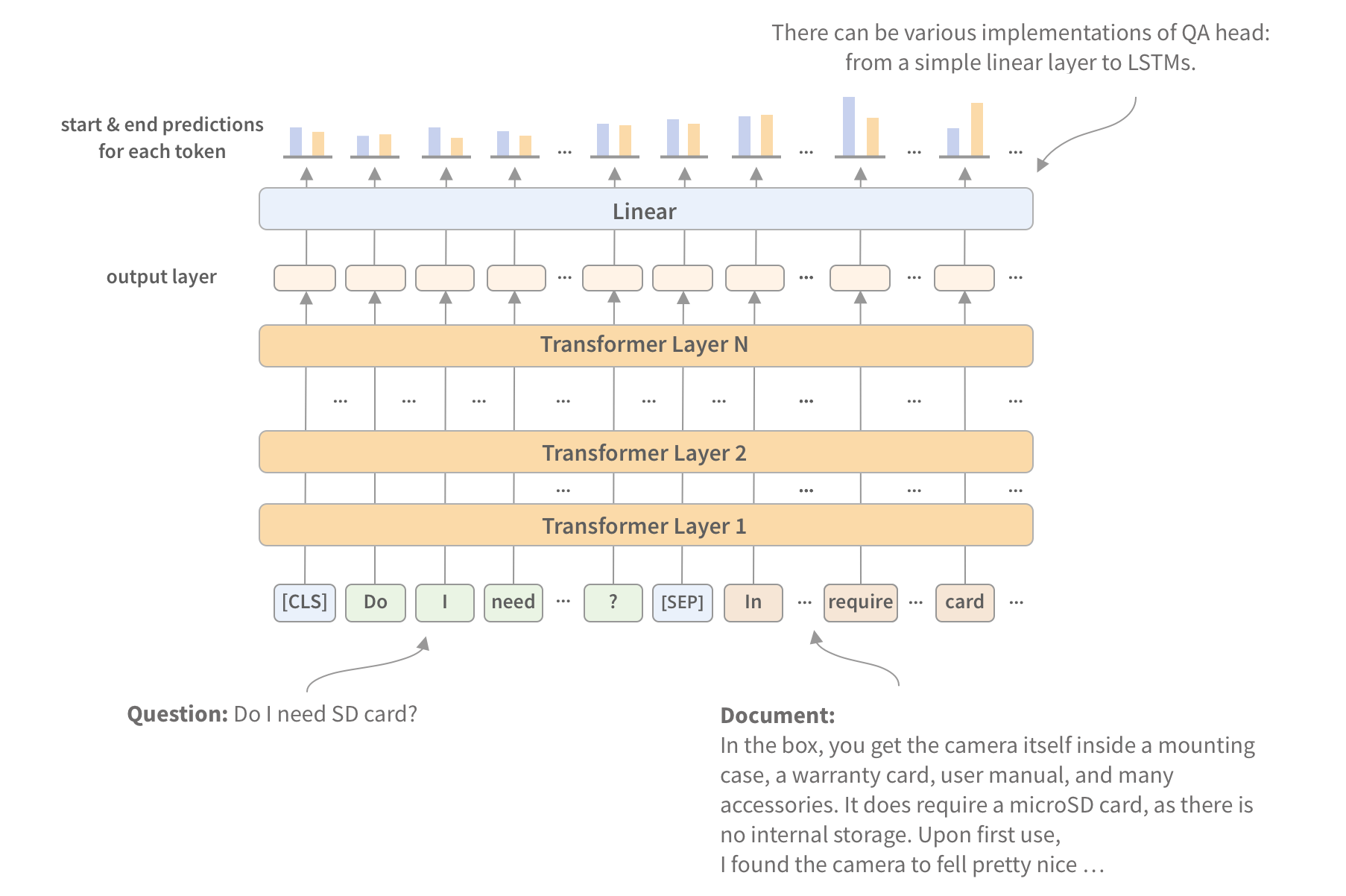

Reading comprehension model

Despite task complexity, question answering models usually have a simple architecture on top of the Transformers like BERT. Essentially, you just need to add classifiers to predict which tokens are the start and the end of the answer. The model’s input is constructed from the query and the document, where a separation token and segment embeddings help the model differentiate a question from a document.

If the predictions of the start and the end token both point to the CLS token, then the answer does not exist. In practice, such an approach doesn’t always work very well. You can find various solutions where a separate classifier or a more complex architecture is used to improve the “no answer” classification. In our case, we also faced the problem that one version of the model is better at the answer extraction task, while another is better at the “no answer” classification. If you are not familiar with the Transformer architecture and concepts like attention, the CLS token, and segment embeddings, we recommend reading more about BERT.
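The span selection described above can be sketched as a small post-processing function over the start and end scores. This is a common heuristic rather than our exact implementation: the `max_answer_len` and `no_answer_threshold` parameters are assumptions, index 0 is assumed to be the CLS token, and a real pipeline would also restrict candidate spans to document tokens (excluding the question).

```python
def best_answer_span(start_logits, end_logits, max_answer_len=30, no_answer_threshold=0.0):
    """Pick the highest-scoring (start, end) span, or None for "no answer".

    Index 0 is assumed to be the CLS token, whose score represents "no answer".
    """
    null_score = start_logits[0] + end_logits[0]
    best_score, best_span = float("-inf"), None
    for start in range(1, len(start_logits)):
        last = min(start + max_answer_len, len(end_logits))
        for end in range(start, last):
            score = start_logits[start] + end_logits[end]
            if score > best_score:
                best_score, best_span = score, (start, end)
    # Predict "no answer" when the CLS score beats the best span by the threshold.
    if best_span is None or null_score - best_score > no_answer_threshold:
        return None
    return best_span


# Toy scores: the best span starts at token 2 and ends at token 3.
span = best_answer_span([0.1, 0.2, 3.0, 0.5, 0.1], [0.1, 0.1, 0.4, 2.5, 0.3])
# Toy scores where the CLS token dominates, so no answer is returned.
no_span = best_answer_span([5.0, 0.1, 0.2], [5.0, 0.2, 0.1])
print(span, no_span)
```

Tuning the threshold on a validation set is one simple way to trade answer extraction quality against “no answer” accuracy.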

Autoregressive and sequence-to-sequence models like GPT-2 and T5 also can be applied for MRC, but that is beyond the scope of our story.

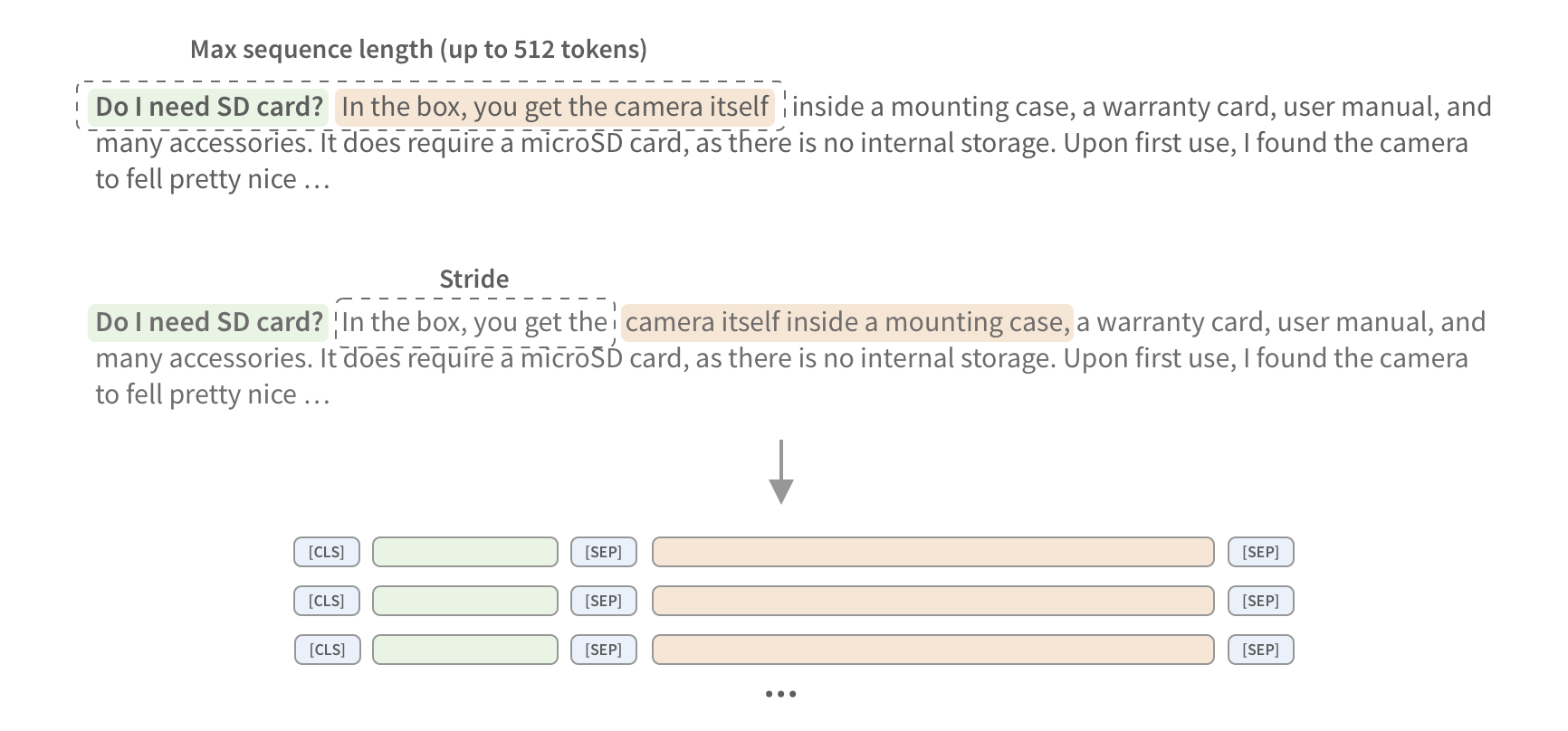

Most BERT-like models have a maximum input length of 512 tokens, but in our case, customer reviews can be longer than 2000 tokens. To process a longer document, we can split it into multiple instances using overlapping windows of tokens (see example below).
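A minimal sketch of such window splitting is shown below. Here `stride` means the step between window starts (so neighbouring windows share `window_size - stride` tokens); some libraries use the same word for the overlap instead, so the naming is an assumption of this example.

```python
def split_into_windows(tokens, window_size=384, stride=128):
    """Split a token list into overlapping windows.

    Returns (start_offset, window_tokens) pairs. `stride` is the step between
    window starts, so consecutive windows share `window_size - stride` tokens.
    """
    if not 0 < stride <= window_size:
        raise ValueError("stride must be in (0, window_size]")
    windows = []
    start = 0
    while True:
        windows.append((start, tokens[start:start + window_size]))
        if start + window_size >= len(tokens):
            break
        start += stride
    return windows


# Ten dummy token ids, windows of four tokens moved two tokens at a time.
windows = split_into_windows(list(range(10)), window_size=4, stride=2)
print([start for start, _ in windows])
```

Keeping the start offset of each window makes it possible to map a predicted span back to its position in the original document.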

The sequence length limitation is not the only reason why we want to split the document into smaller parts. Since attention calculations have quadratic complexity in the number of tokens, processing a document of 512 tokens is usually very slow. In practice, a window size of 128 to 384 tokens contains enough surrounding context to extract the answer correctly in most cases. In many cases, it is computationally beneficial to process several smaller documents instead of a single big document.
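A back-of-the-envelope calculation shows why several short windows can be cheaper than one long input. The estimate below counts only the quadratic attention term and ignores question tokens, the feed-forward layers (which scale linearly), and batching effects, so treat it as a rough intuition rather than a benchmark.

```python
def attention_cost(sequence_lengths):
    """Relative self-attention cost: sum of squared sequence lengths."""
    return sum(n * n for n in sequence_lengths)


def window_count(doc_len, window_size, stride):
    """Number of overlapping windows needed to cover doc_len tokens."""
    if doc_len <= window_size:
        return 1
    # Ceiling division over the part not covered by the first window.
    return 1 + -(-(doc_len - window_size) // stride)


single = attention_cost([512])
n = window_count(512, window_size=128, stride=64)
windowed = attention_cost([128] * n)
print(single, n, windowed, windowed / single)
```

Under these assumptions, seven overlapping 128-token windows need less than half of the attention computation of a single 512-token input, even though they process more tokens in total.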

So there is a trade-off between window size, stride, batch size, and precision, where you should find the parameters which will fit your data and performance requirements. Transformer architectures, like Reformer and Longformer, deal with long documents more efficiently, but they are usually faster only for documents with a size starting from ~2000 tokens.

Evaluating QA models for the photo & video cameras domain

Collecting question answering dataset

Whether you use a pre-trained model or train your own, you still need to collect data for a model evaluation dataset. Collecting an MRC dataset is not an easy task. To prepare a good model, you need good samples, for instance, tricky examples for “no answer” cases. Additionally, you should not only label the answers but also prepare questions if you don’t have them (compare this to tasks like classification and NER). Preparing questions can be extra challenging for exotic domains, which may require domain experts. In our case, the field is not that specific, yet many questions can be written and understood only by people with some photography experience. We decided to label a part of the test data ourselves (without services like MTurk) to better understand the challenges of the process.

Luckily, our case was not that hard because we already had customer Q&As, so we didn’t need to write questions. However, we cannot just use the Q&A data to train and test the model and then use it for everything else in our domain. The first reason is that customer reviews are very different from Q&A data in terms of content and document length. Another problem is that in many Q&A pairs the answer is biased towards its question, and not only because of lexical and syntactic similarity.

To collect better samples, we did the following:

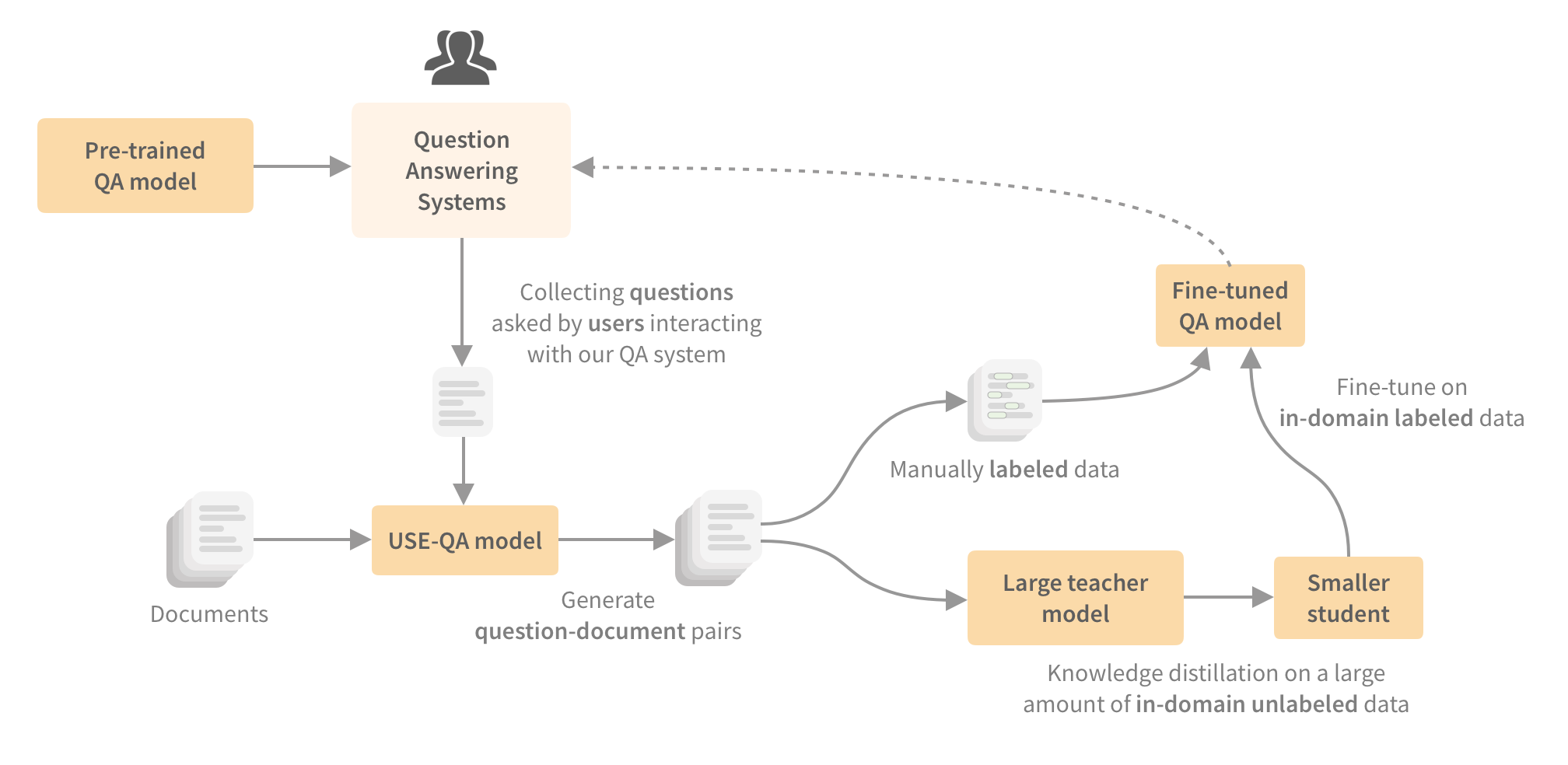

- We took ~120K Q&A pairs and clustered the questions using Universal Sentence Encoder (USE) to sample data from the clusters;

- After that, instead of using original Q&A pairs, we found customer answers and reviews for each selected question using the USE-QA model;

- Then we labeled ~1000 QA pairs. It took about one week for two people;

- Because the pre-trained USE-QA model produces mistakes, it allowed us to find and label “no answer” examples.

During labeling, we faced the following challenges:

- Labeling is slow: there are long paragraphs to read; it is easy to miss the answer; it is not always clear where the answer is; and there were many debates among the annotators about the right answer.

- Should we label shorter or longer answers? Again, there were debates among the annotators, and the different QA models that we used as baselines don't produce consistent results either. The solution was to label multiple overlapping answers.

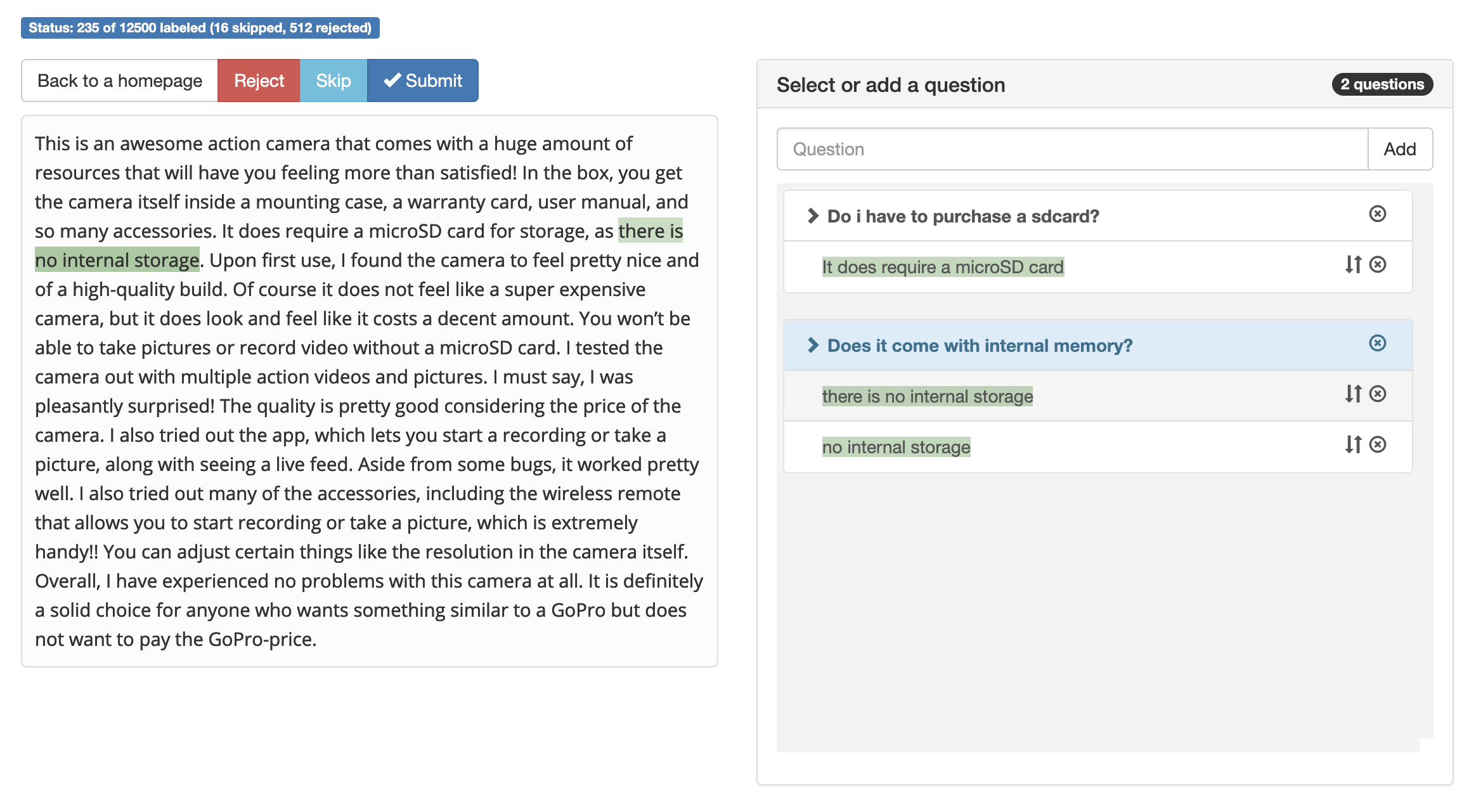

We also built our own tool to label the data faster because we didn’t find any convenient tool on the market (except Label Studio, which can be adapted to the MRC task via NER labels).

Here are several examples of questions from our dataset:

- How long does the battery last? How long does it take to fully charge?

- Does it come with SD card? What type of SD card do I need to buy?

- Does this camera come with a built-in flash?

- Can I use an external mic with this camera?

- Can I use Canon lenses?

- Does it take good pictures at night with regular lenses?

- Is this camera good for landscape photography?

- Can I record videos while the camera is charging?

- Is it compatible with the GoPro case?

- Can I go under water with it?

- Does this camera have in-camera image stabilization?

Can we generate such questions using some rules or deep learning models like GPT or T5? The answer is “yes, and no”. You can find several works describing such an approach, but we didn’t see any convincing results. We believe that it is not feasible to generate really good questions automatically, and such questions will be different from real-life examples.

In general, even synthetic questions may help improve your model as an additional source of data for training, and such an approach can also be used for data augmentation. To generate the question either by rules or generative models, you first need to extract possible answers (e.g., using NER models).

Pre-trained QA models

Helpfully, there are plenty of models pre-trained on SQuAD 2.0, with different architectures and sizes, on the 🤗 HuggingFace Model Hub. Let’s check how well they perform in our domain (using our 1000 labeled examples):

| Model [1] | F1 [2] | EM | F1_span [3] | EM_span | F1_no_ans | SQuAD 2.0 F1/EM |
|---|---|---|---|---|---|---|
| ALBERT-xxlarge | 70.8 | 59.6 | 84.3 | 61.0 | 76.7 | 89.0 / 84.6 |
| RoBERTa-large | 65.3 | 53.3 | 77.9 | 54.6 | 73.1 | 87.4 / 83.4 |
| RoBERTa-base | 59.1 | 48.1 | 70.8 | 45.0 | 70.0 | 81.4 / 78.3 |
| ALBERT-base | 58.7 | 48.4 | 69.9 | 45.1 | 68.8 | 81.1 / 77.0 |
| DistilRoBERTa | 50.3 | 40.1 | 61.3 | 36.4 | 62.4 | 74.6 / 70.9 |

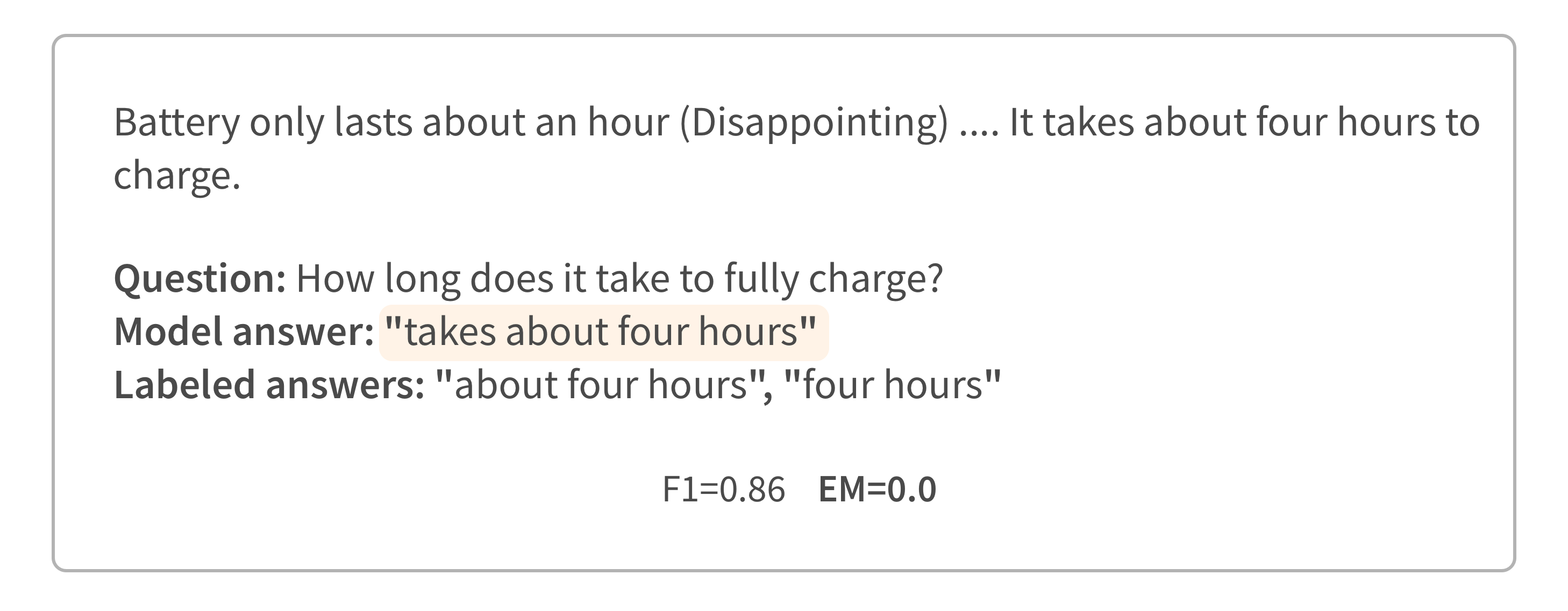

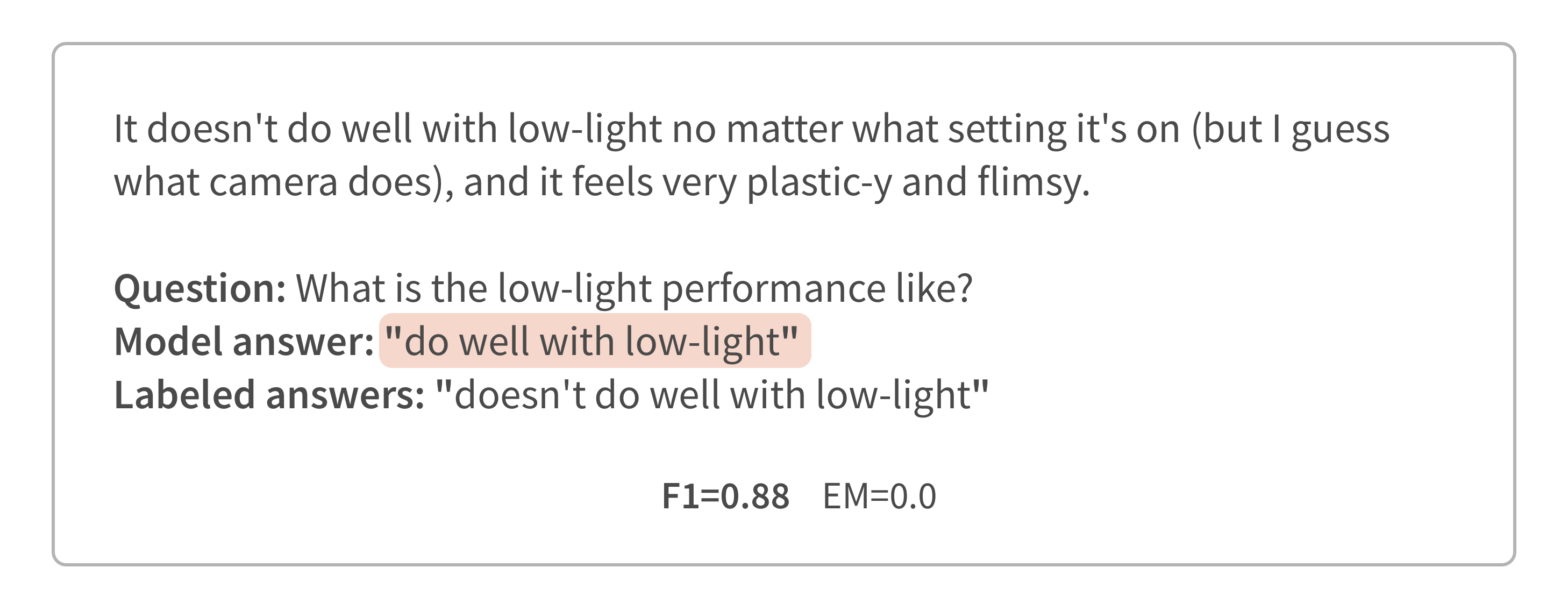

You can see a clear correlation between the metrics and the model size. That is typical for the Transformers world — bigger models show better results. You can also see two types of F1 and EM (exact match) metrics in the table. Since “no answer” classification is a pretty challenging task, we are often faced with the problem that some models are good at the “no answer” task (F1_no_ans), while others at the answer extraction (F1_span). So, it makes sense to keep an eye on both objectives separately. For the answer extraction task, we should track both F1 and EM metrics as well: even though EM is more precise, it’s difficult to label all possible variations of the correct answer. That means that EM will be underestimating the model performance. On the other hand, F1 is prone to overestimate the model performance in some cases (see examples below).
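For reference, here is a compact sketch of SQuAD-style EM and token-level F1, including the "best match over several labeled answers" rule that goes well with multiple overlapping gold answers. The normalization follows the usual SQuAD convention (lowercasing, removing punctuation and English articles); the example strings are invented for illustration.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, strip punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold):
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction, gold):
    pred_tokens, gold_tokens = normalize(prediction).split(), normalize(gold).split()
    if not pred_tokens or not gold_tokens:
        # Both empty means a correct "no answer" prediction.
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def best_over_golds(metric, prediction, golds):
    """Score against several labeled answers and keep the best match."""
    return max(metric(prediction, gold) for gold in golds)


golds = ["about 400 shots", "400 shots"]
em = best_over_golds(exact_match, "around 400 shots per charge", golds)
f1 = best_over_golds(f1_score, "around 400 shots per charge", golds)
# Opposite meaning, yet most tokens overlap: F1 overestimates here.
misleading_f1 = f1_score("no, it is not waterproof", "yes, it is waterproof")
print(em, f1, misleading_f1)
```

The first prediction is a reasonable answer that EM scores as zero, while the last pair shows how token overlap can reward an answer with the opposite meaning.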

Although the results are far from ideal and noticeably lower than the SQuAD dataset results, the largest model shows pretty good results out of the box. However, it's so slow that we can't use it in production even with GPU acceleration. The RoBERTa-base and ALBERT-base models don’t perform that well, and they are also not fast enough for our case, mostly because we work with long sequences (more than 256 tokens) and we would like to process ten such documents per user request in our pipeline. Still, with various optimizations and GPU-accelerated servers, *-base models can be suitable for many cases, including ours. Let’s try to improve the models’ quality for our domain.

Adapting the model to your domain

Does domain adaptation matter for QA tasks? After all, the model trained on the SQuAD dataset already answers many questions correctly. If we look at how we usually answer questions like “what is …”, “how to …”, “how many …”, it often depends on domain-independent knowledge about the language, especially when there is syntactic and lexical similarity between a question and an answer. There are questions for which you can find an answer without understanding some of the words in it. But in reality, not all documents and answers are similar to a question in such a way, so domain-specific lexical knowledge is still necessary for many questions, and the SQuAD dataset doesn’t cover all the cases. Thus, we still need to enrich a language model with our domain knowledge and fit the model to our questions and documents.

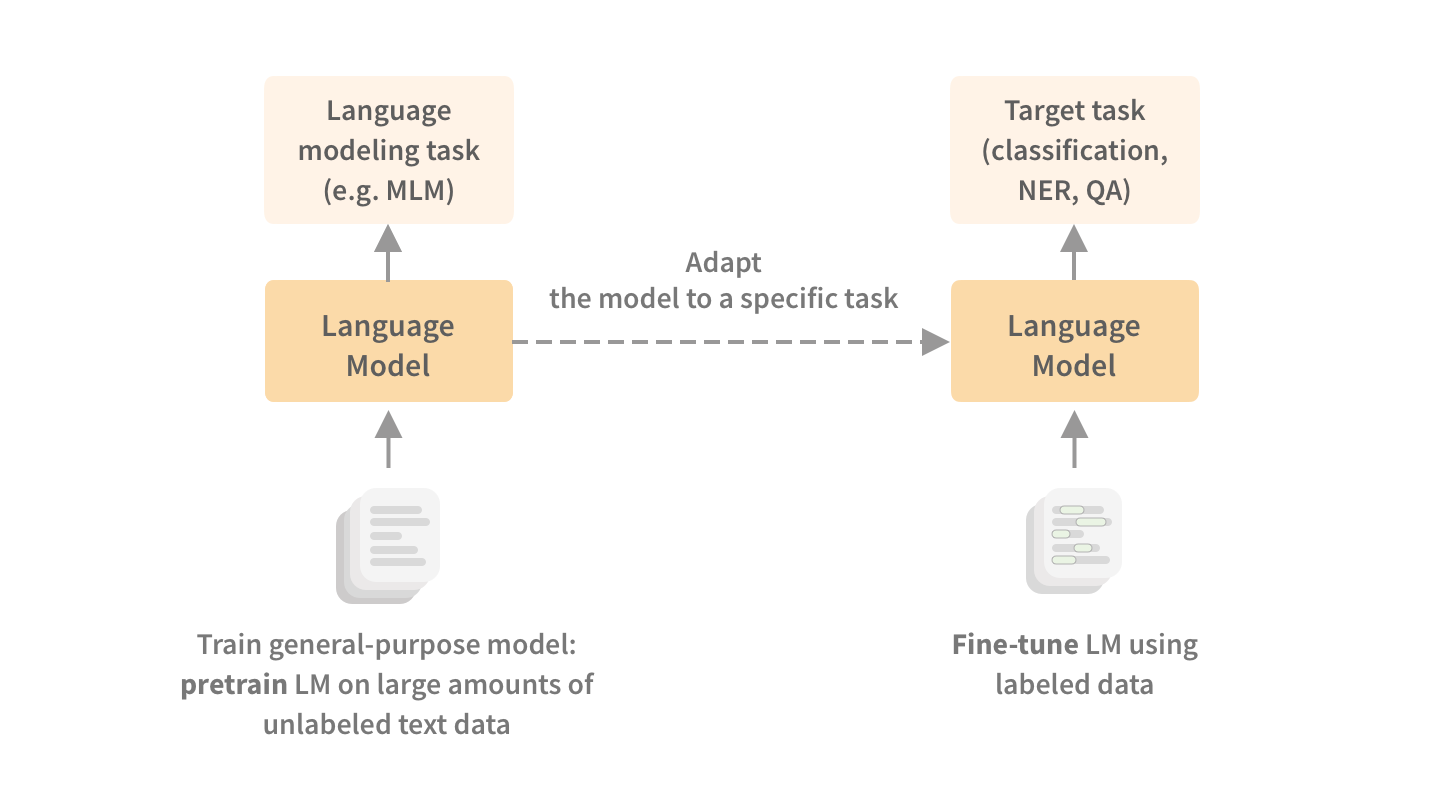

In modern NLP, we use Transfer Learning techniques to adapt the language models (LM) to a target domain and task. There are usually two main steps: LM pre-training on unlabeled data and then fine-tuning for target tasks using a labeled dataset. The LM pre-training step helps a lot with sample-efficiency when you can achieve good results using fewer labeled samples. But even if your LM has enough knowledge after pre-training, you still need to add task-specific layers on top of it and adapt them to a target task as well, and here you still need enough labeled data (that is one of the limitations to achieve even better sample-efficiency during fine-tuning [4]).

Let’s investigate how we can adapt the model to our photo & video cameras domain using unlabeled data, and what is possible to achieve with a small labeled dataset. For our project, we had ~1GB of raw text data related to the photo & video cameras domain, and ~120K unlabeled Q&A pairs.

Domain-adaptive pre-training

First, let's check if pre-training the LM on in-domain data will help us. In our case, we had ~1GB of raw text, which is not enough to train the LM from scratch but could be enough to take a pre-trained language model and continue pre-training it on domain-specific data. After we adapt the LM using a masked language modeling objective [5], we still need to fine-tune it for the MRC task, and that’s where we need labeled data.
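For readers unfamiliar with the masked language modeling objective, the sketch below shows how training inputs are typically corrupted, using the 15% selection rate and the 80/10/10 replacement rule from the original BERT recipe. The `<mask>` token name, the tiny vocabulary, and the sample sentence are assumptions for illustration; actual pre-training would rely on a library data collator rather than this function.

```python
import random


def mask_tokens(tokens, vocab, mask_token="<mask>", mask_prob=0.15, seed=None):
    """Return (corrupted_tokens, labels) for masked language modeling.

    labels[i] holds the original token where a prediction is required
    and None elsewhere.
    """
    rng = random.Random(seed)
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = mask_token          # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)   # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


# Invented in-domain sentence and vocabulary.
tokens = "the lens has fast autofocus and good image stabilization".split()
vocab = ["camera", "battery", "sensor", "lens", "flash"]
corrupted, labels = mask_tokens(tokens, vocab, seed=1)
print(corrupted)
print(labels)
```

The model is trained to predict the original token at every position where `labels` is set, which requires no manual annotation and is why raw in-domain text is enough for this step.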

We can still use the SQuAD dataset to fine-tune task-specific layers, but since this dataset does not overlap with our domain, the resulting model probably cannot outperform existing models trained on it. Moreover, training on the SQuAD dataset may lead to catastrophic forgetting (of what we learned during pre-training). However, several studies have shown that the knowledge gained at the lower layers of the Transformer does not change dramatically during fine-tuning on an MRC task (other tricks like discriminative fine-tuning and gradual unfreezing can also help here).
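The two tricks mentioned in parentheses can be sketched as simple schedules. Discriminative fine-tuning assigns smaller learning rates to lower layers, and gradual unfreezing makes one more layer trainable from the top at each epoch. The concrete values (`top_lr=3e-5`, `decay=0.9`, one layer per epoch) are assumptions for illustration, not settings from our experiments.

```python
def layerwise_learning_rates(num_layers, top_lr=3e-5, decay=0.9):
    """Learning rate per layer; index 0 is the lowest layer, the last is the top."""
    return [top_lr * decay ** (num_layers - 1 - layer) for layer in range(num_layers)]


def trainable_layers(num_layers, epoch):
    """Gradual unfreezing: unfreeze one more layer from the top each epoch."""
    first_trainable = max(0, num_layers - 1 - epoch)
    return list(range(first_trainable, num_layers))


rates = layerwise_learning_rates(12)
print([f"{lr:.2e}" for lr in rates])
print(trainable_layers(12, epoch=0), trainable_layers(12, epoch=2))
```

Both schedules protect the lower layers, where most of the general and in-domain language knowledge is expected to live, from large updates early in fine-tuning.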

We also have our small test dataset (1000 labeled examples). The dataset is still tiny to fine-tune task-specific layers, so additional fine-tuning on SQuAD may help here, and then we can fine-tune the model on our test dataset using cross-validation. Let’s perform several experiments with RoBERTa-base.

| Model | F1 | EM | F1_span | EM_span | F1_no_ans | SQuAD 2.0 F1/EM |
|---|---|---|---|---|---|---|
| RoBERTa-base + in-domain pretraining + SQuAD 2.0 | 62.2 (+3.1) | 51.1 (+3.0) | 74.2 (+3.4) | 48.3 (+3.3) | 73.2 (+3.2) | 80.4 / 76.4 |
| (baseline) RoBERTa-base + SQuAD 2.0 | 59.1 | 48.1 | 70.8 | 45.0 | 70.0 | 81.4 / 78.3 |

As we can see, additional LM pre-training improves the model. Now let’s take these models to fine-tune them using cross-validation (1000 examples, mean of 4 folds shown in the table):

| Model | F1 | EM | F1_span | EM_span | F1_no_ans |
|---|---|---|---|---|---|
| RoBERTa-base + in-domain pretraining + SQuAD 2.0 + CV | 72.3 (+3.1) | 62.8 (+3.0) | 81.8 (+2.2) | 58.2 (+0.9) | 77.8 (+2.3) |
| (baseline) RoBERTa-base + SQuAD 2.0 + CV | 69.2 | 59.8 | 79.6 | 57.3 | 75.5 |

Although we cannot directly compare the models’ performance on our full test dataset with the mean of the folds, it still shows us that we can improve our model even with a small dataset. And we see again that additional LM pre-training on in-domain data helps in our case, even with fine-tuning on SQuAD after that. We also tried cross-validation without the SQuAD dataset, but, as expected, the model didn't show good results. Even in those experiments, though, the model with additional LM pre-training was better.
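The cross-validation protocol used for these tables (fine-tune on three folds, evaluate on the fourth, report the mean) can be sketched as follows. The `train_and_evaluate` callable is a placeholder for fine-tuning and scoring a model; its name and signature are assumptions of this example.

```python
import random
from statistics import mean


def k_fold_indices(num_examples, k=4, seed=0):
    """Yield (train_indices, test_indices) for each of k folds."""
    indices = list(range(num_examples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def cross_validate(num_examples, train_and_evaluate, k=4):
    """Average a metric over folds; train_and_evaluate is a user-supplied callable."""
    return mean(train_and_evaluate(train, test)
                for train, test in k_fold_indices(num_examples, k))


folds = list(k_fold_indices(1000, k=4))
print([(len(train), len(test)) for train, test in folds])
```

With 1000 labeled examples and four folds, each model is fine-tuned on 750 examples and evaluated on the remaining 250, so every example is used for evaluation exactly once.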

Knowledge distillation for unlabeled data

Using the same approach as for creating the test dataset, we collected an additional ~30K question-document pairs from customer Q&As and reviews for which we don’t have labels. One of the obvious ideas is to use a pseudo-labeling technique, but it didn't give us noticeable improvements. A less obvious idea is to apply knowledge distillation to our problem.

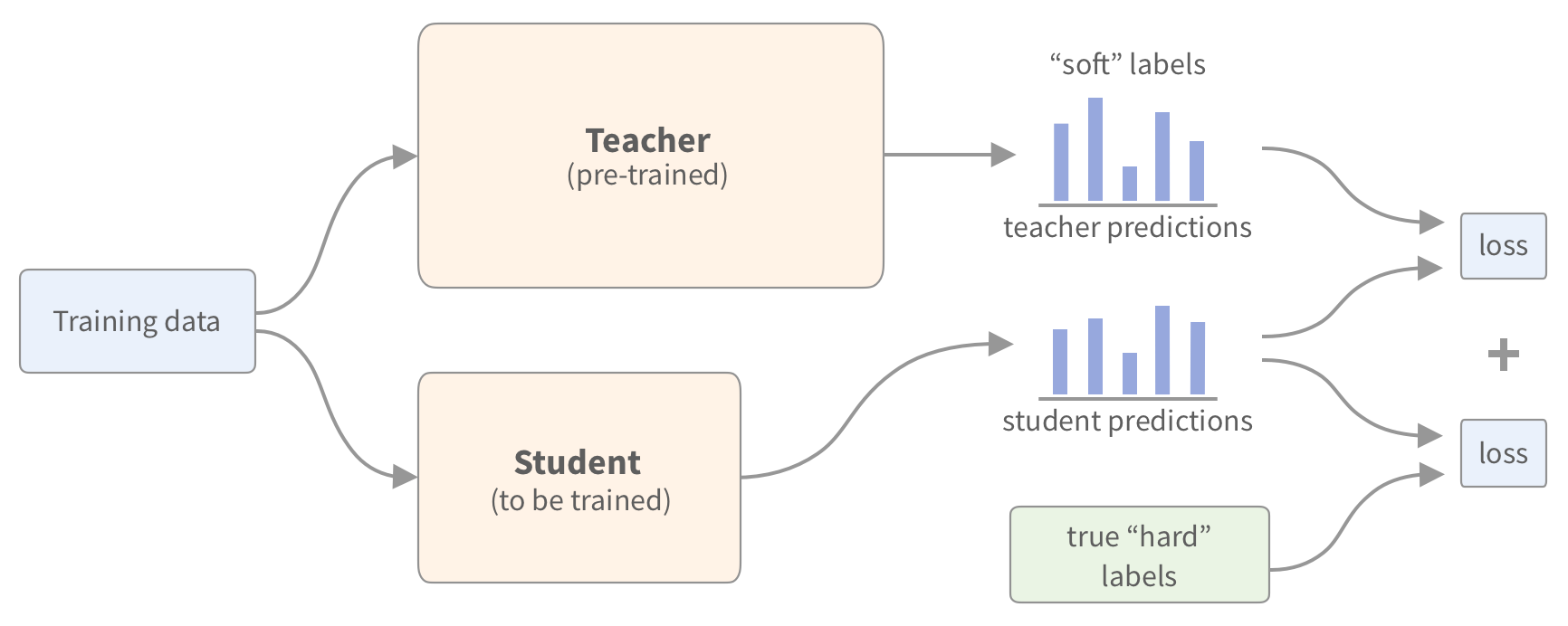

Knowledge distillation is usually used as a model compression technique when we have a model, but it is not fast or small enough. Knowledge distillation is a technique in which we train a smaller model (student) using output probabilities from our primary larger model (teacher), so a student starts to imitate its teacher’s behavior. Rather than training only with a cross-entropy loss over the hard targets (ground-truth labels), we also transfer the knowledge from the teacher to the student with a cross-entropy over probabilities of the teacher. The loss based on comparing output distributions is much richer than from only hard targets. The idea is that by using such “soft” labels, we can transfer some “dark knowledge” from the teacher model. Additionally, learning from “soft” labels prevents the model from being too sure about its prediction, similarly to a label smoothing technique.
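The loss described above can be written down in a few lines. This is a minimal sketch in plain Python for a single probability distribution; for MRC it would be applied separately to the start-position and end-position distributions. The `temperature` and `alpha` values are assumptions, and the temperature-squared scaling that some formulations add to the soft term is omitted for brevity.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, predicted_probs, eps=1e-12):
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, predicted_probs))


def distillation_loss(student_logits, teacher_logits, hard_label=None,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of soft-target and (optional) hard-target cross-entropy.

    With hard_label=None only the teacher term is used, which is one way to
    handle examples that have no ground-truth label.
    """
    soft = cross_entropy(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    if hard_label is None:
        return soft
    one_hot = [1.0 if i == hard_label else 0.0 for i in range(len(student_logits))]
    hard = cross_entropy(one_hot, softmax(student_logits))
    return alpha * soft + (1 - alpha) * hard


teacher = [2.0, 1.0, 0.1]
matching = distillation_loss(teacher, teacher)
mismatched = distillation_loss([0.1, 1.0, 2.0], teacher)
print(matching, mismatched)
```

The soft term is smallest when the student reproduces the teacher's distribution, so minimizing it pushes the student to imitate the teacher's relative confidence across all candidate positions, not only its top prediction.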

Knowledge distillation is a beautiful technique that works surprisingly well and is especially useful with Transformers. Bigger models often show better results, but it’s hard to put such big models into production, and knowledge distillation is one of the main techniques to help here.

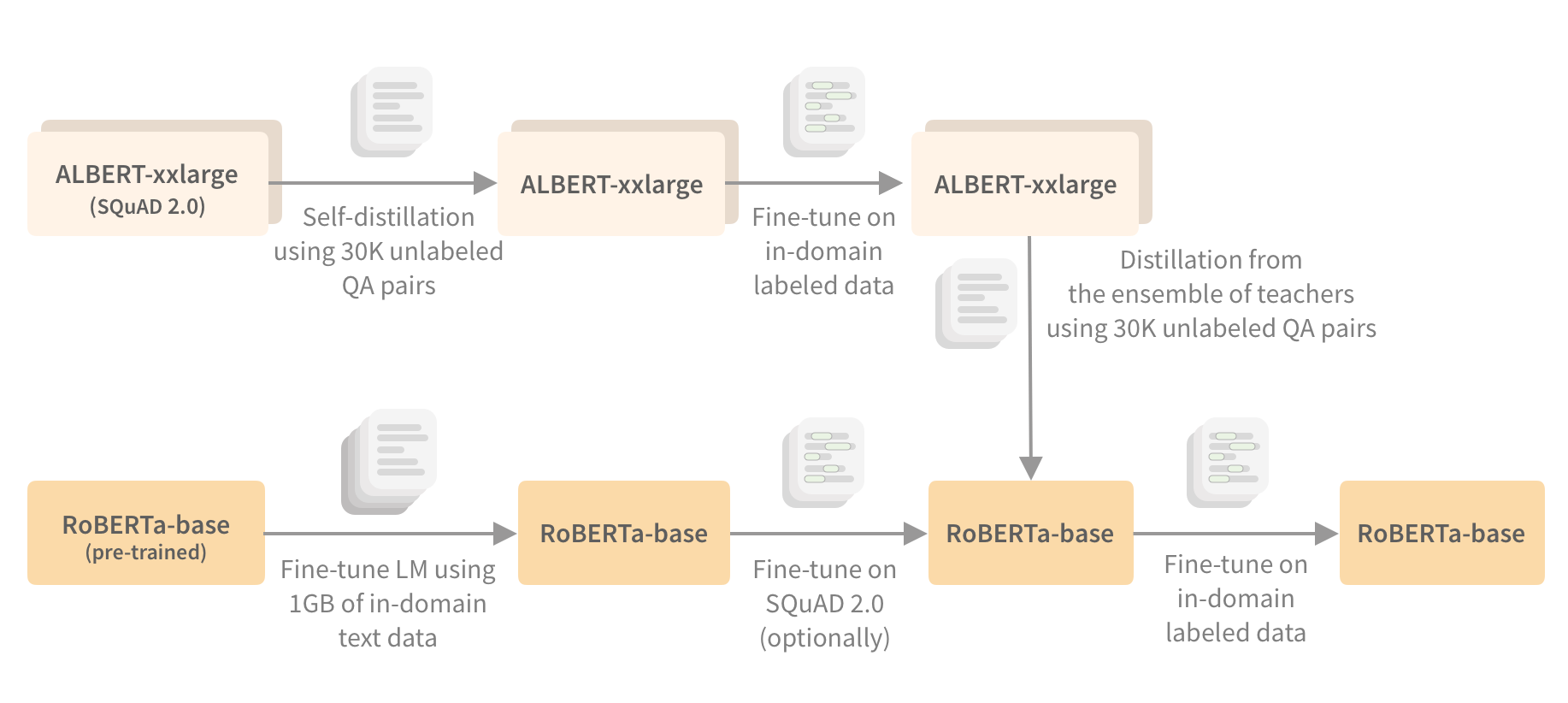

Now, let’s understand how we can apply distillation in our case. As we saw earlier, ALBERT-xxlarge showed the best results on our dataset, so it will be the teacher model. We don’t have labels for our 30K examples, but we can simply remove the part of the loss that uses labels. Sure, we will inherit more teacher mistakes during knowledge distillation without ground-truth labels, but we don’t have models better than our teacher at the moment, so even getting similar results with a smaller model would be useful for us. Let’s try to distill the knowledge into our RoBERTa-base fine-tuned on in-domain data (we didn't use ALBERT-base because there were no fast tokenizers or ONNX support for the ALBERT architecture at that time).

| Model | F1 | EM | F1_span | EM_span | F1_no_ans | SQuAD 2.0 F1/EM |
|---|---|---|---|---|---|---|
| ALBERT-xxlarge + SQuAD 2.0 | 70.8 | 59.6 | 84.3 | 61.0 | 76.7 | 89.0 / 84.6 |
| RoBERTa-base + in-domain pretraining + SQuAD 2.0 + Distillation | 66.6 | 57.2 | 82.9 | 60.6 | 74.1 | 67.0 / 61.2 |
| DistilRoBERTa + SQuAD 2.0 + Distillation | 63.3 | 52.3 | 77.7 | 54.0 | 71.1 | |
| RoBERTa-base + in-domain pretraining + SQuAD 2.0 | 62.2 | 51.1 | 74.2 | 48.3 | 73.2 | 80.4 / 76.4 |
| RoBERTa-base + SQuAD 2.0 | 59.1 | 48.1 | 70.8 | 45.0 | 70.0 | 81.4 / 78.3 |

As you can see, we got a RoBERTa-base with F1/EM close to its teacher, ALBERT-xxlarge, which is also much slower than our RoBERTa, and we didn’t use any labeled data for training. Of course, this doesn’t mean we don’t need labeled data anymore: the score is still far from ideal, and we also inherited teacher mistakes, so adding labeled data may improve this training procedure.

We can also think about distillation as an additional pre-training step to achieve better sample-efficiency when using labeled data. Below, you can see that knowledge distillation helps in our cross-validation experiments as well.

| Model | F1 | EM | F1_span | EM_span | F1_no_ans |
|---|---|---|---|---|---|
| RoBERTa-base + in-domain pretraining + SQuAD 2.0 + Distillation + CV | 73.1 (+0.8) | 63.0 (+0.2) | 83.2 (+1.4) | 59.5 (+1.3) | 78.8 (+1.0) |
| RoBERTa-base + in-domain pretraining + SQuAD 2.0 + CV | 72.3 | 62.8 | 81.8 | 58.2 | 77.8 |

Self-distillation

The better the teacher you have, the better the student you can probably train. So, one of the main directions is improving the teacher model. First of all, the distillation training procedure allows us to effectively use an ensemble of teachers and distill them into a single smaller model. Another exciting technique is self-distillation, where we use the same model architecture for both student and teacher. Self-distillation allows us to train a student model that performs better than its teacher. It may seem strange, but because the student updates its weights by learning from data that the teacher didn’t see, this can lead to better performance of the student (of comparable size) on data from that distribution. Our experiments also reproduced this behavior when we applied self-distillation to ALBERT-xxlarge and then used it as one of our teachers for further knowledge distillation to RoBERTa-base.

Knowledge distillation as pre-training using unlabeled data is not a novel idea, and it has already shown good results in both computer vision (SimCLRv2, Noisy Student) and NLP (Well-Read Students Learn Better).

It is worth noting that even though we didn’t label any data for distillation, we still had questions, which is not always the case. But as we mentioned earlier, we didn’t use the original Q&A pairs. Instead, we collected these pairs from independent Q&As and reviews using the pre-trained USE-QA model. We can approach further model improvements in production in the same way. After we start collecting real questions asked by users interacting with our system, we can find candidate documents for these questions in the same way and use this dataset for knowledge distillation without labeling many examples.

Accelerating BERT in production

RoBERTa-base model was our choice in terms of a trade-off between quality and speed, but it is possible to distill the knowledge even into smaller models like DistilRoBERTa. As we said earlier, RoBERTa-base is still relatively slow, so we applied various optimizations (most of them are well-known), which allowed us to achieve more than 3.5x acceleration. Here is the list of things which worked well for us:

- Use GPUs for inference. Modern GPUs like NVIDIA T4 can be much more beneficial compared to a CPU at a similar price.

- Use a low precision inference: fp16 for GPU (up to 2x acceleration), int8 for CPU.

- Use faster tokenizers: this can be a bottleneck even more than the model inference itself. There are faster implementations written in Rust. You can also pre-compute / cache tokens for documents.

- Use optimized inference engines like ONNX Runtime, TensorRT, etc. They also have various optimizations specifically for BERT / RoBERTa. They are especially effective for sequences shorter than 128 tokens.

- Smart batching: group inputs of multiple concurrent requests by sequence length.

- For long documents split into overlapping windows, you can use a faster model to select the best window instead of processing the entire document.

- And of course, a simple cache by a question helps for popular queries.
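The smart batching item from the list above can be sketched as follows: sort the pending inputs by length, cut them into batches, and pad each batch only to its own longest sequence. The function name, `batch_size`, and `pad_id` are assumptions; a production server would additionally bound how long a request may wait for its batch.

```python
def smart_batches(token_sequences, batch_size=8, pad_id=0):
    """Group sequences of similar length so each batch needs little padding."""
    order = sorted(range(len(token_sequences)), key=lambda i: len(token_sequences[i]))
    batches = []
    for start in range(0, len(order), batch_size):
        ids = order[start:start + batch_size]
        width = max(len(token_sequences[i]) for i in ids)
        padded = [token_sequences[i] + [pad_id] * (width - len(token_sequences[i]))
                  for i in ids]
        # Keep original positions so results can be returned to the right request.
        batches.append((ids, padded))
    return batches


# Four dummy inputs of lengths 5, 50, 6 and 48 tokens.
sequences = [[1] * 5, [1] * 50, [1] * 6, [1] * 48]
batches = smart_batches(sequences, batch_size=2)
padded_tokens = sum(len(row) for _, rows in batches for row in rows)
print([ids for ids, _ in batches], padded_tokens)
```

In this toy case, grouping by length processes 112 padded tokens, whereas batching the same inputs in arrival order would pad both batches to about 50 tokens and process 196.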

Besides knowledge distillation, there are other popular techniques, for instance, pruning. For computationally efficient pruning, it is better to prune entire attention heads or even layers. Yet pruning is more beneficial when your goal is to decrease the model’s size, not to accelerate it. Pruning can also be easily applied after model training by analyzing which weights are less important. In our case, we were able to prune ~15% of attention heads without a significant drop in accuracy.
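Selecting which heads to prune can be as simple as ranking them by an importance score and dropping the lowest fraction. The sketch below assumes that such scores are already available as a dictionary; how they are estimated (for example, from the change in loss when a head is masked) is left open, and the synthetic scores are placeholders.

```python
def heads_to_prune(importance, fraction=0.15):
    """Select the least important attention heads.

    importance: dict mapping (layer, head) -> importance score
    (how the scores are computed is deliberately left open here).
    """
    num_to_prune = int(len(importance) * fraction)
    ranked = sorted(importance, key=importance.get)
    return sorted(ranked[:num_to_prune])


# Synthetic scores for a 12-layer model with 12 heads per layer (144 heads).
importance = {(layer, head): layer * 12 + head
              for layer in range(12) for head in range(12)}
pruned = heads_to_prune(importance, fraction=0.15)
print(len(pruned), pruned[:3])
```

For a 12-layer, 12-head model, a 15% budget corresponds to 21 of the 144 heads.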

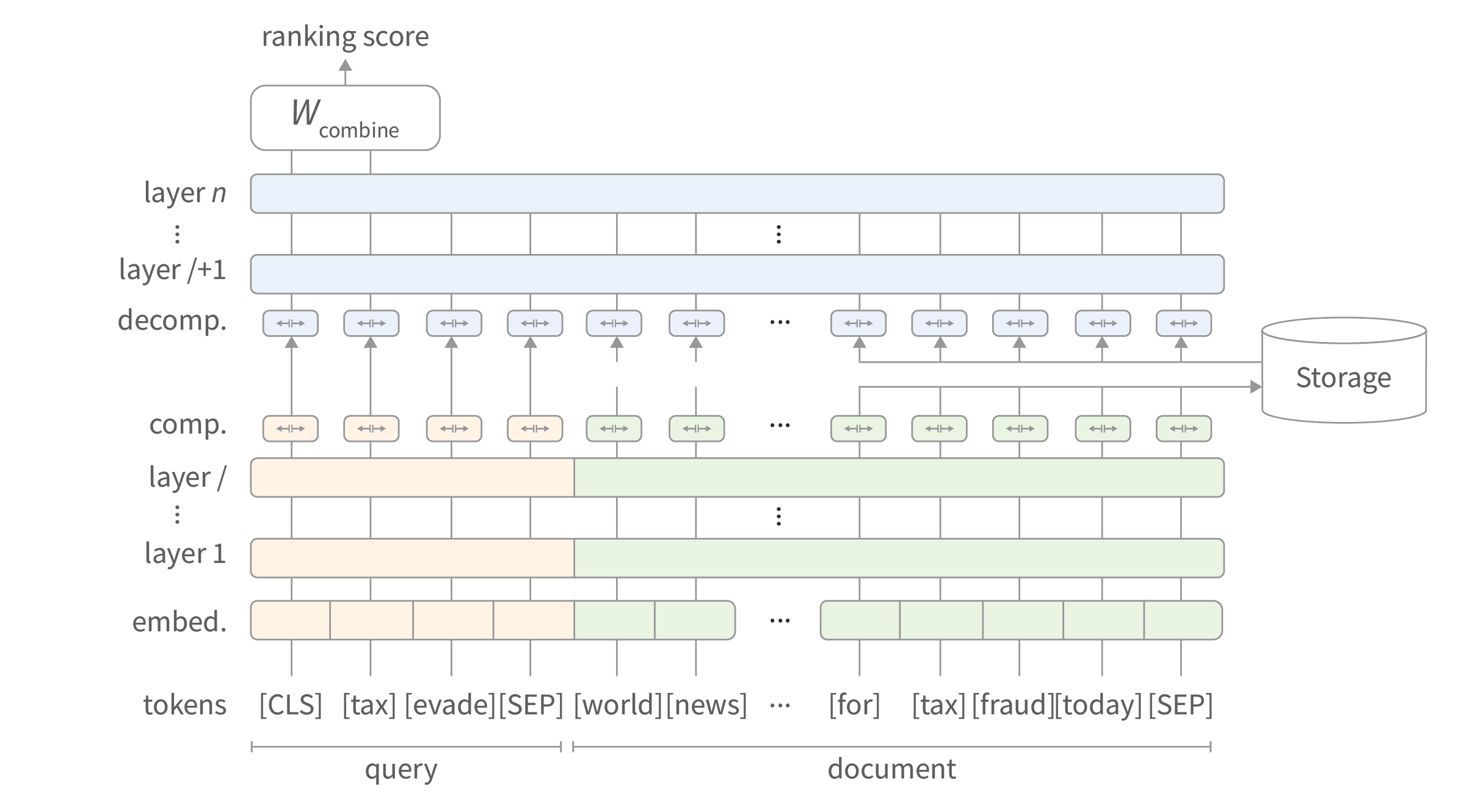

There are also various task-specific model optimizations, for instance, Early Exit, which is mostly applied to classification tasks, and Pre-computed Representation for a document ranking model. The second one requires pretty simple modifications to the pre-trained BERT or RoBERTa architecture and can significantly accelerate the document re-ranking model in the question-answering pipeline.

We also tried to apply that to our MRC model, where we removed attention (before fine-tuning) between question and document for 1-5 layers without a significant drop in accuracy, but it gave us only ~1.15x acceleration, and only for documents longer than 256 tokens.

Generally, we can achieve a low latency, but high throughput for long documents is difficult to achieve even with DistilRoBERTa. Moreover, in our case, we need to apply the MRC model to 5-10 documents per user request. That's why retrieval and re-ranking models are also important. If they allow us to retrieve smaller chunks of a document more accurately, we will feed the MRC model with a smaller number of shorter candidates.

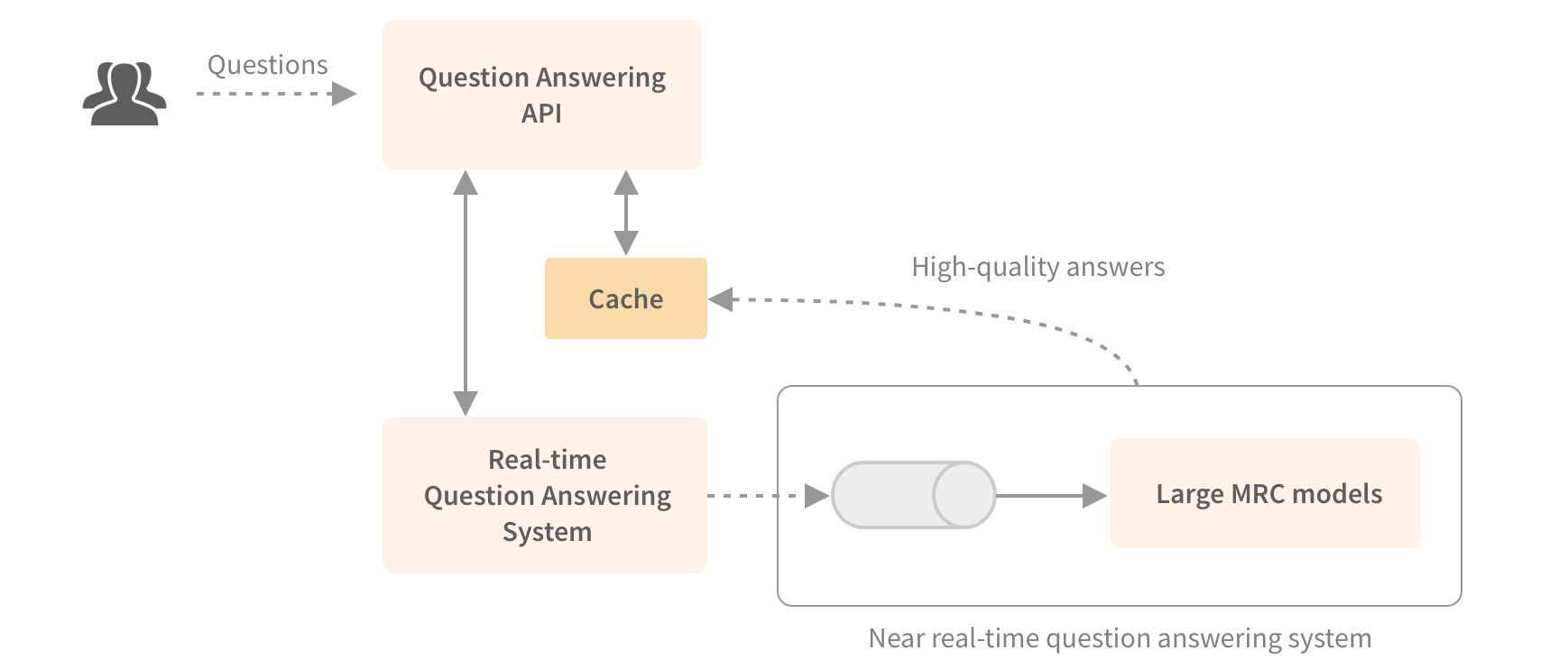

However, for high-load systems, it makes sense to consider less complex models and retrieval-only approaches (without the MRC model). You can also implement two question answering systems: a slower, more accurate one and a faster, less accurate one. That allows you to balance the load between them or to use the slower system in near real-time mode: immediately return the answer provided by the faster QA system, then compute the answer with the more complex models in the background and serve that higher-quality answer to similar questions next time.
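
The fast-then-slow pattern can be sketched as follows. This is an assumption-laden illustration: `TwoTierQA`, `fast_fn`, and `slow_fn` are hypothetical names, answers are kept in a plain in-memory dict, and questions are matched exactly (matching *similar* questions, e.g. via normalization or embeddings, is left out).

```python
from concurrent.futures import ThreadPoolExecutor


class TwoTierQA:
    """Answer with a fast model now; refine with a slow model in the background."""

    def __init__(self, fast_fn, slow_fn, workers=2):
        self.fast_fn = fast_fn
        self.slow_fn = slow_fn
        self.refined = {}   # question -> high-quality answer
        self.pending = {}   # question -> Future of a running refinement
        self.pool = ThreadPoolExecutor(max_workers=workers)

    def _refine(self, question):
        self.refined[question] = self.slow_fn(question)

    def ask(self, question):
        if question in self.refined:
            return self.refined[question]
        if question not in self.pending:
            # Schedule the expensive model once per distinct question.
            self.pending[question] = self.pool.submit(self._refine, question)
        return self.fast_fn(question)

    def wait(self):
        """Block until all background refinements finish (useful in tests)."""
        for future in list(self.pending.values()):
            future.result()
        self.pending.clear()
```

The first caller gets the fast answer immediately, and later callers get the refined one once the background job has finished. A real deployment would likely replace the dict with a shared cache and add expiry.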

Utilizing the MRC model even more

In this blog post, we described how machine reading comprehension (MRC) can be used to build question answering systems over a set of documents. But the same model can benefit other systems in our photo and video camera domain.

The first application we discovered is using MRC as a universal information extractor. Why should only users be able to ask our model questions? We can predefine a set of questions to extract information for other tasks automatically. One example is the automatic extraction of the pros and cons of a particular product from customer reviews. When a user opens a product page, we can show the information extracted by our MRC model in a separate block.
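
A minimal sketch of this idea, assuming the MRC model is exposed as a callable `reader(question, context)` that returns an answer string and a confidence score. That interface, the function name, and the `min_score` threshold are illustrative assumptions, not our actual API.

```python
def extract_fields(reader, reviews, questions, min_score=0.5):
    """Run a fixed set of questions over every review and collect confident answers.

    reader: any callable (question, context) -> (answer_text, score); a stand-in
            for the MRC model, whose real interface is not shown in the post.
    questions: {field_name: question_text}, e.g. {"pros": "What is good about it?"}
    """
    extracted = {field: [] for field in questions}
    for review in reviews:
        for field, question in questions.items():
            answer, score = reader(question, review)
            # Skip "no answer" results, low-confidence spans, and duplicates.
            if answer and score >= min_score and answer not in extracted[field]:
                extracted[field].append(answer)
    return extracted
```

Because the questions are fixed, this can run offline over all reviews, so the product page only has to display the stored results.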

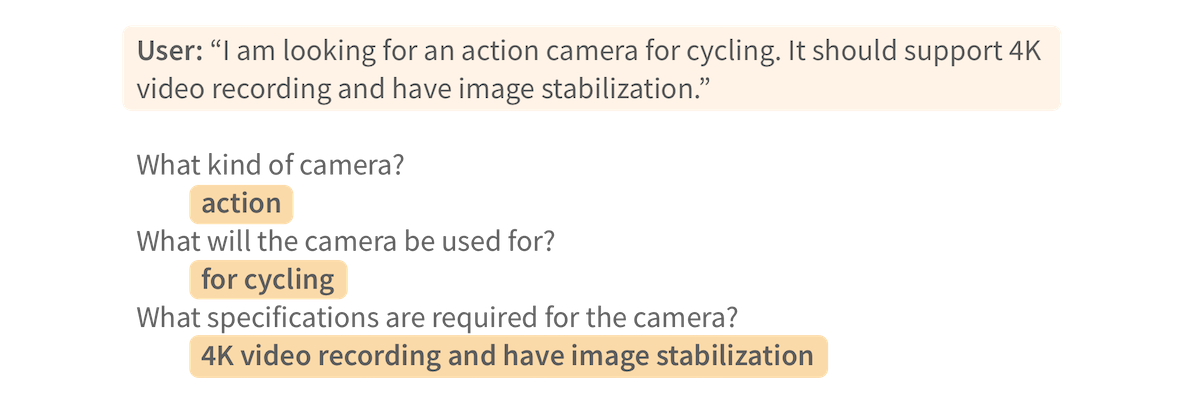

Another natural place for MRC is a conversational system, where we can replace a bunch of NLU models with a single MRC model. It can be especially effective for product information questions and FAQs. A less obvious application is using MRC as a NER model, asking questions to extract information from user utterances (see example below). Such an approach may not be efficient when you have many types of entities, and for some entities it doesn’t work as well as a classic NER model, but it is still a pretty interesting example of zero-shot NER.
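
In code, question-driven NER could look roughly like the sketch below. As before, `reader` is a hypothetical callable standing in for the MRC model, and the entity questions are made-up examples.

```python
def extract_entities(reader, utterance, entity_questions, min_score=0.5):
    """Zero-shot NER: one question per entity type, answered from the utterance.

    reader: callable (question, context) -> (answer_text, score), a stand-in for
            the MRC model. Returns {entity_type: (text, start, end)}.
    """
    entities = {}
    for entity_type, question in entity_questions.items():
        answer, score = reader(question, utterance)
        if not answer or score < min_score:
            continue  # treat as "no answer": the entity is absent
        start = utterance.find(answer)
        if start >= 0:
            entities[entity_type] = (answer, start, start + len(answer))
    return entities
```

The loop makes the cost visible: each entity type requires its own model pass over the utterance, which is why the approach scales poorly to many entity types.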

For cameras and similar products, Q&A and reviews are not the only sources of useful information. Nobody reads user manuals anymore: when facing an issue, we tend to google the manual and find the answer there. So user manuals can also be put into a question answering system, together with a conversational system, to provide user-friendly access to user manual data.

Conclusion

In this blog post, we took a dive into question answering systems and, in particular, machine reading comprehension models based on the Transformer architecture, such as BERT. Nowadays, Transformers allow us to build advanced NLP-powered applications and achieve good results with fewer labeled examples. And even though many of these models are huge, modern GPUs and techniques like knowledge distillation allow us to use them in production.

It is worth saying that besides the MRC model, there is one more critical component: the one that selects relevant documents for MRC. Even though we didn’t touch on that topic in this blog post, document ranking is as crucial as the MRC model. No matter how good the reader model is, the system will not return good answers if you feed it irrelevant documents.

Besides the classic multi-stage (ranker + reader) approach to question answering systems, fully retrieval-based methods are a strong alternative that could provide competitive results in some cases and could be more suitable for high-load applications. We also successfully use such approaches to build semantic search.

We believe that question answering models could be a great addition to many information retrieval systems and benefit many areas where people work with domain-specific documents and need efficient tools to work with them. We are curious to see where these systems will show up next, and if you see such opportunities in your domain, we will be glad to discuss them with you.

Footnotes

- In the HuggingFace Model Hub you can find several versions of the same model type, but because of differences in experiment setup (hyperparameters, seed, selected checkpoints, etc.), these versions can perform inconsistently on out-of-domain data. We put the best results in the table, but other versions of ALBERT-xxlarge don’t perform as well on our dataset, even with the same or higher SQuAD 2.0 dev F1/EM. Note also that not all models uploaded to the HuggingFace Model Hub are trained with the HuggingFace library; some are trained with other libraries (e.g., FARM). Because of defects in specific versions of these libraries, a model trained with one library may not show the same results when run through the pipeline of another library. We faced this kind of issue with RoBERTa models.

- F1 is the harmonic mean of the precision and recall.

- F1_span is computed only using examples where an answer exists and ignoring the predictions of the "no answer" classification.

- Even if your LM has enough knowledge after pre-training, you still need to add task-specific layers on top of it and adapt them to the target task, and for that you still need enough labeled data. That is one of the obstacles to achieving even better sample efficiency during fine-tuning. Approaches used in models like T5 partially solve this problem by formulating all tasks in text-to-text form, so you pre-train and fine-tune your model in the same way, without task-specific layers. And models like T5 and GPT, trained on massive datasets, already show pretty good results in zero- and few-shot learning.

- We did not use Next Sentence Prediction (NSP), since several papers (including RoBERTa) have shown that it does not help. We also haven't tried to extend or replace the vocabulary of the pre-trained LM, although this usually helps. Even so, the additional pre-training helped in our case, which was a bit unexpected, and we don't think it will work for every task / domain.

- We used a single NVIDIA RTX 2080 Ti for all of our experiments. Gradient accumulation allowed us to work with larger models such as ALBERT-xxlarge and RoBERTa-large, and mixed precision (fp16) allowed us to train the models faster.
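
To make the F1 and F1_span footnotes above concrete, here is a simplified sketch of both metrics. It assumes whitespace tokenization and lowercasing only; the official SQuAD evaluation also strips punctuation and articles, which is omitted here. The function names and the convention that an empty ground truth marks an unanswerable question are assumptions of this sketch.

```python
from collections import Counter


def token_f1(prediction, truth):
    """Harmonic mean of token-level precision and recall (SQuAD-style overlap)."""
    pred_tokens = prediction.lower().split()
    true_tokens = truth.lower().split()
    if not pred_tokens or not true_tokens:
        # Both empty ("no answer" predicted correctly) counts as a match.
        return float(pred_tokens == true_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(true_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(true_tokens)
    return 2 * precision * recall / (precision + recall)


def f1_span(examples):
    """Average token F1 over answerable examples only.

    examples: iterable of (best_span_prediction, truth); truth == "" marks an
    unanswerable question, which is skipped.
    """
    scores = [token_f1(pred, truth) for pred, truth in examples if truth]
    return sum(scores) / len(scores) if scores else 0.0
```

Passing the model's best span, regardless of its "no answer" decision, is what separates F1_span from the overall F1.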

Resources

Transfer Learning and Transformers:

- The State of Transfer Learning in NLP (Ruder)

- Transfer Learning in Natural Language Processing (Ruder, Wolf)

- A Primer in BERTology: What we know about how BERT works

- oLMpics -- On what Language Model Pre-training Captures

Question Answering overview:

- Question Answering Architectures (CS224N Stanford lecture)

- A Survey on Machine Reading Comprehension: Tasks, Evaluation Metrics, and Benchmark Datasets

Analyzing QA datasets and what Transformers learn from them:

- Learning and Evaluating General Linguistic Intelligence (SQuAD, sample-efficiency, generalization)

- DeepLIFTing BERT’s Attention in Question Answering

- How Does BERT Answer Questions? A Layer-Wise Analysis of Transformer Representations

- What do Models Learn from Question Answering Datasets?

- Question and Answer Test-Train Overlap in Open-Domain Question Answering Datasets

- A Qualitative Comparison of CoQA, SQuAD 2.0 and QuAC

Pruning, distillation, quantization:

- Overview of BERT compression techniques (Rasa)

- A Survey of Methods for Model Compression in NLP

- Knowledge distillation and DistilBERT

- Well-Read Students Learn Better: On the Importance of Pre-training Compact Models

Speeding up BERT re-ranking models:

- Efficient Document Re-Ranking for Transformers by Precomputing Term Representations

- EARL: Speedup Transformer-based Rankers with Pre-computed Representation

Open Domain QA:

- Recent approaches to document indexing for open domain QA (HuggingFace)

- Real-Time Open-Domain Question Answering with Dense-Sparse Phrase Index

- Contextualized Sparse Representations for Real-Time Open-Domain Question Answering

- REALM: Retrieval-Augmented Language Model Pre-Training

Frameworks to train MRC models: