Semantic vector search: the new frontier in product discovery

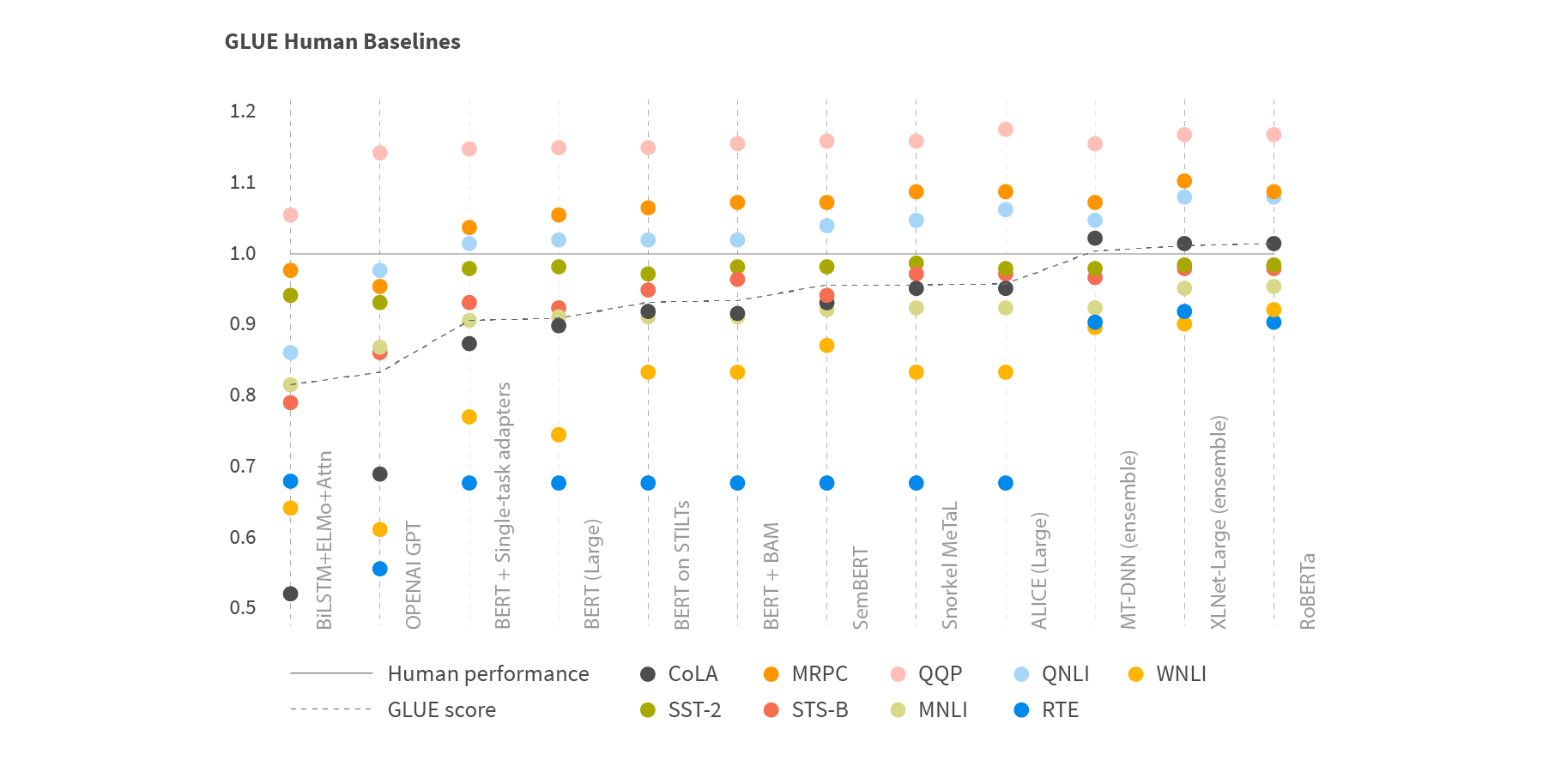

Deep learning-powered natural language processing is growing by leaps and bounds. During the past year, the latest NLP models have exceeded the human performance baseline in some specific language understanding tasks.

Source: Facebook AI

State-of-the-art NLP models allow AI-enabled systems to represent and interpret human language better than ever before. At the same time, major retailers and cloud providers accumulate vast volumes of customer behavior data, which enable them to train those models at an unprecedented scale.

These two key factors are fueling the current wave of innovation in the field of retail search and product discovery. The new generation of ML-based search engines is rapidly maturing, achieving relevant, timely and personalized search results that closely match customer buying intent.

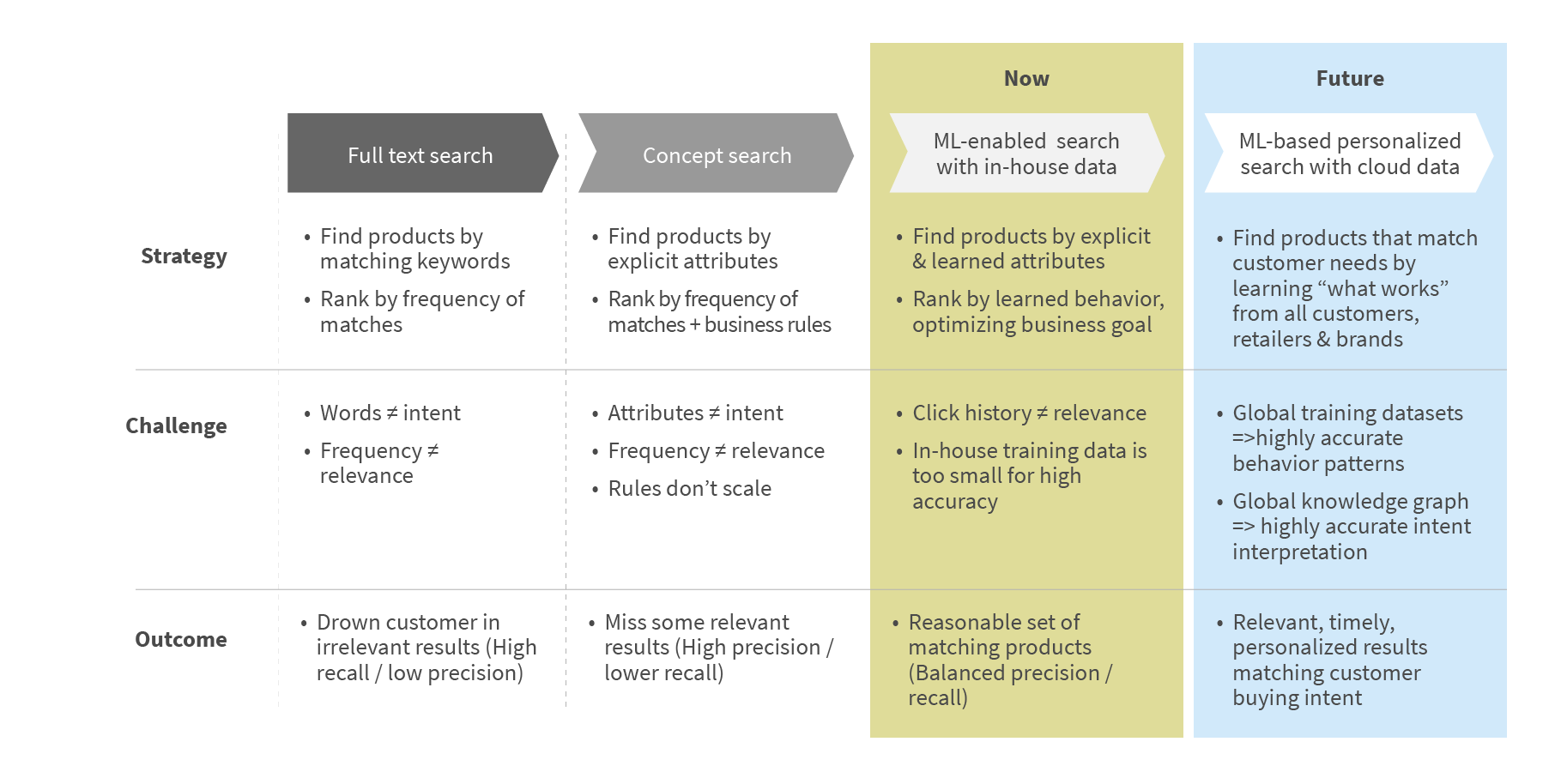

We can visualize the evolution of retail search engines in three stages. They started from general-purpose full-text search algorithms, moved to a more advanced understanding of queries and the domain using concept search (a.k.a. semantic query parsing) and knowledge graphs, and have reached the current state of the industry, where machine learning helps traditional search engines produce more relevant results.

In this post, we will discuss the next major step in the evolution of retail search: the arrival of deep learning-based semantic vector search engines with cloud-scale data.

Search is a solved problem. Or is it?

Search engines have been with us for several decades as an integral part of our digital life. We casually search over billions of web pages, millions of products in online catalogs and hundreds of thousands of vacation rental listings without thinking twice about it. So, is search a solved problem?

Electrical engineers often joke that they have only two problems: contact where it shouldn’t be and non-contact where it should. Likewise, search engineers always deal with two phenomena of the human language: polysemy and synonymy. Polysemy means that the same word may represent different concepts, like the word “table” in “table game” vs “accent table”. Synonymy means that the same concept can be represented in many ways by different words, like “Big Apple”, “NYC” and “New York” which all refer to the same city.

Humans are very good at cutting through polysemy and synonymy based on the conversational context and background knowledge, which helps them deal with the intrinsic ambiguity of words. Customers now expect the same from search engines. They want their online search service to be able to “understand” the queries, i.e. return results based on the meaning, or semantics, of the query rather than a coincidental word match.

Historically, search engines have approached this problem from many different angles: they have used sophisticated semantic query parsing techniques, constructed and employed vast knowledge graphs capturing the meaning and relationships of words, and trained machine learning models to resolve ambiguity.

However, those methods are largely helpless when it comes to out-of-vocabulary searches, where customers use words which cannot be found in the available data. They also struggle with symptom searches, where a customer describes a problem rather than the product which solves it, such as “headache” or “leaky roof”. Thematic searches, where customers want to be inspired by some style, topic or theme, such as “madonna dress”, also represent a significant challenge.

To address such queries we need to go deeper into the meaning of both data and queries in a way directly accessible and understandable to computers. This is a central idea behind semantic vector search, made possible by deep learning.

Representing your data AND your queries as semantic vectors

Computers can only deal with numbers, so we need a way to represent our data numerically. Obviously, a single number cannot represent all the complexity of a query or a retail product, so we need a whole lot of them. An ordered list of numbers, such as [23, -4.13, 0, -12], is called a vector, and the length of this list is called the dimension of the vector.

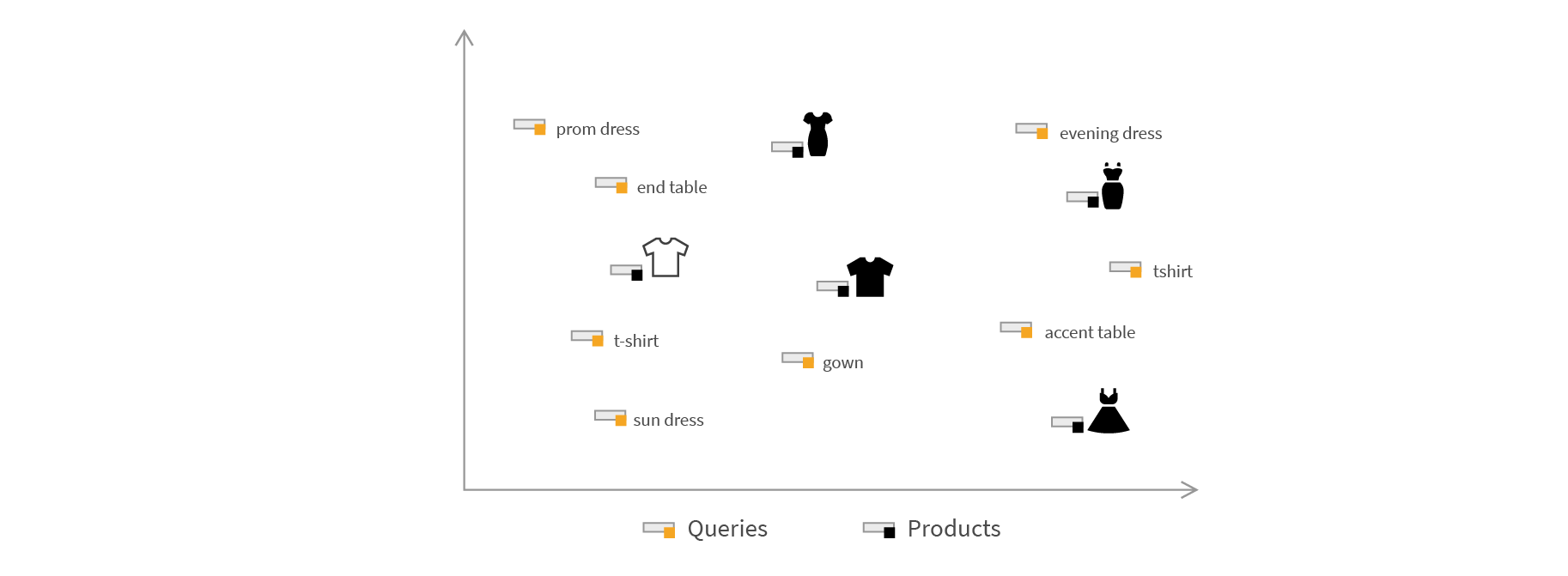

Imagine that we decided to represent all our queries and products as 2-dimensional vectors, such as [1,-3], creating a vector space which we can plot like this:

Such representation is easily accessible by a computer, yet so far it is not very useful for search, as vectors representing our queries and products are scattered randomly all over the space and their positions don’t mean anything.

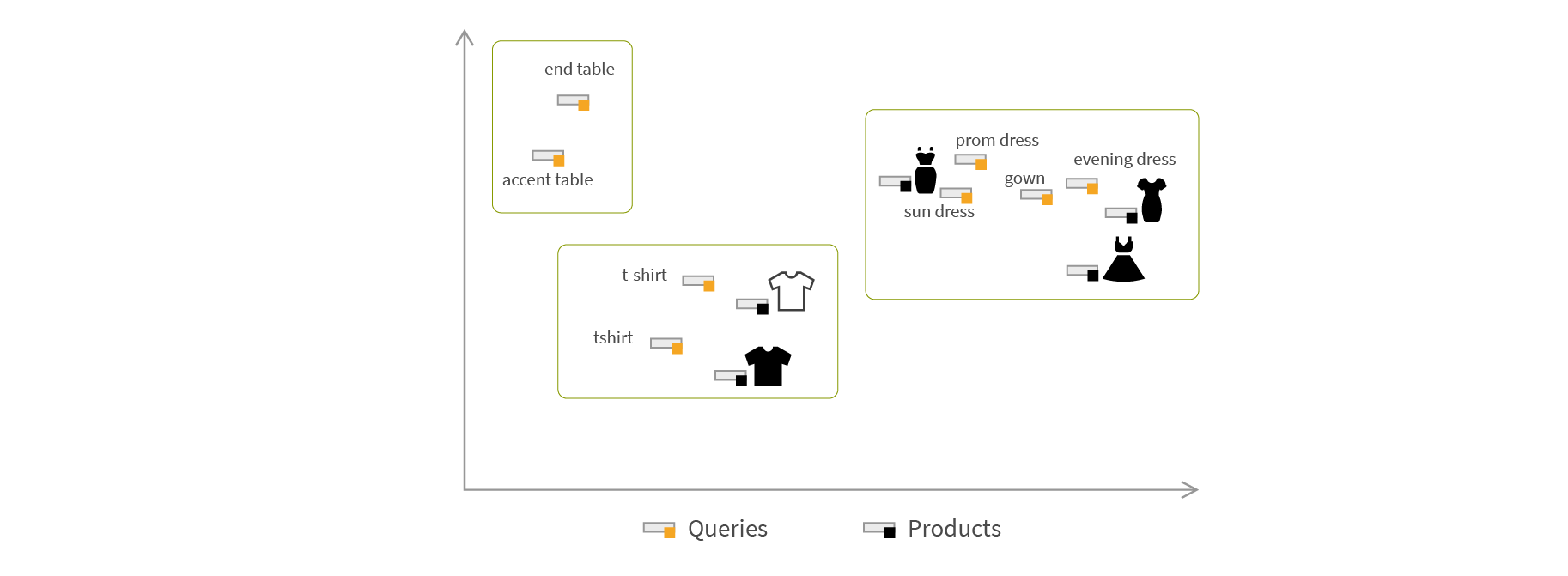

What we really want to do is to transform our data vectors to semantic vectors. With semantic vectors, products and queries which are similar in terms of meaning are represented by vectors which are similar in terms of distance:

Now we have clear clusters of queries and products representing the concepts of dresses, t-shirts and tables. Distances between points represent levels of similarity between the corresponding concepts. As you can see, t-shirts are much closer to dresses than to tables. We have thus built a semantic vector space for our data.

With a semantic vector space, the complex and vague problem of searching for relevant products by text queries is transformed into a well-stated problem of searching for the closest vectors in a vector space, something computers are very good at.
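To make the idea of "searching for the closest vectors" concrete, here is a minimal sketch in Python. The product names and 2-D coordinates are invented for illustration, and we assume plain Euclidean distance as the measure of closeness; a real system would use learned vectors with many more dimensions.

```python
import math

# Hypothetical 2-D semantic vectors; the coordinates are made up for illustration.
products = {
    "black evening dress": (4.0, 5.0),
    "red summer dress": (4.5, 4.2),
    "white t-shirt": (2.5, 3.5),
    "accent table": (-4.0, -3.0),
}

def nearest(query_vec, items, k=2):
    """Return the names of the k items whose vectors are closest to query_vec."""
    ranked = sorted(items, key=lambda name: math.dist(query_vec, items[name]))
    return ranked[:k]

# Pretend this is the semantic vector of the query "black dress".
query = (4.2, 4.8)
print(nearest(query, products))
```

Because the two dresses sit near the query in this toy space, they are returned first, while the table is ranked last.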

In reality, of course, our products and queries will be represented not as 2D vectors, but as vectors with hundreds of dimensions representing all the complexity necessary to capture the meaning of queries and products. But don’t worry, math works the same way for high-dimensional vectors, and computers have little trouble with them.

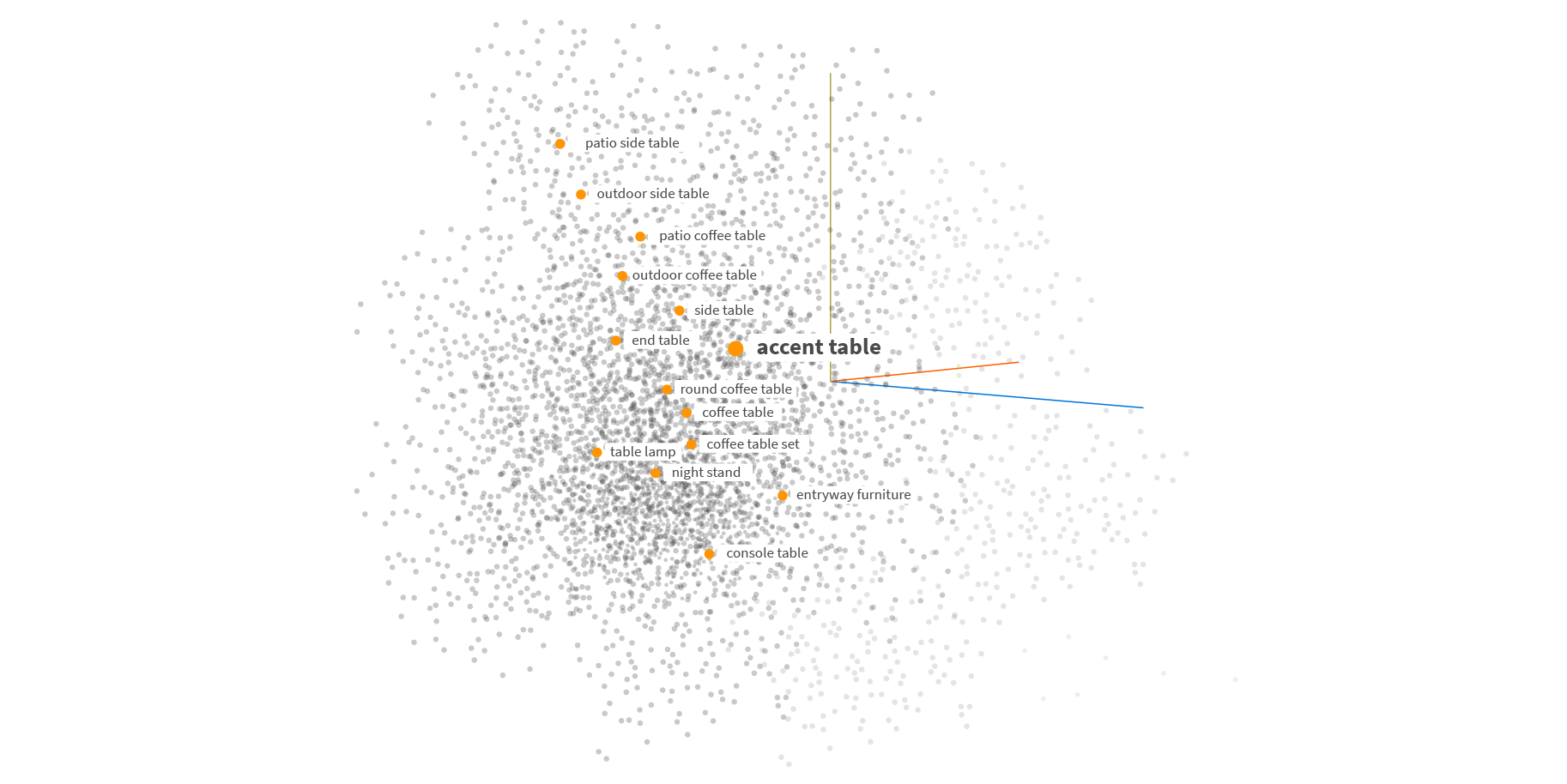

The following figure shows a real-life, 128-dimensional vector space projected to 2 dimensions with 20 results closest to the “accent table” query presented in this space.

As you can see, in our vector space the queries with similar meaning are clustered together.

So far, we haven’t talked about the most crucial step in the whole story: how do we achieve this “clustering by meaning”? In other words, how do we find a semantic vector representation for our products and queries? For that, we need to harness the power of natural language processing and customer behavior data analysis using deep learning.

Training a deep learning model to produce semantic vectors

Natural language processing capabilities made a huge leap forward with the introduction of word and sentence neural encoders, which represent words and sentences as semantic vectors. Both early models like word2vec or GloVe, and especially the latest ones like BERT and ALBERT, are a tremendous help for anybody dealing with deep learning analysis of text data. Those models are trained by analysing huge volumes of text, such as Wikipedia, trying to guess a word from its context, i.e. the surrounding words.

Word and sentence vectors produced by NLP models can be used to encode queries, product attributes and descriptions in a way that somewhat captures their meaning. However, to achieve our goal of building a semantic query/product space useful for search we need to train our own similarity model which will map our products and queries to semantic vectors.

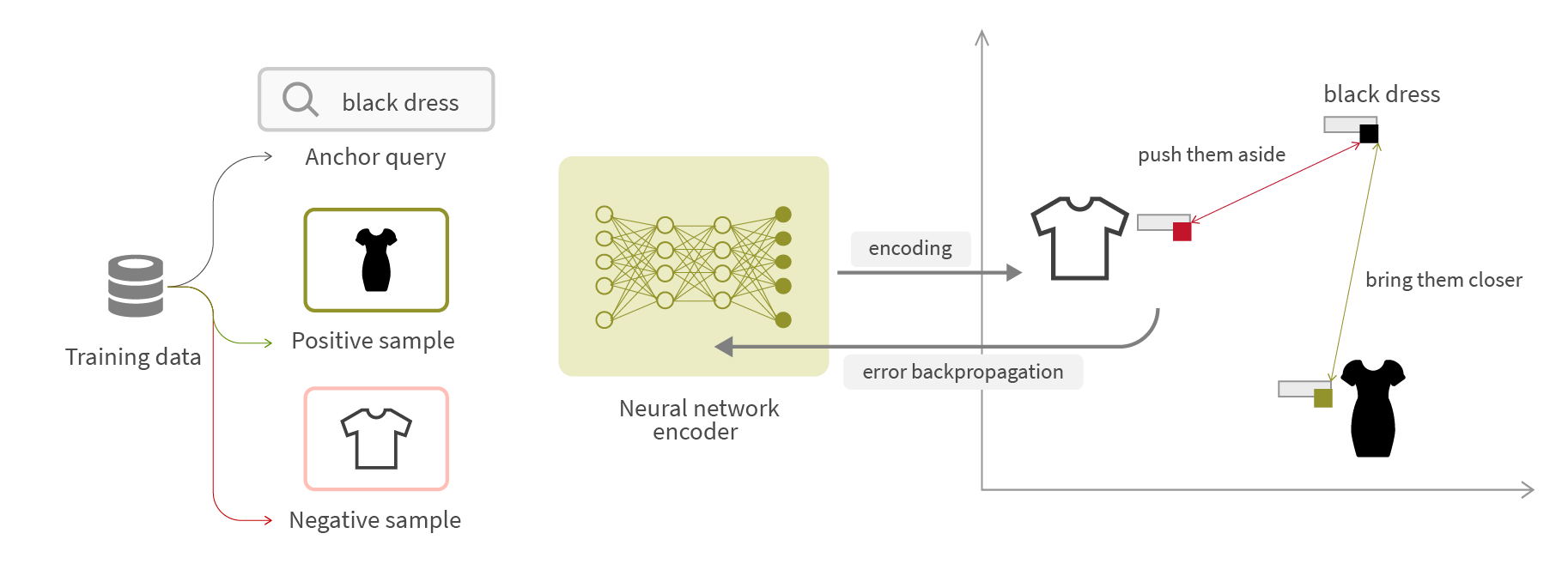

One of the possible approaches to train a similarity model is to employ the triplet loss method:

In this approach, we use our training data to form triplets. Each triplet contains one query, called the anchor, one product which we believe is relevant to that query, called the positive sample, and another product which we believe is irrelevant, called the negative sample.

We encode both the query and the products into vectors and calculate the distances between them. We then try to bring the query and the positive sample as close to each other as possible, while pushing the query and the negative sample as far apart as possible. We calculate our error accordingly and backpropagate it through the neural network, slightly changing its internal weights.
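The error calculation described above can be sketched as follows. This is one common formulation of triplet loss, not necessarily the exact one used in any particular system: we assume Euclidean distance and a hinge with a `margin` parameter, neither of which is specified in this post, and the vectors are made up.

```python
import math

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss on Euclidean distances.

    The loss is zero once the positive is closer to the anchor than
    the negative by at least `margin` (an assumed hyperparameter).
    """
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)

query = (0.0, 0.0)  # anchor: vector of the query "black dress"

# Badly placed vectors: the relevant product is further away than the irrelevant one.
loss_bad = triplet_loss(query, positive=(3.0, 4.0), negative=(0.0, 2.0))

# Well placed vectors: the relevant product is close, the irrelevant one is far.
loss_good = triplet_loss(query, positive=(0.0, 1.0), negative=(6.0, 8.0))

print(loss_bad, loss_good)
```

A positive loss signals that the encoder still needs to move the vectors; a zero loss means this particular triplet is already arranged the way we want.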

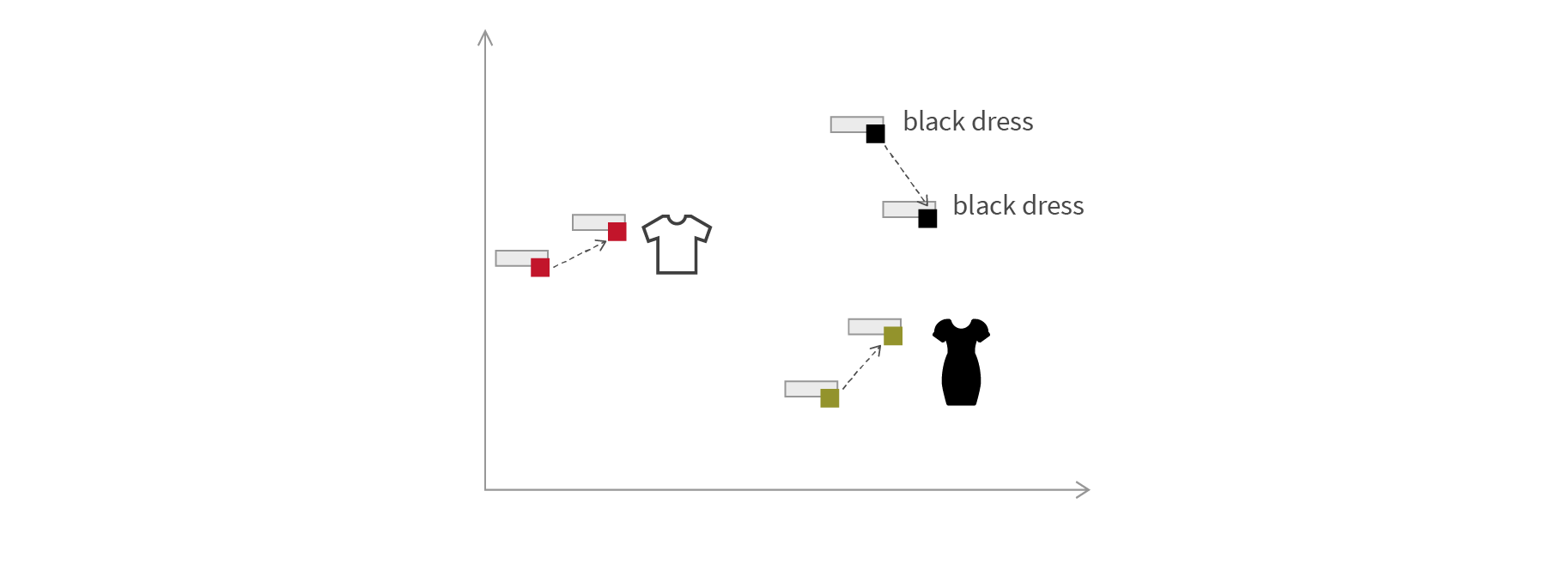

This means that with updated weights our neural network will encode the same query and our products by moving vectors in such a way that a “black dress” query will be slightly closer to the actual black dress and slightly further from the white t-shirt, thus reducing the error:

Repeating this step several million times with different triplets of queries and products, we will have trained our network to find an optimal vector for every product and query, even for those which are not available in the training set. During this process, vectors representing similar concepts will cluster by meaning, thus building a semantic vector space for us.
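To illustrate how repeated updates move the vectors, here is a deliberately simplified sketch. Instead of a neural network, it adjusts three hypothetical 2-D vectors directly with plain gradient descent on a squared-distance triplet loss. The starting coordinates, learning rate and margin are all assumptions chosen for illustration; a real model would update encoder weights through backpropagation rather than the vectors themselves.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train_step(emb, anchor, pos, neg, lr=0.05, margin=1.0):
    """One gradient step on a squared-distance triplet loss. Returns the loss."""
    a, p, n = emb[anchor], emb[pos], emb[neg]
    loss = sq_dist(a, p) - sq_dist(a, n) + margin
    if loss <= 0:
        return 0.0  # triplet already satisfied, nothing to learn from it
    # Gradients of ||a-p||^2 - ||a-n||^2 with respect to each vector.
    grad_a = [2 * (ni - pi) for pi, ni in zip(p, n)]
    grad_p = [2 * (pi - ai) for ai, pi in zip(a, p)]
    grad_n = [2 * (ai - ni) for ai, ni in zip(a, n)]
    emb[anchor] = [x - lr * g for x, g in zip(a, grad_a)]
    emb[pos] = [x - lr * g for x, g in zip(p, grad_p)]
    emb[neg] = [x - lr * g for x, g in zip(n, grad_n)]
    return loss

# Hypothetical starting vectors: the irrelevant product begins closer to the query.
emb = {
    "query: black dress": [0.0, 0.0],
    "product: black dress": [1.0, 1.0],
    "product: white t-shirt": [0.2, 0.1],
}
Q, P, N = "query: black dress", "product: black dress", "product: white t-shirt"

before_pos, before_neg = sq_dist(emb[Q], emb[P]), sq_dist(emb[Q], emb[N])
for _ in range(200):
    train_step(emb, Q, P, N)
after_pos, after_neg = sq_dist(emb[Q], emb[P]), sq_dist(emb[Q], emb[N])

print("query-to-dress distance:  ", before_pos, "->", after_pos)
print("query-to-t-shirt distance:", before_neg, "->", after_neg)
```

In this toy run the black dress ends up closer to the query and the white t-shirt further away, which is the behaviour the training procedure aims for at scale.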

Training deep learning similarity models requires a lot of data on what is relevant and what is not for particular queries. And getting this volume of high quality training data is not trivial.

Obtaining the training data

For a retailer, the only practical way to get training data for the relevance model is to crowdsource it from customers. Many retailers are already capturing clickstream events and storing this information in their data warehouse or data lake. This customer engagement data for search and browse sessions is a trove of knowledge from which your similarity model can learn the meaning of out-of-vocabulary words or find products relevant for symptom searches.

All this happens because the model observes which products customers interact with (viewing, adding to the basket and especially purchasing) after using particular terms, phrases and concepts in their queries.
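As a rough sketch of how engagement data could be turned into training triplets, consider the example below. The event schema, the event names and the rule for choosing negatives (products shown for a query but never engaged with) are all hypothetical assumptions made for illustration; real pipelines typically need more careful sampling and noise filtering.

```python
from collections import defaultdict

# Hypothetical clickstream rows: (query, product_id, event).
events = [
    ("black dress", "p1", "impression"),
    ("black dress", "p1", "purchase"),
    ("black dress", "p2", "impression"),
    ("black dress", "p3", "impression"),
    ("black dress", "p3", "view"),
    ("leaky roof", "p9", "impression"),
    ("leaky roof", "p9", "add_to_basket"),
    ("leaky roof", "p2", "impression"),
]

# Assumed set of events that count as a sign of relevance.
ENGAGEMENT = {"view", "add_to_basket", "purchase"}

def build_triplets(rows):
    """Form (anchor query, positive product, negative product) triplets."""
    shown = defaultdict(set)    # products displayed for each query
    engaged = defaultdict(set)  # products customers interacted with
    for query, product_id, event in rows:
        if event == "impression":
            shown[query].add(product_id)
        elif event in ENGAGEMENT:
            engaged[query].add(product_id)

    triplets = []
    for query in shown:
        positives = sorted(engaged[query])
        # Assumption: shown-but-ignored products serve as negatives.
        negatives = sorted(shown[query] - engaged[query])
        triplets.extend((query, pos, neg) for pos in positives for neg in negatives)
    return triplets

triplets = build_triplets(events)
for t in triplets:
    print(t)
```

Note how the symptom query "leaky roof" yields a usable triplet even though no product in this toy catalog needs to contain those words.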

Of course, technology giants and cloud providers running online marketplaces, advertisement platforms and web-scale search engines naturally have access to vast volumes of training data and thus can train their deep learning models on an unprecedented scale. This, in addition to other assets, such as state-of-the-art NLP models and vast knowledge graphs, gives them an advantage and a long-term promise of a leadership position in semantic vector search.

Searching with semantic vectors

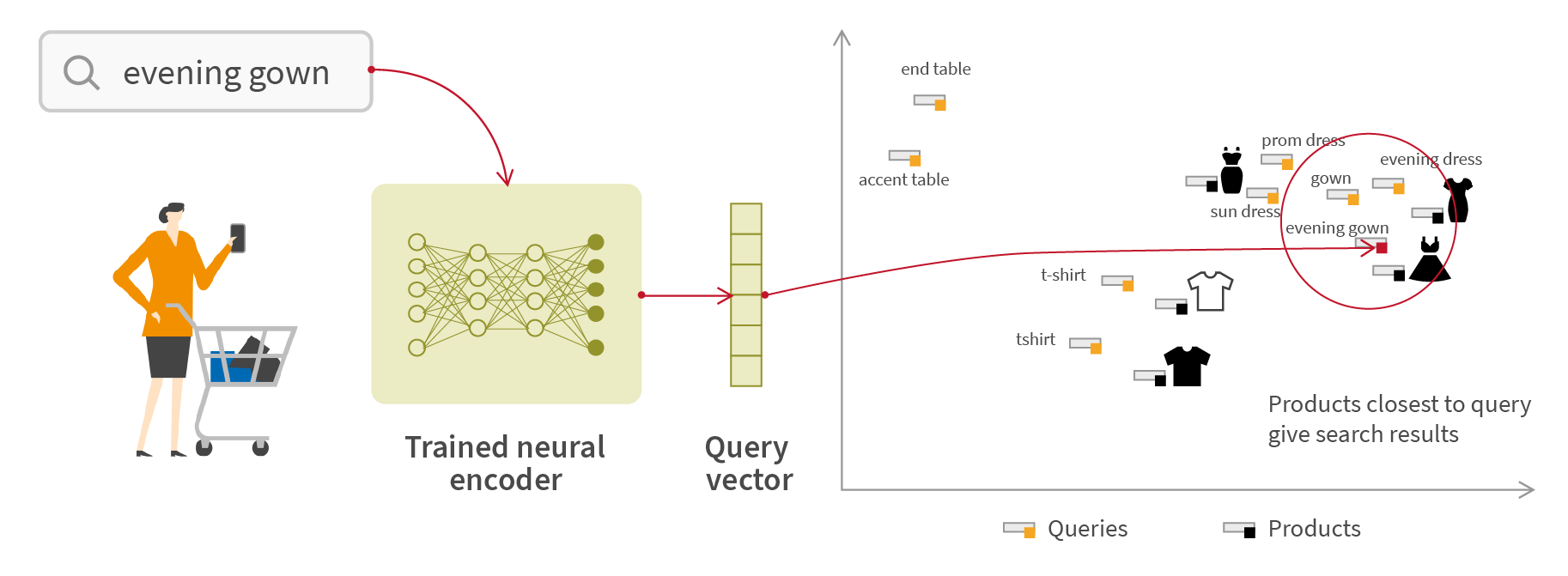

Now that we have trained a similarity model, we are ready to build a semantic vector search engine. First, we use our trained neural encoder to encode all products in our catalog as semantic vectors. Then, we create a vector index - a data structure designed to quickly find the closest vectors in a multi-dimensional vector space.

When a customer is searching, the query is converted by the same neural encoder into its semantic vector representation - a query vector. We then use the vector index to find all the product vectors closest to the query vector in order to get our search results.
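The indexing and query flow described above can be sketched as follows. Everything here is a stand-in: `encode` is a hypothetical lookup table playing the role of the trained neural encoder, the 3-D vectors are invented, and `BruteForceVectorIndex` simply scans every vector. A production vector index would typically rely on an approximate nearest-neighbour structure instead of a linear scan.

```python
import heapq
import math

# Hypothetical stand-in for the trained encoder: text -> made-up 3-D vector.
TOY_VECTORS = {
    "black evening dress": (0.9, 0.8, 0.1),
    "white t-shirt": (0.6, 0.3, 0.1),
    "accent table": (0.0, 0.1, 0.9),
    "coffee table": (0.1, 0.0, 0.8),
    "side table": (0.0, 0.1, 0.8),  # a customer query, not a catalog item
}

def encode(text):
    """Pretend neural encoder; a real one would run a trained model."""
    return TOY_VECTORS[text]

class BruteForceVectorIndex:
    """Simplest possible vector index: compare the query to every vector."""

    def __init__(self):
        self._items = []  # list of (item_id, vector) pairs

    def add(self, item_id, vector):
        self._items.append((item_id, tuple(vector)))

    def search(self, query_vector, k=3):
        """Return the ids of the k vectors closest to query_vector."""
        scored = ((math.dist(query_vector, vec), item_id)
                  for item_id, vec in self._items)
        return [item_id for _, item_id in heapq.nsmallest(k, scored)]

# Index time: encode every catalog product once.
catalog = ["black evening dress", "white t-shirt", "accent table", "coffee table"]
index = BruteForceVectorIndex()
for name in catalog:
    index.add(name, encode(name))

# Query time: encode the query with the same encoder, then look up neighbours.
results = index.search(encode("side table"), k=2)
print(results)
```

The key design point is that products and queries go through the same encoder, so their vectors live in the same space and can be compared directly.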

It is worth mentioning that the structure of a semantic vector space, in addition to the main use case of searching products by query, provides us with two additional features “for free”: we can now search for queries by query, thus creating “did you mean?” functionality, and for products by product, enabling “similar products” recommendations. Pretty neat, isn’t it?

Conclusion

In this blog post, we described semantic vector search, which we believe to be the next major step in the never-ending quest to provide relevant, timely and personalized search results which match customers’ shopping intent.

Semantic vector technology is emerging from the data science labs, and it has a lot of as yet untapped potential in the recommendations and personalization space, which we will address in our upcoming posts.

We believe that everybody interested in the future of retail search should take a closer look at both the technological and the commercial benefits that semantic vector search promises to bring.

Happy searching (now with vectors)!
