What is In-Stream Processing?

In-Stream Processing is a technology that scans huge volumes of data coming from sensors, credit card swipes, clickstreams, and other inputs, and finds actionable insights nearly instantaneously. For example, it can detect a single fraudulent transaction in a stream containing millions of legitimate purchases, act as a recommendation engine that decides which ad or promotion to display to a particular customer while that customer is still shopping, or compute the optimal price for a car service ride in only a few seconds.

Why is it called In-Stream?

The term “In-Stream Processing” means that a) the data is coming into the processing engine as a continuous “stream” of events produced by some outside system or systems, and b) the processing engine works so fast that all decisions are made without stopping the data stream and storing the information first.

You can think of an In-Stream Processing engine as a “cyber plant” for event processing. Imagine that events are coming in at high speeds to the front docks of the cyber plant where they are captured, sorted into queues, and sent on to the assembly lines for processing. Inside, on the cyber conveyor, specialized software robots perform analytical computations and transformations that filter, match, count, aggregate, and reason about the events as they are passed down the line. Whenever something interesting is discovered or computed — such as unmasking a fraud or computing a dynamic price — the notification is sent immediately to an external business system to do something about it. At the end of the conveyor, processed events are shipped to a warehouse where other systems can access them for other forms of processing.
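The conveyor described above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular engine works: the event layout `(timestamp, card_id, amount)`, the function name `detect_bursts`, and the "more than three swipes per card within 60 seconds" rule are all assumptions made up for the example. The point is that each event is examined as it passes by, alerts are raised immediately, and every event is still handed on to the "warehouse" at the end.

```python
from collections import defaultdict, deque


def detect_bursts(events, window_seconds=60, max_swipes=3):
    """Yield (event, alert) pairs, one per incoming event.

    Hypothetical rule: an event raises an alert when its card has been
    swiped more than `max_swipes` times within the last `window_seconds`.
    """
    recent = defaultdict(deque)  # card_id -> timestamps still inside the window
    for ts, card_id, amount in events:
        timestamps = recent[card_id]
        timestamps.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while timestamps and ts - timestamps[0] >= window_seconds:
            timestamps.popleft()
        yield (ts, card_id, amount), len(timestamps) > max_swipes


# A tiny in-memory "stream"; a real source would be a queue or socket.
events = [
    (0, "A", 10.0),
    (5, "B", 20.0),
    (10, "A", 5.0),
    (20, "A", 7.0),
    (30, "A", 9.0),   # fourth swipe of card A within 60 seconds
    (200, "A", 3.0),  # window has expired, count starts over
]

alerts = []     # stands in for the external business system
warehouse = []  # stands in for downstream storage

for event, alert in detect_bursts(events):
    if alert:
        alerts.append(event)  # notify immediately
    warehouse.append(event)   # ship every processed event onward

print(alerts)  # [(30, 'A', 9.0)]
```

Because `detect_bursts` is a generator, it keeps only the small per-card window in memory and never needs the whole stream stored first.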

Alternative names

Since many applications of In-Stream Processing are analytical in nature, some call these systems In-Stream Analytics. Alternatively, people sometimes drop the prefix “in-” and simply call it Stream Analytics or Stream Processing. Finally, it is worth mentioning that In-Stream Processing technologies fall into a wide class of approaches for dealing with large volumes of events, called Complex Event Processing, or CEP. Because In-Stream Processing is fast and aims to analyze data nearly instantaneously, it is sometimes described as Fast Data, a term that’s growing in use and popularity.

Big Data landscape

In-Stream Processing is only one rather specialized type of processing in a broader landscape of technologies, processes, tools and applications that are commonly referred to as Big Data.

In-Stream Processing typically happens on the front end of data acquisition, and serves a dual purpose:

- Discovering business insights that can be acted upon immediately by customer-facing online systems or internal service support teams, and

- Pre-processing of event data to make it more convenient to store for further analysis and processing by batch systems. Technically, this is called data ingestion.

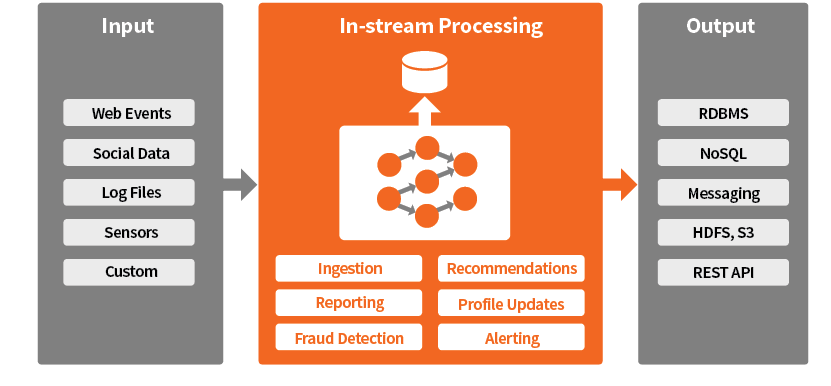

A Typical Relationship Between In-Stream and the Rest of the Big Data Infrastructure


In-Stream Processing cannot exist by itself; it is integrated with the rest of the Big Data infrastructure to deliver real-time processing capabilities and can be added to an existing Big Data infrastructure as a new Big Data Service.

Speeds and feeds

Typical In-Stream Processing happily handles workloads such as these:

- The volume of streamed data is between 1,000 and 100,000 events per second

- Processing latency is between 2 and 60 seconds per event

- The total time lapse between the event and the business action is between 1 and 60 minutes

For fewer than 1,000 events per second, In-Stream Processing might be overkill; a modern microservice architecture can do the job. For a sustained rate of more than 100,000 events per second, In-Stream Processing will most likely still work, but it will require a customized design to accommodate specific requirements and infrastructure choices.

For applications with latency requirements under 2 seconds, In-Stream Processing will not be a viable option because the data is handed off too many times between the source system, the In-Stream Processing engine, and the application that actually acts on the insight.

For applications that can wait 60 minutes or more, batch analytics systems like Hadoop probably offer a cheaper, simpler, and more powerful solution than In-Stream Processing.
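The rules of thumb in this section can be written down as a small decision helper. This is a rough sketch, not a sizing tool: the function name `suggest_architecture` and its return strings are invented for illustration, and it simplifies things by using a single `max_wait_seconds` value for how long the application can wait between the event and the action.

```python
def suggest_architecture(events_per_second, max_wait_seconds):
    """Map a workload onto the rough guidance from this section.

    Thresholds come from the text; the single `max_wait_seconds`
    parameter is a simplifying assumption of this sketch.
    """
    if max_wait_seconds < 2:
        # Too many hand-offs between source, engine, and acting application.
        return "not a fit for in-stream processing"
    if max_wait_seconds >= 60 * 60:
        # The application can wait an hour or more.
        return "batch analytics"
    if events_per_second < 1_000:
        return "microservices"
    if events_per_second > 100_000:
        return "in-stream processing (customized design)"
    return "in-stream processing"


print(suggest_architecture(50_000, 30))     # in-stream processing
print(suggest_architecture(500, 30))        # microservices
print(suggest_architecture(50_000, 7_200))  # batch analytics
```

The latency checks come first because, per the guidance above, they rule out In-Stream Processing regardless of event volume.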

Typical use cases

In-Stream Processing is rapidly gaining popularity and finding applications in various business domains. In future posts we’ll describe the anatomy of specific use cases in detail to illustrate how In-Stream Processing works. For now, here is a short list of well-known, proven applications of In-Stream Processing:

- Clickstream analytics can act as a recommendation engine providing actionable insights used to personalize offers, coupons and discounts, customize search results, and guide targeted advertisements — all of which help retailers enhance the online shopping experience, increase sales, and improve conversion rates.

- Preventive maintenance allows equipment manufacturers and service providers to monitor quality of service, detect problems early, notify support teams, and prevent outages.

- Fraud detection alerts banks and service providers of suspected frauds in time to stop bogus transactions and quickly notify affected accounts.

- Emotions analytics can detect an unhappy customer and help customer service augment the response to prevent escalations before the customer’s unhappiness boils over into anger.

- A dynamic pricing engine determines the price of a product on the fly based on factors such as current customer demand, product availability, and competitive prices in the area.

While business domains are quite diverse, their usage patterns are actually very similar and come down to:

1. feeding events to the stream processing engine;

2. implementing processing logic; and

3. delivering results to the appropriate output systems, which act on the insights developed by the processing engine.

Common Usage Pattern for In-Stream Analytics
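To make the three-step pattern concrete, here is a minimal sketch that wires a source, a piece of processing logic, and output sinks together. Everything in it is hypothetical: `run_pipeline`, the `surge_price` rule (price scaled by the ratio of ride requests to available drivers, capped at 3x), and the event fields are invented for the example and stand in for whatever a real engine and business rule would provide.

```python
def run_pipeline(source, logic, sinks):
    """Step 1: read events; step 2: apply logic; step 3: deliver results."""
    for event in source:
        result = logic(event)
        if result is not None:  # logic may decide there is nothing to report
            for sink in sinks:
                sink(result)


def surge_price(event, base_price=10.0):
    """Hypothetical dynamic-pricing rule, used only for illustration."""
    ratio = event["requests"] / max(event["drivers"], 1)
    multiplier = min(max(ratio, 1.0), 3.0)  # never below 1x, never above 3x
    return {"zone": event["zone"], "price": round(base_price * multiplier, 2)}


# In-memory stand-ins for a real event source and a real output system.
events = [
    {"zone": "north", "requests": 30, "drivers": 20},
    {"zone": "south", "requests": 5, "drivers": 10},
    {"zone": "east", "requests": 80, "drivers": 10},
]
delivered = []

run_pipeline(iter(events), surge_price, [delivered.append])

print([r["price"] for r in delivered])  # [15.0, 10.0, 30.0]
```

Swapping `surge_price` for a fraud rule or a recommendation function changes the business domain without touching the source or the sinks, which is the similarity the list above points to.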

As In-Stream Processing technology matures and more organizations invest in digital transformation, new applications of stream analytics are being identified and implemented across a wide spectrum of industries.

References

Sergey Tryuber, Anton Ovchinnikov, Victoria Livschitz