Why e-commerce search engines must be aware of inventory

In online commerce, catalog navigation functionality is one of the key aspects of the overall user experience. Customers spend the majority of their time on the site discovering and selecting products and making purchase decisions. The mission of quality search and browse functionality is to provide the customer with the smoothest and shortest path to the most relevant products. Removing frustration from the product discovery and selection experience is a big part of this mission.

In this post, we will talk about out-of-stock items in search results. Just imagine the frustration of an online shopper who, after hours of shopping, finds the perfect dress only to discover on the product detail page, or even worse, on the checkout page, that the desired item is out of stock. This problem is especially painful in fashion, luxury, jewelry, and apparel retail, as well as in online specialty marketplaces, where many products are unique, inventory is low, and customers spend significant time discovering products and making purchase decisions.

How modern e-commerce systems handle out-of-stock items

Some systems try to avoid the problem of making the search engine inventory-aware. After all, out-of-stock products can be hidden from search results as a post-processing step. This works, and is definitely an improvement over showing out-of-stock inventory as if it were available to buy. However, faceted navigation becomes clunky: because of post-filtering, the facet counts calculated by the search engine no longer match the product counts actually seen by the shopper. This becomes very visible when the customer applies a facet filter and gets a "zero results" page instead of the expected products, which is pretty frustrating.
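To make the facet mismatch concrete, here is a minimal Python sketch. The catalog, the field names, and the `search_engine` and `post_filter` functions are all hypothetical stand-ins, not part of any real engine; the point is only that facet counts are computed before the out-of-stock items are removed.

```python
from collections import Counter

# Hypothetical catalog: each product has a color facet and a stock flag.
CATALOG = [
    {"id": "p1", "color": "red", "in_stock": True},
    {"id": "p2", "color": "red", "in_stock": False},
    {"id": "p3", "color": "blue", "in_stock": False},
    {"id": "p4", "color": "green", "in_stock": True},
]


def search_engine(docs):
    """Inventory-unaware engine: returns every match plus facet counts."""
    facets = Counter(d["color"] for d in docs)
    return docs, facets


def post_filter(results):
    """Post-processing step applied outside the engine."""
    return [d for d in results if d["in_stock"]]


results, facets = search_engine(CATALOG)
visible = post_filter(results)

# Compare the facet counts shown to the shopper with the products
# that actually survive post-filtering for each facet value.
shown = Counter(d["color"] for d in visible)
mismatches = {c: (facets[c], shown[c]) for c in facets if facets[c] != shown[c]}
print(mismatches)
```

In this toy data the "blue" facet advertises one product, yet clicking it leads to an empty page, which is exactly the "zero results" situation described above.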

An alternative approach is to grey out the thumbnails of unavailable products in the UI. This solves the inconsistency between results and facets, but wastes precious real estate on the first page of results on unsellable items.

Neither of these approaches deals with the situation where a product becomes available again after being out of stock. Depending on the catalog reindexing cycle, the product's reappearance on search and browse result pages can be delayed for hours or even days.

To avoid these issues, e-commerce search system designers need to embed up-to-date information about inventory availability right into the search system. The system must then accurately and consistently filter out out-of-stock items and reveal restocked items in a timely fashion. Having this information available also unlocks a range of powerful features. Omnichannel customers can find products available in nearby brick-and-mortar stores. Products can be boosted (or buried) in search results based on underlying inventory levels. In-store customers can filter the enterprise catalog by what is available in the store where they are shopping right now.

Embedding availability information into the search index

Making a search engine support inventory availability is easy. After all, a search engine such as Solr excels at filtering. What is hard, though, is keeping inventory data up to date. From a technical perspective, displaying up-to-date inventory availability means supporting high-volume, high-frequency updates to the search index. This is bad news for most search engines, which are designed for read-mostly workloads and are optimized on the assumption that the index is immutable.

In this post, we focus on e-commerce search applications based on Solr, yet the same ideas should be applicable to other search engines and verticals concerned with embedding near real-time signals of various types in their search indexes.

Let’s consider some of the approaches implemented in the industry.

Brute force

The brute force approach would be to delete and reindex all documents affected by each availability change. This is typically easy to implement and is perfectly fine for small-scale, low-traffic web shops. For large-scale, high-traffic sites this approach becomes impractical. Brute-force indexing adds quite a bit of overhead, as product and SKU documents have to be repeatedly retrieved from a data source, processed by business logic, and transmitted to the search service, where they are reindexed as they pass through all analysis chains. This is obviously the heaviest and lowest-performing solution possible. Can we do better than that? We certainly hope so!

Partial document updates

The brute force approach can be improved upon by not constantly reloading and retransmitting product information. All we need to transmit is information about what actually changed inside the existing document: in this case, the SKU availability field. This is called a partial document update, and it is supported in both Solr and Elasticsearch. It seems like we are done now, doesn't it? Unfortunately, this is not so. Both Solr and Elasticsearch implement partial document updates through full reindexing under the hood. This means that they store the original document data in the index and, once a partial update request is received, restore the document from the original, patch it according to the partial update data, and reindex it as a new document, deleting the old version.
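For illustration, this is roughly what a partial update looks like from the client side. The sketch below only builds the JSON body in the shape of Solr's atomic update syntax (a field value wrapped in a `set` modifier); the field name `available` and the SKU IDs are assumptions made up for this example, and nothing is actually sent to a server.

```python
import json


def availability_update(sku_id, available):
    """Build one atomic-update command: only the changed field is sent."""
    # "available" is a hypothetical field name; "set" replaces its value.
    return {"id": sku_id, "available": {"set": available}}


def build_payload(changes):
    """Serialize a batch of (sku_id, available) changes to a JSON body."""
    return json.dumps([availability_update(sku, flag) for sku, flag in changes])


payload = build_payload([("sku-1001", False), ("sku-1002", True)])
print(payload)
```

The request is small, but as described above the engine still rebuilds and reindexes the whole document behind the scenes, so the savings are on the wire and in the data source rather than in the index.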

In general, this approach takes a high toll on search performance:

- Reindexing entire documents and passing them through all analysis chains consumes a lot of CPU cycles

- Index size is increased by storing the original document data

- New index segments are created and old documents are marked as deleted in existing segments, causing segment merge policies to kick in, use additional CPU, and build up I/O pressure

- Most importantly, searchers have to be reopened after a commit to make the changes visible. This wipes all accumulated filter caches, field caches, document caches, and query result caches

- In the case of a block index structure, whole blocks of documents have to be reindexed, significantly increasing overhead

This makes partial document updates suitable only for infrequent, low-volume updates, for example batches of a few thousand documents applied 10-15 minutes apart.

Query time join

The problem with the volume of updates can be addressed by moving inventory information into separate documents and performing a query time join in Solr or Elasticsearch (based on Lucene JoinUtil). This way, we will have product documents and inventory documents which can be updated separately.

This approach has the advantage that separate, lightweight inventory-only documents save a lot of reindexing cost, especially in the case of a block index structure.

A few things to keep in mind: in the case of distributed search, custom sharding is required to correctly join product and inventory documents. Also, a Lucene query time join is not a true join, as only the parent side of the relationship is available in the result, i.e. it is similar to an SQL inner select.

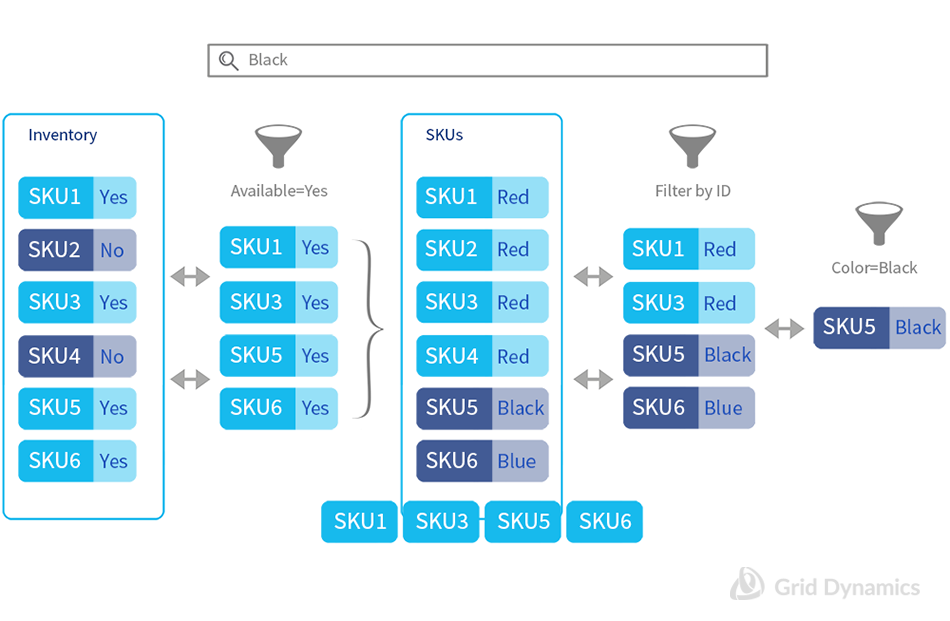

Still, this functionality is enough to support filtering by inventory availability. We can create a join query to select all available SKUs. How will it work under the hood? JoinUtil will first filter all inventory records by availability, then retrieve their SKU IDs, then filter SKUs by those IDs and intersect with the main query applied to the SKUs.
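In Solr, such a filter could be written with the join query parser, for example `{!join from=sku_id to=id}available:true`, where `sku_id`, `id`, and `available` are field names assumed for this example. The steps described above can be sketched in plain Python as follows. This is a toy model of the algorithm, not the actual JoinUtil code, and the data is made up.

```python
# Hypothetical inventory documents (the "from" side of the join).
INVENTORY = [
    {"sku_id": "s1", "available": True},
    {"sku_id": "s2", "available": False},
    {"sku_id": "s3", "available": True},
]

# Hypothetical SKU documents (the "to" side of the join).
SKUS = [
    {"id": "s1", "title": "red dress"},
    {"id": "s2", "title": "red scarf"},
    {"id": "s3", "title": "blue dress"},
]


def join_available(inventory, skus):
    # Steps 1-2: filter inventory records and collect their SKU IDs.
    available_ids = {d["sku_id"] for d in inventory if d["available"]}
    # Step 3: select the SKU documents whose ID is in the collected set.
    return {d["id"] for d in skus if d["id"] in available_ids}


def main_query(skus, term):
    # Toy keyword match standing in for the real main query.
    return {d["id"] for d in skus if term in d["title"].split()}


def search(term):
    # Step 4: intersect the join result with the main query.
    return sorted(main_query(SKUS, term) & join_available(INVENTORY, SKUS))


print(search("dress"))
print(search("red"))
```

Note that `join_available` walks through every available inventory record no matter how few SKUs the main query matches.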

If this doesn't feel like a high-performance solution, your intuition is correct. Indeed, query time join doesn't perform all that well out of the box. It can only traverse a relationship in one direction, from child to parent, which can be very wasteful if the main query for the parent is highly selective. It also requires access to the child's foreign key field value, which can be relatively slow even with docValues. The search by primary key on the parent side is faster, but still requires an expensive seek on the terms enumerator.

Also, this solution still suffers from the need to commit to the Solr core and reopen searchers, losing accumulated caches in the process.

Cross-collection filter warm up

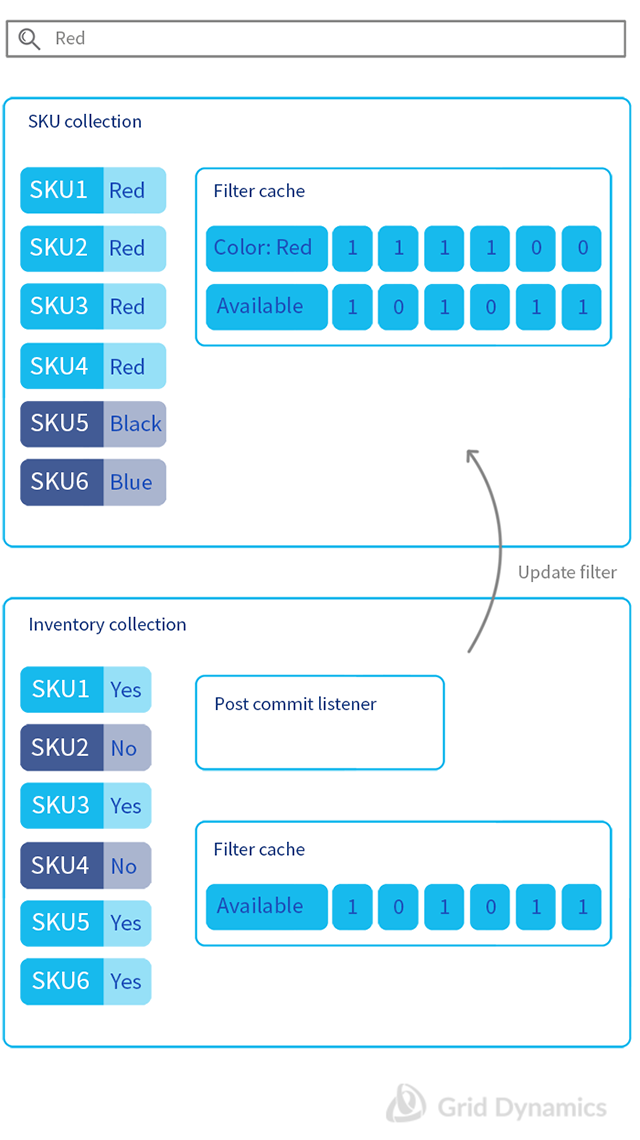

Query time join can be avoided with the following trick. Since Solr 4.0 we have been able to perform a query time join across separate collections. Assuming we have separate product and inventory collections, we can register a post-commit listener on the inventory collection which will access and patch an availability filter within the product core. This approach eliminates the performance hit of the query time join and retains caches in the product collection. Still, there is no such thing as a free lunch. This approach requires a custom Solr plugin and pretty involved code. Out of the box, Solr uses the filter cache to speed up cross-core join performance. With the plugin, this cache needs special treatment and must be regenerated asynchronously.
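The idea can be illustrated with a deliberately simplified Python model. None of the classes below correspond to real Solr APIs: `ProductCore`, `InventoryCollection`, and `make_filter_patcher` are hypothetical names, and a real plugin would patch a cached bitset inside the product core's searcher rather than a Python set.

```python
class ProductCore:
    """Toy product core holding a cached availability filter."""

    def __init__(self, sku_ids):
        self.doc_id = {sku: i for i, sku in enumerate(sku_ids)}
        self.availability_filter = set()  # internal doc IDs currently in stock

    def search(self, candidate_skus):
        # Apply the cached filter; no join is executed at query time.
        return [s for s in candidate_skus
                if self.doc_id[s] in self.availability_filter]


class InventoryCollection:
    """Toy inventory collection that notifies listeners after a commit."""

    def __init__(self):
        self.pending = {}
        self.listeners = []

    def update(self, sku, available):
        self.pending[sku] = available

    def commit(self):
        changes, self.pending = self.pending, {}
        for listener in self.listeners:
            listener(changes)


def make_filter_patcher(core):
    """Post-commit listener that patches the product core's cached filter."""
    def on_commit(changes):
        for sku, available in changes.items():
            doc = core.doc_id[sku]
            if available:
                core.availability_filter.add(doc)
            else:
                core.availability_filter.discard(doc)
    return on_commit


core = ProductCore(["s1", "s2", "s3"])
inventory = InventoryCollection()
inventory.listeners.append(make_filter_patcher(core))

inventory.update("s1", True)
inventory.update("s3", True)
before_commit = core.search(["s1", "s2", "s3"])
inventory.commit()
after_commit = core.search(["s1", "s2", "s3"])
print(before_commit, after_commit)
```

The product core itself is never committed here, which is why its other caches can survive inventory changes.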

In-place updates

It is well known that the secret sauce of search engine performance is the inverted index. However, for many tasks traditionally performed by search engines, such as faceting and sorting, an inverted index is not efficient. To facet or sort documents you need a mapping from the document ID to the value of the field. Historically, in Lucene, this mapping was delivered by FieldCache, which was created by "uninverting" the indexed field. Such an approach is not optimal because:

- It is often the case that we facet or sort by a particular field, but never filter by it. The FieldCache approach still requires us to keep an inverted index for the field

- FieldCache occupies substantial heap space, and it either has to be fully materialized in heap for all necessary fields or you can't use it

- FieldCache uninverting can cost a lot, especially in the case of frequent index updates. The cache entries are lost and lazily recreated after every index commit

To address these issues, Lucene developers introduced the concept of docValues, which by now has completely replaced FieldCache. In a few words, docValues is a column-oriented database embedded directly into a Lucene index. docValues allow flexible control over memory consumption and are optimized for random access to the field values.

But there is one feature which makes them stand out: docValues are updatable and, more importantly, can be updated without touching the rest of the document data. This unlocks true partial updates in the search engine and can support very high throughput of value updates (as in tens to hundreds of thousands per second). Replication of the updates across the cluster also comes out of the box and is pretty efficient, as docValues changes are stored separately from the rest of the segment data and thus do not trigger merge policies or require segments to be replicated.
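To show why a column-oriented layout makes such updates cheap, here is a toy Python model of a single numeric column. It is only a conceptual sketch: `NumericColumn` is a made-up class, and in Lucene itself the corresponding operation is exposed on the index writer (`IndexWriter.updateNumericDocValue`) rather than as direct array access.

```python
class NumericColumn:
    """Toy forward index: position = internal doc ID, value = field value."""

    def __init__(self, values):
        self.values = list(values)

    def update(self, doc_id, value):
        # Only this column changes; the rest of the document is untouched.
        self.values[doc_id] = value

    def filter(self, predicate):
        # Filtering is a straight scan over the column.
        return [doc for doc, v in enumerate(self.values) if predicate(v)]


# Hypothetical stock levels for four SKU documents.
stock = NumericColumn([5, 0, 12, 0])
in_stock_before = stock.filter(lambda v: v > 0)

stock.update(1, 3)   # SKU 1 is restocked
stock.update(2, 0)   # SKU 2 sells out
in_stock_after = stock.filter(lambda v: v > 0)

print(in_stock_before, in_stock_after)
```

No text analysis or document reconstruction is involved in `update`, which is the property that sets this approach apart from the partial document updates discussed earlier.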

This makes updatable docValues great candidates to model inventory availability. Unfortunately, updatable docValues are not yet exposed through Solr in the general case (track SOLR-5944). Still, Lucene support is there, so it is pretty easy to plug in your own update handler.

However, there is still a caveat: making docValues updates visible requires a commit and reopening of the segment readers, which wipes out valuable filter caches that may take many seconds or even minutes to regenerate. Fortunately, in the case of pure availability updates, no actual segment data is changed, so all existing filter caches are still valid, and a Solr CacheRegenerator can be used to carry cached filters over from previous searchers into the new ones.

Updatable docValues work well for inventory availability tracking for simple use cases when there is a predictable number of updatable fields to support, and it is acceptable to pay the price of a Solr/Lucene commit periodically, e.g. when updates can be micro-batched, say with a 1-5 minute period.

However, even updatable docValues are not a silver bullet. There are limitations and tradeoffs to be aware of.

- Filtering with docValues is not efficient for sparse fields, as it is implemented through a direct column scan. Typically, in e-commerce, SKU availability is dense enough that this efficiency loss is not a major problem. With dense fields, the performance of an inverted index scan is similar to the performance of a forward index scan anyway.

- docValues updates are not stacked, at least in current Lucene. This means that on every update a whole changed column is dumped in the index. This may become a problem for a large number of updatable columns or for very large indexes with frequent sparse updates.

- Currently, docValues support numerical and binary data. This is a bit wasteful in situations where we need to track inventory, which is often represented as boolean data. This is especially important when multiple flavors of availability have to be tracked per SKU, or in-store availability has to be tracked for omnichannel search use cases. It would be nice to have optimized boolean docValues or to be able to transparently pack a whole array of boolean values into a set of updatable numeric docValues.
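The packing idea from the last point can be sketched in a few lines of Python. The store names and the bit layout are assumptions made up for this example; a real implementation would also have to decide how to spread flags over several numeric fields once there are more stores than bits in one value.

```python
# Hypothetical availability flavors, each assigned one bit position.
STORES = ["online", "store_nyc", "store_sf", "store_chi"]
BIT = {name: i for i, name in enumerate(STORES)}


def pack(available_in):
    """Pack a list of store names into a single integer bit field."""
    value = 0
    for name in available_in:
        value |= 1 << BIT[name]
    return value


def is_available(packed, name):
    """Check one availability flag inside the packed value."""
    return ((packed >> BIT[name]) & 1) == 1


def set_available(packed, name, available):
    """Return a new packed value with one flag switched on or off."""
    mask = 1 << BIT[name]
    return (packed | mask) if available else (packed & ~mask)


packed = pack(["online", "store_sf"])
print(packed, is_available(packed, "store_sf"), is_available(packed, "store_nyc"))
```

With this layout, a single numeric value per SKU carries several boolean flags, and a change in one store only rewrites that one value.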

In-place near real-time updates

If true near real-time availability updates are needed, or the number of availability fields to track is very large, off-the-shelf features in Lucene and Solr won't be much help. Only custom solutions tailored to particular use cases can work.

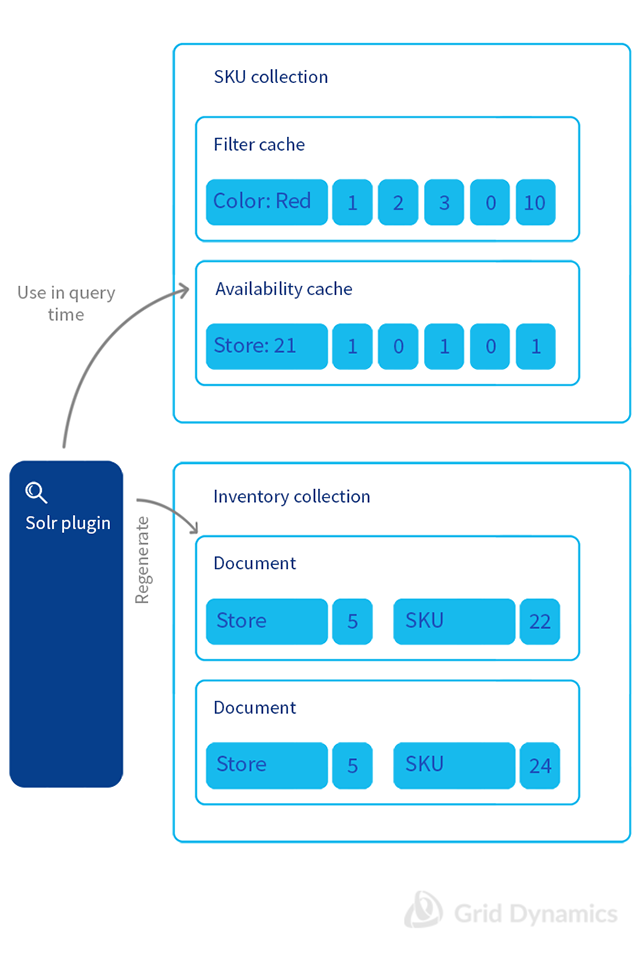

The main idea of such solutions is to embed a highly optimized in-memory store (NRT store) into each Solr instance. Lucene searches can be bridged to this store by a ValueSource interface providing FunctionValues.

When implementing this approach, we are almost completely on our own. We cannot rely on Solr/Lucene to keep our NRT data durable or to replicate it across the cluster. So, in general, the following concerns have to be addressed:

- Updates of the NRT store (obviously). Typically Kafka is used to deliver messages, which are applied through a custom request handler.

- Fast filtering. The NRT data structure should be optimized for fast filtering, as this is a primary use case for tracking availability. Thus, an efficient implementation of ValueSourceScorer is necessary. For this purpose, sometimes it may be necessary to create and maintain an NRT inverted index optimized for the specific use case.

- Durability of the NRT store. This can be achieved by asynchronous (write-behind) storing of NRT data changes or by periodic checkpointing.

- Cold start of the NRT store. This can be achieved by recovering the durable state of the NRT data, or by replaying NRT update logs from a particular checkpoint.

- Replication of NRT store changes across the cluster. Again, Kafka can be handy to deliver messages across the cluster.
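The concerns listed above can be sketched with a toy in-memory store in Python. Everything here is hypothetical: the class and method names are made up, a plain list stands in for the message log (a Kafka topic in a real system), and filtering is a dictionary lookup rather than a ValueSourceScorer.

```python
import json


class NrtAvailabilityStore:
    """Toy in-memory availability store with an update log."""

    def __init__(self):
        self.available = {}  # sku_id -> bool
        self.log = []        # updates applied so far, in order

    def apply(self, update):
        # An update is a dict such as {"sku": "s1", "available": True}.
        self.available[update["sku"]] = update["available"]
        self.log.append(update)

    def filter(self, sku_ids):
        # Fast filtering: keep only SKUs currently marked available.
        return [s for s in sku_ids if self.available.get(s, False)]

    def checkpoint(self):
        # Durability: serialize the state plus the log offset it reflects.
        return json.dumps({"offset": len(self.log), "state": self.available})

    @classmethod
    def recover(cls, snapshot, updates):
        # Cold start: load the checkpoint, then replay later updates.
        data = json.loads(snapshot)
        store = cls()
        store.available = dict(data["state"])
        store.log = list(updates[:data["offset"]])
        for update in updates[data["offset"]:]:
            store.apply(update)
        return store


# The shared list of updates plays the role of the replicated message log.
updates = [
    {"sku": "s1", "available": True},
    {"sku": "s2", "available": True},
    {"sku": "s1", "available": False},
]

primary = NrtAvailabilityStore()
primary.apply(updates[0])
primary.apply(updates[1])
snapshot = primary.checkpoint()
primary.apply(updates[2])

replica = NrtAvailabilityStore.recover(snapshot, updates)
print(replica.filter(["s1", "s2", "s3"]))
```

Because every node consumes the same ordered log, a recovered or newly added node ends up with the same view as the primary without involving the Solr commit cycle.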

With such a design, it is possible to build a search system which is completely decoupled from the normal Solr document update/commit cycle and can, indeed, support a near real-time view of inventory availability.

Conclusion

Up-to-date inventory availability is a must-have feature in a modern e-commerce search system. There is a whole spectrum of implementation approaches, varying in complexity and cost, which can be applied depending on the actual use case. In our practice, we have found that the updatable docValues approach is sufficient and works well in a majority of e-commerce cases. However, for some high-end implementations a more sophisticated custom solution will be necessary. The best thing to do is to experiment with your data and use cases to see what method of handling inventory availability is the best for you!

Ivan Mamontov, Eugene Steinberg